Supermicro 2029UZ-TN20R25M Performance

For this exercise, we are using our legacy Linux-Bench scripts, which provide the cross-platform “least common denominator” results we have been using for years, as well as several results from our updated Linux-Bench2 scripts. Starting with our 2nd Generation Intel Xeon Scalable benchmarks, we are adding a number of our workload testing features to the mix as the next evolution of our platform.

At this point, our benchmarking sessions take days to run and we are generating well over a thousand data points. We are also running workloads for software companies that want to see how their software works on the latest hardware. As a result, this is a small sample of the data we are collecting and can share publicly. Our position is always that we are happy to provide some free data but we also have services to let companies run their own workloads in our lab, such as with our DemoEval service. What we do provide is an extremely controlled environment where we know every step is exactly the same and each run is done in a real-world data center, not a test bench.

We are going to show off a few results, and highlight a number of interesting data points in this article.

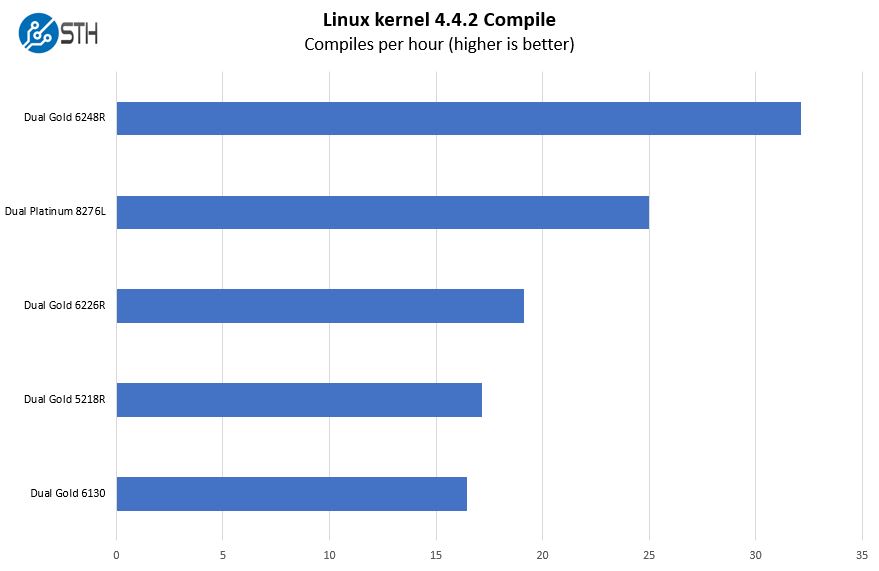

Python Linux 4.4.2 Kernel Compile Benchmark

This is one of the most requested benchmarks for STH over the past few years. The task is simple: we take the Linux 4.4.2 kernel from kernel.org and a standard configuration file, then build the standard auto-generated configuration utilizing every thread in the system. We are expressing results in terms of compiles per hour to make the results easier to read:

With the recent Big 2nd Gen Intel Xeon Scalable Refresh, we now have a series of new SKUs that offer a lot more performance per dollar than previous generations. Here we wanted to highlight a theme that we will see throughout this section: SKU levels often leapfrog the previous levels, with the Gold 5200R SKUs being faster than many previous-gen Gold 6200 SKUs and the Gold 6200R SKUs often out-performing Platinum SKUs. When you configure a server such as the Supermicro 2029UZ-TN20R25M, you will likely want the “R” refresh SKUs unless you need an “L” SKU for higher-capacity memory or Intel Optane DCPMM support.

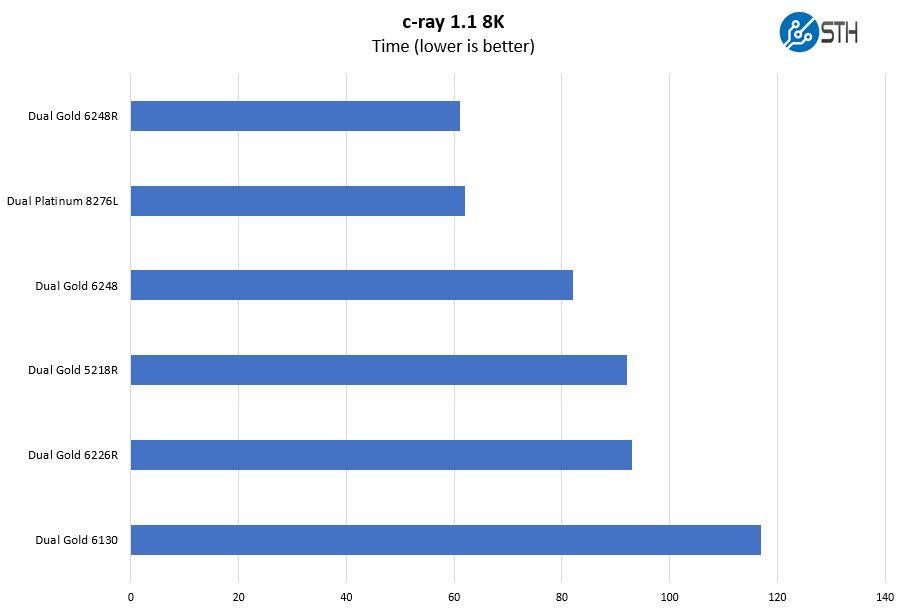
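The compiles-per-hour figure used for the kernel compile benchmark is a simple conversion from wall-clock build time. As a minimal sketch, assuming each run is timed in seconds, the conversion could look like the following. The function name and the example timings are hypothetical and are not measured results from this review.

```python
def compiles_per_hour(compile_seconds: float) -> float:
    """Convert one kernel compile's wall-clock time (seconds) to compiles per hour."""
    if compile_seconds <= 0:
        raise ValueError("compile time must be positive")
    return 3600.0 / compile_seconds


# Hypothetical timings for illustration only, not results from this review.
example_runs = {"system_a": 90.0, "system_b": 60.0}

for name, seconds in example_runs.items():
    print(f"{name}: {compiles_per_hour(seconds):.1f} compiles/hour")
```

Expressing the result as a rate means that higher is better, which matches how the other throughput-style charts in this section read.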

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular to show differences in processors under multi-threaded workloads. We are going to use our 8K results which work well at this end of the performance spectrum.

One may notice that we have slightly fewer SKUs in our charts in this review. That is an impact of both having the Xeon Scalable refresh happen alongside this review as well as some of the shelter-in-place impacts. Still, we wanted to give some sense of a performance range using different generations from the first generation Xeon Scalable to the newest second-gen refresh.

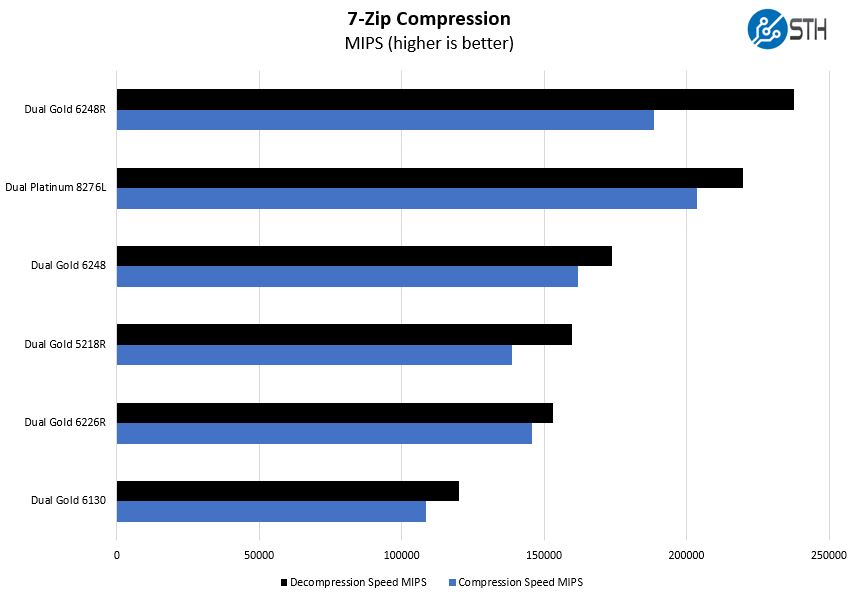

7-zip Compression Performance

7-zip is a widely used compression/decompression program that works cross-platform. We started using the program during our early days with Windows testing. It is now part of Linux-Bench.

Here we can see the nice range that we get in the Supermicro 2029UZ-TN20R25M in terms of CPU options. Compression is important in storage solutions, and here one can increase the power budget to something like the dual Xeon Gold 6248R and get a lot of additional performance with the system.

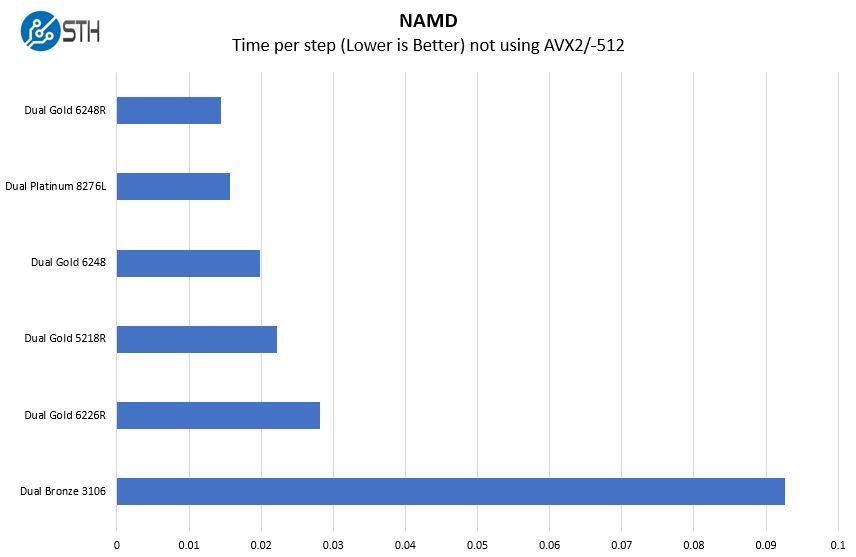

NAMD Performance

NAMD is a molecular modeling benchmark developed by the Theoretical and Computational Biophysics Group in the Beckman Institute for Advanced Science and Technology at the University of Illinois at Urbana-Champaign. More information on the benchmark can be found here. With GROMACS, we have been working hard to support AVX-512 as well as AVX2 on the AMD Zen architecture. Here are the comparison results for the legacy data set:

We had a pair of Intel Xeon Bronze 3106 chips in the lab. If you think that you simply need PCIe and memory connectivity, and do not plan to use the SYS-2029UZ-TN20R25M CPUs to a great extent, something like these or the updated Bronze 3206R parts are an option. We think that these Bronze and even the Silver-level SKUs are not the right balance point for this system, especially given what we are seeing from the Gold 5218R and Gold 5220R. In the context of a configured system with 20x NVMe SSDs, moving from Bronze or Silver up to Gold is likely worth the relatively small percentage premium at the system level to get significantly more performance. Still, there are options in the stack one can use.

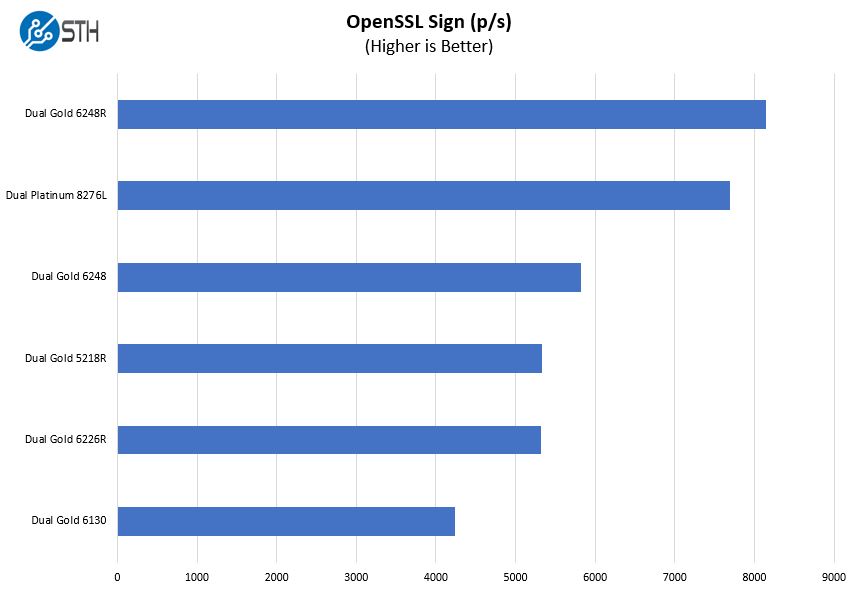

OpenSSL Performance

OpenSSL is widely used to secure communications between servers, which makes it an important component in many server stacks. We first look at our sign tests:

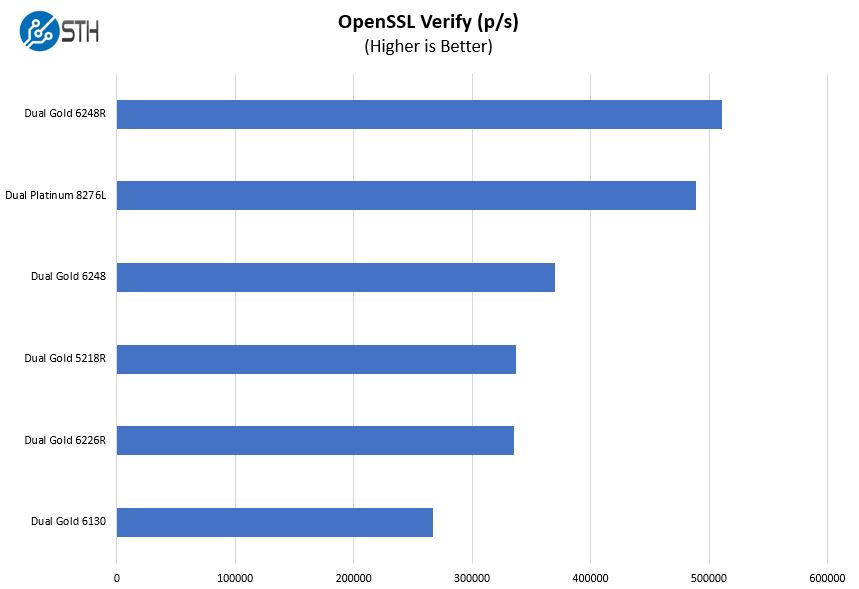

Here are the verify results:

OpenSSL is a foundational web technology. Here, the new chips perform extremely well but we wanted to point out, again, that Intel does not necessarily correlate performance to model number increments. For example, the Intel Xeon Gold 5218R has 20 lower frequency cores while the Xeon Gold 6226R has 16 higher frequency cores. Here, they perform relatively close.

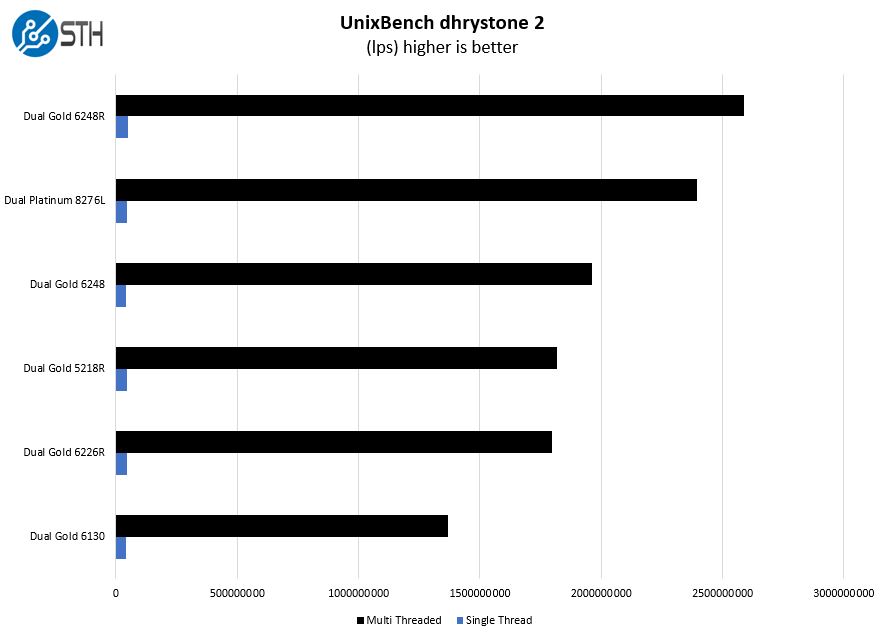

UnixBench Dhrystone 2 and Whetstone Benchmarks

Some of the longest-running tests at STH are the venerable UnixBench 5.1.3 Dhrystone 2 and Whetstone results. They are certainly aging; however, we constantly get requests for them, and many angry notes when we leave them out. UnixBench is widely used, so we are including it in this data set. Here are the Dhrystone 2 results:

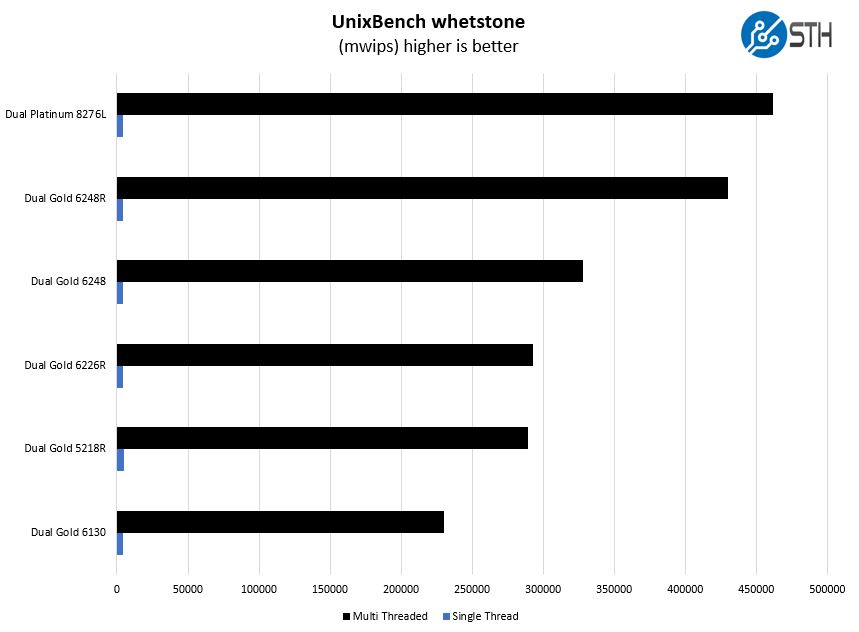

Here are the Whetstone results:

We wanted to point out here the massive delta between the dual Xeon Gold 6248 and Gold 6248R results. Although this was billed as a “refresh,” realistically it is effectively a new mainstream Xeon lineup for 2020.

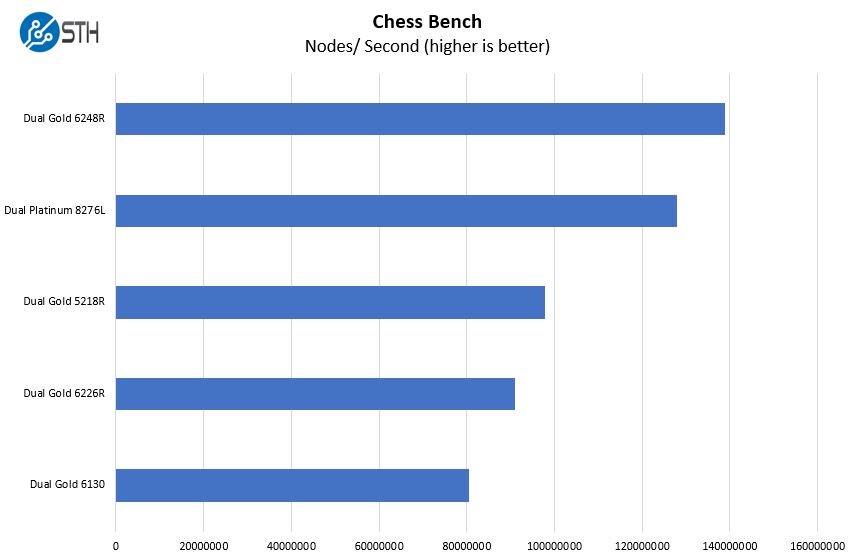

Chess Benchmarking

Chess is an interesting use case since it has almost unlimited complexity. Over the years, we have received a number of requests to bring back chess benchmarking. We have been profiling systems and are ready to start sharing results:

On the chess benchmarking side, we see something very similar to what we have seen in other tests.

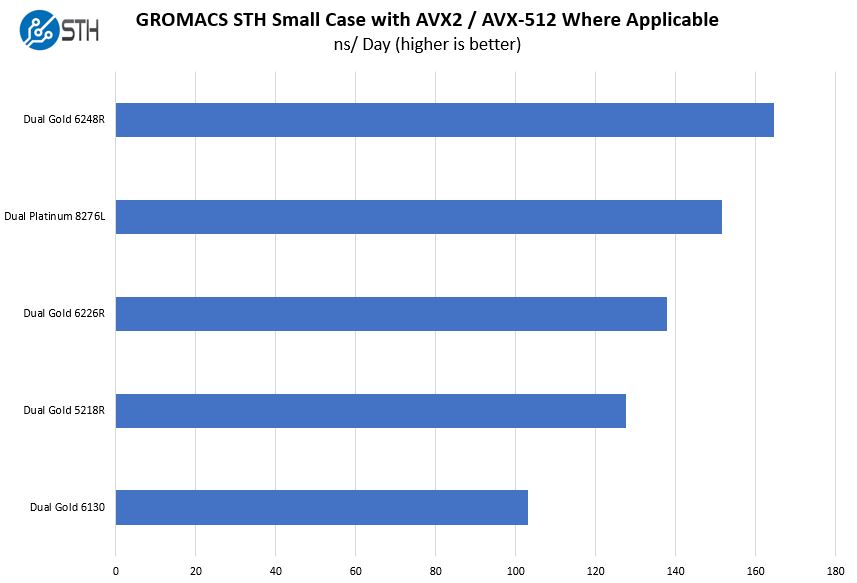

GROMACS STH Small AVX2/ AVX-512 Enabled

We have a small GROMACS molecule simulation we previewed in the first AMD EPYC 7601 Linux benchmarks piece. We are not publishing results of the newer revision here since they are not compatible with our previous results.

Here we wanted to point out something that is important in the context of the Supermicro 2029UZ-TN20R25M, and that is power. When Intel did the 2020 Xeon Scalable refresh, it often increased TDP headroom. That allows 24-core Xeon Gold 6248R CPUs to handily out-perform 28-core CPUs like the Xeon Platinum 8276L on tests like this. Not all servers are designed to handle 205W TDP CPUs. The Supermicro 2029UZ-TN20R25M is designed to handle that load without requiring the upgraded fans and power supplies that some of its competitors, such as the Dell EMC PowerEdge line, often need.

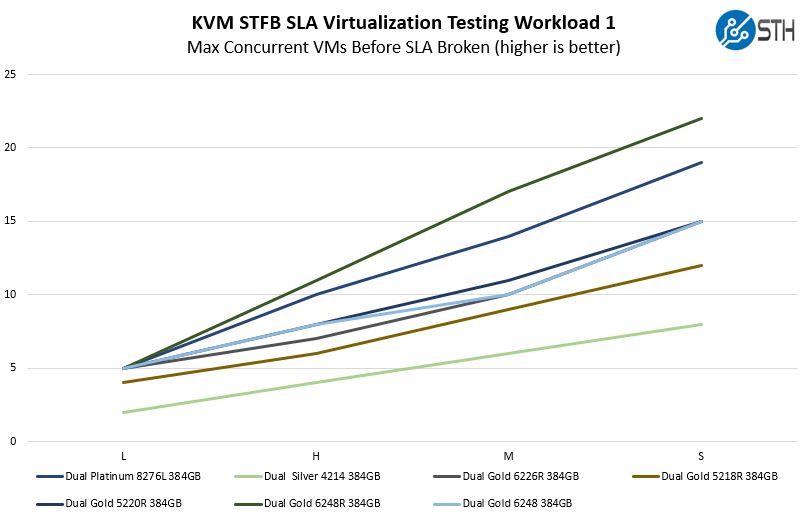

STH STFB KVM Virtualization Testing

One of the other workloads we wanted to share is from one of our DemoEval customers. We have permission to publish the results, but the application itself being tested is closed source. This is a KVM virtualization-based workload where our client is testing how many VMs it can have online at a given time while completing work under the target SLA. Each VM is a self-contained worker.

We managed to get the dual Intel Xeon Silver 4214 along with the new Xeon Gold 5220R in for a quick run of this workload to give some extra data here. We are seeing around a 3x VM density scaling factor out of a single machine by spending a few thousand more on CPUs. In the context of the system, especially if looking at this as an HCI building block, upgrading CPUs makes a lot of sense.

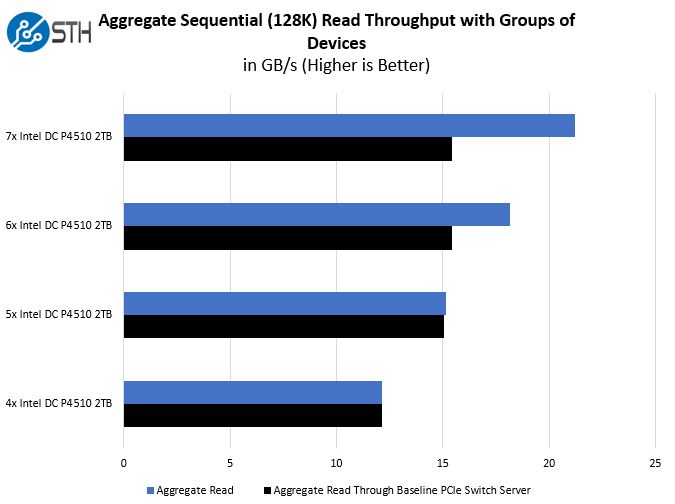

Supermicro 2029UZ-TN20R25M Storage Performance

The benefit of directly attached SSDs is extremely easy to show versus PCIe switched architectures. Since a PCIe switch connected to an Intel Xeon Scalable CPU in this generation can only use a PCIe Gen3 x16 link to the CPU, we can see the difference in performance as we go past five drives and add the sixth drive to the system. Here we compare the Supermicro system to a PCIe switched Xeon Scalable server we have in the lab.

As you can see, once we add the sixth drive, the directly attached system starts to show a performance benefit. By drive six, the PCIe switch uplink bandwidth is fully saturated, so the switched server simply cannot keep scaling. This is a very simple example of why this architecture is important. There is, of course, a lot more involved in delivering high-performance storage beyond simple PCIe bandwidth, but this clearly shows how the SYS-2029UZ-TN20R25M design addresses this bottleneck.
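As a back-of-the-envelope check on the x16 uplink limit described above, the theoretical PCIe Gen3 bandwidth can be worked out from the 8 GT/s per-lane rate and 128b/130b encoding. The sketch below is only an approximation: it ignores protocol overhead, and the 3.0 GB/s per-drive throughput is a hypothetical value chosen for illustration, not a measurement of the drives used in this review.

```python
import math

GEN3_GT_PER_LANE = 8.0       # PCIe Gen3 raw signaling rate, GT/s per lane
GEN3_ENCODING = 128 / 130    # 128b/130b line encoding efficiency


def gen3_bandwidth_gb_per_s(lanes: int) -> float:
    """Theoretical one-direction PCIe Gen3 bandwidth in GB/s, before protocol overhead."""
    return lanes * GEN3_GT_PER_LANE * GEN3_ENCODING / 8.0


def drives_to_saturate(uplink_lanes: int, per_drive_gb_per_s: float) -> int:
    """Smallest drive count whose combined throughput meets or exceeds the uplink."""
    return math.ceil(gen3_bandwidth_gb_per_s(uplink_lanes) / per_drive_gb_per_s)


def switched_throughput(drives: int, per_drive_gb_per_s: float, uplink_lanes: int = 16) -> float:
    """Aggregate throughput behind a switch, capped by the uplink to the CPU."""
    return min(drives * per_drive_gb_per_s, gen3_bandwidth_gb_per_s(uplink_lanes))


PER_DRIVE = 3.0  # hypothetical sustained GB/s per NVMe drive (assumption)

print(f"x16 uplink ceiling: {gen3_bandwidth_gb_per_s(16):.2f} GB/s")
print(f"drives needed to saturate: {drives_to_saturate(16, PER_DRIVE)}")
for n in range(4, 8):
    direct = n * PER_DRIVE  # direct attach: each drive has its own lanes to a CPU
    print(f"{n} drives: direct {direct:.2f} GB/s, switched {switched_throughput(n, PER_DRIVE):.2f} GB/s")
```

Under these assumptions the switched configuration stops scaling at the sixth drive, while the directly attached configuration keeps adding bandwidth with each drive. Real results also depend on the drives, CPU topology, and software stack.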

Next, we are going to cover network performance and power consumption before getting to the STH Server Spider and our final words.

It seems like an awful lot of storage bandwidth to the processor with very little bandwidth out to the network. Maybe database where the compute is local to the storage, but I don’t see the balance if serving the storage out.

It is a shame that Supermicro doesn’t offer that chassis/backplane in a single AMD EPYC CPU config. That combination of mixed 20 NVMe + 4 SATA/SAS drives would be ideal while costing a whole lot less with a single EPYC CPU, and it would leave plenty of available PCIe lanes to support 100+Gbps network adapter(s), all without breaking a sweat.

Suggestion for the workload graph please: Dots and Dashes.

It’s tough to see what colour belongs with which processor graph line.

Thanks.

So just to confirm, 20 x PCIe drives were connected to one of the few servers that can directly support this config. Then you installed 20 NVMe drives and didn’t show the bandwidth or IOPS capabilities?

I apologize for dragging in Linus Sebastian of Linus Tech Tips fame because he is a bit of a buffoon, but he might have hit a genuine issue here:

https://www.youtube.com/watch?v=xWjOh0Ph8uM

Essentially, when all 24 NVME drives are under heavy load there seem to be IO problems cropping up. Apparently, even 24-core Epyc Rome chips cannot cope and he claims the problem is widespread – Google search results shown as proof.

I would like to hear from the more knowledgeable people that frequent STH. Any comments?

Good article, Patrick. However, with regards to the IPMI password, couldn’t it potentially be possible to change to the old ADMIN/ADMIN un/pw using ipmitool from the OS running on the server?

Philip – what about a bar chart?

Henry Hill – 100% true. With the whole lockdown, we had to use the Intel DC P3320 SSDs. Look for reviews on retail versions like we have. We have some of the only P3320’s in existence. They would not go overly well showing off the capabilities of this machine. Instead, we focused on a simpler to illustrate use case, crossing the PCIe Gen3 x16 backhaul limit.

Nickolay – check out the AMD/ Microsoft/ Quanta/ Broadcom/ Kioxia PCIe Gen4 SSD discussion. They were getting over 25GB/s using just 4 drives per Rome system. At these speeds, software and architecture becomes very important.

Oliver – there are new password requirements as well. More on this over the weekend.

Patrick,

A late catch-up, can you post a link to that AMD/… / Kioxia PCIe Gen4 SSD discussion, tks

Hi BinkyTo https://www.servethehome.com/kioxia-cd6-and-cm6-pcie-gen4-ssds-hit-6-7gbps-read-speeds-each/

What about storage class memory?

hi,

may i ask how it is possible to create a RAID5/ array with ESXi or Windows on bare metal?