The year is 2024, and for some unknown reason, a surprising number of folks still commit the cardinal sin of rack power sizing. That, of course, refers to the (relatively) ancient practice of using power supply wattage ratings to size rack power requirements in colocation. While this may seem crazy to most of our STH readers, it is still a common methodology for many. Today, we want to end the practice by explaining why it is wrong.

Stopping The Cardinal Sin of Rack Power Sizing

Most customers purchasing colocation these days, especially in smaller (<100kW) environments, pay for power based on the maximum amount delivered to a rack. For example, the 208V 30A rack was a mainstay for many years before the latest generation of much higher-power servers entered the market. A colocation provider may offer something like 3kW on this circuit for lower-power racks, or may simply sell it at maximum capacity: 208V x 30A = 6240W, and after a 20% safety factor that leaves 6240W x 0.8 = 4992W, or roughly 5kW.
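To make the circuit math explicit, here is a minimal Python sketch. The helper name `usable_circuit_watts` is purely illustrative, and the 20% safety factor is the one used above; your colocation provider may apply a different derating.

```python
def usable_circuit_watts(volts: float, amps: float, safety_factor: float = 0.20) -> float:
    """Return the sellable capacity of a circuit after derating it by a safety factor."""
    nameplate = volts * amps                  # raw circuit capacity in watts
    return nameplate * (1.0 - safety_factor)  # keep 20% headroom by default


if __name__ == "__main__":
    raw = 208 * 30
    usable = usable_circuit_watts(208, 30)
    print(f"208V x 30A = {raw}W raw, {usable:.0f}W usable")
```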

Modern server power supplies usually run efficiently when loaded anywhere between 20% and 100%, with efficiency often peaking around 50% load for 80 PLUS certifications. Just because modern server power supplies can operate efficiently at close to 100% load does not mean they will ever be loaded that heavily. Server vendors over-provision power supplies to handle power spikes.

Take the recent Supermicro Xeon 6 server used in our Intel Xeon 6 6700E Sierra Forest Shatters Xeon Expectations piece. We are using Supermicro servers as examples because this piece came about as we were discussing this trend. The system is offered with power supply options ranging from 2x 1.2kW to 2x 2.6kW. Our test configuration did not top 750W, even when running stress tests at 100% on all 288 cores in the Intel Xeon 6766E configuration.

The net impact in that example would be provisioning an extra 450W (1200W - 750W) for a server using 1.2kW PSUs. That works out to about 60% more power provisioned than is needed (450W / 750W).
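Here is the same arithmetic as a small Python sketch, plus how many of these servers would fit on the roughly 5kW (4992W) 208V 30A circuit from earlier under each sizing method. The helper `overprovision` is a hypothetical name, and 750W is treated as the server's maximum since our stress tests did not exceed it.

```python
def overprovision(psu_watts: float, measured_max_watts: float) -> tuple[float, float]:
    """Return (extra watts provisioned, extra as a fraction of actual need)."""
    extra = psu_watts - measured_max_watts
    return extra, extra / measured_max_watts


CIRCUIT_BUDGET_W = 4992  # 208V 30A circuit after a 20% safety factor

extra, ratio = overprovision(1200, 750)
print(f"extra: {extra:.0f}W ({ratio:.0%} over need)")

# Whole servers that fit on one circuit under each sizing method
print("servers by PSU rating:", CIRCUIT_BUDGET_W // 1200)
print("servers by measured max:", CIRCUIT_BUDGET_W // 750)
```

Sizing by the PSU rating fits four servers on the circuit, while sizing by measured maximum fits six.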

Of course, with smaller power supplies, the gap may seem less noticeable on a per-server basis, but across a rack of servers it still adds up to a lot of overprovisioned capacity.

One very problematic practice that we have heard many stories about is folks not just using the rating of a single power supply, but adding up all of the power supplies in a server. If a server has two 860W power supplies but is configured to draw only 400W at maximum, that can mean more than 75% of the power budget being paid for goes unused (400W out of a 1720W budget is only about 23%).
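A short Python sketch shows how quickly the "add up every PSU" method inflates the budget. The function name `budget_utilization` is hypothetical; the 2x 860W PSUs and 400W maximum draw are the example figures from the text.

```python
def budget_utilization(psu_ratings_w: list[float], max_draw_w: float) -> float:
    """Fraction of a PSU-summed power budget that the server can actually use."""
    budget = sum(psu_ratings_w)  # the flawed method: add up every PSU
    return max_draw_w / budget


used = budget_utilization([860, 860], 400)
print(f"used: {used:.1%}, unused: {1 - used:.1%}")  # used: 23.3%, unused: 76.7%
```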

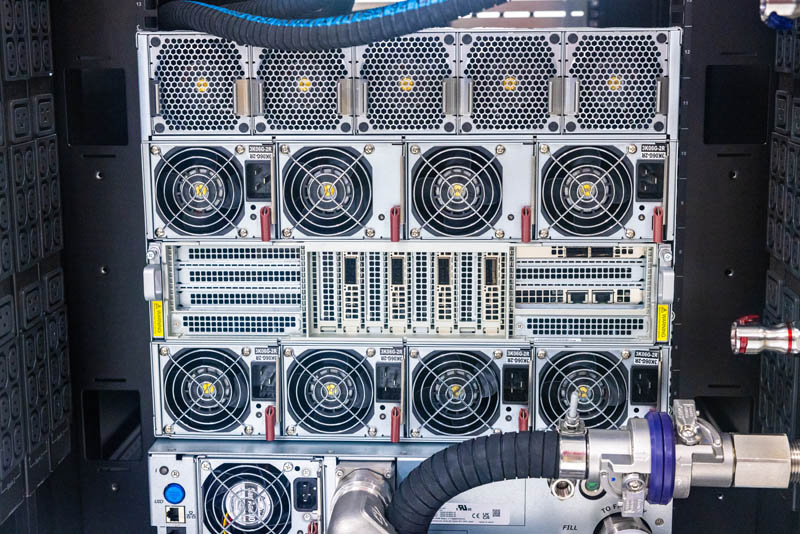

That can be even more acute in larger AI servers. Take this liquid-cooled Supermicro SYS-821GE-TNHR 8x NVIDIA H100 AI server. With eight 3kW PSUs, the server will use a maximum of around 8kW but has 24kW of PSU capacity for redundant 12kW paths.

Using the incorrect sizing figures can lead to between 4kW (sizing to one 12kW path) and 16kW (adding up all 24kW of PSUs) being paid for and never used.
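The 4kW to 16kW range falls straight out of the figures above. This Python sketch assumes the roughly 8kW maximum draw from the text and an even split of the eight 3kW PSUs across two redundant paths; the helper `unused_kw` is a hypothetical name.

```python
PSU_COUNT = 8
PSU_WATTS = 3000
MAX_DRAW_W = 8000  # approximate maximum draw of the 8x H100 server


def unused_kw(provisioned_w: float, max_draw_w: float) -> float:
    """Capacity paid for but never drawn, in kW."""
    return (provisioned_w - max_draw_w) / 1000


all_psus_w = PSU_COUNT * PSU_WATTS  # 24kW: summing every PSU
one_path_w = all_psus_w / 2         # 12kW: one of two redundant paths

for label, provisioned in [("one path", one_path_w), ("all PSUs", all_psus_w)]:
    print(f"{label}: {provisioned / 1000:.0f}kW provisioned, "
          f"{unused_kw(provisioned, MAX_DRAW_W):.0f}kW unused")
```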

Sometimes, having extra power infrastructure can be helpful, especially when refresh cycles come into play. At the same time, if you are paying for the maximum amount of power in a rack or across several racks, then massive overprovisioning is very costly.

Final Words

Hearing about people paying for colocation racks by adding up power supply capacity seemed silly when we first ran into it in 2016. Since then, we have heard hundreds of stories of companies doing this. AI servers are changing the game in power, both in their sheer capacity and in the way they often ramp between different power states as a group. Most traditional servers run well below their 100% load figures in daily operation and are unlikely to all hit 100% at the same time, except in applications like HPC clusters. Provisioning colocation power budgets to cover all installed equipment at 100% load is safe, but it still leaves a lot of room for optimization since many clusters run at 50% load or less.
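The difference between "every server at 100%" and what a rack actually draws can be illustrated with a small Python sketch. The per-server power traces below are entirely hypothetical, and the function names are illustrative. Staggered peaks produce a rack-level peak well below the sum of per-server peaks, while synchronized, HPC-style ramps hit the full sum. Sampled traces only cover the intervals you measured, so the sum of per-server maximums remains the safe figure.

```python
def sum_of_peaks(traces: list[list[float]]) -> float:
    """Budget if every server is assumed to hit its own peak at the same time."""
    return sum(max(t) for t in traces)


def coincident_peak(traces: list[list[float]]) -> float:
    """Highest total draw observed across all servers in the same interval."""
    return max(sum(samples) for samples in zip(*traces))


# Hypothetical per-server power samples in watts, one value per interval.
staggered = [
    [700, 350, 300, 320],  # server A peaks in interval 0
    [300, 720, 340, 310],  # server B peaks in interval 1
    [320, 330, 690, 300],  # server C peaks in interval 2
]
# HPC-style: all servers ramp together in interval 1
synchronized = [
    [300, 700, 320, 310],
    [310, 720, 330, 300],
    [320, 690, 300, 310],
]

for name, traces in [("staggered", staggered), ("synchronized", synchronized)]:
    print(f"{name}: sum of peaks {sum_of_peaks(traces)}W, "
          f"coincident peak {coincident_peak(traces)}W")
```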

Even with lower-power circuits in single racks, overpaying for power can needlessly cost thousands of dollars per year. Sizing by maximum load power takes only a few more minutes than adding up power supply ratings, and it can yield huge savings on provisioned power. It can also help data centers better manage their power demands as more high-power servers are introduced in the future.
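To put a rough dollar figure on overprovisioning, here is a Python sketch. It assumes colocation power is billed per kW per month, and the $150/kW/month rate is a hypothetical placeholder rather than a quoted price; substitute your provider's actual rate. The 0.45kW and 4kW figures are the single-server and AI server examples from above.

```python
def annual_overpay(overprovisioned_kw: float, price_per_kw_month: float) -> float:
    """Yearly cost of paying for power capacity that is never drawn."""
    return overprovisioned_kw * price_per_kw_month * 12


HYPOTHETICAL_PRICE = 150.0  # $/kW/month, illustrative only

for label, kw in [("one 1.2kW-PSU server", 0.45), ("8x H100 server, one path", 4.0)]:
    print(f"{label}: ${annual_overpay(kw, HYPOTHETICAL_PRICE):,.0f} per year")
```

Because the cost scales linearly with both the overprovisioned kW and the rate, even a handful of servers sized by PSU rating can reach the thousands of dollars per year mentioned above.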

It is time to spread the word and stop the cardinal sin of colocation rack power sizing.

Of course, we talked about this and much more in our recent data center power video that you can find here:

HPE, and I think Dell as well, have websites where you can calculate the actual power usage for your specific configuration.

I’d assume that a lot of colo setups either have somewhat limited sensitivity or just don’t want the customer dissatisfaction involved in deliberately tripping a metaphorical breaker because someone momentarily drew more power than budgeted, so you can probably get away with it a lot of the time. But are there any servers whose power management options include an “absolutely do not draw more than X watts, throttle whatever it takes” setting, to make running your config close to the wire safer?

Obviously, if you are consistently throttling expensive silicon to save on (relatively) cheap colo power, you are doing it wrong. But it’s not uncommon for there to be a worst-case configuration that exceeds the power draw of an expected workload (like the FurMark case that the GPU companies kept whining about being a ‘power virus’ rather than an actual benchmark back in the day), and if you are trying to cut the margins nice and tight, it would be reassuring to be able to instruct systems to simply refuse such cases.

On the other hand, that seems like it could turn into a real rabbit hole if you’ve got a fairly heterogeneous collection of gear, with systems needing to be aware of other systems’ power draws in order to determine what is safe at any given moment. (It’s certainly much less exciting than it used to be, both as hardware and in terms of power density, but something like an HDD shelf deciding to do a full spin-up is still a nontrivial swing in power usage, and one that no single server’s BMC is necessarily going to be aware of.)

Except that in many industries, spikes are significantly correlated horizontally (across multiple instances) and, to a lesser extent, vertically (across multiple tiers, often buffered by caches).

Consider how financial market activity tends to spike across a large segment at once. Or an event may drive people from one region, stressing that region’s allocated rack, and so on. One does not simply advise sizing down.

We just went through the fun time of finding a colo as we vacate our office space with a server room, and it is a pain going through and figuring out how much power you may need. Plus, we found out there are 208V-to-120V isolation transformers we need to buy for ancient equipment.