Solidigm D7-P5810 800GB Basic Performance

For this section, we run the Solidigm drive through a number of workloads to see how it performs. We also provide screenshots of common desktop tools so you can quickly compare the results to other drives you may have.

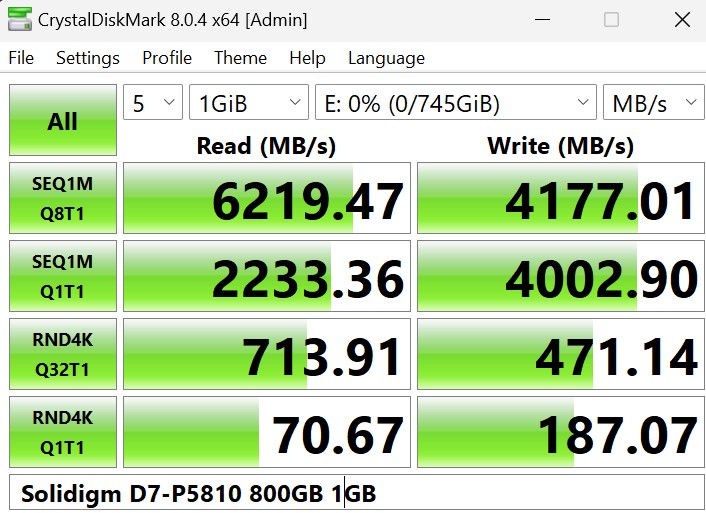

CrystalDiskMark 8.0.4 x64

CrystalDiskMark is used as a basic starting point for benchmarks as it is something commonly run by end-users as a sanity check. Here is the smaller 1GB test size:

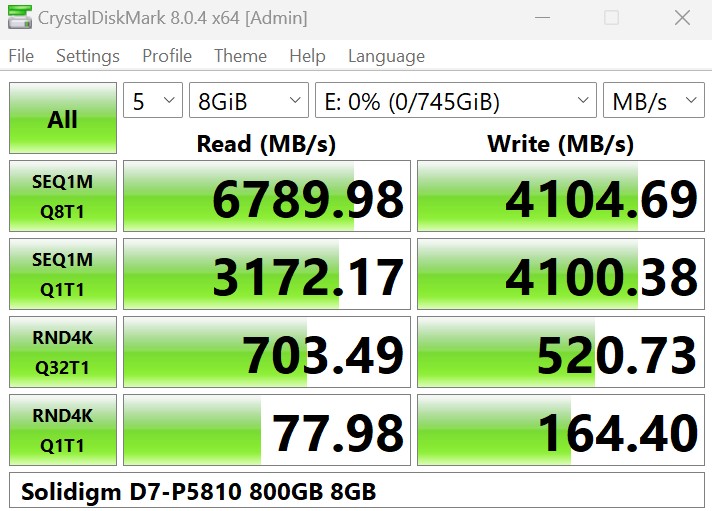

Here is the larger 8GB test size:

This was a bit slower than the DapuStor Xlenstor2 800GB that we reviewed, but also generally better than a lot of other drives out there.

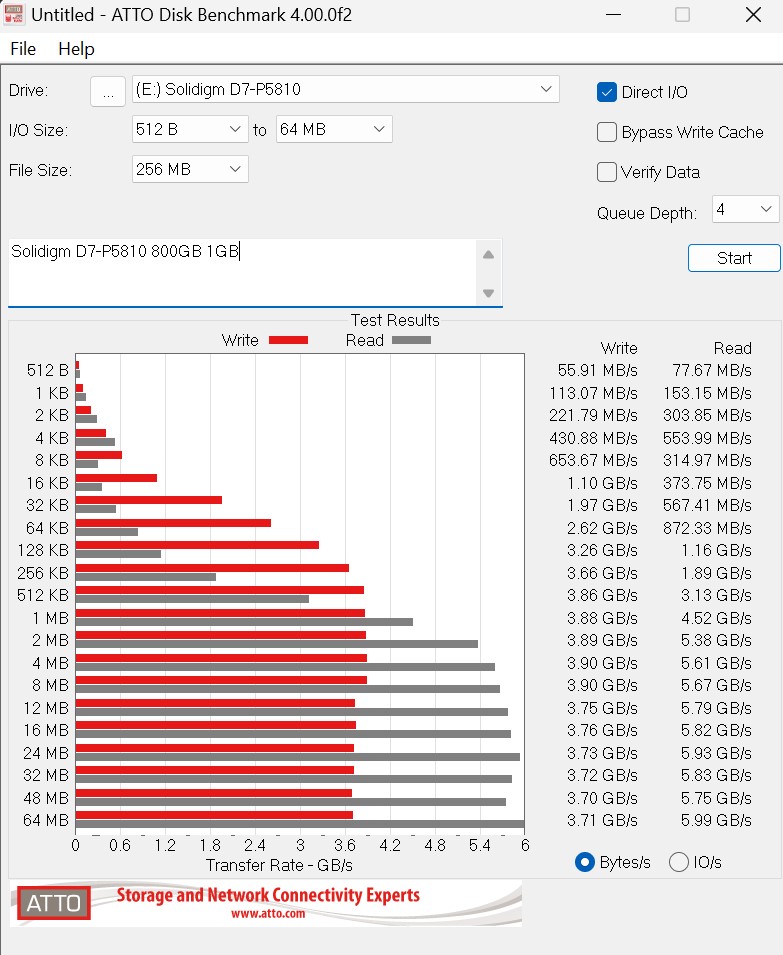

ATTO Disk Benchmark

The ATTO Disk Benchmark has been a staple of drive sequential performance testing for years. ATTO was tested at both 256MB and 8GB file sizes.

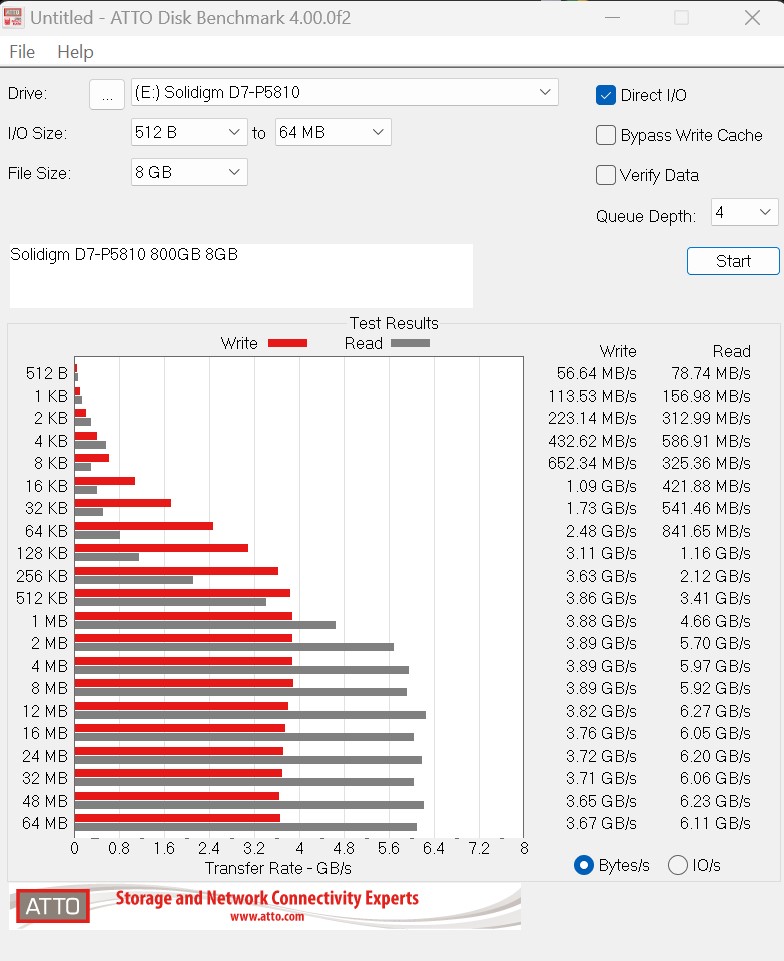

Here is the 8GB result:

Perhaps the most consistent thing we see is that sequential read performance outpaces sequential write performance.

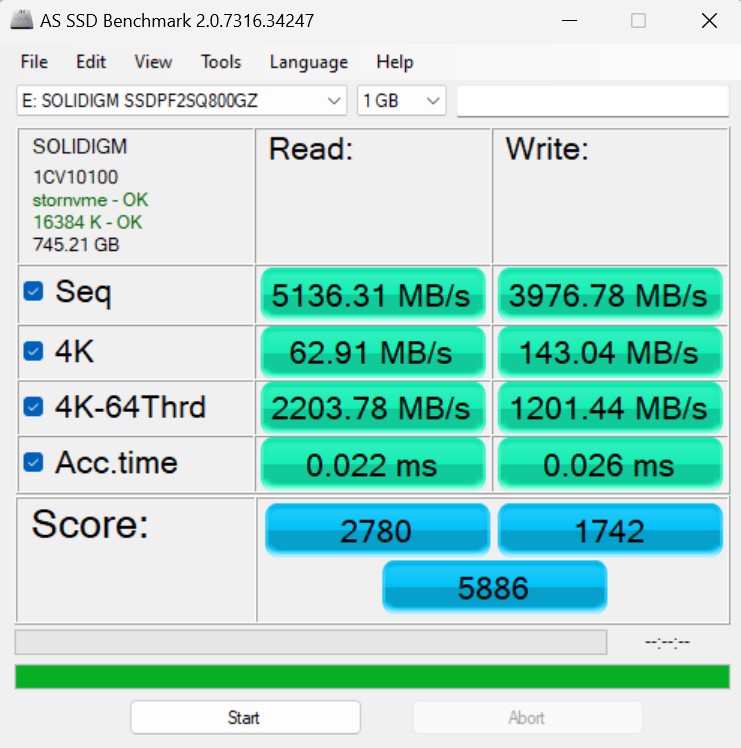

AS SSD Benchmark

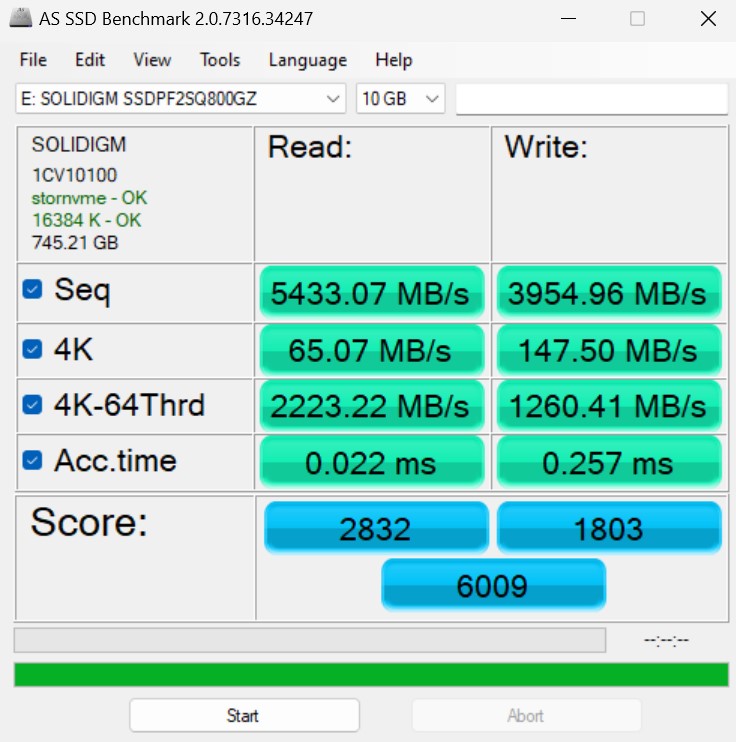

AS SSD Benchmark is another common benchmark for testing SSDs. We run all three tests for our series. Like the other utilities, it was run with both the default 1GB and a larger 10GB test set.

Here is the 10GB test size:

Here, we see decent 4K numbers and results broadly similar to what we saw with the other utilities.

Next, let us get into some of our Linux-based benchmarking.

Intel SSDs were my go-to during the long 6-Gbit SATA era (while supporting 5K Linux servers).

Dumb question:

Would the QLC 60TB running in SLC mode (15TB) perform as well as this? Or would the excess capacity slow it down?

You mentioning it and the SLC mode stuff made me realize we have the capability for 15TB SLC SSDs and nobody is really putting anything into that.

Give us back optane!

Finally, SLC.

This is actually not true SLC; it has QLC dies run in pure pseudo-SLC mode.

Even more hilariously, the QLC drives are 5LC running in QLC

Optane is too good of a tech to allow it to die. Fixes 100% of the problems with NAND.

SLC gets you part of the way there, but with the same limitations as all NAND.

I just finished our new SAN – all 2S SPR, 2TB, 2x Bluefield 3 (400GbE), 2x ConnectX7 (400GbE) – 24 x NVMe in a 2U Supermicro chassis.

Tier 0 is 4 servers and 280TB of Optane storage (4x70TB) ZFS with single parity.

Tier 1 is 12 servers and 4PB of Intel Enterprise NAND and dual parity ZFS.

Tier 2 is 45 drive top loaders (3) from Supermicro with 14TB Exos dual 12Gb/s SAS. These are limited to ConnectX6 and 2x100GbE / 200GbE.

Tier 3 is soon to be decommissioned Supermicro 90 drive top loaders.

I picked up the Optane and NAND drives for less than half the cost after the Solidigm-branded drives started appearing. Still have over 2PB of NAND and 300TB of Optane in reserve.

Intel NAND was always superior to other NAND and Optane has no peer.