Today we are going to take a look at a 2.5″ SSD from Solidigm. The Solidigm D7-P5520 is a PCIe Gen4 NVMe SSD, but it is also one of the more confusing units we have seen. If you look at the label on the cover image, it says “Intel,” but Solidigm now sells the drive. Solidigm is the successor to Intel’s SSD business, which Intel sold to SK Hynix. As one of the first drives from the transition period, this one is still branded “Intel” even though it is sold by Solidigm. With that out of the way, let us get to the review.

Solidigm D7-P5520 7.68TB Overview

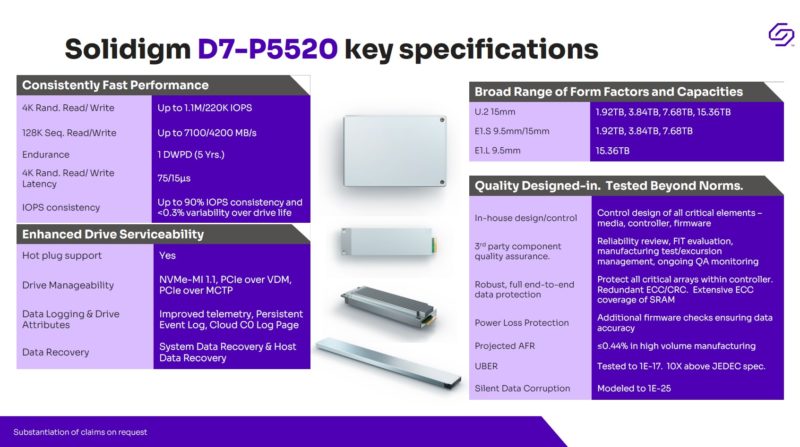

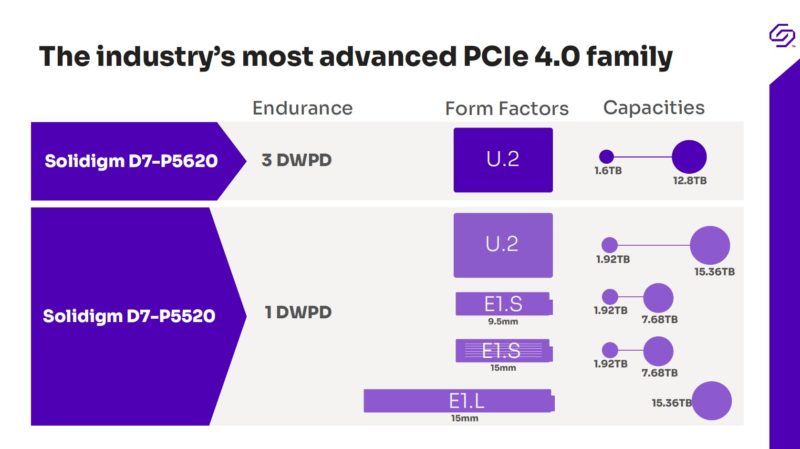

We wanted to start this review with a recap of the specs. While we are looking at the 7.68TB 2.5″ U.2 15mm drive, that is just one capacity point and form factor.

Solidigm also offers E1.S and E1.L versions of the drive.

The Solidigm “D7” may make one think immediately of the high-end 7-series drives like the venerable S3700 and P3700 from Intel. The D7-P5520 is the 1 DWPD mixed-use level of drives. There is a D7-P5620 that is the higher endurance 3 DWPD offering.

As drive capacities have grown, appetites for 7+ DWPD drives have shrunk. 1 DWPD on a 7.68TB drive works out to 7.68TB of rated writes per day, which is more than the 7TB per day of a 7 DWPD 1TB SSD, and one gets the benefit of more capacity. Still, in terms of capacity and endurance, these are primary storage replacement drives rather than dedicated log devices.

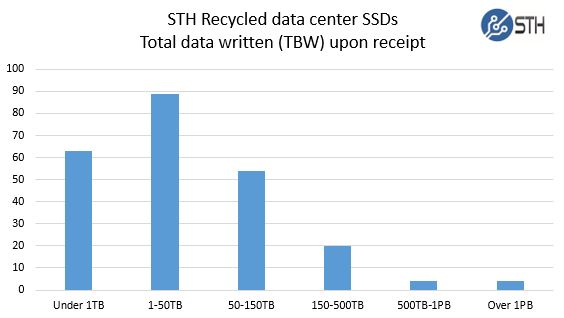

We know that folks are very sensitive to endurance ratings. Years ago, when we did our “Used enterprise SSDs: Dissecting our production SSD population” piece, we found that even smaller-capacity SSDs were being written at rates well below their rated endurance. As an excerpt from that piece:

These were much smaller SSDs, but we are now talking SSDs with over 10PB of write endurance even with 1 DWPD so it has become a smaller issue these days.
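As a rough sketch of the endurance arithmetic above, the following Python snippet multiplies capacity by DWPD to get rated writes per day, then extends that over a rating period. The five-year period is an assumption for illustration, not a figure taken from this review, and the function names are our own.

```python
def daily_writes_tb(capacity_tb: float, dwpd: float) -> float:
    """Rated writes per day in TB: capacity multiplied by drive writes per day."""
    return capacity_tb * dwpd


def lifetime_writes_pb(capacity_tb: float, dwpd: float, years: float = 5.0) -> float:
    """Rated lifetime writes in PB over an ASSUMED rating period (default 5 years)."""
    return daily_writes_tb(capacity_tb, dwpd) * 365 * years / 1000


if __name__ == "__main__":
    big_low_dwpd = daily_writes_tb(7.68, 1)    # 7.68TB drive at 1 DWPD
    small_high_dwpd = daily_writes_tb(1.0, 7)  # 1TB drive at 7 DWPD
    print(f"7.68TB @ 1 DWPD: {big_low_dwpd:.2f} TB/day")
    print(f"1TB @ 7 DWPD:    {small_high_dwpd:.2f} TB/day")
    print(f"7.68TB @ 1 DWPD over 5 years: {lifetime_writes_pb(7.68, 1):.1f} PB")
```

Under that five-year assumption, the 7.68TB drive at 1 DWPD lands at roughly 14PB of rated writes, which is consistent with the "over 10PB" figure mentioned above.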

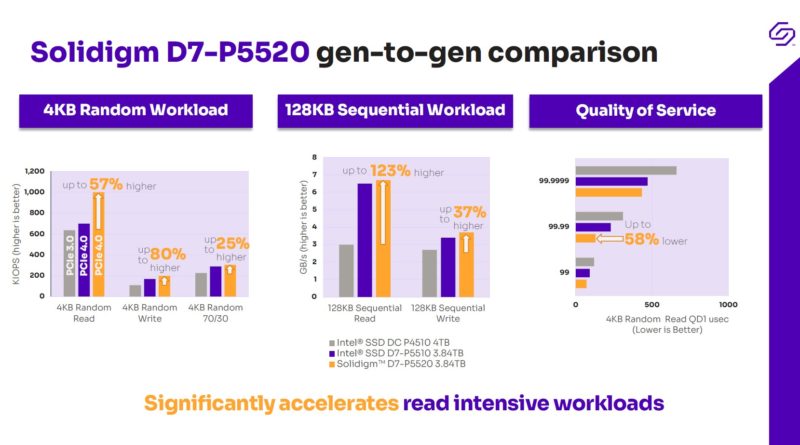

The previous generation was the Intel D7-P5510 and then the P4510 before that. We will have performance numbers in a bit, but moving from PCIe Gen3 to Gen4 helps a lot in some of the bigger claims, like the sequential read performance. Still, Solidigm is focusing more on the random and mixed workloads instead of all-out sequential performance, and that makes sense.
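To put the Gen3 versus Gen4 point in numbers, here is a minimal sketch of the theoretical link bandwidth calculation. It uses the standard per-lane transfer rates (8 GT/s for Gen3, 16 GT/s for Gen4) and 128b/130b encoding. It ignores packet and protocol overhead, so real drives will land below these ceilings. The function and constant names are our own.

```python
# Per-lane raw transfer rate in GT/s for each PCIe generation.
GT_PER_S = {3: 8.0, 4: 16.0}

# Gen3 and Gen4 both use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction link bandwidth in GB/s, before protocol overhead."""
    return GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes / 8  # 8 bits per byte


if __name__ == "__main__":
    for gen, lanes in [(3, 4), (4, 4), (3, 8)]:
        print(f"PCIe Gen{gen} x{lanes}: {link_gb_per_s(gen, lanes):.2f} GB/s")
```

A Gen3 x4 link tops out a little under 4GB/s, while Gen4 x4 doubles that ceiling to a little under 8GB/s, the same as a Gen3 x8 link with half the lanes.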

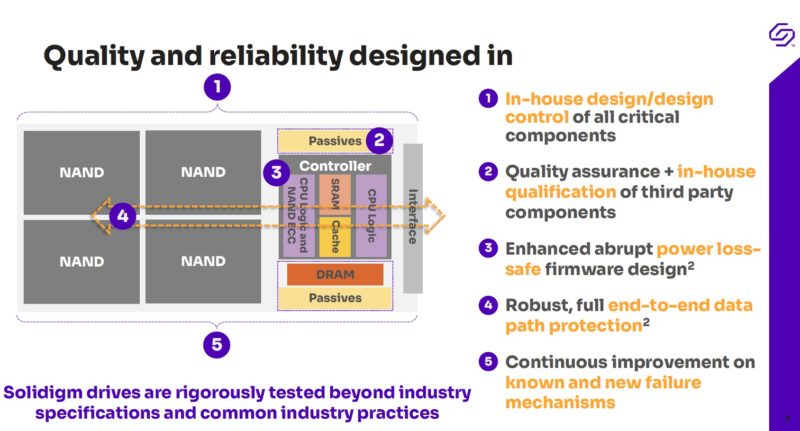

Solidigm also inherited Intel’s quality and reliability features. For many years Intel was the leader in the reliability space because of this methodology.

Let us now transition to performance.

Solidigm D7-P5520 7.68TB Performance

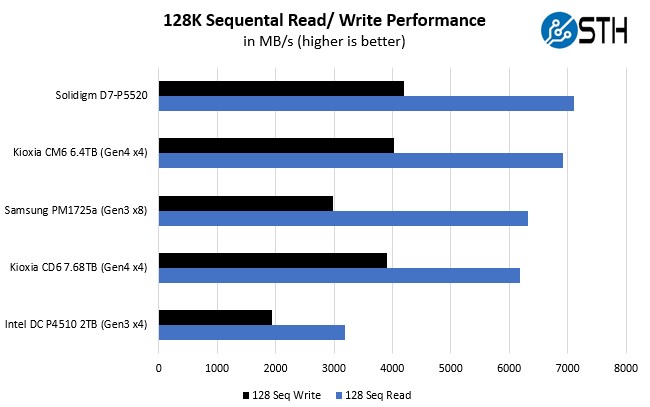

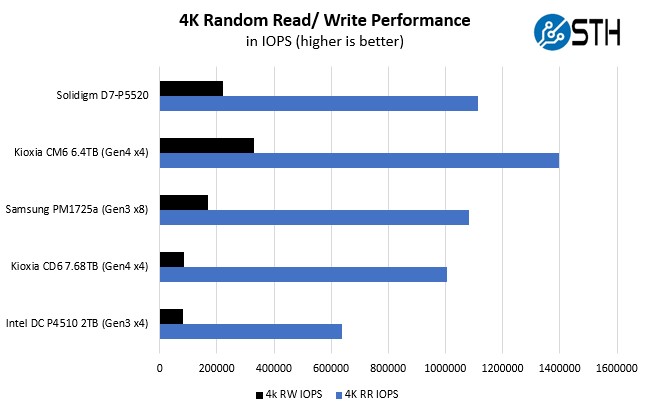

Our first test was to see sequential transfer rates and 4K random IOPS performance for the Solidigm D7-P5520 7.68TB SSD. Please excuse the smaller than normal comparison set. In the next section you will see why we have a reduced set. The main reason is that we swapped to a multi-architectural test lab. We actually tested these SSDs with Intel Ice Lake, three AMD generations, Ampere Altra and Altra Max, IBM Power9, and even Huawei HiSilicon Kunpeng 920. We just got the Kunpeng 920 as this review was about to go live and so we delayed the review to basically get it on every PCIe Gen4 platform we could.

Overall, the sequential and 4K random read/write numbers were about what we would expect from a Solidigm drive and close to the rated speeds.

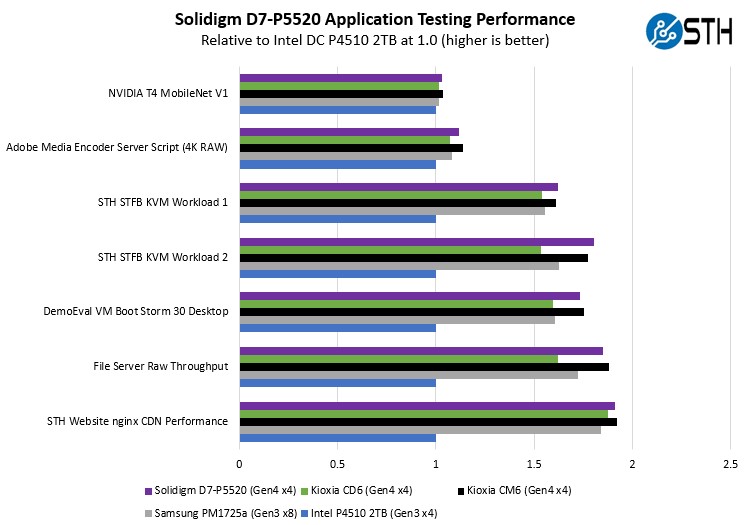

For our application testing, we are still using AMD EPYC. We have all of these tests working on x86, but we do not have all of them working on Arm and POWER9 yet. Still, the Solidigm drive performed very well, even getting top honors in one test.

As you can see, there is a lot of variability here in terms of how much impact the Solidigm D7-P5520 and PCIe Gen4 have. Let us go through and discuss the performance drivers.

On the NVIDIA T4 MobileNet V1 script, we see some performance impact, but very little. The key here is that we are mostly limited by the performance of the NVIDIA T4, and storage is not the bottleneck. We can see a performance benefit from the newer drives, but it is not huge. Perhaps the more impactful change here is that the move from Gen3 x8 to Gen4 x4 frees up more PCIe connectivity for additional NVIDIA T4s in a system, thus having a greater impact on total performance. This is a strange way to discuss system performance for storage, but it is very relevant in the AI space.

Likewise, our Adobe Media Encoder script times the copy to the drive, then the transcoding of the video file, followed by the transfer off of the drive. Here, we have a bigger impact because we have some larger sequential reads/writes involved, but the primary performance driver is still the encoding speed. The key takeaway from these tests is that if you are compute limited, but still need to go to storage for some parts of a workflow, there is an appreciable impact, but not as big of an impact as getting more compute. Here, the Solidigm performed about where we would expect given the sequential read/write numbers we saw.

On the KVM virtualization testing, we see heavier reliance upon storage. KVM virtualization Workload 1 is more CPU limited than Workload 2 or the VM Boot Storm workload, so we see strong performance, albeit not as much of a gain as in the other two. These are KVM virtualization-based workloads where our client is testing how many VMs it can have online at a given time while completing work under the target SLA. Each VM is a self-contained worker. We know, based on our performance profiling, that Workload 2, due to the databases being used, actually scales better with fast storage and Optane PMem. At the same time, if the dataset is larger, PMem does not have the capacity to scale. This profiling is also why we use Workload 1 in our CPU reviews. Something that Solidigm does well is low queue depth performance, which tends to translate to better performance here even when the absolute numbers are not class-leading.

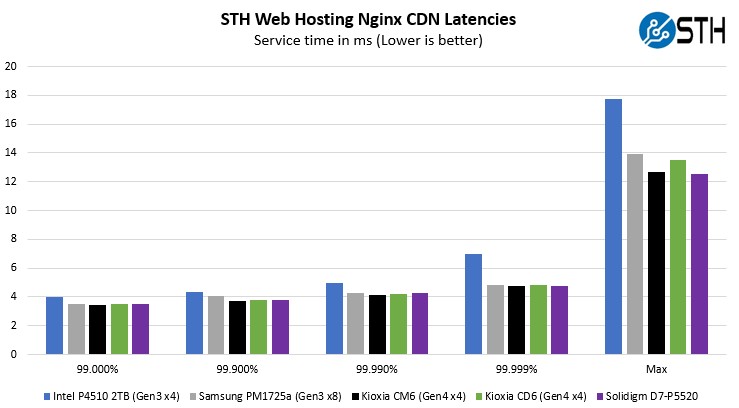

Moving to the file server and nginx CDN, we see much better QoS from the newer PCIe Gen4 drives versus the PCIe Gen3 x4 drives. Perhaps this makes sense if we think of an SSD on PCIe Gen4 as having a lower-latency link as well. On the nginx CDN test, we are using an old snapshot and access patterns from the STH website, with caching disabled, to show what the performance looks like in that case. Here is a quick look at the distribution:

Of course, application testing is not raw storage testing. If you are trying to create a bespoke array to compete with Dell EMC, then you likely care solely about raw performance. If you instead are buying servers with these SSDs, then storage is usually just one component of overall performance. That is why a basket of applications is more interesting: it shows more of an end result. This is similar to how we show acceleration on CPUs, where the accelerator may be 3-10x faster, but it is only accelerating 10% of an application. We just want something different to show our readers, because if we only print fio charts, people may get the impression that 2x the sequential reads of PCIe Gen4 over Gen3 means 2x the application performance. That is not realistic except for pure local array performance (even networking with RDMA adds overhead).
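The accelerator comparison above is essentially Amdahl's law. As a minimal sketch, the snippet below computes the overall speedup when only a fraction of the runtime gets faster. The 10% fraction and the 3x and 10x factors come from the text. Treating storage as 10% of runtime with a 2x faster drive is a purely hypothetical illustration, not a measured result.

```python
def overall_speedup(fraction: float, factor: float) -> float:
    """Amdahl's law: speedup of the whole job when `fraction` of its
    runtime is accelerated by `factor` and the rest is unchanged."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    if factor <= 0:
        raise ValueError("factor must be positive")
    return 1.0 / ((1.0 - fraction) + fraction / factor)


if __name__ == "__main__":
    # Accelerator is 3x or 10x faster but only covers 10% of the application.
    print(f"10% at 3x:  {overall_speedup(0.10, 3):.3f}x overall")
    print(f"10% at 10x: {overall_speedup(0.10, 10):.3f}x overall")
    # HYPOTHETICAL: storage is 10% of runtime and the drive is 2x faster.
    print(f"10% at 2x:  {overall_speedup(0.10, 2):.3f}x overall")
```

Even a 10x accelerator on 10% of the work yields under a 1.1x overall gain, which is why doubling sequential throughput rarely doubles application performance.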

Now, for the big project: we tested these drives using every PCIe Gen4 architecture we could find, and not just x86, nor even just servers that are available in the US.

That’s so cool even Power and Kunpeng!

I was this many days old when I saw a SSD review with three different ARM, AMD, and Intel CPUs and an IBM one. I’d say that’s the biggest finding of this review. It’s probably the first time I’ve ever seen a PCIe or NVMe device tested on that many types of CPUs and their PCIe roots.

Well now, that’s a different way to look at SSDs. It’s relevant tho because everything isn’t just Intel now. Gotta love STH for doin’ this.

The POWER9’s difficulties at PCIe Gen 4 speeds likely originate from it being the first PCIe Gen 4 capable host to hit the server market. Most vendors didn’t start validating Gen 4 speeds until Rome arrived years later.