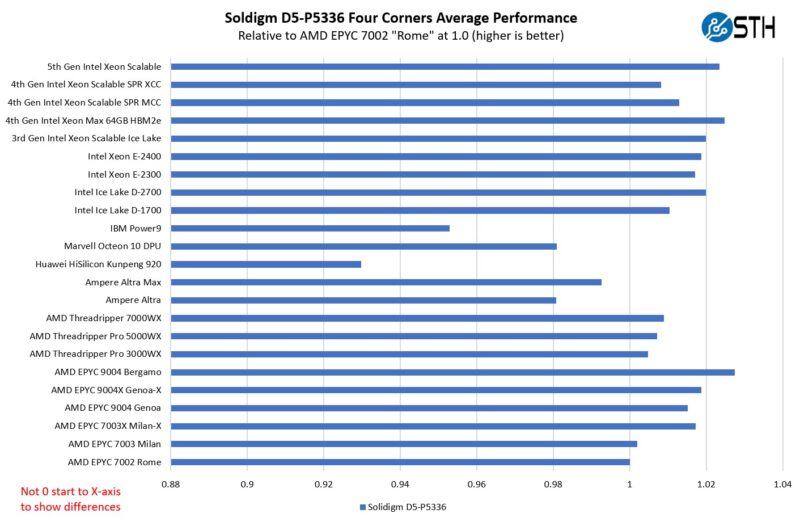

Performance by CPU Architecture

If you saw our recent More Cores More Better AMD Arm and Intel Server CPUs in 2022-2023 piece, or pieces like our Supermicro ARS-210ME-FNR Ampere Altra Max Arm Server Review and our Huawei HiSilicon Kunpeng 920 Arm Server piece, you may have seen that we have been expanding our testbeds to include more architectures. This is in addition to the Ampere Altra 80 core CPUs that are from the family used by Oracle Cloud, Microsoft Azure, and Google Cloud. We also managed to test these on the newest generation AMD EPYC Bergamo and Genoa-X SKUs.

Overall, the same patterns we have seen with other drives hold on this one. Performance is very good save for some PCIe Gen4 Arm systems we have tested (and Power9). We used to have a “zoomed” chart, but putting the Gen5 drive on a Gen5 platform versus a Gen4 platform distorted that chart too much.

This chart saw the addition of the Intel Xeon E-2400 series and the 5th Gen Intel Xeon Scalable parts. It also saw the addition of the Threadripper 7000 series. We did not manage to get the AMD EPYC 8004 Siena platforms into this one, just due to when we were testing it and how fast the Siena platforms were coming into and going out of the lab.

Testing a Theory on Reliability at the Edge

We often talk about SSD reliability versus hard drives, but it is really difficult to test with a small number of drives, and fast enough to do a review like this one. This is a really interesting SSD when one thinks of reliability. Often we hear from vendors that SSDs are something like 10x less likely to fail. Some vendors have told us there is a much larger gap. A 61.44TB NVMe SSD offers something different. Instead of an SSD versus an HDD at a given capacity point, it really takes multiple HDDs to reach the capacity of a single SSD. Each drive has additional connectors, cables, backplanes, and so forth that can be sources of failure, so that matters. Also, just having a greater quantity of higher failure rate devices means a higher chance of failure.
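To put the "more devices, more chances to fail" point in numbers, here is a minimal sketch. The annualized failure rates are hypothetical placeholders picked only to mirror the roughly 10x figure vendors quote, not measured data, and the model assumes drives fail independently. The eight-drive count matches the HDD set we describe later in this piece.

```python
def p_any_failure(n_drives: int, afr: float) -> float:
    """Probability that at least one of n independent drives fails in a year."""
    return 1.0 - (1.0 - afr) ** n_drives


# Hypothetical annualized failure rates (assumptions, not vendor data).
HDD_AFR = 0.015
SSD_AFR = HDD_AFR / 10  # mirrors the "something like 10x" vendor claim

hdd_set = p_any_failure(8, HDD_AFR)     # eight 10TB HDDs
single_ssd = p_any_failure(1, SSD_AFR)  # one 61.44TB SSD

print(f"8 HDDs: {hdd_set:.2%} chance of at least one failure per year")
print(f"1 SSD:  {single_ssd:.2%} chance of failure per year")
print(f"Ratio:  {hdd_set / single_ssd:.0f}x")
```

Under these assumptions, the gap in "something fails this year" odds is much wider than the per-drive 10x, simply because there are eight devices instead of one. The sketch ignores the extra cables, connectors, and backplanes, which would only widen it.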

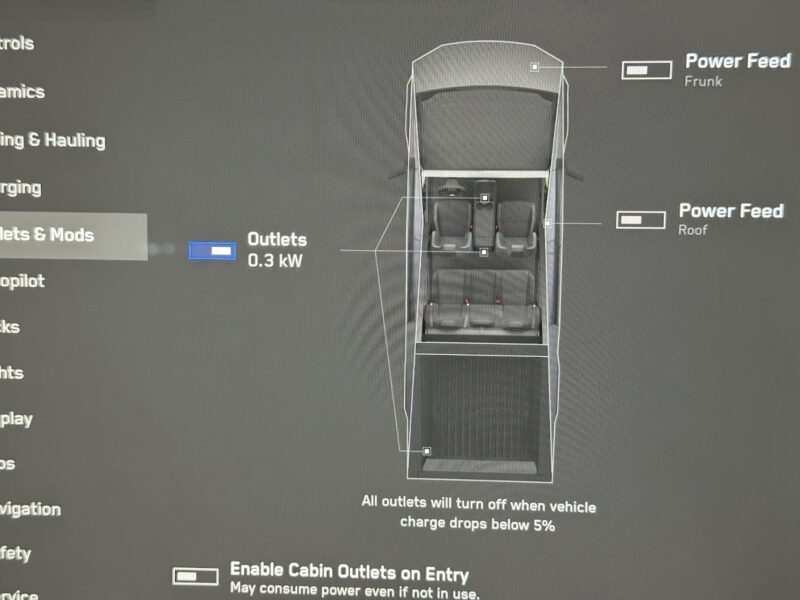

Just to see if we could make it happen, we took some very low-hour 10TB HDDs (~200 hours on each in the set) that we had in the lab and put them in a QNAP enclosure. We then took the 61.44TB drive plus two boot drives and put them into a Supermicro Snow Ridge edge platform, stuck the whole setup into a Pelican Hardigg case, and strapped it in the bed of the Cybertruck (QNAP removed here for photos). The Cybertruck has outlets that can stay powered on while driving, so we thought it might be interesting to write video capture data to both sets of storage and see if we would get a failure.

It is perfectly fair to say that 8x 10TB drives in RAID-Z2 offer redundancy that the single SSD does not. We also could improve reliability by using larger 20TB drives and having half as many. The other side is that for the lab we tend to use larger drives.
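A rough sketch of that trade-off is below. It assumes the simple rule that RAID-Z2 gives up two drives' worth of space to parity, uses a hypothetical 1.5% annualized failure rate, and treats drives as independent. It also counts any three failures within a year as pool loss, which overstates the risk because a failed drive would normally be replaced and resilvered long before that.

```python
from math import comb


def raidz2_usable_tb(n_drives: int, drive_tb: float) -> float:
    """Approximate RAID-Z2 usable capacity: two drives' worth goes to parity."""
    return (n_drives - 2) * drive_tb


def p_at_least_k_failures(n_drives: int, afr: float, k: int) -> float:
    """Binomial probability that k or more of n independent drives fail in a year."""
    return sum(
        comb(n_drives, i) * afr**i * (1.0 - afr) ** (n_drives - i)
        for i in range(k, n_drives + 1)
    )


HDD_AFR = 0.015  # hypothetical annualized failure rate, not measured data

usable = raidz2_usable_tb(8, 10.0)
p_service_event = p_at_least_k_failures(8, HDD_AFR, 1)  # someone swaps a drive
p_pool_loss = p_at_least_k_failures(8, HDD_AFR, 3)      # RAID-Z2 survives two

print(f"Usable capacity: {usable:.0f}TB vs. 61.44TB for the single SSD")
print(f"At least one drive failure: {p_service_event:.2%}")
print(f"Three or more failures:     {p_pool_loss:.4%}")
```

The takeaway from a model like this is that the array is far less likely to lose data than to need a drive swap, while the single SSD has no such distinction: a failure is a data-loss event unless redundancy lives elsewhere.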

Still, driving around with the setup, we saw the first 10TB drive fail on the way up to Sedona, AZ in well under 500 miles of travel. Of course, there are engineering firms that specialize in mobile computing, and we were trying to test if we would see a failure. We managed to see a result.

When we talk about the reliability of these drives, perhaps the more interesting angle is not just the data center, but also mobile uses. A Cybertruck with air suspension and 35″ tires is far from a harsh environment. On the other hand, hard drives weigh ~1.5lbs each and have moving parts, so the idea that a high-capacity SSD might be useful in more than just the data center might give a subset of our readers reason to pause. It is really interesting to think about what one can do when an SSD can replace an array of hard drives.

Final Words

Overall, a giant drive is awesome. 61.44TB is enormous and it can replace hard drives. The 30TB class NVMe SSDs are bigger than hard drives, but when you look at 30.72TB versus 24TB the difference is not huge. At 61.44TB, the difference grows. It grows even more when one factors in the extra provisions we make for hard drive reliability.

Many will focus on QLC and stop there. For some applications, that is the right call. High-endurance and high-speed SSDs certainly have a place. At the same time, a huge percentage of total stored data resides on hard drives as video and image files that are written sequentially, and lower cost per TB NVMe SSDs can now start to house that data cost effectively. That is what makes the D5-P5336 really different.

“Still, driving around with the setup we saw the first 10TB drive on the way up to Sedona, AZ in well under 500 miles of travel.”

You saw the first 10TB drive on the way? I would have thought you would see it when you installed it in the qnap before you left. Perhaps you mean you “…saw the first 10TB drive **fail** on the way…”

How can you review something across three pages without even mentioning even a ballpark figure for the price!?

Because it’s nearly $8000 and the terms of the review agreement probably stipulate they’re not allowed to share that.

I picked up one of these in December 2023 for $3700. Now that the cost of NAND has spiked they seem to be around $6400.

Ah, these are actually running PLC in pQLC mode.

Yikes.

In addition to price information for the SSD discussed here, I’d like to see some discussion of prices for the ASRock Rack 2U1G-B650 review last week. Why does a website devoted to server hardware have a dozen reviews of near identical 2.5 Gbit switches with prices and buy now links but no price-performance information for any of the server hardware?

Any reason for Solidigm not moving to U.3? I suppose this SSD is still U.2, which means it’s not supported by hardware RAID.

Whereas U.3 is supported by many new trimode hardware raid cards

These drives are meant for use cases where data availability/redundancy are handled somewhere else (CDNs, object storage, etc).

@Olimjon

U.2 is supported by hardware RAID. It’s still PCIe.

9560-8i is specifically U.3 but supports U.2… why? Because U.2 PCIe backplane is still PCIe.

I spotted one of Patrick’s Easter Eggs: “outlets that can stay powered on while driving, so we though it might be interesting” – though instead of thought. (I never used to comment on these as I thought they were just typos, but once Patrick inserted some typos intentionally and then was surprised that hardly anyone noticed – so now I figure we are supposed to point them out just in case.)

Like @michaelp I was also wondering about why you would see the 10TB drive half way into the trip, wasn’t it there from the beginning?

“Lost”?

Interesting, who would lost when we compare the price lol

Why is the Threadripper 7000WX, especially Threadripper, so slow compared to Emerald Rapids or Bergamo CPUs with NVMe on your charts? Any ideas, Patrick?

That spec sheet – Power Off Data retention.. 90 days at 104f(40c). So one hot summer on a shelf in texas/arizona and your data is gone ?

First, how do you review high capacity drives without discussing the IU/TU/whatever. Is this drive using 4k, 16k, or 64k as the smallest transfer unit? The IOPS numbers are somewhat misleading if you’re not showing off the drive’s strengths.

Secondly, when are you going to use enterprise benchmarking practices instead of consumer ones? There’s no way I’d go to you for industry analysis if you can’t even properly test a basic enterprise storage device. Seriously, CrystalDiskMark has no place in a review for enterprise storage.

Finally, how much Tesla stock do you own and are the Cyber Truck mentions paid for?

Terry Wallace wrote “That spec sheet – Power Off Data retention.. 90 days at 104f(40c).”

Thanks for posting. I was wondering what the power off data retention times were like. Given how malware and human errors affect online backups, it seems the world needs a modern media that can be shipped around in trucks and stored off-site.

After all the 2.5 Gbit switches have been rounded up, I’d vote for a focus on offline backup media.

@John S

What tools are you using to benchmark business SSDs?

Actually curious.

FIO on Linux. Windows can use vdbench or iometer, but enterprise storage is mostly Linux.

For synthetic tests you want to test the standard 4 corners. Also look at large block writes concurrent with small block reads and focus on the QoS numbers (eg: 99.999% latency on reads). With the new very high-capacity drives I would test IOPS at increasing sizes of IOs to see how it scales as most applications that will use these will already be tuned to do larger IOs since they’re applications migrating from HDD.

FYI, the driver and block level differences between Windows and Linux are pretty big. Only testing on Windows also prevents you from collecting enterprise-worthy data.

I would recommend testing applications too. RocksDB (YCSB) / Postgres or MS SQL (TPC-C & TPC-H via HammerDB) / Cassandra (YCSB) / MySQL (sysbench) / various SPEC workloads.
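As a rough illustration of the "four corners" and block-size sweep ideas in the comment above, here is a small sketch that only builds fio command lines and prints them. The target path, block sizes, queue depth, and runtime are all placeholder assumptions, not settings anyone in this thread has recommended. Pointing write jobs at a real device destroys the data on it, so nothing here is executed.

```python
from typing import Dict, List

# The "four corners": sequential and random, read and write.
# Block sizes are illustrative assumptions.
FOUR_CORNERS: Dict[str, Dict[str, str]] = {
    "seq_read": {"rw": "read", "bs": "128k"},
    "seq_write": {"rw": "write", "bs": "128k"},
    "rand_read": {"rw": "randread", "bs": "4k"},
    "rand_write": {"rw": "randwrite", "bs": "4k"},
}

TARGET = "/dev/nvmeXnY"  # placeholder; replace with a device you can wipe


def fio_command(name: str, target: str, rw: str, bs: str,
                iodepth: int = 32, runtime_s: int = 300) -> List[str]:
    """Build (but do not run) a fio argument list for one job."""
    return [
        "fio",
        f"--name={name}",
        f"--filename={target}",
        f"--rw={rw}",
        f"--bs={bs}",
        f"--iodepth={iodepth}",
        "--ioengine=libaio",
        "--direct=1",
        "--time_based",
        f"--runtime={runtime_s}",
        # Ask for tail latencies, including the 99.999% figure mentioned above.
        "--percentile_list=99:99.9:99.99:99.999",
        "--group_reporting",
    ]


corner_cmds = [
    fio_command(name, TARGET, opts["rw"], opts["bs"])
    for name, opts in FOUR_CORNERS.items()
]

# Random reads at increasing IO sizes, to see how IOPS scale with block size.
sweep_cmds = [
    fio_command(f"rand_read_{bs}", TARGET, "randread", bs)
    for bs in ["4k", "16k", "64k", "128k"]
]

for cmd in corner_cmds + sweep_cmds:
    print(" ".join(cmd))
```

The mixed case (large block writes running alongside small block reads) would need two jobs in one fio invocation and is left out to keep the sketch short.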

@CW Was it a retail purchase, or under some corporate agreement or something like that?

“Since this drive is 61.44TB the 0.58 DWPD rating may not seem huge”

For perspective, 0.58 DWPD would mean the drive sat writing at over 400MB/s continuously 24/7.
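That figure can be checked with a few lines of arithmetic. This sketch assumes decimal units (1TB = 10^12 bytes, 1MB = 10^6 bytes) and a perfectly even write rate across all 86,400 seconds of the day.

```python
def dwpd_to_mb_per_s(capacity_tb: float, dwpd: float) -> float:
    """Sustained write rate in MB/s (decimal units) needed to hit a DWPD rating."""
    bytes_per_day = capacity_tb * 1e12 * dwpd
    seconds_per_day = 24 * 60 * 60
    return bytes_per_day / seconds_per_day / 1e6


rate = dwpd_to_mb_per_s(61.44, 0.58)
print(f"0.58 DWPD on 61.44TB is about {rate:.0f} MB/s around the clock")
```

That works out to roughly 35.6TB written per day, or about 412MB/s sustained.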