Small Business Server Solution with Backup DR Capabilities Hardware

This is the exact list of parts used on the example server here, along with a short explanation about why that component was chosen.

Chassis: Chenbro SR30169

This NAS/server chassis is small, inexpensive, takes a standard ATX PSU (one is included), and has four front-loading hot-swap drive bays with universal 2.5”/3.5” drive trays. It is functionally very similar to Supermicro’s 721TQ-250B, but I prefer the Chenbro over the Supermicro due to the universal drive trays and standard ATX PSU.

Also, the airflow seems better in the Chenbro, since the air pulled through the drive cages is directed at the motherboard area.

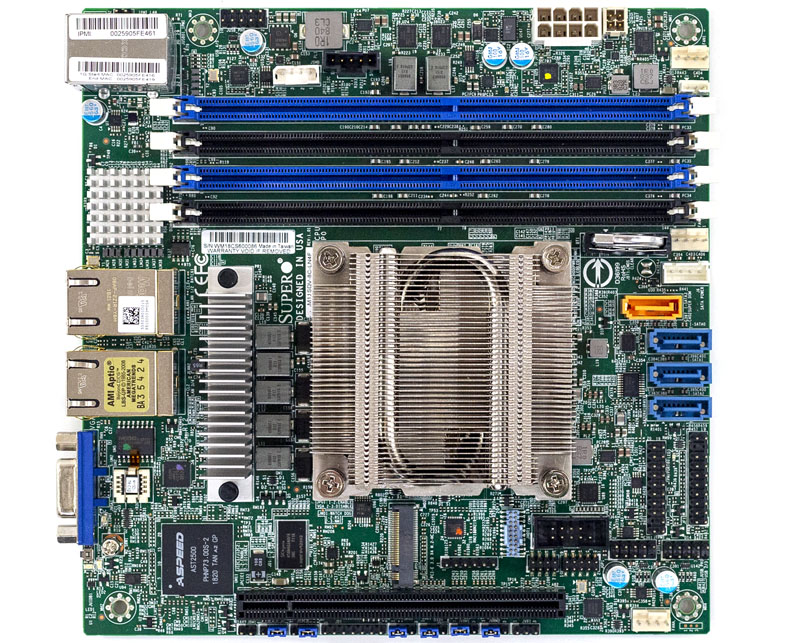

Motherboard / CPU: Supermicro M11SDV-8C-LN4F

This is just a great little system board and CPU for a small server. You can read our full Supermicro M11SDV-8C-LN4F Review if you want to learn more about it. This platform has an embedded AMD EPYC 3251 processor, which is a snappy 8-core/16-thread solution. The platform may be small, but it offers more performance and expandability than something like the HPE ProLiant MicroServer Gen10 Plus, which makes it a big upgrade for a low incremental cost.

As a quick note here, we use the 8C rather than the 8C+ because for some reason the 8C is $200 cheaper where I acquired the component. The only difference between the two is the lack of active cooling on the CPU on the 8C, and $200 represents a significant cost savings on this component. In the chassis we selected, we can get enough airflow to cool the part.

Memory: 4x Crucial CT16G4RFD4266

The AMD EPYC 3251 CPU utilizes DDR4-2666 speed memory and we suggest using ECC RDIMMs with the platform. At the time of purchase, it was cheaper to buy 4x 16GB sticks than 2x 32GB. This system is unlikely to require more than 64GB of RAM for the effective lifetime of the server and so the upgradability of using only two DIMMs was not prioritized over the cost savings of using four.

There is a lot of flexibility here. The platform itself supports up to 512GB of memory, which means you can use up to 4x 128GB LRDIMMs if you are so inclined. We think a more realistic change to our recipe is using 4x 32GB RDIMMs for 128GB of memory. That yields 16GB/core or 8GB/thread, which is twice what we typically use and need.
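The per-core and per-thread figures above are simple division. As a minimal sketch, assuming 8 cores with 2 threads each (the EPYC 3251 configuration described earlier) and a hypothetical helper name `memory_per_unit`:

```python
from typing import Tuple


def memory_per_unit(total_gb: int, cores: int, threads_per_core: int = 2) -> Tuple[float, float]:
    """Return (GB per core, GB per thread) for a given total memory size."""
    threads = cores * threads_per_core
    return total_gb / cores, total_gb / threads


# Compare the 4x 16GB configuration used here with the 4x 32GB alternative.
for dimm_gb in (16, 32):
    total_gb = 4 * dimm_gb
    per_core, per_thread = memory_per_unit(total_gb, cores=8)
    print(f"4x {dimm_gb}GB = {total_gb}GB: {per_core:g}GB/core, {per_thread:g}GB/thread")
```

The 4x 16GB build works out to 8GB/core and 4GB/thread, so the 4x 32GB option doubles both figures.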

RAID Controller: Areca ARC-1216-4i

I am personally a big fan of the Areca controllers for their built-in out-of-band management interface. Since this server will be remotely managed, the ability to configure the RAID controller to send alert emails in the case of disk problems is invaluable. As a backup for that, the beeper is loud enough to wake the dead and will absolutely never go unnoticed in the quiet office where this server is going to be deployed.

The Areca ARC-1216-4i is the smallest current-gen Areca controller and fits perfectly with this chassis. One additional note: the Areca drivers are not included in the base VMware ESXi distributions, so I have to create a custom installation image that integrates the drivers for this RAID card. If you do not want to do this, you can use a Broadcom or Microchip adapter, but you lose the out-of-band management feature.

Storage Arrays: Samsung and Seagate

Storage is a big part of this solution, and we need SSDs for the primary array, hard drives for the secondary array, and boot media.

Primary SSD Array: 2x Samsung 860 EVO 2TB

The clients we build these for are extremely cost-conscious, and the workload handled by this server is not very storage intensive; thus the decision was made to go with consumer-class SSDs for the primary array. A side benefit is that, if needed, replacement SSDs can be acquired at any local computer shop at a moment’s notice – something not available with an enterprise-grade part. I have personally had great luck with the Samsung SSDs on both client and server deployments.

Also, getting a Samsung server SSD replaced under warranty is significantly more difficult than a consumer drive if you do not have a dedicated Samsung sales rep. For a drive we recently had fail, Samsung’s small business data center SSD RMA process took many weeks just to get an RMA number issued. If you are purchasing in the data center or enterprise SSD category, we suggest Intel, Micron, Kioxia, SanDisk or another brand until Samsung fixes this process. I have an in-warranty Samsung SSD failure, but after two months I am still waiting for a replacement or refund.

Although we are using 2TB SSDs, one can scale this up to larger drives easily if the deployment requires it. If you do decide to use larger SSDs, you will also likely want larger HDDs.

Secondary HDD Array: 2x Seagate Enterprise Capacity 6TB

For the secondary HDD array, we are using two Seagate Enterprise Capacity 6TB SATA 7200rpm drives. This is an enterprise-class mechanical hard drive. It was not chosen for any particular reason other than striking the right balance of specs and price.

This drive was only a little more expensive than one of its IronWolf brethren and came with a longer warranty, and thus it was selected.

There is a point to be made that one should look at 8TB/10TB helium-filled drives, since helium was a major technology breakthrough in hard drives and those models also offer more capacity. When we put this solution together, the 6TB drives were the right option, but there is a lot of flexibility so long as the HDD capacity stays at 3x the SSD capacity or more.
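The 3x rule of thumb is easy to check when swapping in different drive sizes. A minimal sketch, assuming per-drive capacities in TB and a hypothetical helper name `meets_capacity_ratio`:

```python
def meets_capacity_ratio(ssd_tb: float, hdd_tb: float, min_ratio: float = 3.0) -> bool:
    """Return True when HDD capacity is at least min_ratio times the SSD capacity."""
    return hdd_tb >= min_ratio * ssd_tb


# The build described here: 2TB SSDs paired with 6TB HDDs.
print(meets_capacity_ratio(ssd_tb=2, hdd_tb=6))

# Hypothetical upgrade: 4TB SSDs would call for HDDs of 12TB or more.
print(meets_capacity_ratio(ssd_tb=4, hdd_tb=8))
print(meets_capacity_ratio(ssd_tb=4, hdd_tb=12))
```

The 2TB/6TB pairing sits exactly at the 3x minimum, so any move to larger SSDs needs a matching move to larger HDDs.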

USB Boot Drive: SanDisk Cruzer Ultra Fit

Not much to say here; this is a small form factor USB drive the system will be booting from. A USB boot drive was chosen over a SATA DOM or M.2 SSD for a few reasons: lower price, it is easier to replace if it fails, and for an ESXi boot drive there is no difference once the system is booted up. These drives can be purchased for under $10 in 16GB or 32GB sizes.

Basically any USB thumb drive will do this job; I prefer the compact ones that are very low profile when installed, since the drive will be sticking out the back of the Chenbro chassis. I do not want any chance that it might accidentally be broken while someone is adjusting cabling behind the server.

Replacement Case Fan: Scythe Kaze Flex 120mm

We replace the stock fan in the Chenbro chassis with a Scythe Kaze Flex 120mm fan, part number SU1225FD12H-RP. This is a moderately high RPM 120mm fan. The fan that comes with the Chenbro SR30169 is a PWM model that does not push a lot of air.

Additionally, the stock fan on the SR30169 has an idle RPM low enough that it runs afoul of the Supermicro M11SDV BMC’s minimum fan RPM threshold. Combined with buying the passively-cooled version of the M11SDV system board, that made me feel the need to replace the stock fan on the SR30169, and this is the model that was chosen. It is still reasonably quiet but is rated to push ~90 CFM of air. I do not know the exact throughput of the stock fan on the SR30169, but my chosen fan definitely seems to push more air and is quieter while doing so.

A non-PWM fan was chosen to avoid the step of having to reconfigure fan thresholds in the Supermicro M11SDV IPMI. I have performed system burn-in testing at 100% CPU for many hours with this fan and the passively-cooled CPU, and the system has remained completely stable and cool.
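For readers who keep a PWM fan instead, the threshold reconfiguration mentioned above can be done with ipmitool’s `sensor thresh` subcommand. The sketch below only builds the command line and does not run it. The sensor name `FAN1` and the RPM values are assumptions for illustration, not values taken from this build, and a BMC may round thresholds to its own step size.

```python
from typing import List


def fan_lower_threshold_cmd(sensor: str, lnr: int, lcr: int, lnc: int) -> List[str]:
    """Build an `ipmitool sensor thresh <sensor> lower <lnr> <lcr> <lnc>` command.

    lnr, lcr and lnc are the lower non-recoverable, lower critical and
    lower non-critical thresholds in RPM, in ascending order.
    """
    if not (0 <= lnr <= lcr <= lnc):
        raise ValueError("expected 0 <= lnr <= lcr <= lnc")
    return ["ipmitool", "sensor", "thresh", sensor, "lower", str(lnr), str(lcr), str(lnc)]


# Hypothetical sensor name and RPM values; check `ipmitool sensor list` on the real system.
print(" ".join(fan_lower_threshold_cmd("FAN1", 100, 200, 300)))
```

Choosing a fan whose idle speed already sits above the BMC defaults, as done here, avoids this step entirely.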

Wrapping-up

Hopefully, this hardware guide to the Small Business Server Solution with Backup DR Capabilities piece helps others think through hardware options.

Does it really have to run on Windows? Their website suggests, under system requirements, that it’s capable of running on Linux.

Veeam itself can happily back up Linux or any other OS that can be virtualized under a supported hypervisor, but the Backup & Replication Console itself requires 64-bit Windows. You can find the list of supported OS environments here: https://helpcenter.veeam.com/docs/backup/vsphere/system_requirements.html?ver=100#console

Surprised you didn’t offer the low-cost HA of VMware vSAN in this build?

Joe, I’m not sure exactly which product you’re referring to. Please feel free to shoot me a link over on the STH forums and I’ll try and evaluate it.

Hello Will, not all remote offices have reliable air conditioning, so I would like to know if this setup is OK for an occasional peak afternoon ambient temperature of 45 degrees Celsius? PS: New Delhi summer peak. The motherboard spec seems to support it under Environmental Specifications:

Operating Temperature: 0°C to 60°C (32°F to 140°F).

Also, any rough idea what total initial capital cost we are looking at?

Ram, I would guess this particular setup wouldn’t be happy with the 45C ambient, but that’s just a guess as I’ve never attempted to test it. The 8C+ version of the board would likely be more appropriate with its active cooler, but 45C is a really hot ambient environment so not sure how the setup would hold up in general.

At the time of purchase, the server I built here was approximately $2800 USD all-in.

That’s a helpful article, Will/STH. Joe Shaw, I mean, just the license to do that is over 2x what this entire setup costs, it adds more system and switch port needs, and the system cost is higher. I don’t really understand why you’re talking vSAN and HA. That’s a completely different price class.

Jed, that’s definitely why I asked for clarification. I am unaware of an ‘affordable’ HA or vSAN bundle; vSphere Essentials Plus – which gets you HA – is $5600 by itself. In addition, last time I used it vSAN was super strict about all your hardware being explicitly on the HCL, and meeting those requirements would definitely increase costs versus the equipment chosen here.

Will/STH, excellent article. I’ve been in your shoes before and have built very similar solutions. In the early days, I used FreeNAS on a VM for the storage, with the replication function to sync across to a separate location. Cheap and very reliable; you could technically script some of the automation, but overall not the most user-friendly solution. Now I’m also using Veeam, both on the same host and on a separate PC on a different floor/department to have some sort of “off-site” mirror as well.

Nice one, thanks! We do a similar setup for our clients, but we are using Windows Hyper-V Server (free) as the hypervisor, which offers replica as well, or Proxmox, which is also loved by the STH team, as I can see :)

I have a Micron in-warranty failure that I have been dealing with for over 2 months now. Micron keeps saying it is the VAR’s problem to RMA it, even though the drive was purchased 6 months ago, and the VAR asks why it should be their RMA since it is more than 30 days since purchase. I personally agree with the VAR that it shouldn’t be their RMA at this point, but Micron is trying to send this around in a circle.

In my case, Samsung issued an RMA and I sent in the drive – a 1.92TB PM863 unit. After weeks of no contact, I called and was told that they couldn’t replace the drive because they had no stock of 1.92TB PM863 drives, and so I would be referred to their refunds department, who would be contacting me. The refunds department has no inbound phone number or email address and has failed to reach out to me for ~3 weeks now.

Great advice, for a small company looking to implement a solution 5 yrs ago!

Marc: If you’re so gung-ho to smash up an open, honest and clean review from a trusted resource, then please feel free to produce a 2020, whatever-you-consider-to-be-up-to-date suggestion and review; and, like myself, you will have a multitude of users give unsolicited, unwarranted and uninformed comments about the fact that buying brand new technology to fit a need is unsustainable from a costing POV, and that they are a generation or version back to get started. I appreciate very much the thought, independence and thorough review STH consistently produces FOR FREE, and the multiple ways they inform their base that these are from their perspective and for review purposes only… Your mileage may vary, but it’s a guide as to what works for the dialogue…

Will, how much did the hardware BOM for the system cost you? Do you have a bill of materials and a component-wise price breakdown? It would be great if you could email me the same. Dell has something like the PowerEdge T40 (https://www.dell.com/en-in/work/shop/povw/poweredge-t40), which seems to be much lower in cost.

Hi, you can see page 2 for the BOM. Also, STH will have its T40 review in the next few weeks.

Will,

The cheapest “fault tolerant cluster” we know to be possible is simply to double your hardware and run a single image across two machines with ScaleMP! We have the luxury of a budget for dedicated Stratus gear (NEC rebrand), but the same budget lets us experiment on the wild side, informally and without any warranty of any kind, and ymmverydefinitelyvary. Our software is all written to be highly resilient in the first place, but assigning Hyper-V to processor affinity and pulling the cord on one of the two machines has been found to lose us no data. But we’re feeding the databases from CICS with a GPS-synced high resolution time source, and that’s for starters. Fault tolerant systems are a way of life, not a technical bandaid.

Will,

Dry heat = static discharge and very high mortality rates for any hardware. It pays to maintain humidity the hotter your machine room gets.

Ram Shsnkar,

I think you are in need of a custom cabinet with a heat exchanger and pump for positive pressure inside, so pushing condensation to the outside. The trick is a large sealed expansion chamber regulated by valves, with the air moved by the largest, slowest fans possible, placed at the final exit.