At STH, we have more AMD EPYC combinations tested than just about anywhere. Although we only publish a little over a dozen data points in our public reviews, our testing business is generating well over 1000 performance data points per set of chips. We have somewhere around $125,000 worth of chips in the lab just from the AMD EPYC and Intel Xeon Scalable lines, and that excludes previous generation chips or those from other lines (e.g. Intel C3958 “Denverton” or Xeon D-1587 parts). The bottom line is this: our team does a ton of testing and therefore we get a lot of questions. Today we wanted to publish answers in a FAQ-style format for our readers regarding one of the most asked-about areas: AMD EPYC single socket “P” parts.

Single Socket AMD EPYC FAQ

Let us get started on the FAQ. We are going to order these starting with the most frequently asked questions:

Q: If I put a dual socket capable AMD EPYC processor (e.g. an AMD EPYC 7601) in a single socket system, will I have access to only 64 PCIe 3.0 lanes?

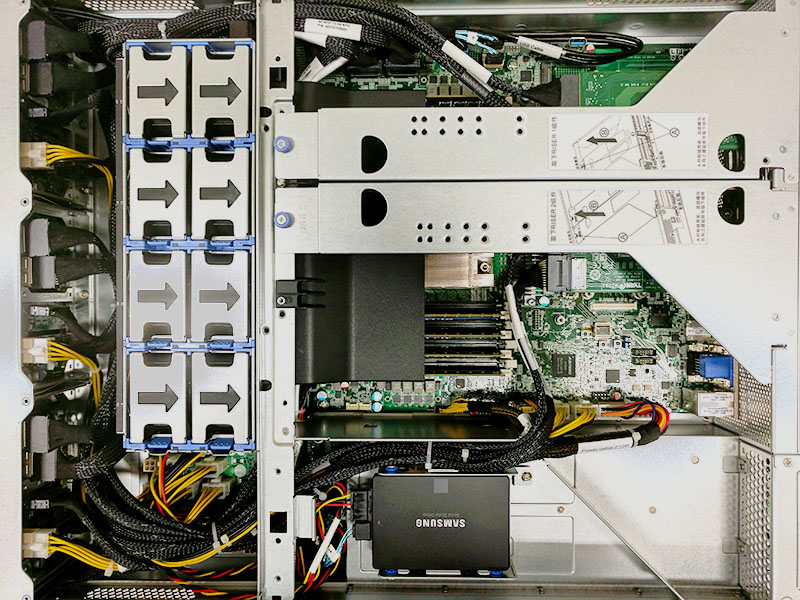

A: You will have access to all 128 PCIe lanes. We tested the AMD EPYC 7401 in a single socket Tyan Transport SX B826T70AE24HR server and had access to all 24 of the NVMe devices we had installed, plus the NICs and boot M.2 devices. That means that the full 128 PCIe lanes were active, not just 64.

Q: If I put a single socket capable AMD EPYC processor (e.g. an AMD EPYC 7351P) in a dual socket system, will I have access to all 128 PCIe 3.0 lanes?

A: Not necessarily. We are seeing most motherboard vendors lay out their EPYC boards such that installing a single AMD EPYC CPU in a dual socket server motherboard leaves half of the PCIe lanes inactive. To be more precise, since AMD EPYC’s high speed I/O lanes can be either SATA or PCIe (or Infinity Fabric), any I/O wired to CPU2 on a dual socket EPYC motherboard is not accessible when only one CPU is installed.

This is very similar to how we saw Intel Xeon E5 series systems and Intel Xeon Scalable systems behave. AMD simply adds a single socket mode that also exposes up to 128 PCIe lanes. If you are only ever planning to use a single CPU in a system, get a single socket AMD EPYC server.

We tried this in our Supermicro 2U Ultra test server and confirmed that only half of the I/O and DIMM slots were active with a single CPU.

Q: What is the performance impact of using a P part versus a standard dual socket capable part?

A: This was a somewhat surprising result, although it should not have been. AMD confirmed that there would be a negligible performance impact from using the P parts, and we were able to reproduce that on some tests. Our tests are tuned to give a <1.5% (and generally <1%) test variance per run, and we have a process to get reliable numbers: we run dozens of test runs, exclude the top and bottom quartiles, then take the mean of the remaining runs. We also track temperature and humidity in the data center. Without accurate power, temperature and humidity monitoring, environmental changes can alter cooling performance, which in turn affects benchmark results.
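The quartile-trimming step described above can be sketched in a few lines of Python. This is only an illustration of the general idea (an interquartile mean), not our actual tooling; the function name `interquartile_mean` and the sample scores are made up for the example.

```python
from statistics import mean


def interquartile_mean(samples):
    """Mean of the runs left after dropping the lowest and highest quarter.

    Hypothetical helper: assumes every run produced one numeric score.
    """
    ordered = sorted(samples)
    cut = len(ordered) // 4  # number of runs dropped from each end
    kept = ordered[cut:len(ordered) - cut]
    return mean(kept)


# Made-up scores: two outlier runs (70.0 and 130.0) fall in the
# discarded quartiles and do not move the result.
runs = [100.0, 101.0, 99.0, 100.5, 99.5, 130.0, 70.0, 100.0]
print(interquartile_mean(runs))
```

With dozens of runs per test, the trimmed quartiles absorb the occasional run that was disturbed by something outside the benchmark itself.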

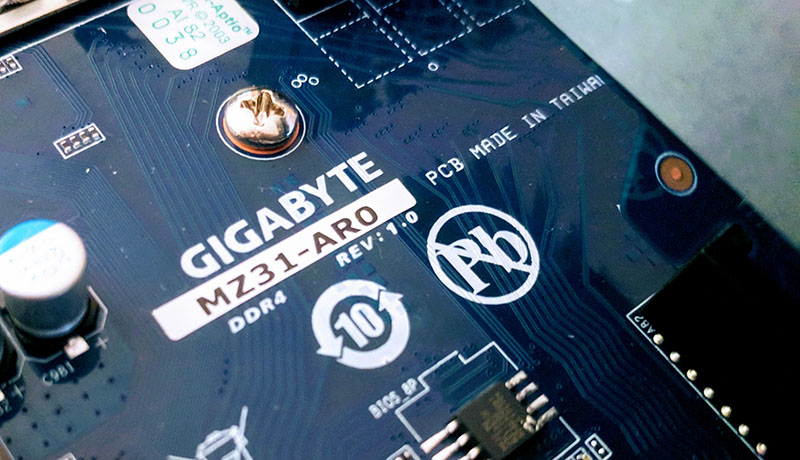

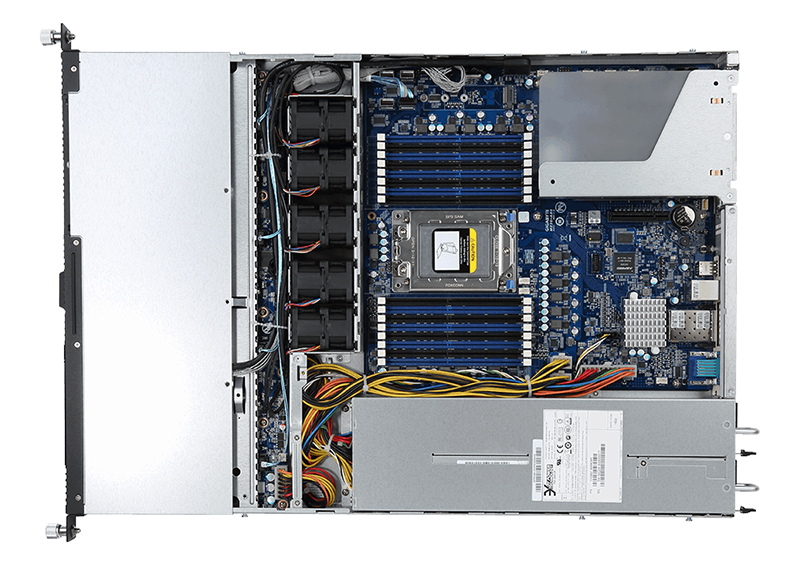

Since we had a single AMD EPYC 7351P and two AMD EPYC 7351 parts, we tested both variants in a Gigabyte R151-Z30 server.

The Gigabyte R151-Z30 is powered by the Gigabyte MZ31-AR0 motherboard and has onboard SFP+ 10 gigabit Ethernet. It also has excellent cooling and a low power overhead as an entry-level offering.

Here were the variances on a few benchmarks. Note that a <1.5% variance is difficult to see on a 0-100% scale, so the scale is truncated and labeled as such:

Is there a difference? Yes. Is it enormous? No. Although the differences were consistent, they are also within our 1.5% testing variance, so we cannot call them significant. For those wondering, the ES1 workload is one of the benchmarks we use for our consulting work rather than for STH. It is an Elasticsearch benchmark where we index and search hundreds of thousands of full-text research papers. Likewise, Redis T6 is one of our Redis test cases.
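As a rough illustration of that reasoning, the comparison boils down to checking whether the gap between two results is larger than the run-to-run variance. The sketch below assumes a simple percent-difference check against the 1.5% figure; the function names and the scores are hypothetical and are not our measured results.

```python
VARIANCE_THRESHOLD_PCT = 1.5  # run-to-run test variance quoted above


def percent_delta(p_part_score, non_p_score):
    """Absolute difference of the P part relative to the non-P part, in percent."""
    return abs(p_part_score - non_p_score) / non_p_score * 100.0


def is_significant(p_part_score, non_p_score, threshold_pct=VARIANCE_THRESHOLD_PCT):
    """True only when the gap is larger than the test variance."""
    return percent_delta(p_part_score, non_p_score) > threshold_pct


# Hypothetical scores: a 0.8% gap sits inside the 1.5% variance band.
print(is_significant(99.2, 100.0))
# A 3% gap would fall outside the band.
print(is_significant(97.0, 100.0))
```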

Q: What is the power consumption impact of using a P part versus a standard dual socket capable part?

A: This one surprised us a bit. We saw about a 3W difference in the P part versus the non-P part in terms of power consumption. It was small, but it was fairly consistent.

Q: How is 1P AMD EPYC for 1U commodity 1A colocation on 120V or 110V?

A: The AMD EPYC 7251 is close. Since one is likely to use 8-16 DDR4 RDIMMs with the system, as well as a number of add-in cards, we are going to suggest looking instead to 2A 120V colocation or 1A 208V power budgets. AMD EPYC systems can easily idle below 100W; however, CPU usage spikes and fans spinning up can cause >120W usage fairly easily.
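The arithmetic behind that suggestion is simply volts times amps. Here is a minimal sketch, assuming the circuit budget is the full volts-times-amps product (it does not model any derating a colocation provider may apply) and using a hypothetical 150W peak draw for illustration:

```python
def circuit_watts(volts, amps):
    """Nominal power budget of a circuit in watts."""
    return volts * amps


def fits_budget(peak_watts, volts, amps):
    """True if the server's peak draw stays within the circuit budget."""
    return peak_watts <= circuit_watts(volts, amps)


peak = 150  # hypothetical peak draw in watts, not a measured figure

for volts, amps in [(110, 1), (120, 1), (120, 2), (208, 1)]:
    budget = circuit_watts(volts, amps)
    print(f"{amps}A @ {volts}V = {budget}W, fits: {fits_budget(peak, volts, amps)}")
```

A 1A circuit at 120V tops out at 120W, which is why a system that spikes above 120W does not fit, while the 240W (2A 120V) and 208W (1A 208V) budgets leave headroom.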

Q: Is there a difference with using PCIe peripherals in single-socket or dual-socket servers?

A: There is. In a single socket server, the AMD EPYC essentially has 32x PCIe lanes per die, and four die per package. If you have 8x NVMe SSDs on those 32 lanes, drive to drive traffic does not need to pass over Infinity Fabric. Likewise, if you have two GPUs installed, the two GPUs can communicate over the same silicon die instead of traversing inter-die Infinity Fabric.
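The lane math above can be written out as a quick sanity check. This sketch assumes each NVMe SSD uses a x4 link (typical, but an assumption here) and only counts lanes; which physical slots and bays map to which die depends on the motherboard layout, so treat the result as a best case. The names are hypothetical.

```python
import math

LANES_PER_DIE = 32    # per the answer above
DIES_PER_PACKAGE = 4  # per the answer above


def package_lanes():
    """Total lanes exposed by one EPYC package in single socket mode."""
    return LANES_PER_DIE * DIES_PER_PACKAGE


def min_dies_spanned(device_count, lanes_per_device):
    """Fewest dies a set of identical devices could occupy, by lane count alone."""
    needed = device_count * lanes_per_device
    return math.ceil(needed / LANES_PER_DIE)


print(package_lanes())          # 4 dies x 32 lanes
print(min_dies_spanned(8, 4))   # 8 NVMe SSDs at x4 each
print(min_dies_spanned(24, 4))  # 24 NVMe SSDs at x4 each
```

Eight x4 SSDs add up to exactly 32 lanes, so they can sit behind a single die. Twenty-four of them need 96 lanes and must span at least three dies, at which point some drive to drive traffic crosses Infinity Fabric.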

Q: When is AMD EPYC shipping? When can I get one?

A: A number of system vendors are already shipping 1P and 2P AMD EPYC systems and, from what we hear, all SKUs have been in production for some time. If you are looking for one-off motherboards, we suggest looking at full systems instead.

The 16 and 32 DIMM configurations of AMD EPYC will often require a chassis-specific design for optimal airflow and expansion options. Further, we would heed the advice of having a single vendor to go back to when requesting firmware/driver updates.

Q: When are OEMs such as Dell EMC and HPE shipping EPYC?

A: From what we hear, we expect them in Q4 2017. Dell EMC recently teased both 1U and 2U, single and dual socket servers at VMworld Europe 2017:

https://www.youtube.com/watch?v=D1-1WgL2Jas

Q: Should I get a single socket “P” part or a standard part?

A: If you are deploying 1P servers, the AMD EPYC 7351P, 7401P and 7551P provide excellent value given their aggressive pricing. If you need to go above or below those CPUs in AMD’s stack, then that limited range may mean using a non-P part in a single socket server. Given AMD’s P offerings cover 16, 24 and 32-core models, we expect most single-socket servers to ship with them.

In dual-socket servers, if they are originally deployed with a single CPU, we suggest using a non-P dual socket capable part to leave open an upgrade path that requires minimal downtime.

Thank you.

Most useful. I didn’t know about the 1 cpu in a 2 cpu server.

Interesting to hear that you do Benchmarks such as ElasticSearch as well instead of only the mostly synthetic CPU benchmarks. Is it possible to get access to this for a fee?

Q: Is there a difference with using PCIe peripherals in single-socket or dual-socket servers?

A: There is. In a single socket server, the AMD EPYC essentially has 32x PCIe lanes per die, and for die per package.

You meant to write “… FOUR die per package.”

Thank you.

Do you plan to test the 7551P? I would like to see the value vs a dual E5-2680 v4.

@Dam’s – tests completed, article is in progress of being written.

We are simply finishing up some of the 2P configs. I think we have 4-5 remaining as of now, limited by a single 2P EPYC test system.

Thanks for the extensive testing. Would you recommend EPYC? Would you recommend them over Intel’s lineup and in which usecase?

I look forward to your unique coverage of the epyc platforms. I and others who want to build a new workstation have some interesting choices on paper with threadripper/ epyc and equivalent priced intel.

However when attempting to plan the costs of an epyc system and choosing a tower based motherboard (in order to get the higher core count, ecc memory, 10Gb onboard nics, IPMI etc), it soon becomes apparent there is no way to buy it.

In the UK at least atm, the processor can be preordered (7401P), but with no motherboard choices, only rack mount barebone server systems. I had the same difficulty with opteron ATX-E boards, and eventually had to order a supermicro board from Germany.

It’s a shame the keenly priced 7401P doesn’t come with the necessary component options for enthusiasts to build a 2017 home server, and instead we seem to be forced down the consumer based threadripper platform with unwanted overclocking and gamer features which I’m sure won’t compete with the 24x7x365 reliability you get from server quality components.

Hi Rob – we have motherboards and CPUs readily available in the US. I do think Threadripper is more interesting for a workstation.

Where are the examples of hot swap able U.2 drives? I don’t understand why I am being linked to this site from my questions regarding hot swap able u.2 drives.

What’s the implication of single socket to server board count, related component count and the needed number of baseboard management controller? I guess maybe the lower power consumption from the single socket architecture would reduce the server board area and maybe some number of components next to it but will it reduce the server board count or instead increase it? What the impact to the number of baseboard management controller we need then?