One question asked frequently on this site is how low-cost Infiniband host channel adapters (HCAs) can be used to achieve high data transfer speeds between workstations, servers and storage. Unlike standard 10/100/1000/10000 Ethernet connections, Infiniband is a bit more complicated to set up and does not work out of the box. To the inexperienced user, setting up Infiniband can seem daunting, while the unaware may assume it is as easy as plugging in cards and connecting cables. The real answer lies between those two extremes. With this guide, you should be able to set up Infiniband on an Ubuntu 12.04 system using low-cost components and see a 10 gigabit connection (or more) for under $100 by completing 16 steps.

There are two options for a SCSI target with SRP functionality on Linux: the Linux-iSCSI.org project (LIO) and the generic SCSI Target Subsystem for Linux (SCST), with the latter having more support for older Infiniband hardware. This how-to will step you through setting up an SRP target using both a virtual disk and a block I/O device.

Test Configuration

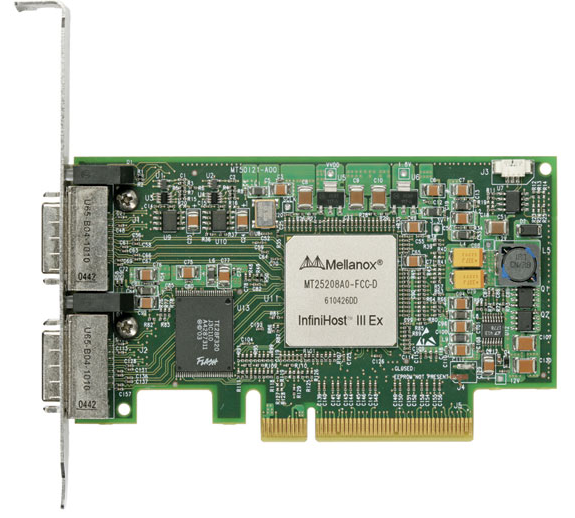

The Mellanox MHEA28-XTC cards I use for this how-to are dual-port 10Gbps MemFree Infiniband host channel adapters (HCAs) with a PCI-e x8 interface. For both the target and the initiator, electrically x8 PCI-e slots are used. The following steps are fairly generic and should work at least with Mellanox HCAs. The Mellanox MHEA28-XTC cards are also available very inexpensively (see this ebay search).

Target:

- CPU: Intel Core i7-930

- Motherboard: Asus P6X58D-E

- Memory: 24GB (6x4GB) G. Skill Sniper DDR3 1600

- SAS HBA: IBM ServeRAID M1015 with IT firmware

- Drives: Boot: Intel SSD 320 80GB, Target: 2x Crucial C300 64GB

- Infiniband HCA: Mellanox MHEA28-XTC

Initiator:

- CPU: Intel Core i7-2600K

- Motherboard: Asus P67 Sabertooth

- Memory: 16GB (4x4GB) G. Skill Ripjaw DDR3 1600

- Drive: Crucial M4 256GB

- Infiniband HCA: Mellanox MHEA28-XTC

Creating a Virtual Disk

Note: The first two steps can be skipped if you plan on putting the vDisk on an already existing partition.

1. For this how-to, the vDisk will reside on a Linux RAM disk using a tmpfs file system. Start by creating a mount point for the tmpfs mount.

max@IBTarget:/ $ mkdir /tmp/RAM

2. Create the file system and mount it at the DIR created in step 1. The RAM disk will be used only for testing, thus a 10GB file system is plenty large.

max@IBTarget:/ $ sudo mount -t tmpfs -o size=10G tmpfs /tmp/RAM

Optional: Use the df command to check the new mount. It will be the last line of the output.
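The optional check can also be scripted. The following is a minimal sketch, assuming the usual /proc/mounts layout (device, mount point, file system type); the function name is_tmpfs_mount and its optional second argument are my own illustration, not part of any tool used in this guide.

```shell
# Succeeds if the given path is mounted as tmpfs.
# $1 = mount point, $2 = mounts table to read (defaults to /proc/mounts).
is_tmpfs_mount() {
    awk -v mp="$1" '$2 == mp && $3 == "tmpfs" { found = 1 } END { exit !found }' \
        "${2:-/proc/mounts}"
}
```

For example, `is_tmpfs_mount /tmp/RAM && echo "RAM disk is mounted"` prints the message only when the mount from step 2 is in place.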

3. Create a blank file using the dd command. The following will create an 8GB file named vdisk0 in the RAM DIR.

max@IBTarget:/ $ dd if=/dev/zero of=/tmp/RAM/vdisk0 bs=1024M count=8
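Before exporting the file, it can be worth confirming that dd wrote the full 8GB (8 blocks of 1024MB). This is a small sketch under the assumption that GNU stat is available, as it is on Ubuntu; the helper names file_size_bytes and is_size_gib are hypothetical.

```shell
# Prints the size of a file in bytes (GNU stat; %s is the size in bytes).
file_size_bytes() {
    stat -c %s "$1"
}

# Succeeds if file $1 is exactly $2 GiB in size.
is_size_gib() {
    [ "$(file_size_bytes "$1")" -eq $(( $2 * 1024 * 1024 * 1024 )) ]
}
```

With the file from this step, `is_size_gib /tmp/RAM/vdisk0 8 || echo "vdisk0 is not 8GB"` reports a short or failed write.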

Configuring Ubuntu for SRP over Infiniband

1. For an Infiniband network to function, a subnet manager must run on one of the nodes. The subnet manager can run on a switch or, more commonly for a point-to-point setup, on the target or initiator. OpenSM is a free subnet manager, which will be run on the target machine.

max@IBTarget:/ $ sudo apt-get install opensm
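Once OpenSM is running and the cable is connected, a linked port should normally report "State: Active" in the output of ibstat. The sketch below only counts such lines; it assumes ibstat is installed and prints one "State:" line per port, and the name count_active_ports is illustrative.

```shell
# Counts ports reported as "State: Active" in ibstat-style text on stdin.
# "Physical state:" lines are ignored because they do not start with "State:".
count_active_ports() {
    grep -c '^[[:space:]]*State:[[:space:]]*Active'
}
```

A typical call would be `ibstat | count_active_ports`. A result of 0 suggests the subnet manager is not running or the link is down.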

2. Ubuntu does not have a binary package for the SCST suite, so the current version and its modules need to be compiled. A PPA exists, but it carries an old version of SCST (v2) and has not been updated in 75 weeks as of this writing. If a build environment is not already set up, install the meta package build-essential.

max@IBTarget:/ $ sudo apt-get install build-essential

3. Download the SCST code tarball which contains both the kernel modules and the administrative tools from here. Alternately, use Subversion to checkout the current trunk.

max@IBTarget:/ $ svn co https://scst.svn.sourceforge.net/svnroot/scst/trunk scst

4. Change directory to where the tarball was downloaded and untar the file.

max@IBTarget:/ $ cd /(Your Download Folder) && tar xfvz scst.tar.gz

5. Once finished, change directory into the new DIR.

max@IBTarget:/ $ cd scst/trunk

6. Three packages are needed from the SCST suite: scst, scstadm, and srpt.

max@IBTarget:/ $ make scst scstadm srpt

max@IBTarget:/ $ sudo make scst_install scstadm_install srpt_install

7. The init script for SCST needs to be edited to load the SRP target module on boot. In my version of SCST, the line that needed to be changed is line 101.

max@IBTarget:/ $ sudo vim /etc/init.d/scst

The line is in the parse_scst_conf() function.

SCST_MODULES="scst" => SCST_MODULES="scst ib_srpt"
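If you would rather not edit the file by hand, the same change can be sketched with sed. This assumes GNU sed and that the line in your copy of the script reads exactly SCST_MODULES="scst" with plain double quotes; the function name add_srpt_module is hypothetical, and it is wise to keep a backup of the script first.

```shell
# Appends ib_srpt to the SCST_MODULES="scst" assignment in an init script.
# $1 = path to the script. Only a line that reads exactly SCST_MODULES="scst"
# (optionally indented) is changed, so running it twice is harmless.
add_srpt_module() {
    sed -i 's/^\([[:space:]]*SCST_MODULES=\)"scst"[[:space:]]*$/\1"scst ib_srpt"/' "$1"
}
```

From a root shell this would be called as `add_srpt_module /etc/init.d/scst`.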

8. SCST is not configured to start on boot by default, so you must make entries for it in the rcX.d DIRs. The simplest way to do this on Ubuntu is to use update-rc.d, which will configure scst to start in all multi-user run levels and stop in all others.

max@IBTarget:/ $ sudo update-rc.d scst defaults
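To confirm the entries were created, you can list the start (S) and kill (K) links in the rcX.d DIRs. This is a rough sketch that assumes the usual SysV naming of S or K followed by a two-digit sequence number; list_rc_links is an illustrative name.

```shell
# Lists the start/kill links for a service under the rcX.d directories.
# $1 = service name, $2 = directory holding rc0.d..rc6.d (defaults to /etc).
list_rc_links() {
    ls "${2:-/etc}"/rc?.d/[SK][0-9][0-9]"$1" 2>/dev/null
}
```

Running `list_rc_links scst` should print one link per run level; no output means the update-rc.d step did not take effect.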

9. Add the ib_umad module to the /etc/modules file so it will be loaded when your machine starts.

max@IBTarget:/ $ sudo sh -c "echo ib_umad >> /etc/modules"
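Running the echo command more than once leaves duplicate entries in /etc/modules. A minimal sketch of a version that only appends when the module is not yet listed follows; the function name ensure_module_listed is my own, and it needs to be run from a root shell to write to /etc/modules.

```shell
# Adds a module name to a modules file only if it is not already listed.
# $1 = module name, $2 = file (defaults to /etc/modules).
ensure_module_listed() (
    file="${2:-/etc/modules}"
    # -x matches whole lines only, -F treats the name as a fixed string.
    grep -qxF "$1" "$file" 2>/dev/null || echo "$1" >> "$file"
)
```

Calling `ensure_module_listed ib_umad` twice leaves a single ib_umad line in the file.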

10. Now load the modules into the kernel using modprobe. There are three modules which need to be loaded: scst_vdisk, ib_umad, and ib_srpt. This will only have to be done once, and after everything is configured, the scst script in init.d will take care of loading the needed modules.

max@IBTarget:/ $ sudo -s

root@IBTarget:/# modprobe scst_vdisk && scstadmin -list_handler

Collecting current configuration: done.

Handler

-------------

vdisk_fileio

vdisk_blockio

vdisk_nullio

vcdrom

All done.

root@IBTarget:/tmp# modprobe ib_srpt && scstadmin -list_driver

Collecting current configuration: done.

Driver

-------

ib_srpt

All done.
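If one of the listings comes back empty, a quick way to see which module failed to load is to compare the names against /proc/modules. The sketch below assumes the standard /proc/modules layout, where each line starts with the module name followed by a space; missing_modules is a hypothetical helper.

```shell
# Prints each named module that is absent from a /proc/modules-style table.
# $1 = table to read (normally /proc/modules); remaining args = module names.
missing_modules() (
    table="$1"
    shift
    for mod in "$@"; do
        grep -q "^$mod " "$table" || echo "$mod"
    done
)
```

For this step the call would be `missing_modules /proc/modules scst_vdisk ib_umad ib_srpt`; no output means all three are loaded.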

11. With the kernel modules loaded the SRP target can now be configured. For this how-to I created two SRP exports using the file I/O and the block I/O handlers.

a. The vdisk_fileio handler allows a virtual disk file to be exported as an SRP device. I will be using the vDisk I created earlier.

root@IBTarget:/# scstadmin -open_dev RAMTest -handler vdisk_fileio -attributes filename=/tmp/RAM/vdisk0

Collecting current configuration: done.

-> Making requested changes.

-> Opening device 'RAMTest' using handler 'vdisk_fileio': done.

-> Done.

All done.

b. The vdisk_blockio handler allows a physical disk, partition, mdadm device, or LVM volume to be exported as an SRP device. Here I will be using an mdadm RAID 0 of two 64GB Crucial C300 SSDs.

root@IBTarget:/# scstadmin -open_dev SSDTest -handler vdisk_blockio -attributes filename=/dev/md0

Collecting current configuration: done.

-> Making requested changes.

-> Opening device 'SSDTest' using handler 'vdisk_blockio': done.

-> Done.

All done.

The configured devices can be viewed using scstadmin -list_device.

root@IBTarget:/# scstadmin -list_device

Collecting current configuration: done.

Handler Device

--------------------------

vdisk_nullio -

vdisk_fileio RAMTest

vdisk_blockio SSDTest

vcdrom -

All done.

12. Add a new group and implicitly create a target.

root@IBTarget:/# scstadmin -add_group WIN7 -driver ib_srpt -target ib_srpt_target_0

13. Export each device as a LUN.

root@IBTarget:/# scstadmin -add_lun 0 -driver ib_srpt -target ib_srpt_target_0 -group WIN7 -device SSDTest -attributes read_only=0

root@IBTarget:/# scstadmin -add_lun 1 -driver ib_srpt -target ib_srpt_target_0 -group WIN7 -device RAMTest -attributes read_only=0
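With more than a couple of devices, typing each -add_lun line by hand gets tedious. The following sketch only prints the commands, using the same scstadmin options shown in this step, so they can be reviewed before being run; the function name print_add_lun_commands is hypothetical, and LUNs are simply numbered from 0 in the order the devices are given.

```shell
# Prints one scstadmin -add_lun command per device, numbering LUNs from 0.
# $1 = target, $2 = group, remaining args = device names in LUN order.
print_add_lun_commands() (
    target="$1"; group="$2"; shift 2
    lun=0
    for dev in "$@"; do
        echo "scstadmin -add_lun $lun -driver ib_srpt -target $target -group $group -device $dev -attributes read_only=0"
        lun=$((lun + 1))
    done
)
```

Calling `print_add_lun_commands ib_srpt_target_0 WIN7 SSDTest RAMTest` prints the same two commands used in this step.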

14. An initiator needs to be added to the initiator group. The initiator name is the target node GUID concatenated with the initiator node GUID. Both are hexadecimal values, and the combined name carries a single “0x” prefix. To get the node GUIDs, use the ibstat command in Linux and vstat in Windows. An example initiator name is “0x001a4bffff0c8398001a4bffff0c935c”. You can also get the initiator name by restarting the scst service once the configuration is saved and watching /var/log/syslog as you try to connect to the target with the initiator. If you are only dealing with a single connection and a single group, you can simply use the wildcard ‘*’ as the initiator name. Quote the wildcard on the command line so the shell does not expand it into file names.

root@IBTarget:/# scstadmin -add_init '*' -driver ib_srpt -target ib_srpt_target_0 -group WIN7
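If you prefer an explicit initiator name over the wildcard, the concatenation described in this step can be sketched as below. It assumes both node GUIDs are given in the 0x-prefixed form that ibstat prints; the function name srp_initiator_name is my own, and splitting the example name into two 16-digit GUIDs is an assumption based on its length.

```shell
# Builds an SRP initiator name from two node GUIDs:
# target GUID first, then initiator GUID, with a single 0x prefix.
# $1 = target node GUID, $2 = initiator node GUID (0x prefix optional).
srp_initiator_name() {
    printf '0x%s%s\n' "${1#0x}" "${2#0x}"
}
```

With the two halves of the example above, `srp_initiator_name 0x001a4bffff0c8398 0x001a4bffff0c935c` prints 0x001a4bffff0c8398001a4bffff0c935c, which can then be passed to -add_init in place of the wildcard.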

15. Write the configuration to /etc/scst.conf and check the configuration for errors.

root@IBTarget:/# scstadmin -write_config /etc/scst.conf

root@IBTarget:/# scstadmin -check_config

This is what my scst.conf looks like when everything is set up:

root@IBTarget:/# cat /etc/scst.conf

    HANDLER vdisk_blockio {
        DEVICE SSDTest {
            filename /dev/md0
        }
    }

    HANDLER vdisk_fileio {
        DEVICE RAMTest {
            filename /tmp/RAM/vdisk0
        }
    }

    TARGET_DRIVER ib_srpt {
        TARGET ib_srpt_target_0 {
            enabled 1
            rel_tgt_id 1

            GROUP WIN7 {
                LUN 0 SSDTest
                LUN 1 RAMTest

                INITIATOR *
            }
        }
    }
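A quick way to double-check what the saved configuration exports is to pull the GROUP and LUN lines out of the file. This is a minimal sketch that assumes the layout shown above, with each LUN line of the form "LUN number device" inside a GROUP block; list_exported_luns is an illustrative name.

```shell
# Prints "group lun device" for every LUN line in an scst.conf-style file.
# $1 = config file (defaults to /etc/scst.conf).
list_exported_luns() {
    awk '$1 == "GROUP" { group = $2 }
         $1 == "LUN"   { print group, $2, $3 }' "${1:-/etc/scst.conf}"
}
```

For the configuration above, it prints one line for SSDTest on LUN 0 and one for RAMTest on LUN 1, both in group WIN7.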

16. Now reboot the machine or reload SCST.

root@IBTarget:/# service scst reload
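After the reload, you may want to confirm that the target came up enabled. The sketch below assumes an SCST build that exposes its sysfs interface under /sys/kernel/scst_tgt, with one "enabled" attribute per target; if your version uses a different layout, adjust the path. The function name target_enabled is hypothetical.

```shell
# Succeeds if the named target reports enabled=1.
# $1 = driver, $2 = target, $3 = SCST sysfs root
# (assumed default: /sys/kernel/scst_tgt).
target_enabled() {
    [ "$(cat "${3:-/sys/kernel/scst_tgt}/targets/$1/$2/enabled" 2>/dev/null)" = "1" ]
}
```

For the target in this guide: `target_enabled ib_srpt ib_srpt_target_0 || echo "target is not enabled"`.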

Conclusion

Overall, this guide is a bit more difficult than the average 10 Gigabit Ethernet how-to. That said, the steps above will let you take two $45 (second-hand) adapters and a CX4 cable and reach 10 gigabit speeds. In the next piece, I will have performance figures for the setup to show that it is in fact working as we would expect. The process is certainly a bit more involved, but it can be done fairly quickly even with a basic Ubuntu installation.

always wanted a guide like this. thx

Great guide. How would it work in CentOS, debian or Mint? Does it work the same way in Solaris Express 11 or OI? Looked intimidating at first but I was able to copy most of those commands.

Very good article.

The problem comes with the latest OFED drivers on Debian. This is the reason why I switched from Squeeze to SL 6.2.

I wrote a post (in French) on setting up the latest drivers available from OFED (version 1.5.4.1) on a Scientific Linux distro (RHEL clone).

http://kurogeek.wordpress.com/2012/06/30/infiniband-ofed-1-5-4-1-sur-scientific-linux-6-2/

Thanks.

Chris.

Jason76, I have done this setup on Mint 12, Wheezy (7/18/12), and Ubuntu. Debian needs some extra steps to get kernel headers, and sudo may not be installed. I just downloaded CentOS, but am not familiar with Red Hat based distros. Note: OFED was not installed on any of the systems I have tested this on, just mainline kernel support.

I have an OI storage server, but haven’t taken it down to install a card. I will work on doing that.

Just a word of caution. With OI and Solaris Express 11 I believe you need a card with onboard memory. That is something the MHEA28-XTC does not have.

Messing around with Ubuntu, SRP and vSphere 4 – got a single host connected to my zfs datastore, thanks!

One thing – can I connect two initiators to a single target?

hi

did it. it works…

but seeing massive kernel errors in log

did you have same prob and is there any resolution?

have 4 vmware esxi 4.1 servers all seeing 3luns of raid SSD pushing 1800MB/s read write!

Cheers

Bruce McKenzie

also tried OI with QDR cards

the performance was terrible on ZFS with 8 drives in ZFS2 volume… i then setup a 60GB read and 60GB Write SSD drives to see if it improved but could only get 300MB/s R/W from it. tried on Solaris 11 also, and it was no better..???

SCSI RDMA protocol, you have not thought.

Hi !

Thanks for the great writing.

It fails miserably for me, though, on Ubuntu 12.04 with ZFS on boot.

I see only fatal errors from the scstadmin commands.

The system does not even shut down and hangs in an endless loop…

What am I missing?

BTW: Do you perhaps know of other examples like yours? I would be happy to proceed!

Thanks anyway,

Manfred

Hi Max,

Thanks for putting this info together. It’s been a real help to me. I’ve followed along for several days now, starting with fresh Ubuntu builds a number of times. I’ve got the infiniband up and going between a Windows 7 box and an Ubuntu 12.04.2 installation. The bump in the road I seem to be having comes when I try to set up the SRP target on the Ubuntu box. In an earlier step when I try to list the drivers:

modprobe ib_srpt && scstadmin -list_driver

the driver list is empty. No mention of ib_srpt.

Later, when I attempt to add a target to a group:

scstadmin -add_group WIN7 -driver ib_srpt -target ib_srpt_target_0

I get the following message:

“No such driver exists.”

This message appears to be originating from the SCST code. I’ve spent days trying to get to the bottom of this.

Admittedly, I’m not a Linux pro. I spend the majority of my time working on code and not operating systems.

If you or anyone else might be in a position to shed a little light on what might be at the heart of the issue, I would be tremendously grateful.

Dear Chris Dalrymple & Max

I am also running into the same problem: ib_srpt is not loading under scstadmin. It seems to me that the ib_srpt driver only includes SRP initiator functionality and does not include SRP target functionality.

Dear Chris Dalrymple,

I solved the problem using 13.04

1. Make sure you manually remove the ib_srpt.ko (version 2.0) installed by 13.04.

2. Make sure you download the latest stable release of scst, which currently is 2.2; its ib_srpt.ko is version 2.2 as well.

i am running ubuntu 12.04.2 with mellanox ofed for linux installed. I later followed the guide you posted above. everything seems to work except scstadmin -list_driver is blank and does not show the ib_srp driver. I am pretty much stopped at that point. I have tried removing and re-installing srp from scst with no luck

Grant

1. Make sure you manually remove the ib_srpt.ko (version 2.0) installed by 13.04/12.04.

2. Make sure you download the latest stable release of scst, which currently is 2.2; its ib_srpt.ko needs to be version 2.2 as well (use modinfo to find out your driver version).

Hello,

I’m trying to follow this nice how-to with a zfs block device, but I can only get the tmpfs device to work..

I’ve created a zfs pool and in there a zfs block device with this command:

sudo zfs create -V tank/test

After that I’m trying to set the block device with scstadmin command as follows:

sudo scstadmin -open_dev ZfsTest -handler vdisk_blockio -attributes filename=/tank/test

In dmesg error number -2 returns, it says that the file does not exist.

Because it is a zfs block device, it’s not mounted, so it’s not there in the filesystem..

Does anyone know how to get this to work with a zfs block device?

Thanks in advance.

Cheers

Nevermind, I wasn’t pointing to the block device, but to the zfs structure.

The zfs block devices are under /dev/zvol/[poolname]/[blk_dev_x]

Hello

I read this article of yours from ten years ago with great interest. Is there any chance that you will make needed changes to it and help how to do the same installation for a modern ubuntu 20 or 22?

Thanks in advance.