The Intel Xeon E7 series is meant for high-end applications, ranging from real-time in-memory analytics to ERP applications from vendors such as Oracle and SAP. Downtime in these applications can have significant implications for revenue and reporting. We decided to take two similarly configured quad-socket Intel Xeon E7 V3/ V4 systems to see how long the boot process takes. Reboots for patching or maintenance are one of the biggest causes of downtime on these systems. If you think that having over 70 cores / 140 threads would mean faster booting than your notebook, you would be incorrect.

Compared with the Intel Xeon E5 series, far fewer vendors offer systems with four Intel Xeon E7 chips. We have both a Dell and a Supermicro system that we are using to see just how fast these systems boot.

Test Configurations

We had two similarly configured systems. The Dell system uses high-end Intel Xeon E7 V4 processors, while the Supermicro system uses high-end Intel Xeon E7 V3 processors. Both systems are compatible with V3 and V4 chips. We only had 1TB of RAM available for this test because of several other systems we had set up concurrently, so each system had 512GB installed using 32GB DDR4 RDIMMs. These systems can use up to 12TB of RAM, and that much RAM would have a marked impact on initialization and boot times.

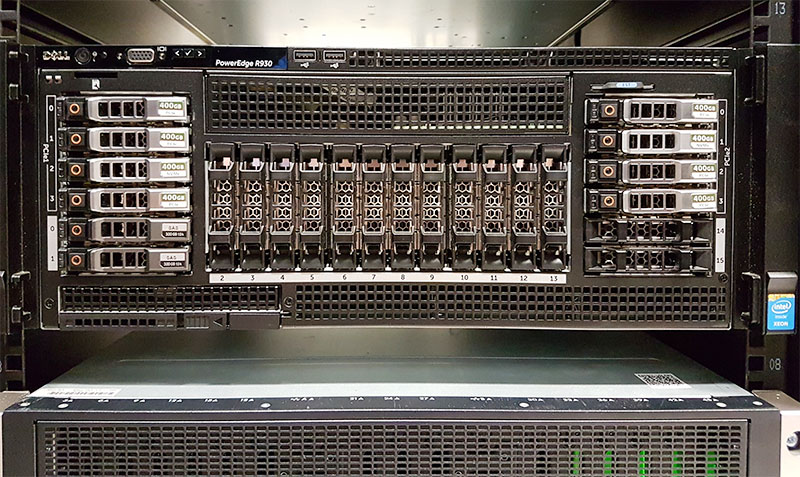

Test System 1: Dell Quad E7 V4

- Platform: Dell PowerEdge R930

- CPUs: 4x Intel Xeon E7-8890 V4 (24 core/ 48 thread each, 192 threads total)

- RAM: 512GB (16x 32GB DDR4 RDIMMs)

- Networking: Mellanox ConnectX-3 Pro 40GbE, Intel 10GbE

- HBA: Dell PERC 710i

- SAS SSD: 400GB Samsung

Test System 2: Supermicro Quad E7 V3

- Platform: Supermicro SuperServer 8048B-TR4FT

- CPUs: 4x Intel Xeon E7-8870 V3 (18 core/ 36 thread each, 144 threads total)

- RAM: 512GB (16x 32GB DDR4 RDIMMs)

- Networking: Mellanox ConnectX-3 Pro 40GbE, Intel 10GbE

- HBA: LSI SAS3008

- SAS SSD: 400GB Samsung

We configured both systems to skip PXE booting but left Option ROMs enabled. The SAS controllers were set to HBA mode, and we booted from non-RAID 400GB SAS SSDs. We did have the Dell Lifecycle Controller active. From a systems management perspective, the Dell Lifecycle Controller is awesome, but we did want to show its impact on boot time.

Racing Quad Intel Xeon E7 V3/ V4 Systems

We loaded up the management interfaces of each system and opened the iKVM sessions. We then started recording video so we could show the boot processes side-by-side. While we could have used text-based tools, we wanted to show the impact in an easy-to-follow format.

As you can see, both systems take 4.5 minutes or more to boot. To hit “five nines” (99.999%) uptime, downtime can be at most 5.26 minutes per year. “Four nines” (99.99%) uptime allows 52.56 minutes of downtime per year or less, which equates to around 4.38 minutes per month.
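The downtime budgets above come from multiplying the minutes in a year by the allowed unavailability. Below is a minimal sketch, assuming a 365-day year split into 12 equal months (this assumption reproduces the 5.26, 52.56 and 4.38 minute figures); the function name `downtime_budget_minutes` is ours, chosen only for illustration.

```python
# Downtime budget implied by an "N nines" availability target.
# Assumption: a 365-day year (525,600 minutes) split into 12 equal months,
# which reproduces the 5.26, 52.56 and 4.38 minute figures in the text.

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def downtime_budget_minutes(nines: int) -> tuple[float, float]:
    """Return (allowed minutes per year, allowed minutes per month)."""
    unavailability = 10 ** (-nines)  # e.g. 5 nines -> 0.00001
    per_year = MINUTES_PER_YEAR * unavailability
    return per_year, per_year / 12


for n in (4, 5):
    per_year, per_month = downtime_budget_minutes(n)
    print(f"{n} nines: {per_year:.2f} min/year, {per_month:.2f} min/month")
# 4 nines: 52.56 min/year, 4.38 min/month
# 5 nines: 5.26 min/year, 0.44 min/month
```

Using a 365.25-day year instead would nudge the four nines figure to about 52.60 minutes, so the choice of year length matters at the second decimal place.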

The practical implication is that monthly reboots (e.g. for OS patches) on Intel Xeon E7 V3/ V4 systems mean sub-“four nines” uptime: twelve boots of at least 4.5 minutes each add up to 54 minutes or more, above the 52.56-minute annual budget, and that does not include any shutdown time. Likewise, hitting 99.999% availability would mean that the Dell PowerEdge R930 system could not be rebooted.
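To make the monthly-reboot arithmetic concrete, here is a small sketch that counts only boot time against a 365-day year, ignoring shutdown time and any other outages, as the paragraph above does. The 4.5-minute boot is the lower bound from our test; the helper names `availability_with_reboots` and `meets_target` are hypothetical.

```python
# Does a reboot schedule fit inside an availability target?
# Assumptions: 365-day year; boot time is the only downtime counted
# (no shutdown time, no unplanned outages).

MINUTES_PER_YEAR = 365 * 24 * 60


def availability_with_reboots(boot_minutes: float, reboots_per_year: int) -> float:
    """Fraction of the year the system is up if each reboot costs boot_minutes."""
    downtime = boot_minutes * reboots_per_year
    return 1 - downtime / MINUTES_PER_YEAR


def meets_target(boot_minutes: float, reboots_per_year: int, nines: int) -> bool:
    """Compare total reboot downtime with the budget for `nines` nines."""
    downtime = boot_minutes * reboots_per_year
    budget = MINUTES_PER_YEAR * 10 ** (-nines)
    return downtime <= budget


# Monthly patching with a 4.5-minute boot.
avail = availability_with_reboots(4.5, 12)
print(f"availability: {avail:.6%}")                    # availability: 99.989726%
print("four nines met:", meets_target(4.5, 12, 4))   # four nines met: False
```

Comparing minutes of downtime against the budget, rather than comparing two availability fractions, avoids floating-point noise right at the boundary.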

Final Words

The way many IT organizations deal with this is to create “maintenance windows” during which the systems are not expected to be online. They can then patch and reboot the systems during these windows and still report excellent uptime figures. Although Intel does tout the RAS features of the E7 V3/ V4 platform, there is a real impact on uptime and reliability simply from booting these servers. This is not specific to Dell or Supermicro. We did not have an HPE DL580 Gen9 server available in the lab; however, it likewise initializes memory slowly (serially) and takes several minutes to boot.
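A rough illustration of why maintenance windows change reported uptime. This sketch assumes, hypothetically, an SLA that excludes scheduled windows from the measured period, and a system that is offline for each entire 60-minute monthly window; real SLA definitions vary by contract, and the function names are ours.

```python
# Availability with and without excluding scheduled maintenance windows.
# Hypothetical SLA convention: scheduled windows are removed from the
# measured period, so only unplanned downtime counts against uptime.

MINUTES_PER_YEAR = 365 * 24 * 60


def raw_availability(downtime_min: float) -> float:
    """Availability counting every minute of downtime, planned or not."""
    return 1 - downtime_min / MINUTES_PER_YEAR


def sla_availability(unplanned_min: float, window_min: float) -> float:
    """Availability measured only over time the service is expected online."""
    expected_online = MINUTES_PER_YEAR - window_min
    return 1 - unplanned_min / expected_online


# Hypothetical year: twelve 60-minute patch windows, no unplanned outages.
windows = 12 * 60
print(f"raw: {raw_availability(windows):.4%}")     # raw: 99.8630%
print(f"SLA: {sla_availability(0, windows):.4%}")  # SLA: 100.0000%
```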

For the fun fact of the day: if you have an SSD in your laptop, odds are it boots much faster than these large 4-socket systems.

This shouldn’t come as a surprise to anyone who has worked with enterprise hardware. I remember systems with separate RAID cards that would take a couple of minutes just to boot the RAID card firmware before the rest of the boot sequence even got going. You’ll note that the vast majority of the “boot” process is spent in firmware while the system self-tests; actually booting the OS takes only a few seconds. The processing power of the CPUs is irrelevant.

Incidentally, if you need five nines uptime 24/7 and scheduled service windows aren’t an option, the solution is easy: use a high-availability cluster setup where at least one machine (usually more) is up at any given time.

If you’re running a five-nines environment on a single, standalone server, you’re going to be in a whole world of hurt when (yes, when, not if) you run into an unexpected outage due to hardware failure.

Five nines isn’t supposed to refer to uptime of a *server*, it’s supposed to refer to the uptime of a *service*.

I just sent this to one of my new clients. They run the primary database for everything their $15M/yr company does on a hosted HP DL580 Gen9. It is hosted by one of the very large providers, so they think it will never go down.

Frankly, I’m a little surprised that STH deemed this worthy of an article/posting. Given the knowledge level demonstrated by previous posts, this one seems amateurish. The subtitle “Results will shock you” is outlandish. I fully expected the big systems to take a long time because I deal with big systems regularly; as it would seem you guys do as well. Maybe that’s an incorrect assumption on my part.

Hi Scott,

Sometimes we post these tidbits just to present third-party data for folks to use in their discussions with others. We have many SMB service providers on the site, and content like this helps them have those discussions. The comment from BostonRSox is a good example of how these get used.

Back in the day the uptime was a point of pride for administrators. Nowadays I see it more as a sign of complacency.

You could use something like ‘ksplice’ in Linux to reduce the need for reboots…