QNAP GM-1002 TNS-h1083X-E2236-16G Node Overview

In the rear of the system, there are two nodes. Although the PSUs are shared, we illuminated the A-side node in blue and the B-side node in green to help with orientation here. We should also note that we successfully swapped the nodes between slots, so the A/B designation is determined by where a node is placed in the chassis.

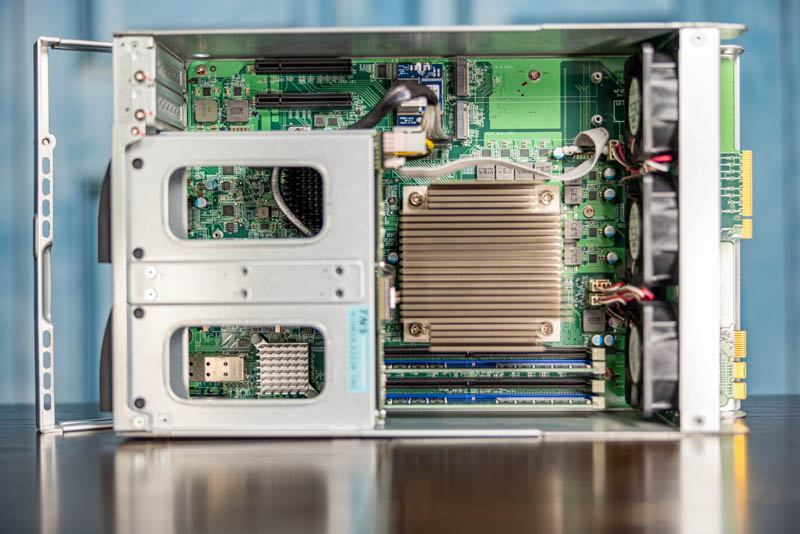

The nodes themselves have a latching mechanism and are around 2U tall each. We are going to start our overview at the connector end that is placed into the backplane.

First, one can see the connectors that provide power and data connections. One of the two connections may look somewhat familiar to our readers. The big benefit of this connectivity is that the units can be swapped in and out without removing any cables. If one needs to upgrade a node, send it in for service, or simply remove it for another reason, this can be done without fiddling with cables.

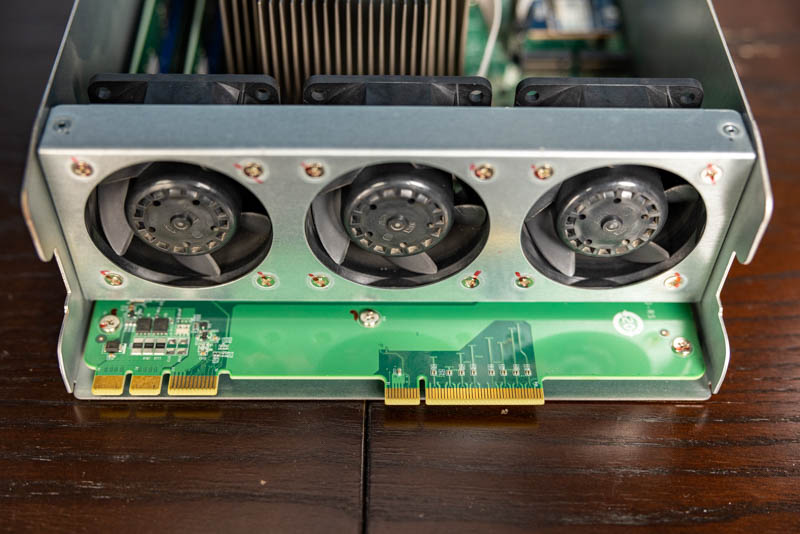

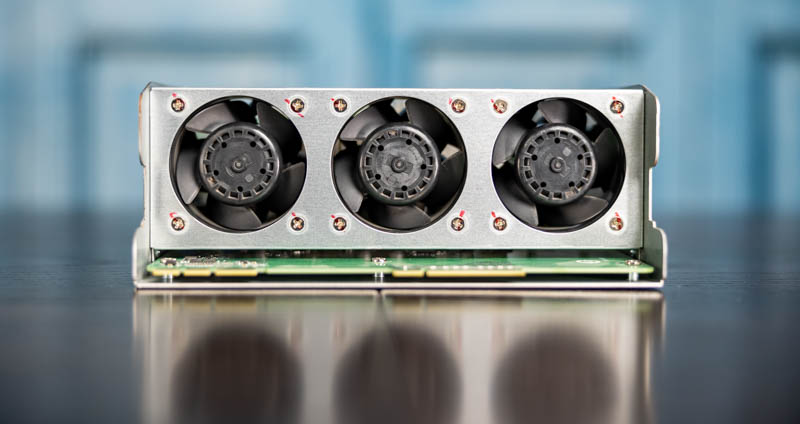

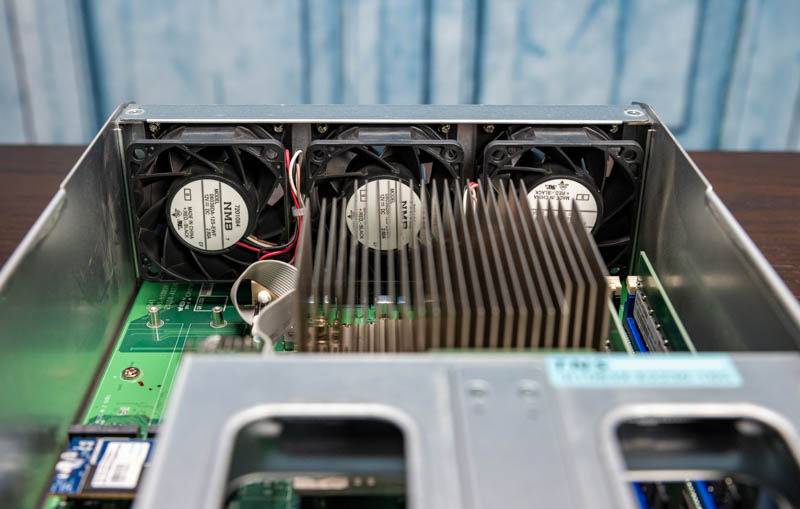

Each node has three fans. These fans pull air through the front drive bays and then through the node. Some multi-node chassis use shared chassis fans, while others put fans on each node. The benefit of having fans on each node is that one can swap fans without removing the entire chassis from a rack.

Just behind this, the TNS-h1083X-E2236-16G NAS node has an Intel Xeon E-2236, a 6-core/12-thread CPU. For an 8- to 10-bay NAS, this is plenty of CPU power to run NAS functions as well as some apps and virtual machines.

For those who want to use the QNAP app and virtualization functions, there is 16GB of memory installed. By default, we get two 8GB DDR4 ECC UDIMMs. With the Intel Xeon E-2236, we can go up to 128GB in a 4x 32GB configuration.

We should quickly note that the GM-1001 uses TNS-h1083X-E2234-8G nodes, which have an Intel Xeon E-2234 and 8GB of memory.

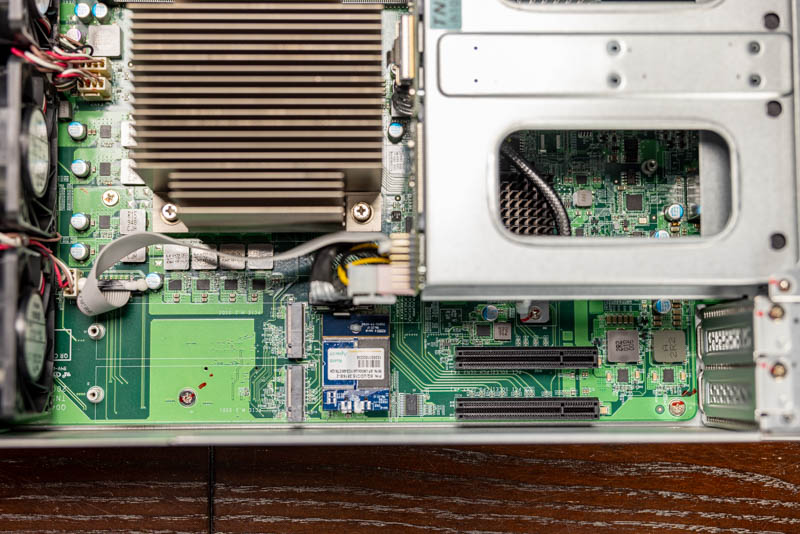

Behind the fan opposite the four DDR4 DIMM slots, we have dual M.2 slots. These are M.2 2280 PCIe Gen3 x2 slots, so one can add two NVMe SSDs here, each running on a x2 link. Both slots are connected to the PCH.
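To put the x2 link width in perspective, here is a back-of-the-envelope Python sketch of the ceiling for each M.2 slot. It assumes the standard PCIe Gen3 signaling rate of 8 GT/s per lane with 128b/130b encoding and ignores packet and protocol overhead, so real SSD throughput will land somewhat lower. The function name is our own, for illustration only.

```python
# Approximate PCIe Gen3 link ceiling, per direction, before protocol overhead.
GEN3_GT_PER_S = 8.0          # PCIe Gen3 signaling rate per lane (GT/s)
GEN3_ENCODING = 128 / 130    # 128b/130b line encoding efficiency


def pcie_gen3_mb_per_s(lanes: int) -> float:
    """Return approximate usable MB/s (decimal) for a PCIe Gen3 link of `lanes` width."""
    gbit_per_lane = GEN3_GT_PER_S * GEN3_ENCODING   # ~7.877 Gb/s after encoding
    return lanes * gbit_per_lane * 1000 / 8         # Gb/s -> MB/s


per_slot = pcie_gen3_mb_per_s(2)
print(f"One M.2 Gen3 x2 slot: ~{per_slot:.0f} MB/s")
print(f"Both M.2 slots:       ~{2 * per_slot:.0f} MB/s")
```

A typical x4 NVMe SSD installed here should simply link at x2, so each drive tops out around 2 GB/s rather than the roughly 3.9 GB/s ceiling of a Gen3 x4 link.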

Behind those slots, we have a DOM (disk-on-module) that holds the firmware for the unit.

Behind that DOM, we have two PCIe slots. Both are PCIe Gen3: one is an x4 electrical slot (PCH) and one is an x8 electrical slot (CPU). QNAP has a number of add-in cards, including external SAS and SATA controllers to connect JBODs, 32Gb/16Gb FC cards, and additional NICs. One is limited slightly by the electrical lane counts and the low-profile form factor, but QNAP has a fairly large ecosystem here and has partners such as Marvell and ATTO with products qualified in the system as well.
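The electrical limits matter most for the fastest cards. As a rough illustration, the Python sketch below checks whether a hypothetical dual-port FC card could run both ports at line rate in each slot. It assumes nominal Fibre Channel throughput of 1600 MB/s (16GFC) and 3200 MB/s (32GFC) per port per direction, and the same 8 GT/s, 128b/130b Gen3 math with protocol overhead ignored; it is a link-budget sketch, not a measurement of any QNAP, Marvell, or ATTO card.

```python
# Compare the two PCIe Gen3 slots against hypothetical dual-port FC card needs.
# All figures are approximate, per direction, ignoring protocol overhead.


def pcie_gen3_mb_per_s(lanes: int) -> float:
    """PCIe Gen3: 8 GT/s per lane with 128b/130b encoding, converted to MB/s."""
    return lanes * 8.0 * (128 / 130) * 1000 / 8


# Nominal Fibre Channel throughput per port, per direction (MB/s).
FC_MB_PER_S = {"16GFC": 1600, "32GFC": 3200}

SLOTS = {"x4 (PCH)": pcie_gen3_mb_per_s(4), "x8 (CPU)": pcie_gen3_mb_per_s(8)}

for card, per_port in FC_MB_PER_S.items():
    need = 2 * per_port  # dual-port card with both ports at line rate
    for slot, have in SLOTS.items():
        verdict = "fits" if have >= need else "bottlenecked"
        print(f"dual-port {card} ({need} MB/s) in {slot} (~{have:.0f} MB/s): {verdict}")
```

Under these assumptions, a dual-port 16GFC card fits in either slot, while a dual-port 32GFC card really wants the x8 CPU slot. The x4 number is also optimistic: assuming the usual Xeon E-2200 platform layout, PCH-attached devices, including the two M.2 slots, share the chipset's DMI 3.0 uplink to the CPU, which is roughly equivalent to a Gen3 x4 link.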

On the rear of the unit, there are two 2.5″ bays in each node. These are PCIe Gen3 x4 NVMe drive bays for two U.2 drives; alternatively, they can be used with SATA drives. Given what we saw in our Kioxia CD6-L PCIe Gen4 NVMe SSD Review Ending Data Center SATA, we think it is time to focus on U.2 storage for these bays.
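The case for U.2 in these bays is easy to see in raw link rates. This minimal Python sketch compares the interface ceiling of a Gen3 x4 bay with a 6Gb/s SATA bay, assuming 128b/130b encoding for PCIe Gen3 and 8b/10b encoding for SATA, and ignoring protocol overhead. These are interface limits, not drive benchmarks.

```python
# Interface ceilings per bay, per direction, before protocol overhead.


def pcie_gen3_mb_per_s(lanes: int) -> float:
    """PCIe Gen3: 8 GT/s per lane with 128b/130b encoding, converted to MB/s."""
    return lanes * 8.0 * (128 / 130) * 1000 / 8


def sata3_mb_per_s() -> float:
    """SATA 6Gb/s: 6000 Mb/s line rate with 8b/10b encoding, converted to MB/s."""
    return 6000 * 8 / 10 / 8


u2 = pcie_gen3_mb_per_s(4)
sata = sata3_mb_per_s()
print(f"U.2 bay (Gen3 x4): ~{u2:.0f} MB/s")
print(f"SATA bay (6Gb/s):  ~{sata:.0f} MB/s")
print(f"Headroom: ~{u2 / sata:.1f}x per bay")
```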

Underneath those drives, we have two RJ45 ports. These are Realtek-based 2.5GbE NICs. We are really excited that QNAP went the extra step with 2.5GbE instead of 1GbE NICs; it just feels a bit more modern. Under those NICs are four 10Gbps USB 3.2 Gen2 Type-A ports. That is a lot of connectivity, which is a nice touch as well. Had QNAP built upon a Xeon D-1500 series or Atom C3000 series platform, we would not have gotten these Gen2 ports.
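For a sense of what the 2.5GbE and USB 3.2 Gen2 upgrades mean in practice, the Python sketch below converts the nominal rates to MB/s. It assumes the Ethernet figures are MAC data rates (before framing and TCP/IP overhead) and that USB 3.2 Gen2 uses 10 Gb/s signaling with 128b/132b encoding; real-world transfers will be lower in both cases.

```python
# Nominal per-port rates, before framing and protocol overhead.


def ethernet_mb_per_s(gbit: float) -> float:
    """Nominal Ethernet data rate in MB/s (decimal)."""
    return gbit * 1000 / 8


def usb32_gen2_mb_per_s() -> float:
    """USB 3.2 Gen2: 10 Gb/s signaling with 128b/132b encoding, in MB/s."""
    return 10 * (128 / 132) * 1000 / 8


one_gbe = ethernet_mb_per_s(1)
two_five_gbe = ethernet_mb_per_s(2.5)
print(f"1GbE:   ~{one_gbe:.1f} MB/s per port")
print(f"2.5GbE: ~{two_five_gbe:.1f} MB/s per port ({two_five_gbe / one_gbe:.1f}x)")
print(f"USB 3.2 Gen2: ~{usb32_gen2_mb_per_s():.0f} MB/s per port")
```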

The other major feature on the rear of the chassis (aside from the power button / serial port) is dual 10GbE. Specifically, these are NVIDIA-Mellanox ConnectX-4 Lx SFP+ ports. We were a bit surprised to see that QNAP went with a higher-end NIC than many of the other 10GbE solutions out there.

Something that would have been perhaps more interesting is if QNAP had used the CX4 variant that supports 25GbE. As we saw in our Mellanox ConnectX-4 Lx Mini-Review, it is a popular 25GbE solution. Adding 25GbE would not fit with QNAP’s line of SFP+ 10GbE switches, but it would likely have been a relatively low-cost upgrade, and a feature that would put the solution onto more shortlists. After all, someone looking for a NAS with 25GbE will not find it here, even though the ConnectX-4 Lx series has a very popular 25GbE variant. We understand the decision, but it would have been a nice feature to have.
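One way to frame the 10GbE versus 25GbE trade-off is to compare the aggregate network rate with the link ceiling of a single rear U.2 bay. The Python sketch below does that, assuming nominal Ethernet data rates and the 8 GT/s, 128b/130b PCIe Gen3 math, with all protocol overhead ignored. It is an illustration of link budgets, not a benchmark of this system.

```python
# Dual-port network rate vs. one Gen3 x4 U.2 link, nominal, before overhead.


def ethernet_mb_per_s(gbit_per_port: float, ports: int = 2) -> float:
    """Aggregate nominal Ethernet rate in MB/s across `ports` ports."""
    return ports * gbit_per_port * 1000 / 8


def pcie_gen3_mb_per_s(lanes: int) -> float:
    """PCIe Gen3: 8 GT/s per lane with 128b/130b encoding, converted to MB/s."""
    return lanes * 8.0 * (128 / 130) * 1000 / 8


u2_link = pcie_gen3_mb_per_s(4)
for name, gbit in (("dual 10GbE", 10), ("dual 25GbE", 25)):
    net = ethernet_mb_per_s(gbit)
    print(f"{name}: ~{net:.0f} MB/s, about {net / u2_link:.1f}x one Gen3 x4 U.2 link")
```

Under these assumptions, both 10GbE ports together carry less than one Gen3 x4 link's worth of bandwidth, while dual 25GbE would clear it.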

Hardware is only part of the equation. Next, we are going to look at the QuTS hero ZFS-based software solution.

Thanks for the review.

IMHO QNAP has pretty good hardware, an OK price/performance ratio, and a GUI that is quite usable even for beginners.

BUT: Their software quality control has become absolutely horrendous in recent years.

I administered QNAP NASes for some people in my family until late last year, when I finally convinced them to invest in a DIY Linux server that I built and administer for them.

Serious vulnerabilities turned up more frequently than I have EVER experienced with any IT product whatsoever.

Just read the relevant IT news sites: no other company comes even close in how often it is mentioned for urgent security issues.

Add to that bugs and fatal updates...

It was already bad years ago, and it has gotten even worse. They push out new products and OS features and seem to have far too few people, or too few experienced people, dedicated to security and QA.

One just needs to look at the QNAP forums.

I would not trust them with any production setup. Not for a second. And the seeming ease of use of their web panel does not make up for the man-hours and lost sleep that those regular, serious security issues will take up over even 2-3 years.

I cannot speak for the new ZFS-based OS version, but it does not seem to differ too much from the crappy quality of the non-ZFS version.

This is quite sad, because IMHO if they could get quality and security right, they would easily be the best brand in the SOHO/home user NAS market.

But since a potential customer usually discovers the security problems only after buying the product, QNAP's (shortsighted) strategy seems to be pumping out new SKUs to generate cash flow instead of investing in long-term quality.

‘Although the PSUs are shared, we illuminated the A side node in Blue and the B side node in Green’ …just because you could. You don’t have to make up a reason to look cool :)

The fans are rated at 12V x 1.80A at full speed, so 21.6W x 6 pcs. That's approximately 130W of power. I'm not surprised that at 70% speed they will eat close to 100W, and 59 dBA is a disaster for office use.
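The full-speed figure in this comment is straightforward nameplate arithmetic: three fans per node across two nodes, each rated at 12V and 1.80A. The short Python sketch below reproduces only that upper bound. It does not model partial-speed draw, which depends on the specific fan's power curve, and the constant and function names are illustrative.

```python
# Nameplate (maximum) fan power for the whole chassis, using the figures quoted above.
FANS_PER_NODE = 3    # three fans per node, per the node overview
NODES = 2
FAN_VOLTS = 12.0
FAN_AMPS_MAX = 1.80  # rated current at full speed, as quoted


def nameplate_fan_watts(fans: int, volts: float, amps: float) -> float:
    """Upper bound: every fan drawing its full rated current at the same time."""
    return fans * volts * amps


per_fan = nameplate_fan_watts(1, FAN_VOLTS, FAN_AMPS_MAX)
total = nameplate_fan_watts(FANS_PER_NODE * NODES, FAN_VOLTS, FAN_AMPS_MAX)
print(f"Per fan: {per_fan:.1f} W, all {FANS_PER_NODE * NODES} fans: {total:.1f} W")
```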

Interesting, but either of two things would make this much more useful:

A) Get us some dual-ported SAS drives and let us run it active/active, or at minimum fail over at the backplane end (even if it involves some downtime).

B) A cluster of these using vSAN or S2D-type storage (more so than Gluster, even) so that you can have full node redundancy. Two of these in 6U that could survive a full node failure would be a good thing to have.

Otherwise, sure, cool, but besides saving 1U of rack space and a few nice features, it seems like a very small benefit over two 2U NAS units that cost less and can handle more drives. And forget NAS, this is at the price point where I could purchase two 2U servers with 8 LFF drive bays!