One of the coolest demos at Intel Innovation 2022 was the QCT Liquid Cooled Rack demo. We covered this briefly in our Tour of the QCT Intel Sapphire Rapids System Overload Booth, but we wanted to go into more detail in a separate piece.

QCT Liquid Cooled Rack Intel Sapphire Rapids Bake-Off

Here is a look at the rack that we saw on the Intel Innovation exhibition floor.

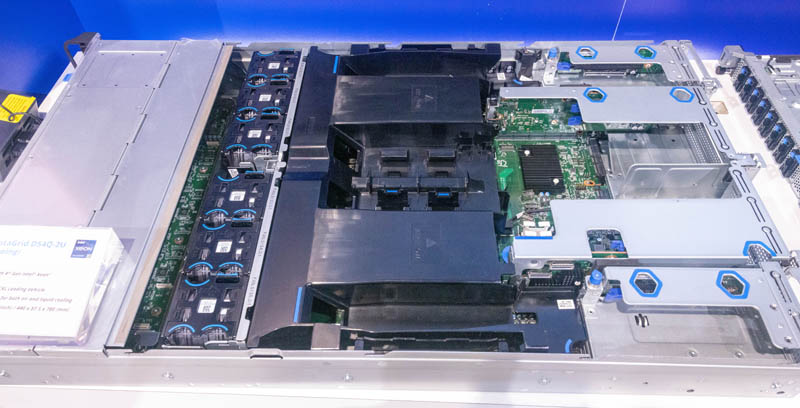

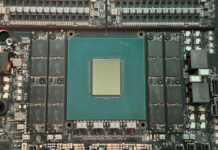

In the middle is perhaps the most important part: a liquid-cooled and an air-cooled QCT D54Q-2U server stacked in the rack.

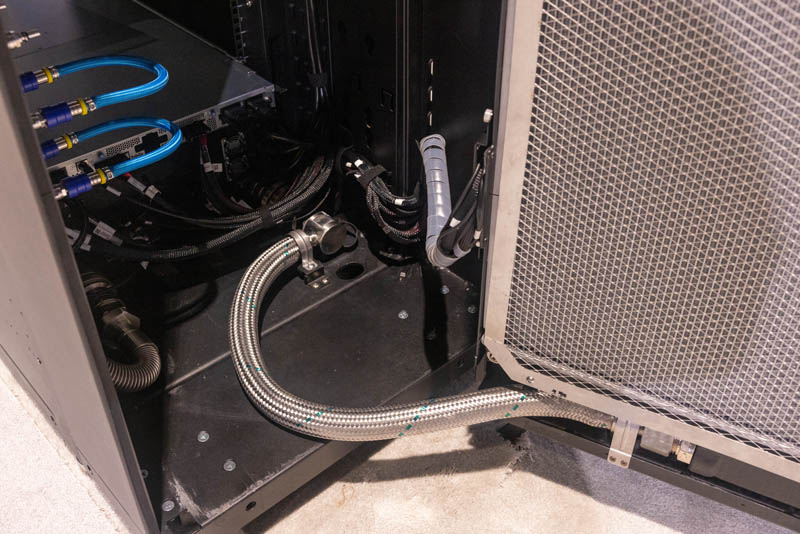

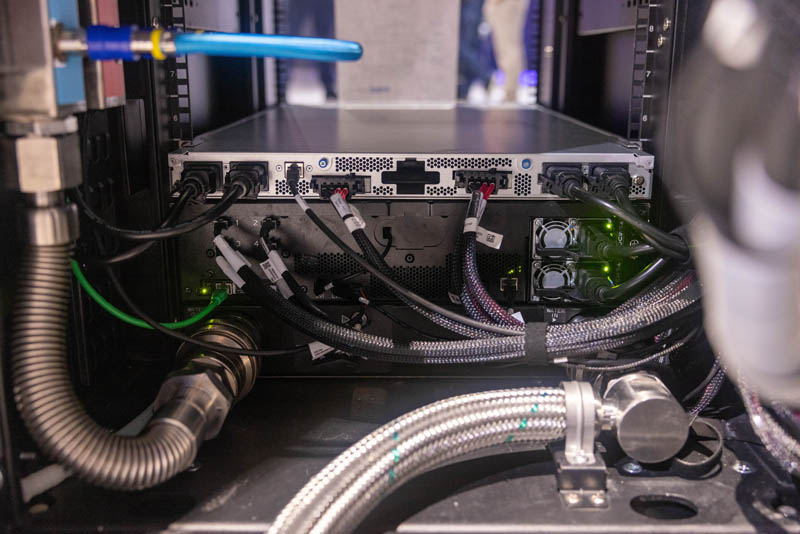

Below the servers, near the bottom of the rack, is a redundant 3kW PSU that drives all of the cooling. That may seem like a lot, but it is less than the fans in a full rack of servers would draw. At the very bottom is the CDU (coolant distribution unit), which has a filter (left) and two pumps (center and right). These are hot-swappable designs.

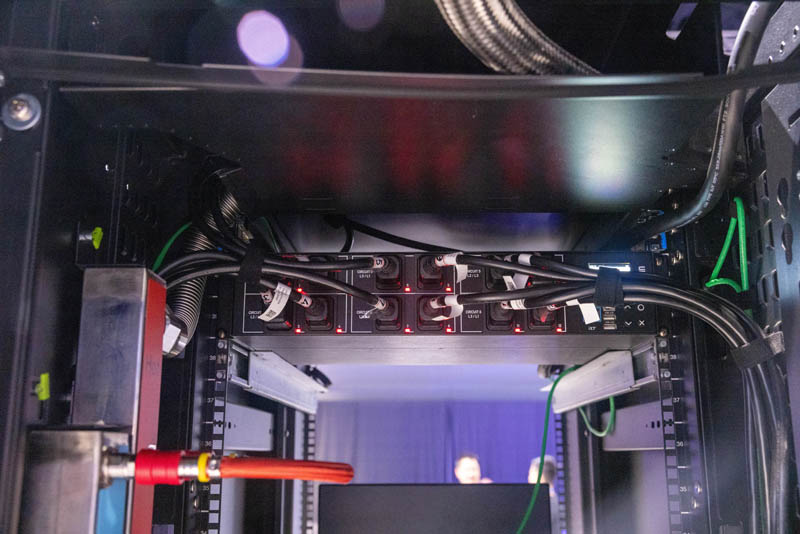

Here is the rear of the rack. It is a massive wall of sixteen fans.

These fans are also swappable via cages that disengage from the rear. While each of these fans uses more power than a typical server chassis fan, together they use less power than smaller chassis fans to deliver equivalent cooling. That is perhaps the biggest driver of the efficiency improvement in this rack.
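For readers who want a rough intuition for why a wall of large fans moving air slowly across the whole door can beat many small, fast chassis fans, the idealized relationship between fan power, airflow, and pressure drop is a useful first-order sketch. It assumes turbulent flow through the heat exchanger with a constant loss coefficient $K$, air density $\rho$, airflow $Q$, effective flow area $A$, and fan efficiency $\eta$; these symbols are introduced here for illustration, and this is not QCT's own analysis.

```latex
% Fan (air) power needed to move airflow Q against a pressure drop \Delta p
P = \frac{Q\,\Delta p}{\eta}

% Turbulent pressure drop with face velocity v = Q / A
\Delta p \approx K\,\frac{\rho}{2}\,v^{2} = K\,\frac{\rho}{2}\,\frac{Q^{2}}{A^{2}}

% Substituting:
P \approx \frac{K\rho}{2\eta}\,\frac{Q^{3}}{A^{2}}
```

Under these assumptions, doubling the effective flow area $A$ at the same airflow $Q$ cuts ideal fan power by a factor of four, and larger fans also tend to run at a higher $\eta$. Real heat exchangers and fans deviate from this, so treat it as a direction rather than a number.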

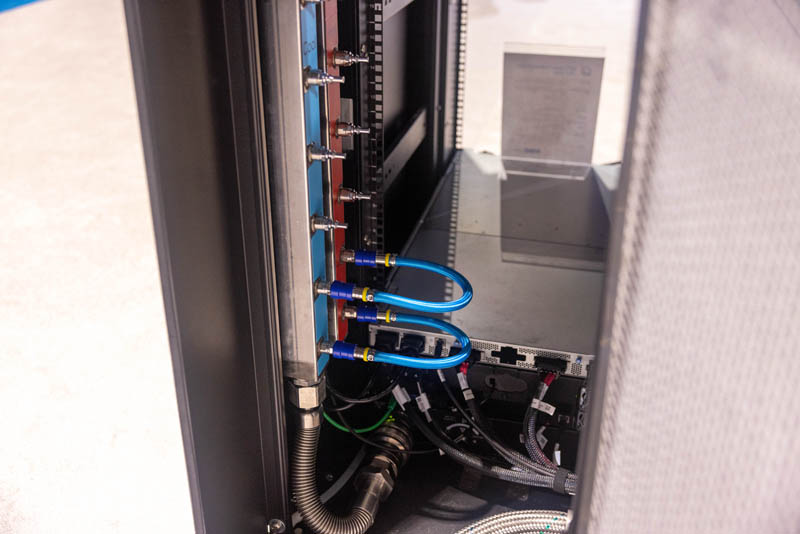

Opening the door, we see a full rear-door heat exchanger (RDHx) setup.

Because the door swings open, it requires flexible hoses at the top and bottom of the rack.

Here is the bottom of the heat exchanger.

At the top of the rack is a small PDU, likely there for demo purposes. The entire rack is also monitored via BMCs, so one can see the performance of not just the servers but the entire liquid cooling solution, down to the CDU and pumps at the bottom of the rack.

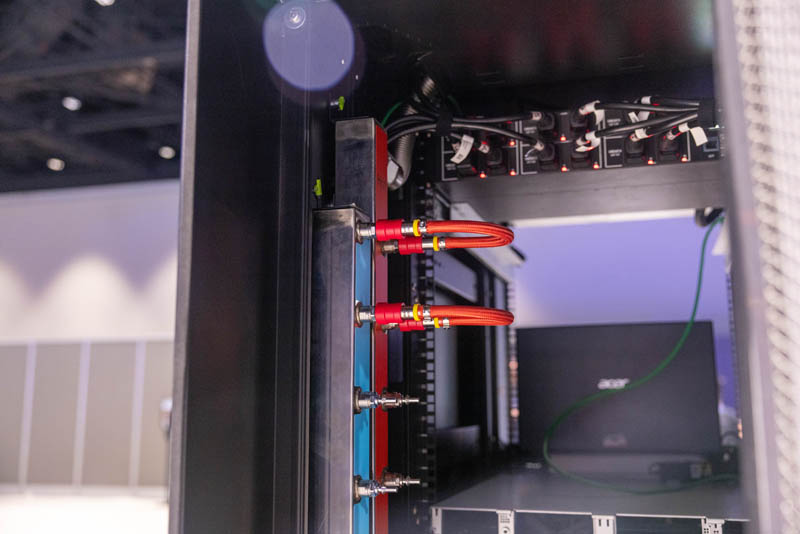

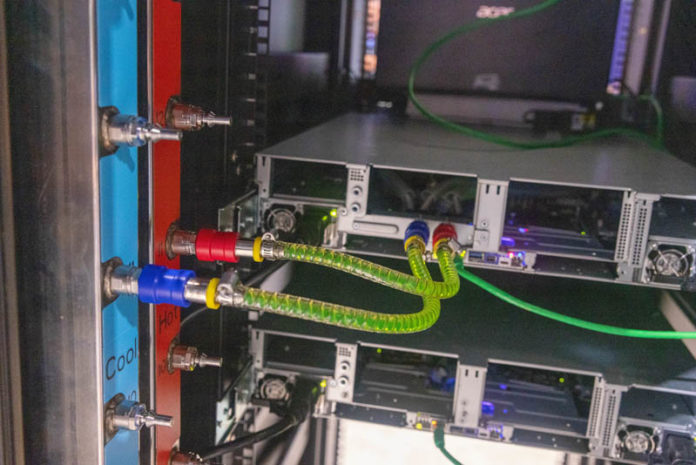

On the side of the rack is a rack manifold. Since this rack holds only two servers, we see additional hoses between the hot and cold sides of the manifold.

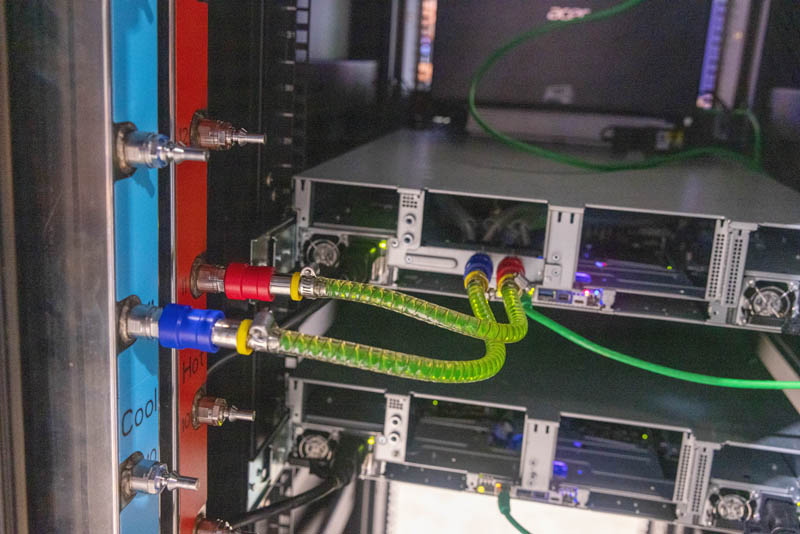

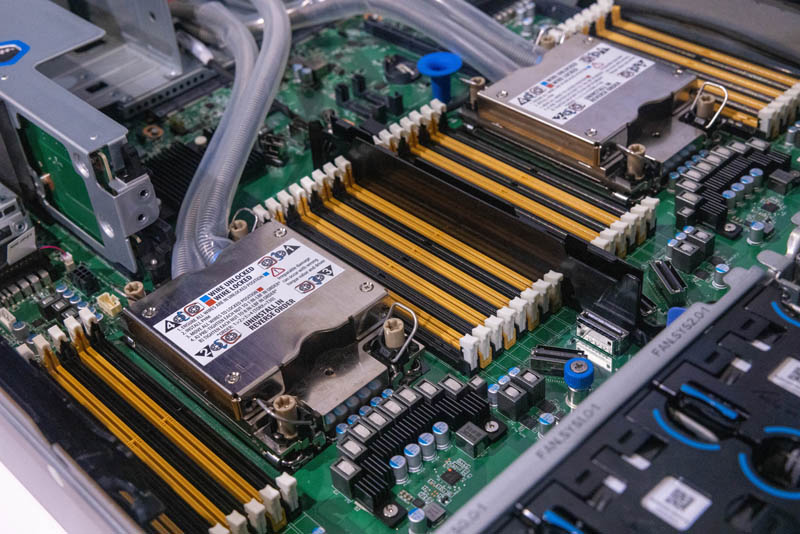

This is the liquid-cooled server connected to the CDU.

Here is a look at the liquid-cooled versus the air-cooled server. One can see that a rear expansion slot is used for the liquid cooling headers.

We covered the liquid-cooled server in the QCT booth tour.

Here is the air-cooled unit. In both servers, QCT uses fans to cool components such as storage. In the liquid-cooled server, moving hundreds of watts of CPU heat out of the airflow means the chassis fans can spin much more slowly and therefore more efficiently.
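To give a feel for why slower fans matter so much, here is a minimal sketch built on two textbook assumptions: the airflow a chassis needs scales linearly with the heat the air must carry (at a fixed allowed air temperature rise), and for the same fan in the same chassis, power scales with the cube of fan speed (the fan affinity laws). The function name and the 1,000 W / 700 W split are hypothetical illustration values, not QCT measurements.

```python
"""Rough sketch: chassis fan power when CPU heat leaves via liquid instead of air.

Assumptions (hypothetical, not QCT data):
- Required airflow scales linearly with the heat the air must carry,
  at a fixed allowed air temperature rise.
- Same fan, same chassis (fan affinity laws): airflow ~ speed, power ~ speed**3.
"""


def relative_fan_power(total_heat_w: float, heat_removed_by_liquid_w: float) -> float:
    """Return fan power with liquid cooling as a fraction of the all-air case."""
    if not 0 <= heat_removed_by_liquid_w <= total_heat_w:
        raise ValueError("liquid-removed heat must be between 0 and the total heat")
    # Fraction of the original heat (and therefore airflow) that air still handles.
    airflow_ratio = (total_heat_w - heat_removed_by_liquid_w) / total_heat_w
    # Fan speed tracks airflow, and power tracks the cube of speed.
    return airflow_ratio ** 3


if __name__ == "__main__":
    # Hypothetical 2U server: 1,000 W total, 700 W of it from the two CPUs.
    ratio = relative_fan_power(1000.0, 700.0)
    print(f"Fan power vs. air-only: {ratio:.1%}")
```

With these inputs the idealized model prints 2.7%. Real savings are smaller because fans have minimum speeds and memory, VRMs, and storage still need airflow, but the cube law is why even a partial reduction in air-side heat has an outsized effect on fan power.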

Below the servers are more hot-to-cold manifold hoses, which help manage the pressure since only a single server is connected.

At the bottom, we can see the power supply for the cooling, as well as the rear of the CDU.

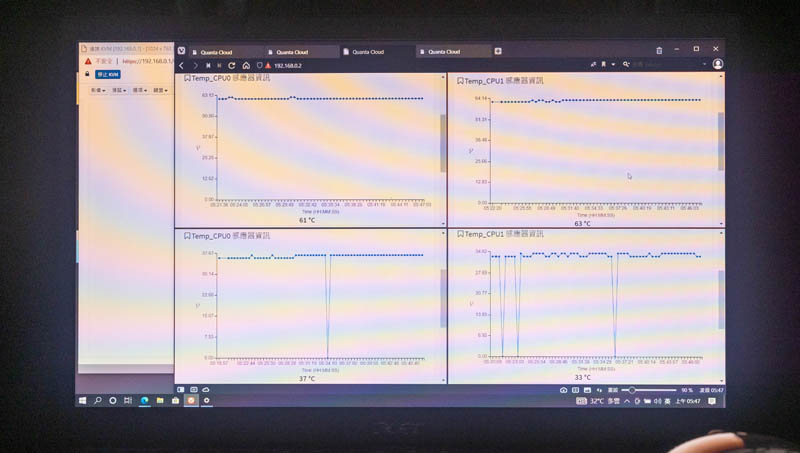

The impact was really interesting. At the top, one can see the temperatures of the air-cooled Sapphire Rapids Xeon CPUs at 61-63C. At the bottom are the two liquid-cooled CPUs in the 33-37C range. QCT says that it usually sees around a 30C difference, and that is supported at least by the CPU1 temperatures.

Much of the ROI comes from lower cooling costs. Another benefit is that, at lower temperatures, the CPUs can hold turbo frequencies longer in liquid-cooled systems. That should allow more performance in many scenarios.

STH asked QCT a few questions about this solution.

STH: Are there certain industries or customer segments that QCT sees adopting the liquid cooling rack solution faster than others?

QCT: We are seeing customers of all classes across different market segments interested in adopting this kind of solution, either to unleash full compute power without being limited by thermal constraints or to cool their DC more efficiently.

STH: What types of systems are typically being used? (standard servers, dense CPU compute, GPU compute, other?)

QCT: 1U scalable, multi-node, and GPU servers are typically the first to adopt liquid cooling solutions. QCT will support liquid cooling solutions on 1U, 2U, and GPU server configurations, among others.

STH: What is the difference in up-front costs for the liquid-cooled rack? What are the typical payback periods for QCT customers?

QCT: Customers pay higher CapEx upfront but enjoy better OpEx with a liquid cooling solution. They enjoy better TCO over time, although how long that takes depends heavily on each DC's situation, such as its power costs. We work with customers on this TCO.
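The CapEx-versus-OpEx tradeoff QCT describes is often summarized as a simple payback period: the extra up-front cost divided by the yearly operating savings. The sketch below shows that arithmetic under clearly hypothetical inputs (a $20,000 CapEx premium, 5 kW less cooling power, $0.15/kWh, 24x7 operation); the function names are illustrative, and a real TCO study would also account for discounting, maintenance, and density gains.

```python
"""Simple payback sketch for a liquid-cooled rack versus an air-cooled one.

All inputs are hypothetical placeholders; real TCO depends heavily on each
data center, especially local power costs.
"""

HOURS_PER_YEAR = 24 * 365


def annual_energy_savings(power_saved_kw: float, price_per_kwh: float) -> float:
    """Yearly cost saved by drawing power_saved_kw less, running 24x7."""
    return power_saved_kw * HOURS_PER_YEAR * price_per_kwh


def simple_payback_years(capex_premium: float, annual_savings: float) -> float:
    """Years until the extra up-front cost is recovered (no discounting)."""
    if annual_savings <= 0:
        raise ValueError("annual savings must be positive to pay back")
    return capex_premium / annual_savings


if __name__ == "__main__":
    # Hypothetical: $20,000 extra CapEx, 5 kW less cooling power, $0.15/kWh.
    savings = annual_energy_savings(5.0, 0.15)
    print(f"Annual savings: ${savings:,.0f}")
    print(f"Simple payback: {simple_payback_years(20_000, savings):.1f} years")
```

Because savings scale linearly with the power price, doubling the price per kWh in this model halves the payback period, which is one reason the answer "highly depends" on each site.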

STH: How much power goes to air cooling servers these days as a percentage of system power?

QCT: This could vary quite a lot. In prior generations, we hoped to keep cooling power below 5% of total system power. Nowadays, this design expectation is unreasonable for most designs because CPU and GPU power is so high. It could easily reach a double-digit percentage nowadays, but actual numbers vary.

STH: Are there any important installation considerations for the L2A solution, such as altitude, rack depth/weight, or others?

QCT: The QCT L2A rack is 19” EIA and 21” OCP compliant, and the extra depth needed for the RDHx (rear door) is 220mm, which is 80mm thinner than the ORV3 spec. The only factor to keep in mind is the weight tolerance of the floor: the QCT liquid-to-air rack weighs around 500kg (without servers). Besides that, the rack is L11 flexible, requiring no additional data center infrastructure, and can be placed alongside a traditional air-cooled server rack of any brand.

Final Words

At Intel Innovation 2022, we saw QCT’s liquid cooling rack solution and a cool demo. The demo showed around a 30C difference in CPU temperatures by going liquid cooled. Aside from the lower temperatures (and therefore better turbo performance), the lower cooling costs are important. We also hope this helps our readers understand that liquid cooling options exist even without adding facility water to each rack. While the demo used just two 2U servers, we hope it helped explain the liquid cooling concept.
