PNY GeForce 2080 Ti Blower Power Tests

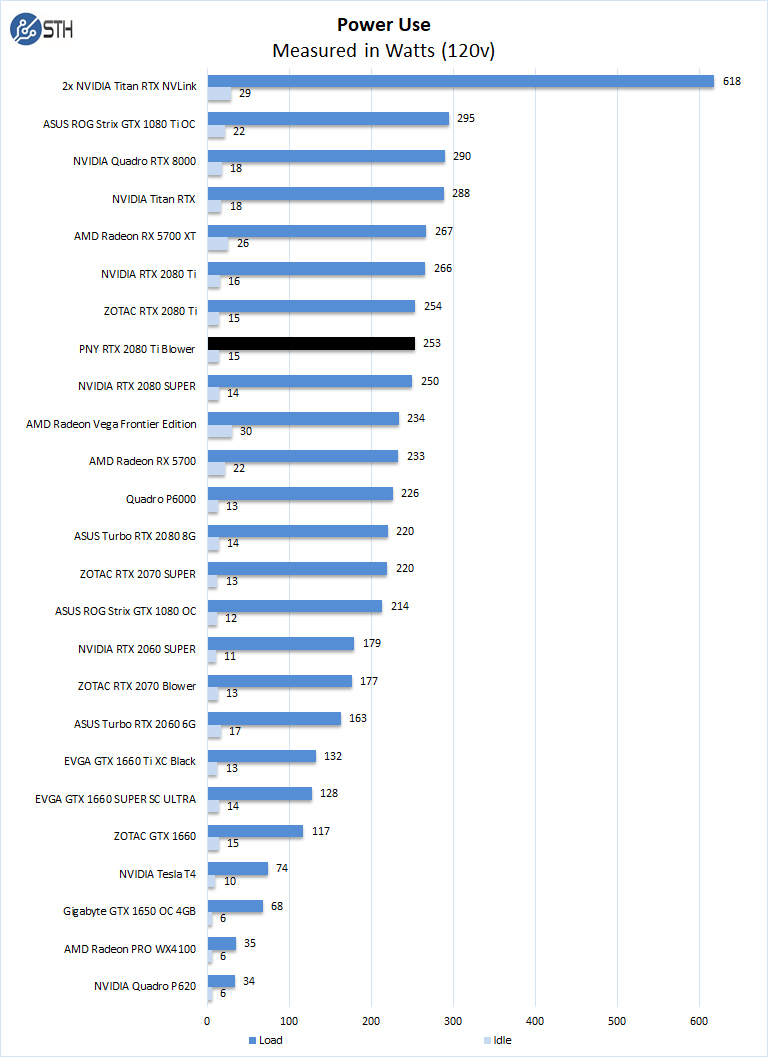

For our power testing, we used AIDA64 to stress the PNY RTX 2080 Ti Blower and HWiNFO to monitor power use and temperatures.

Once the stress test has ramped up the PNY RTX 2080 Ti Blower, it tops out at 253W under full load and draws 15W at idle. This is the lowest of our three cards, and that is part of the point. With eight of these in a system, the GPUs alone would use just over 2kW. For deep learning training, these power consumption figures add up when there are 80-100 GPUs per rack and one is paying for power and cooling.
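To make the scaling concrete, here is a minimal sketch of the arithmetic using the 253W load figure measured above. The function name `gpu_power_kw` is ours for illustration, and the calculation covers the GPUs only, assuming every card runs at its measured peak and ignoring CPUs, fans, and power supply losses.

```python
# Measured on the PNY RTX 2080 Ti Blower in this review
LOAD_WATTS = 253
IDLE_WATTS = 15


def gpu_power_kw(gpu_count: int, watts_per_gpu: float = LOAD_WATTS) -> float:
    """Total GPU power draw in kW (GPUs only; no CPUs, fans, or PSU losses)."""
    return gpu_count * watts_per_gpu / 1000


# One 8-GPU system, then the 80-100 GPUs per rack range mentioned above
for count in (8, 80, 100):
    print(f"{count:>3} GPUs: {gpu_power_kw(count):.2f} kW under load")
```

Under these assumptions, a rack of 80-100 cards lands at roughly 20-25kW for the GPUs alone, before cooling overhead is counted.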

Cooling Performance

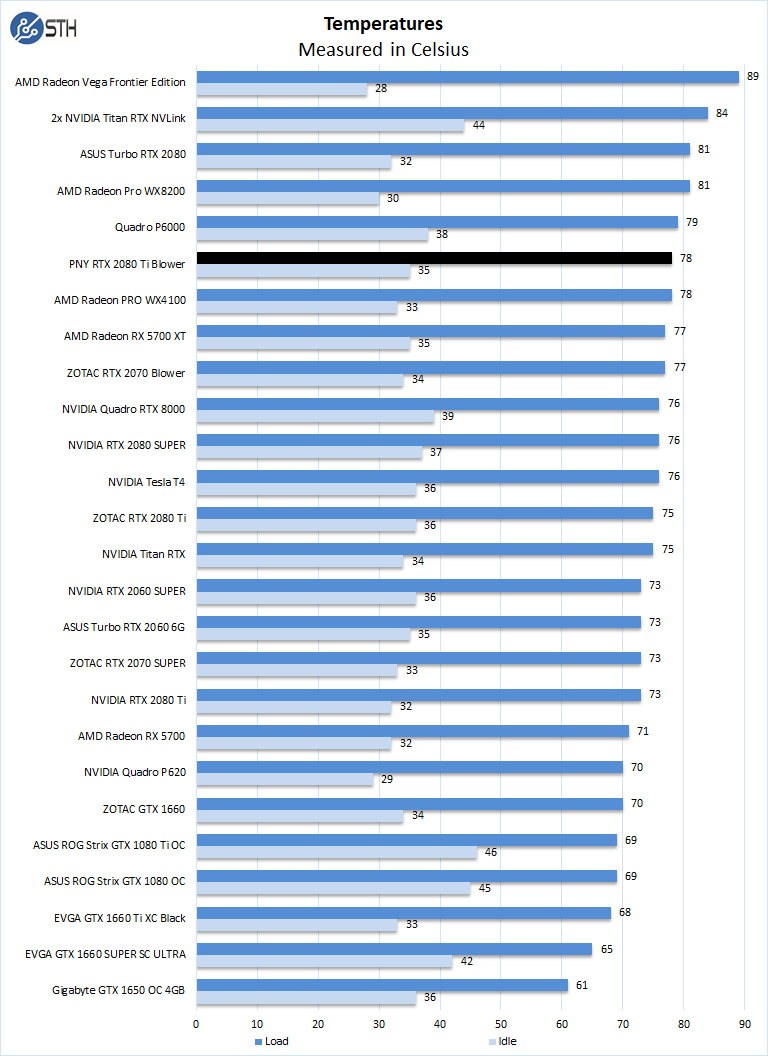

A key reason that we started this series was to answer the cooling question. Blower-style coolers have different capabilities than the large dual- and triple-fan coolers found on some gaming cards.

Temperatures for the PNY RTX 2080 Ti Blower reached 78C under full load. In this case, the highest temperatures we saw came while running OctaneRender benchmarks. Idle temperatures were reasonable at 35C.

This shows why PNY needed to use a small clock speed decrement. The GeForce RTX 2080 Ti is at the edge of what is easy to cool using an active blower-style cooler. For parts above 250-300W, NVIDIA moved to SXM2, SXM3, and soon OAM form factors for dense GPU compute.

Final Words

We found the PNY RTX 2080 Ti Blower to be extremely capable in our deep learning benchmarks, with no real difference between it and the dual-fan GPU units we tested previously. This is good news for users who want to install the PNY GeForce RTX 2080 Ti Blower into a server or workstation in a dense configuration such as 4, 8, or 10 GPUs per 4U. Through our testing, we found the PNY GeForce RTX 2080 Ti Blower to be competitive with other solutions while being designed for density. That is a great result.

You must consider whether a PNY GeForce RTX 2080 Ti Blower will fit your workloads. If you have a workstation with 1-2 GPUs, it is probably not the best option, and dual-fan coolers can make sense. That is the point: these cards are designed for dense configurations.

I wouldn’t touch anything from PNY with a 10 foot pole. Everything I’ve bought from them has died prematurely.

We rack 8 of the Supermicro 10 GPU servers adding about 3 racks a quarter. That isn’t much, but it’s 240 + 4 spare GPUs per quarter. We’ve been doing them since the 1080 Ti days. Our server reseller gets us good deals on other brands but says PNY doesn’t want the business as much so we get other brands.

I’m gonna be honest here. That cooler looks like they’re just using something cheap. I’d like to see PNY actually design a nice cooler like the old NVIDIA FE cards had with vapor chamber and a metal housing. Maybe if they’d do that they could keep clock speeds higher or even push them and have a better solution for dense compute like you’re saying.

If they’re not doing that, then it’s too easy to buy Zotac for GPU servers and workstations.

The Octane benchmark is probably using a scene that doesn’t fit in 11GB of VRAM, hence the large difference between high VRAM cards. It doesn’t say much about the card’s actual performance since it is heavily bottlenecked by out-of-core memory access.

@IndustrialAIAdmin What brands and models do you run in such an environment?