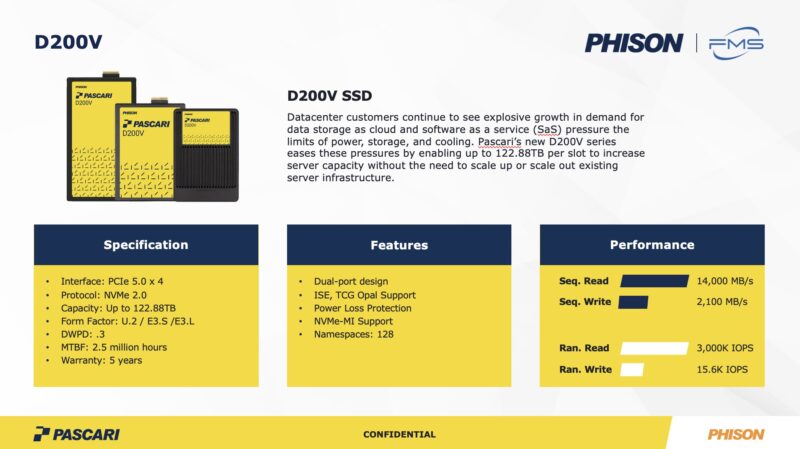

Phison is joining the 122.88TB NVMe SSD party with something decidedly different. The company is offering a PCIe Gen5 NVMe SSD variant in the Phison Pascari D200V line. High-capacity NVMe SSDs have become a big game-changer in the AI space as they can drastically cut footprint and power over hard drive solutions.

Phison Pascari D200V PCIe Gen5 NVMe SSD with 122.88TB of Capacity Announced

The drives support sequential reads of 14000MB/s and writes of 2100MB/s. Typically, this tier of storage is very read-heavy. In 2016, STH was in the Phison booth at Flash Memory Summit 2016 with a 24x 960GB solution (23.04TB) that delivered 1M IOPS. Now, a single drive offers 122.88TB of capacity and 3 million random read IOPS.

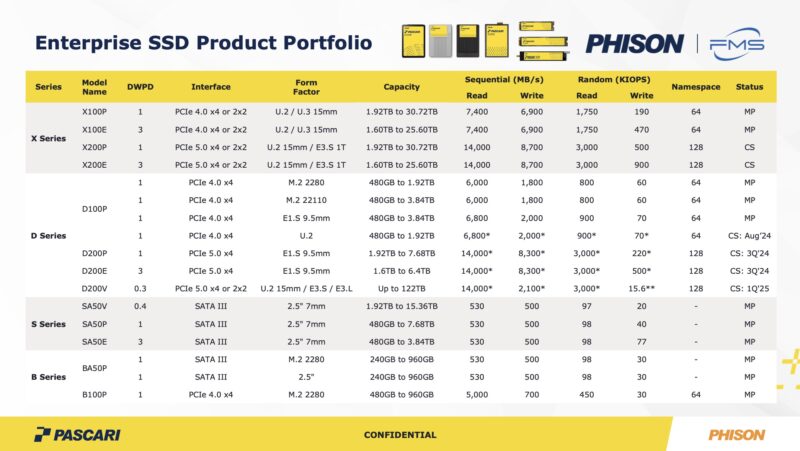

The larger capacities are only available in U.2 or E3.L, with the U.2 version slated for the end of 2024, while the E3.L 122.88TB version is slated for July 2025. The E3.S will top out at 30.72TB.

Phison Pascari also has a number of other D200 drives coming, such as the D200P and D200E, but those will be lower-capacity drives.

Notable in this slide is that the D200V is slated for Q1 2025.

Final Words

We titled our Solidigm D5-P5336 61.44TB SSD Review "Hard Drives Lost." Our idea was that SSDs provided much better power, space, and reliability advantages to the point that hard drives would become obsolete. Still, to this day, we have heard estimates of 90% of data being stored on hard drives. What changed is AI clusters. Modern large AI clusters tend to have large storage arrays located nearby. Swapping from hard drives to high-capacity NVMe SSDs not only has a performance benefit but also saves a lot of power. We have seen figures of tens of kW in savings for even relatively small-scale AI storage in the 10PB range just by using 61.44TB NVMe SSDs. Since power is a big constraint, high-capacity flash has become extremely popular, and we are seeing many vendors announce or release 122.88TB drives to capture that market demand.

By my understanding flash is still more expensive than spinning disks. This could explain why disks are used in many cases.

I find it interesting what technology should be used for offline data storage and archival. While hundreds of hard disks on a shelf sound like a bad idea, multi-level flash is likely even worse. Cell leakage in a drive that remains unpowered over a couple years is almost guaranteed to result in complete loss of data.

Is there a standard way to power on a large SSD that is not connected to any computer so that the firmware can run its housekeeping? Mounting each drive every month seems to open up the chances of a malware attack, while multi-zone cloud even lacks resilience toward non-hostile but systematic CrowdStrike kinds of errors.

Please stop spreading this 3M IOPS figure. Those IOPS don’t exist in real-life scenarios.

15K write iops made this story a joke.

As soon as you start writing something – say 10K IOPS – reads will drop from 3M to 100K.

@Eric Olson: If longevity bothers you, you can add redundant data using something like Parchive or similar. This will reduce your usable space but allow the data to survive a larger amount of corruption. In theory if you add enough redundancy to shrink a drive’s capacity down to what it would be if it were SLC, then you’ll also get the same amount of data longevity as the SLC drive would have.

Also it’s a myth that a flash drive needs to be powered to “charge” the cells. The cells are only charged when data is written, so to keep flash data current, the entire disk needs to be wiped with TRIM and rewritten in full, typically every 2-3 years. Rewriting the data any more frequently than that will just wear out the flash cells sooner. The more it wears out, the less time you can leave between full disk rewrites. This process could easily be done in a system that is not connected to any networks if that bothers you, but it shouldn’t bother you because you have multiple copies of your important data so if one of the copies fails it’s no big deal.

Magnetic drives are really no better or worse for long term storage. Some drives last for years, others have the bearings seize within a year of sitting on a shelf. Really at this point tape + spare drives is the only proven medium for long term storage, but you still can’t beat copying your data onto new media every few years.

Malvinious wrote, “The cells are only charged when data is written, so to keep flash data current, the entire disk needs to be wiped with TRIM and rewritten in full, typically every 2-3 years.”

Thanks for the reply.

If the firmware doesn’t automatically check and fix charge levels when powered on, would reading the data by performing a scrub be enough for the firmware to trigger a rewrite if charge levels have drifted?

If so, do all SSDs check the charge levels while reading and if necessary recharge the cells? In particular, I wonder if the large drive reviewed here checks automatically, only during read or needs an explicit rewrite to retain the data when powered on.

@Eric Olson: You’d probably have to find out from the drive manufacturers as I imagine this sort of scrub operation would vary greatly between drives. I don’t imagine cheap drives would bother with it, but enterprise drives may.

I’m no expert but I believe it works in simple terms by reading the voltage in the cell and using that to assign it to a specific pattern of bits. It’s plausible that if the voltage sits too close to the edge of one of the permitted bands, the drive recognises this and reflashes it, but again there is no standard for this so it would be highly dependent on what the firmware does.

It’s possible some drive firmware does a background scrub like this and reflashes when there is minimal disk activity, so in those cases leaving the drive powered on for a few days a year could be enough to reflash any data at risk of being lost, but you’d want to know for certain the firmware actually does this otherwise you’d be wasting your time leaving the drive powered.

If you were using the drive for off-line storage, and you only powered it up once a year, then you could reasonably assume all the cells need topping up and rewrite the drive without caring what the firmware does, so that would be the safest option in my opinion.

I have seen ridiculous prices on SSDs in the last three years:

Kingston Enterprise U2 (NVMe) 7.68 TB drives were much less expensive on Amazon Germany than their SATA counterparts (DC500M or DC600M).

SAS SSDs from other vendors were incredibly expensive even for just 2 TB.

My explanation is: server vendors (like DELL) are still applying crazy prices for NVMe servers and SSDs, and that prevents buyers from moving to NVMe. Some CTOs are also stuck in the past; there is no advantage to staying on SATA or SAS for databases or high I/O VMs.

My policy is to buy from DELL the cheapest server with a fast but affordable CPU (not too many cores), with only 16 GB ram and a NVMe backplane populated with a single 960 GB SSD.

After delivery, I buy 768 GB ram from third-party vendors and U2 / U3 SSDs from Micron (Kingston has ceased their U2 unfortunately, without replacement).

@Laurent: The reason why Dell and others are so expensive is because if a part fails you typically get it replaced within four hours, or at the very least the next business day. Keeping enough spare parts on hand for long enough to do that isn’t cheap, so that’s why they charge a premium. Not to mention all the testing they do to ensure the parts work well together, so really, you’re paying for peace of mind.

If your workloads don’t need that kind of reliability then yes, buying aftermarket parts will save you a lot of money, if you are ok with a bit more potential downtime and the occasional compatibility/stability issue. Google used to be famous for doing this, buying lots of consumer grade hardware and just engineering their software to work around the inevitable hardware failures without any interruptions.

Just depends on what your use case is.

It’s cheaper nowadays to just buy extra SSDs than to get a Dell service contract. You’ll leave extras in the DC. Even if you’re paying $100 to $200 for remote hands for the quick job, it still costs so much less.

is my math right here?

14000MB/s = 14GB/s

122TB=122000GB

122000GB/14GB/s = 8714.286 seconds to write 122TB

8714.286/60 = 145.238 hours to write 122TB

145.238 / 24 = 6.052 days to write 122TB

122TB * .3DWPD = 36.6TB/day

122TB / 6.052 days = 20.165TB/day written at max sequential drive speed.

With that said…is endurance really a concern if this drive were used on a constantly powered data collection system? For example, the disk gets swapped out from an edge device every 6 days, and shipped to a datacenter for analysis and processing of the data stored on that disk.

for the sake of the math, assume it’s writing sequentially those 6 days.

is it even possible to wear this 122TB drive out in 5 years?
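One way to check the arithmetic above is a short Python sketch. Note that 8714.286 seconds divided by 60 gives minutes, not hours, so the fill time at 14000MB/s comes out to about 2.4 hours rather than 6 days. Also, 14000MB/s is the sequential read figure quoted in the article; the quoted sequential write figure is 2100MB/s. The 0.3 DWPD rating and the 5-year period are taken from the comment as assumptions, not from a published spec, and the function names are made up for illustration.

```python
SECONDS_PER_DAY = 86_400
MB_PER_TB = 1_000_000  # decimal units, as drive vendors use


def fill_time_hours(capacity_tb: float, rate_mb_s: float) -> float:
    """Hours needed to transfer the full capacity once at a constant rate."""
    seconds = capacity_tb * MB_PER_TB / rate_mb_s
    return seconds / 3600


def max_tb_per_day(rate_mb_s: float) -> float:
    """TB moved in 24 hours at a constant rate."""
    return rate_mb_s * SECONDS_PER_DAY / MB_PER_TB


def days_to_rated_endurance(capacity_tb: float, dwpd: float,
                            years: float, rate_mb_s: float) -> float:
    """Days of nonstop writing needed to use up the rated write budget.

    The budget is capacity * DWPD * 365 * years (an assumed rating).
    """
    rated_tbw = capacity_tb * dwpd * 365 * years
    return rated_tbw / max_tb_per_day(rate_mb_s)


if __name__ == "__main__":
    capacity_tb = 122.88
    read_mb_s = 14_000   # sequential read figure from the article
    write_mb_s = 2_100   # sequential write figure from the article
    dwpd = 0.3           # assumption taken from the comment
    years = 5            # assumption taken from the comment

    print(f"Fill time at read speed:  {fill_time_hours(capacity_tb, read_mb_s):.2f} h")
    print(f"Fill time at write speed: {fill_time_hours(capacity_tb, write_mb_s):.2f} h")
    print(f"Max written per day:      {max_tb_per_day(write_mb_s):.2f} TB")
    print(f"DWPD budget per day:      {capacity_tb * dwpd:.2f} TB")
    print(f"Days to rated endurance:  "
          f"{days_to_rated_endurance(capacity_tb, dwpd, years, write_mb_s):.0f}")
```

Under these assumptions, a full drive write takes roughly 16 hours at 2100MB/s, and nonstop sequential writing would move about 181TB per day against a budget of about 37TB per day at 0.3 DWPD. If that rating is right, continuous writing at full speed would use up a 5-year write budget in roughly a year, so endurance could matter for a drive that is written around the clock.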