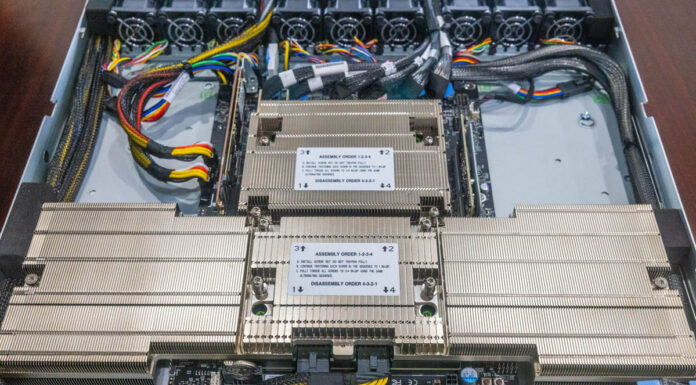

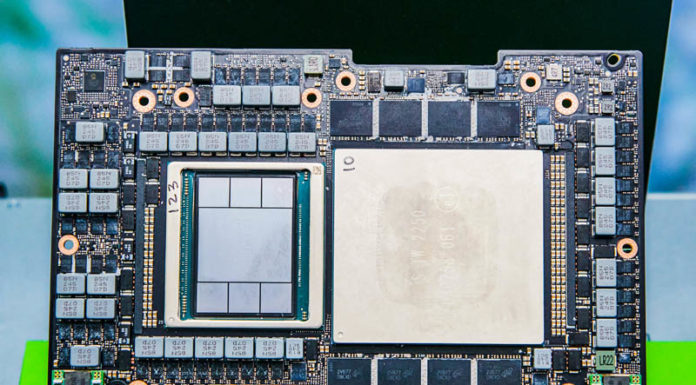

We review the Supermicro ARS-111GL-NHR, a 1U air-cooled NVIDIA GH200 server, and show why Grace Hopper is super cool

The Intel Xeon 6 R1S offering will include a Granite Rapids P-core single-socket platform with 136 lanes of PCIe Gen5

In the final review of our long-running series, we look at the keepLink KP9000-9XH-X switch with eight 2.5GbE ports and one SFP+ 10G port

The NVIDIA GH200, or "Grace Hopper," is far from a single product. We have a quick guide so that when someone says "GH200," you know what to look for

Micron HBM3E 12-High will bring 36GB packages to higher-capacity AI accelerators in the coming months as it starts to ship

The ASUS NUC 14 Pro shows a refreshing level of mini PC refinement, with even small details considered in its design

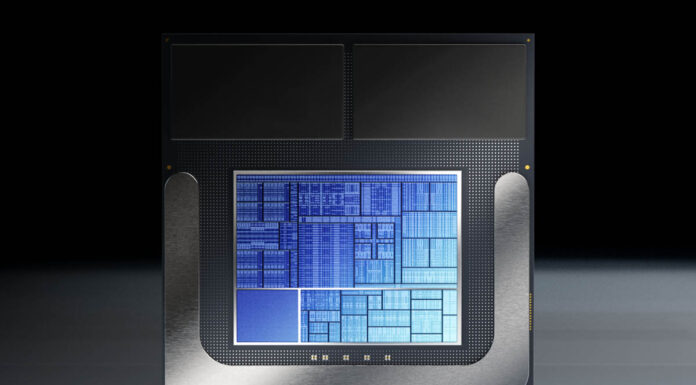

With the Intel Core Ultra 200V series, Intel is making radical changes. The new chips drop Hyper-Threading, get a new iGPU, and add onboard memory

At FMS 2024, we saw inside the massive 128TB-class (122.88TB) Samsung BM1743 NVMe SSD for dense all-flash storage applications

The YuanLey YS25-0801P is an 8-port 2.5GbE and 1-port SFP+ 10G switch that offers PoE/PoE+ capabilities at a low cost