Today marks the end of a 27-plus-year journey for what was one of the best technology sites the world has ever seen

While it may seem like a small upgrade at first, the Minisforum UM890 Pro is an overhauled and much better mini PC with optional OCuLink

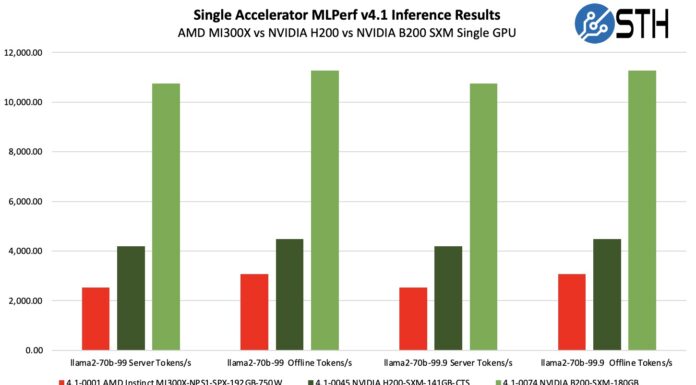

In MLPerf Inference v4.1, the upcoming NVIDIA B200 walloped the current AMD MI300X, but Untether AI submitted some excellent efficiency runs

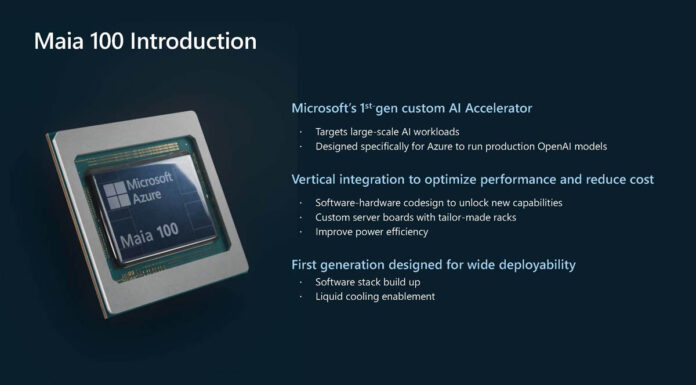

We learned more about the Microsoft Maia 100, the company's AI accelerator for running OpenAI models at lower cost

At Hot Chips 2024, Ampere went into the AmpereOne microarchitecture details and discussed how it designed and built its custom Arm processor

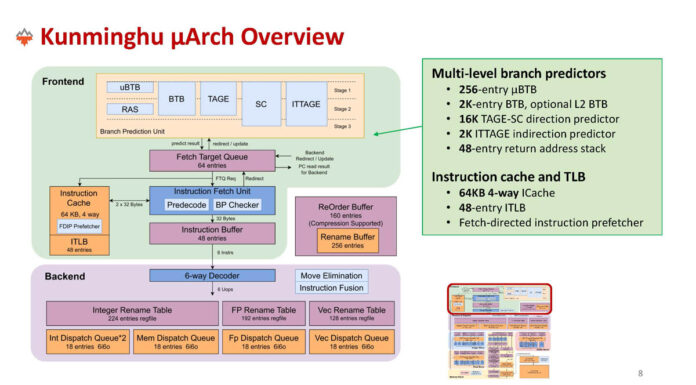

The XiangShan RISC-V CPU project from Chinese universities is designing and delivering cores targeting Arm Neoverse N2 and Cortex-A76 designs

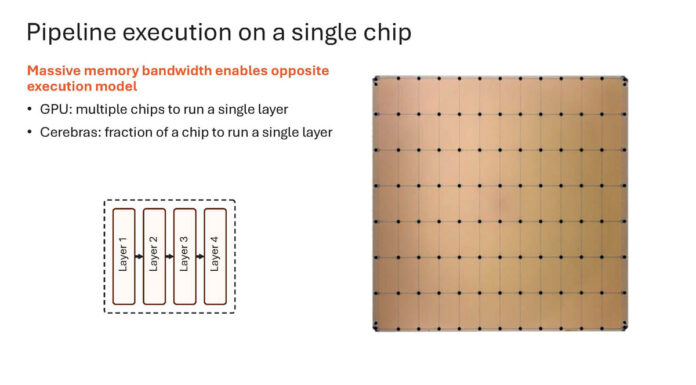

Cerebras enters AI inference and uses its giant chip to run circles around NVIDIA HGX H100 platform performance

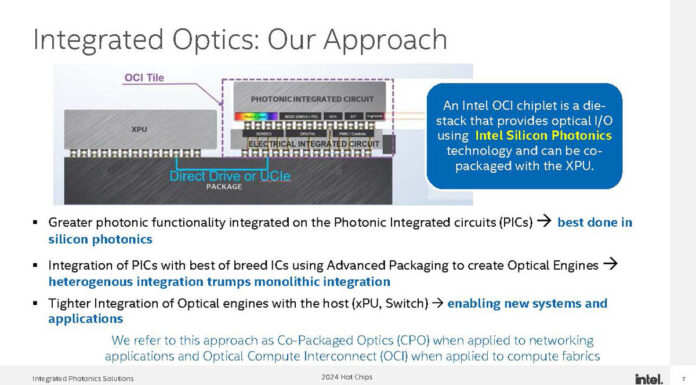

At Hot Chips 2024, Intel showed off a 4Tbps optical chiplet to displace copper chip-to-chip links with longer-reach, more efficient optics

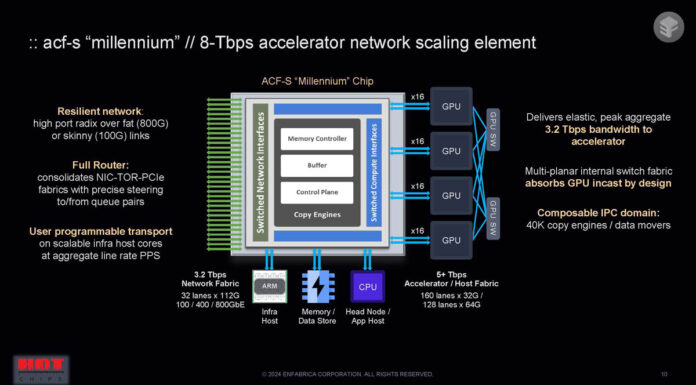

The Enfabrica ACF-S takes aspects of scale-up and scale-out, combining up to 3.2Tbps of networking and 10x PCIe Gen5 x16 links into one chip

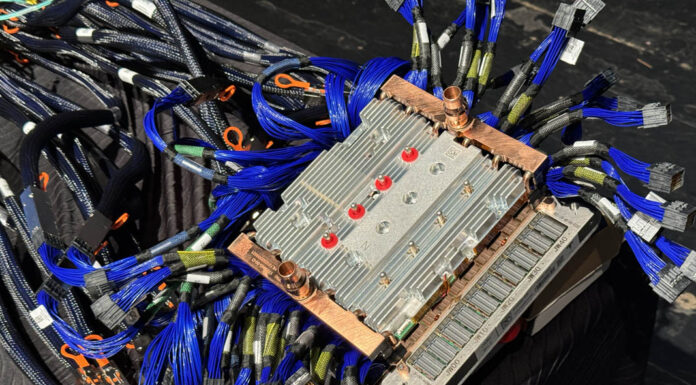

Tesla DOJO Exa-Scale Lossy AI Network using the Tesla Transport Protocol over Ethernet (TTPoE)

Patrick Kennedy

Tesla brought its Dojo V1 networking hardware to Hot Chips 2024 and announced that it is donating its own TTPoE protocol to the Ultra Ethernet Consortium