Cerebras Lab Tour

After the interview wrapped up, I had the opportunity to visit Jean-Philippe Fricker’s lab at Cerebras. Even with a mighty Panasonic S1R in hand, I was allowed a single picture. If you want to see more about the CS-1 on STH, we had a write-up around SC19: Cerebras CS-1 Wafer-Scale AI System at SC19.

What I can share is that this lab had one of the more complete sets of test stations and devices that you would expect in a fab-less chip company. There were even fixtures for the packaging process present. We discussed this in the interview, but this is not something I see in many labs these days.

The one standout in Jean-Philippe’s design is that Cerebras is solving problems at scale. For example, we did a piece on How to Install NVIDIA Tesla SXM2 GPUs in DeepLearning12. Instead of, in that example, having to figure out the tension on screws, problems were being solved such as how to reliably deliver power and liquid for cooling across the entire surface area of a chip. For those wondering, Cerebras is not using a simple water block with a single cool/ warm nozzle setup like we see cooling next-generation Intel Xeon, AMD EPYC, and OAM form factor AI accelerators. Instead, Cerebras is solving that problem at a bigger scale.

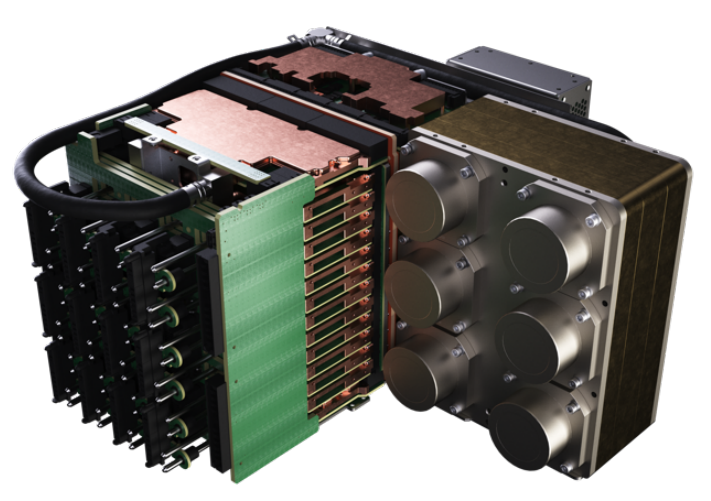

In terms of customers, the sole picture I could take was of a mechanical showcase.

This may simply look as though it is three CS-1’s in a rack. What I can tell you is that it is to show customers who have facility water how they can hook three units per rack up to facility water for cooling. The rear of the unit had 208V PSUs along with a quick disconnect feature to allow servicing the units in the future without disturbing the water flow. It also means that the facility can be prepared quickly and the units can be online quickly after being positioned on the floor.

Final Words

I just wanted to thank Andrew for taking the time out of his day to sit down with me. I am fairly certain that our discussion went well over the allotted time. I also wanted to thank Jean-Philippe for showing me around his lab and Aishwarya for being a gracious host.

The key takeaway is that if you are a STH reader, and have a big dollar AI challenge to solve, I think you should give the Cerebras solution a look. We review some of the larger NVIDIA GPU compute servers such as the Inspur Systems NF5468M5, DeepLearning11, and Gigabyte G481-S80 as a few examples, but the Cerebras solution is designed at a different scale. When I first saw the technology around Hot Chips 31, the impact was clear. After seeing the Cerebras lab, the scale of challenges they are solving, and how they are gearing to scale, this is the AI chip revolution.

Can’t wait to be able to take one of these babies for a test drive on the cloud! I wonder how fast a BERT pre-training would take…

Great article. I have three transcription/typo errors you can correct:

We came up with four or five tenants, and one of them was, it should be purpose-built. => tenets: a principle or belief, especially one of the main principles of a religion or philosophy.

“the tenets of classical liberalism”

Often they are contractually committed to buy more than one at the start attending a proof of concept and acceptance. => attendant: occurring with or as a result of; accompanying.

“the sea and its attendant attractions” (although I admit that attending has a correct meaning for this sentence, but it is seldom used). … attendant a proof …

and at those were unparalleled => we’re

This is an superb interview! Kudos to ‘Serve the Home’ for pulling it together.

It highlights the elements that make American style innovation so effective, good engineers who know whom to rely on and financial intermediaries who understand the issues, yet are willing to trust the engineers.

Holy balls that’s properly poking the actual limits of what kind of computing humankind can currently achieve.

What kind of power can a single wafer pull? I would guess around 5-10kW? Does it require facility water cooling?