At the OCP Regional Summit 2023 in Prague, we spotted an OCP DC-SCM 1.0 module and thought it was worth a quick look. For those who are not aware, the OCP DC-SCM (Datacenter-ready Secure Control Module) project is designed to put server management features onto a card so that motherboards are not BMC-specific.

OCP DC-SCM 1.0 Shown at OCP Regional Summit 2023

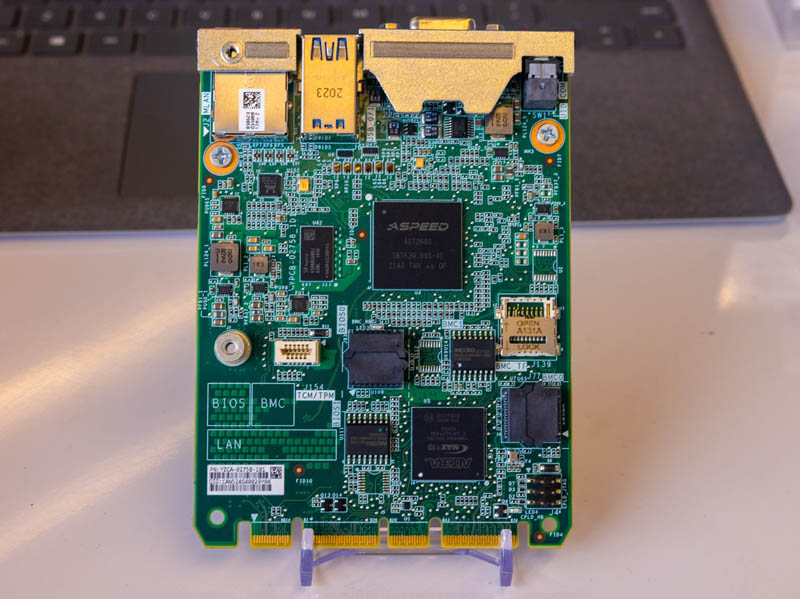

Here is the OCP DC-SCM 1.0 module at the IEIT booth. One can see the familiar ASPEED AST2600 baseboard management controller (BMC). There is also an Intel (Altera) MAX 10 FPGA, BIOS flash for the BMC and the system, and more. On the I/O side, there are USB, VGA, and out-of-band management ports.

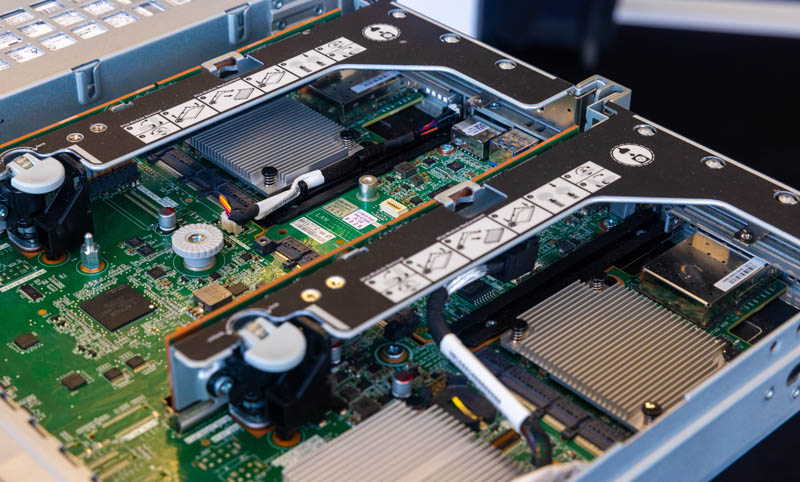

The DC-SCM can have features like PFR 3.0 support, a host TPM 2.0 on SPI, storage for the BMC logs, and dual BIOS flash storage for the system and BMC. Here is the card installed in an AMD EPYC Genoa server between the two risers and the OCP NIC 3.0 slots.

This is going to be a technology we see in more servers over the next few generations.

Final Words

Perhaps the biggest impact of OCP DC-SCM is that it allows server management features to be customized separately from the main motherboard. OCP's DC-MHS project, which we have mentioned a few times, moves much of the I/O off of the motherboard, and DC-SCM is one component of it. To make this a bit easier to understand: companies like Dell and HPE, which use proprietary BMCs as vendor lock-in tools, can adopt DC-SCM and then offer more industry-standard OpenBMC or MegaRAC firmware on an ASPEED BMC without having to change the rest of their server design. We will cover DC-SCM in more depth in the future, but thought it was worth showing off a card for now.

That’s more board area than I would have expected.

Do you know if this is just a matter of what is necessary and what isn't too tightly constrained? That is, you can only cut external I/O so much before an Ethernet port, VGA, serial, and a USB port or two become impossible without defining some oddball high-density connector, and once you've allocated the relatively constrained external space, it's not much of a stretch to make the card OCP NIC-like in depth rather than aggressively shallow. Or are some users expecting to put capabilities significantly punchier than the usual ASPEED or proprietary equivalent in the BMC spot, and wanted enough board space to handle chips that run warm or have a lot of pins broken out to a fair amount of RAM or the like?

I've only read about those sorts of capabilities being wrapped around NICs, but I don't see a reason, in principle, why the BMC couldn't be expanded from lightweight supervision and peripheral emulation into actually being the hypervisor if one wanted.

I wonder why they never went digital. For all those years, a DVI-I connector carrying both analog and digital signals would have been much better. The analog approach does not work well with screens that can't lock properly onto the signal. I often end up with POST codes cropped out because they appear in the corner, and the complex OSD auto-adjust either cannot pick up the image well (because of the black background), or the menu disappears on mode changes while the boot flickers, so I can never adjust it.

Please, it's 2023 now. Go digital and phase out the analog transport when both the source and the destination are already digital (LCDs for the past decade). Or go with USB Type-C, which would at least benefit KVM switch users: a server on one cable, since there is always a USB 2.0 path active in it.