NVIDIA Titan RTX Compute Related Benchmarks

With our NVIDIA Titan RTX review, you will see some of the new tests we have added to our compute-focused review suite.

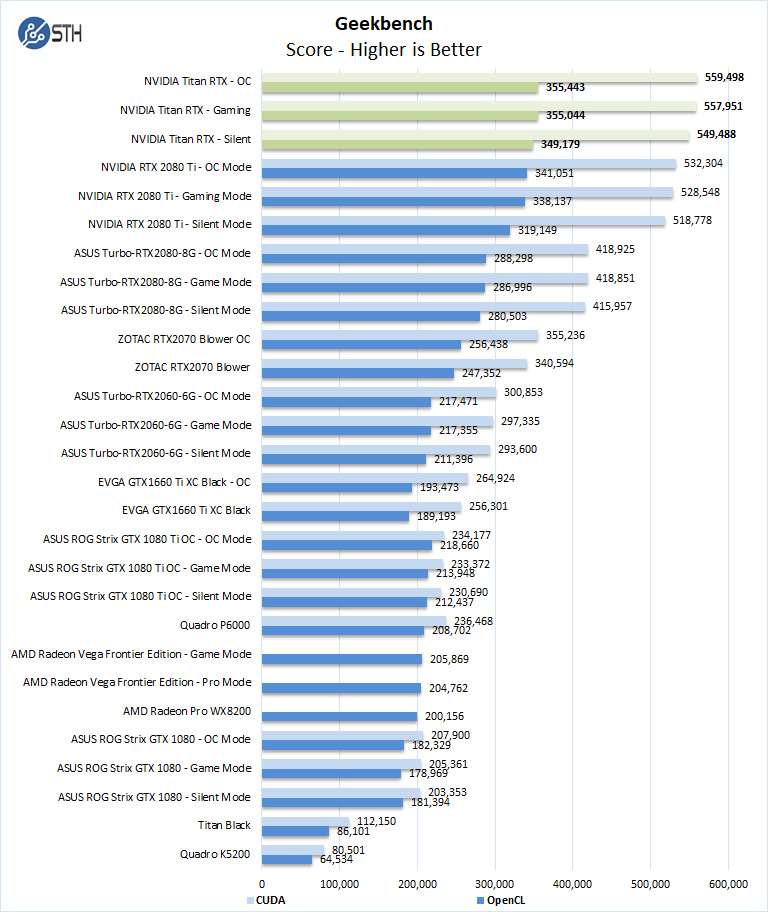

Geekbench 4

Geekbench 4 measures the compute performance of your GPU using workloads ranging from image processing to computer vision to number crunching.

In our first compute benchmark, the NVIDIA Titan RTX posts impressive CUDA and OpenCL results, just above the GeForce RTX 2080 Ti. This test does not use many of the Titan RTX's differentiating features, but it still shows appreciable gains. That is part of the appeal of the NVIDIA Titan RTX: the company extracts a premium, but the card is faster across the board.

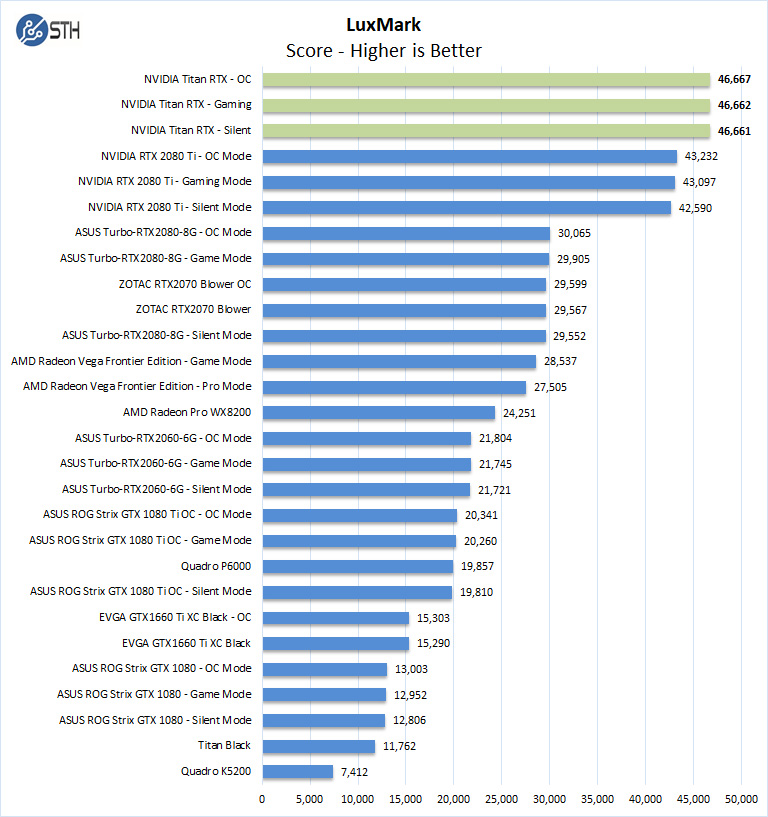

LuxMark

LuxMark is an OpenCL benchmark tool based on LuxRender.

Here we again see performance above the GeForce RTX 2080 Ti, with the Titan RTX showing large gains. The NVIDIA Titan RTX delivers almost twice the performance of the Radeon Pro WX 8200, the card we started this series with. Scaling up performance within a single card is generally preferable, which makes this an impressive result.

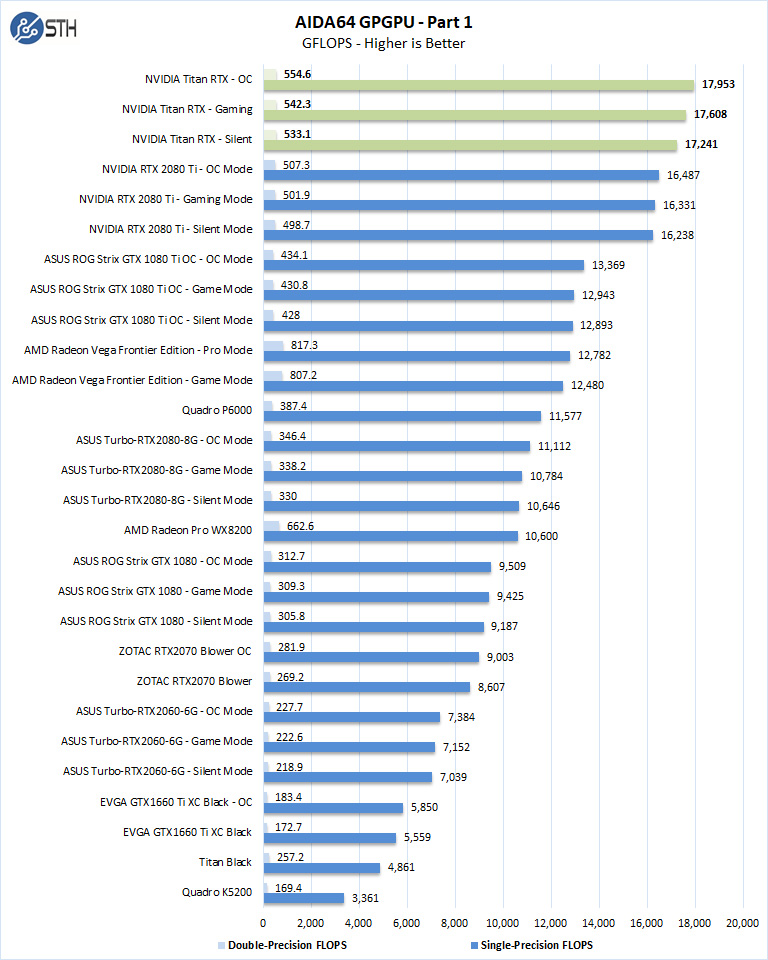

AIDA64 GPGPU

These benchmarks are designed to measure GPGPU computing performance via different OpenCL workloads.

- Single-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with single-precision (32-bit, “float”) floating-point data.

- Double-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with double-precision (64-bit, “double”) floating-point data.
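Because each MAD (a * b + c) is counted as two floating-point operations, one multiply and one add, a card's theoretical peak FLOPS is commonly estimated as execution units × clock × 2. The minimal Python sketch below shows only that arithmetic; the function names and the example unit count and clock are hypothetical placeholders, not figures measured in this review. Measured results such as AIDA64's will typically land at or below this theoretical ceiling.

```python
def peak_mad_flops(units: int, clock_hz: float, ops_per_mad: int = 2) -> float:
    """Theoretical peak FLOPS if every unit retires one MAD per clock.

    A MAD (a * b + c) counts as two floating-point operations:
    one multiply and one add.
    """
    return units * clock_hz * ops_per_mad


def to_tflops(flops: float) -> float:
    """Convert raw FLOPS to TFLOPS for readability."""
    return flops / 1e12


if __name__ == "__main__":
    # Hypothetical example card: 1,000 units at 1.5 GHz (placeholder values).
    flops = peak_mad_flops(units=1000, clock_hz=1.5e9)
    print(f"{to_tflops(flops):.1f} TFLOPS")  # 3.0 TFLOPS
```

The same formula applies to double precision, but the unit count must be the number of units that can actually execute 64-bit MADs.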

Again, the Titan RTX is the fastest card across the board.

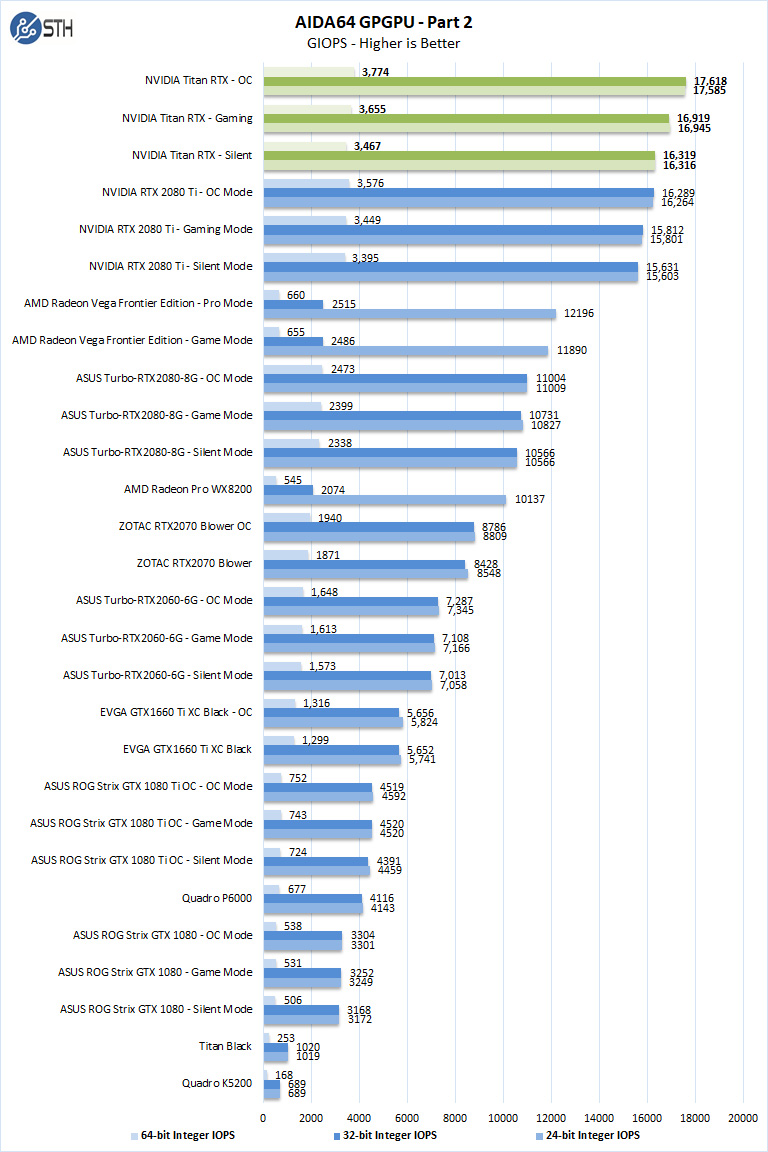

The next set of benchmarks from AIDA64 are:

- 24-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 24-bit integer (“int24”) data. This particular data type is defined in OpenCL on the basis that many GPUs are capable of executing int24 operations via their floating-point units.

- 32-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 32-bit integer (“int”) data.

- 64-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 64-bit integer (“long”) data. Most GPUs do not have dedicated execution resources for 64-bit integer operations, so instead, they emulate the 64-bit integer operations via existing 32-bit integer execution units.
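To make the emulation point concrete, here is a minimal Python sketch, assuming only 32-bit integer operations are available, of how a 64-bit MAD can be assembled from 32-bit halves with explicit carry handling. Python integers are arbitrary precision, so the code masks results to 32 bits to model hardware wraparound. The helper names are hypothetical, and real GPU compilers may emit different instruction sequences; the point is simply that one 64-bit MAD costs several 32-bit operations.

```python
MASK32 = 0xFFFF_FFFF
MASK64 = 0xFFFF_FFFF_FFFF_FFFF


def split64(x: int) -> tuple[int, int]:
    """Split a 64-bit value into (low, high) 32-bit halves."""
    return x & MASK32, (x >> 32) & MASK32


def join64(lo: int, hi: int) -> int:
    """Recombine two 32-bit halves into one 64-bit value."""
    return ((hi & MASK32) << 32) | (lo & MASK32)


def add64(a: int, b: int) -> int:
    """64-bit add built from two 32-bit adds plus a carry."""
    a_lo, a_hi = split64(a)
    b_lo, b_hi = split64(b)
    lo_sum = a_lo + b_lo                 # may spill into bit 32
    carry = lo_sum >> 32                 # 0 or 1
    hi_sum = (a_hi + b_hi + carry) & MASK32
    return join64(lo_sum, hi_sum)


def mul64_lo(a: int, b: int) -> int:
    """Low 64 bits of a * b built from 32-bit multiplies.

    The a_hi * b_hi term is dropped because it only affects bits >= 64.
    """
    a_lo, a_hi = split64(a)
    b_lo, b_hi = split64(b)
    low_prod = a_lo * b_lo               # widening 32x32 -> 64-bit product
    cross = (a_lo * b_hi + a_hi * b_lo) & MASK32  # only low 32 bits matter
    hi = ((low_prod >> 32) + cross) & MASK32
    return join64(low_prod, hi)


def mad64(a: int, b: int, c: int) -> int:
    """Emulated 64-bit multiply-add: (a * b + c) mod 2**64."""
    return add64(mul64_lo(a, b), c)


if __name__ == "__main__":
    print(hex(mad64(2**40 + 3, 5, 7)))
```

Counting the operations in `mad64` (three multiplies, several adds, shifts, and masks versus a single native MAD) gives an intuition for why 64-bit integer IOPS results sit far below the 32-bit ones on GPUs without dedicated 64-bit integer units.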

It is worth pausing here. The NVIDIA Titan Black was released about five years ago at a $1000 price point. Over those five years, for 2.5x the price (not inflation-adjusted), you get 4x the memory and about 17x the compute performance. Compare that to the CPU side, where you got about 2x the performance at about 3x the price over the same timeframe.
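The GPU versus CPU comparison is easier to see as performance per dollar. The short Python sketch below just divides the paragraph's own approximate multipliers (17x compute at 2.5x the price for the GPU, 2x performance at 3x the price for the CPU); the function name is hypothetical and no other data is assumed.

```python
def perf_per_dollar_gain(perf_multiplier: float, price_multiplier: float) -> float:
    """Change in performance per dollar, given performance and price multipliers."""
    return perf_multiplier / price_multiplier


# Approximate multipliers quoted in the text (not inflation-adjusted).
gpu_gain = perf_per_dollar_gain(perf_multiplier=17, price_multiplier=2.5)
cpu_gain = perf_per_dollar_gain(perf_multiplier=2, price_multiplier=3)

print(f"GPU compute per dollar: {gpu_gain:.1f}x")      # 6.8x
print(f"CPU performance per dollar: {cpu_gain:.2f}x")  # 0.67x
```

By these rough figures, GPU compute per dollar improved about 6.8x over the period, while CPU performance per dollar fell to roughly two-thirds of its starting point.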

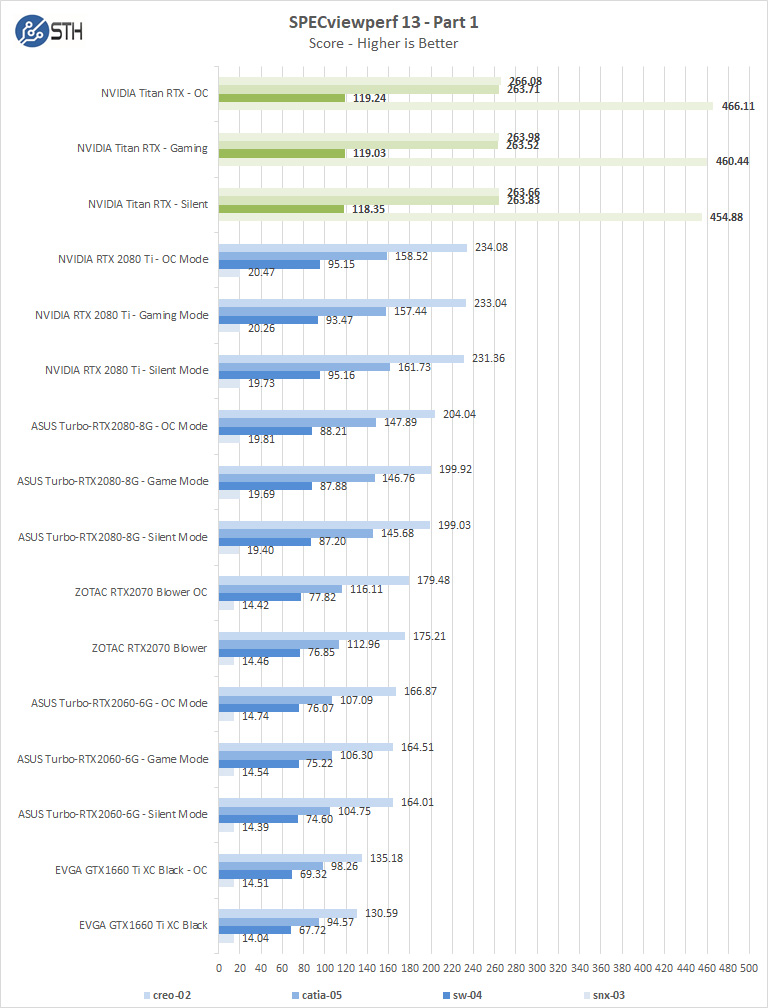

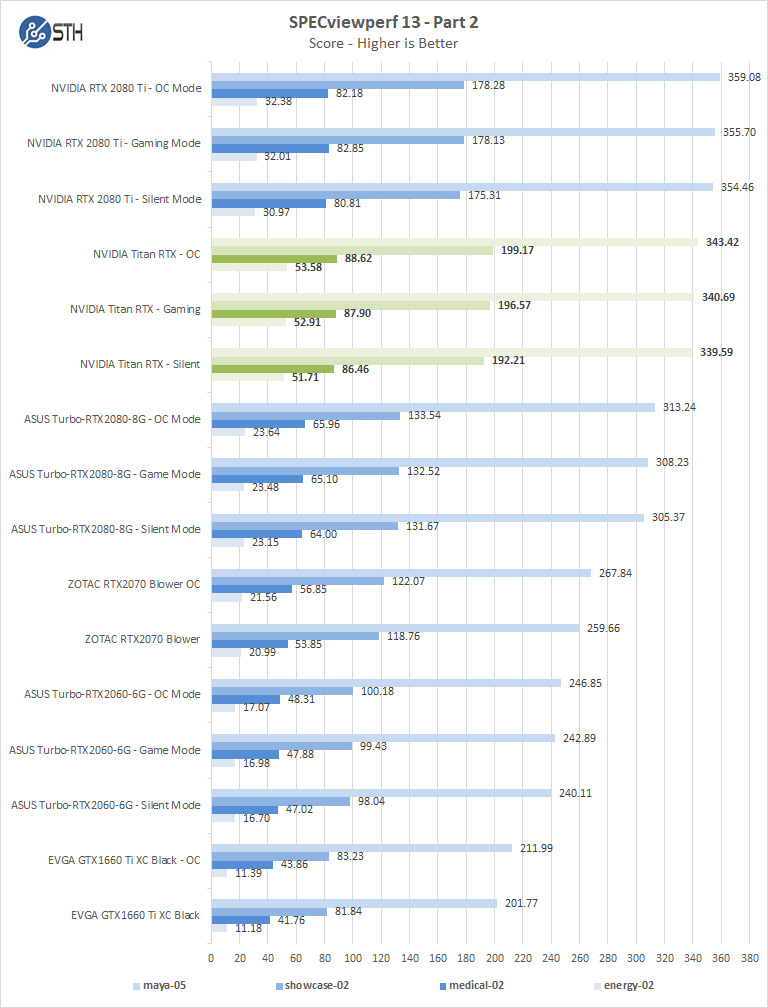

SPECviewperf 13

SPECviewperf 13 measures the 3D graphics performance of systems running under the OpenGL and DirectX application programming interfaces.

In the first part of SPECviewperf, snx-03 results get a considerable boost with the Titan RTX. In part 2, the Titan RTX drops just below the RTX 2080 Ti in maya-05, while posting strong numbers in the rest of the tests. We need to re-run this to see why the RTX 2080 Ti was faster in that one test, but overall, the Titan RTX shows an extremely strong performance improvement over the RTX 2080 Ti.

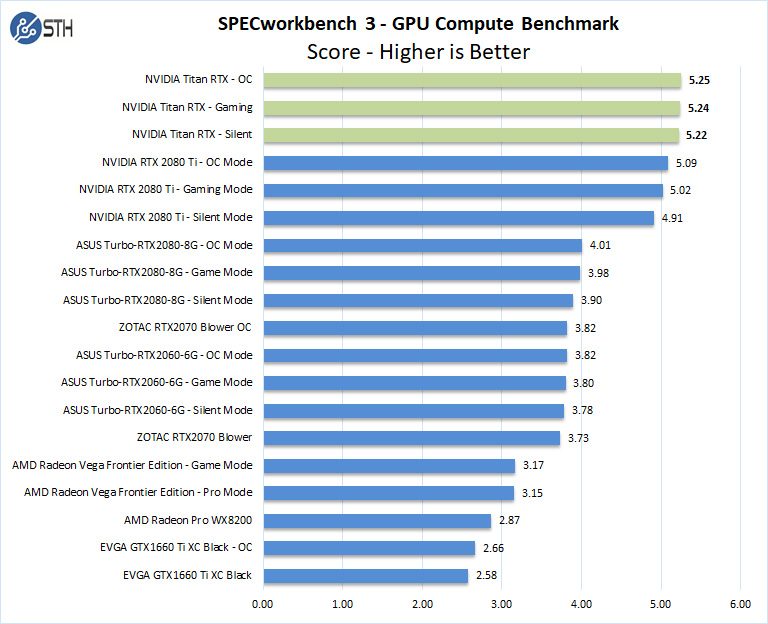

SPECworkstation 3

SPECworkstation 3 measures workstation performance across a range of professional application workloads, including GPU compute.

With the highest price tag and the most compute resources, we can clearly see the NVIDIA Titan RTX again take the lead. In our compute benchmarks, the Titan RTX is simply faster in every test.

Let us move on and start our new tests with rendering-related benchmarks.

Whither Radeon VII?

Radeon VII is a great card for programs like DaVinci Resolve (on par with the RTX Titan X).

NVIDIA's drivers are often better optimized for benchmarking programs.

When you really want to know the performance of a graphics card, go to Puget Systems. They test with real programs, are open to comments, and respond to them.

Hi hoohoo – easy answer:

1) We do not have one.

2) On the DemoEval side, we have yet to get a request for one, but we have had many requests for the Titan RTX.

Unfortunately, the economics, for us, of buying a $2500 GPU to put in the lab are actually better than getting a $700 Radeon VII. If someone has $700 lying around and wants an answer, we are happy to do the work. We just need to fund it.

Absolutely stunning Titan RTX, Mr. Harmon. Read your report.

Gold is best; you're correct on that.

I'm building a new system in June/July that will have PCIe 4.0. I'm sure it will work even better than PCIe 3.0.

I am blown away. I have had an offer on my 2080 Ti since last week; I just might let it go to him and get this Titan RTX.

Excellent review, Mr. Harmon. Thank you for this.

My head is spinning right now :)

Remarkable! The magnitudes of scale are 5x over the last K2500. This represents the ability to complete computation and rendering in a matter of seconds vs. minutes to hours on older systems. Mr. Cray would be beside himself with envy and the desire to have one. Any institution of higher learning will either acquire this or be left behind in the dustbin. Titan indeed. Great review!

Please use the same driver version for all cards. Some CUDA programs can show more than a 10% delta between driver versions, while other programs see no difference.