NVIDIA is now showing off its new Tesla V100S GPUs, which offer more compute and memory performance. What is really interesting here is that NVIDIA is doing so while keeping maximum power consumption constant. The NVIDIA Tesla V100S is currently listed for PCIe only in NVIDIA’s documentation, but it should now outperform the SXM2 variant on a per-GPU basis.

NVIDIA Tesla V100S Specs

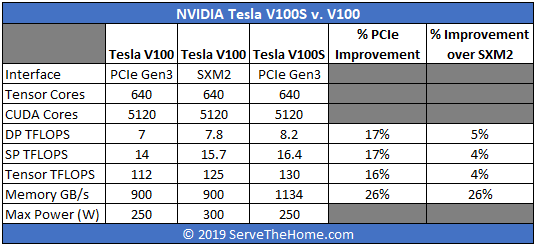

The NVIDIA Tesla “Volta” generation has been out since 2017, making this an upgrade to a GPU that is almost three years into its cycle. It seems as though NVIDIA is taking advantage of a more mature process to deliver late-cycle performance gains. Now that we have official specs for the NVIDIA Tesla V100S, we can see what this late-cycle improvement brings. Here is the spec table with how much the new cards improve over the previous iteration:

The SXM2 modules are what you would have seen in our piece, How to Install NVIDIA Tesla SXM2 GPUs in DeepLearning12. The SXM form factor has been around since the “Pascal” Tesla P100 generation and offers NVLink. With the custom form factor, NVIDIA was able to utilize better cooling for higher TDP. Note, the NVIDIA DGX-2 and NVIDIA DGX-2H utilize SXM3 for the NVSwitch architecture. In the DGX-2H, the SXM3 modules hit up to 450W. NVIDIA already has experience pushing TDP and clock speeds. Now that is trickling down to more mainstream GPUs.

NVIDIA is claiming 8.2 double-precision TFLOPS, 16.4 single-precision TFLOPS, and up to 130 Tensor Core TFLOPS. That is only a 4-5% improvement over the SXM2 module, but compared with the existing PCIe card, it is a 16-17% improvement.

Memory capacity remains at 32GB but the HBM2 bandwidth increases to 1134GB/s for a 26% improvement.

What is perhaps most interesting about the NVIDIA Tesla V100S is that the PCIe card is still rated at 250W. So one is getting 16-17% more compute performance and 26% more memory bandwidth at the same power consumption level.
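The percentages above can be reproduced with a few lines of arithmetic. The sketch below is illustrative only: the V100S figures are the ones quoted in this article, while the baseline V100 PCIe and SXM2 figures (7/14/112 TFLOPS and 7.8/15.7/125 TFLOPS, both at 900GB/s) are assumptions taken from NVIDIA's published V100 datasheet rather than from the text here. The names `SPECS`, `pct_gain`, and `gains_over` are made up for this example.

```python
# V100S numbers come from the article; the two baseline rows are assumptions
# based on NVIDIA's published V100 datasheet figures.
SPECS = {
    "V100 PCIe":  {"fp64": 7.0, "fp32": 14.0, "tensor": 112.0, "bw_gbs": 900.0},
    "V100 SXM2":  {"fp64": 7.8, "fp32": 15.7, "tensor": 125.0, "bw_gbs": 900.0},
    "V100S PCIe": {"fp64": 8.2, "fp32": 16.4, "tensor": 130.0, "bw_gbs": 1134.0},
}


def pct_gain(new: float, old: float) -> float:
    """Relative improvement of `new` over `old`, in percent."""
    return (new / old - 1.0) * 100.0


def gains_over(baseline: str, new: str = "V100S PCIe") -> dict:
    """Per-metric percentage gain of `new` over `baseline`, rounded to 0.1."""
    return {
        metric: round(pct_gain(SPECS[new][metric], SPECS[baseline][metric]), 1)
        for metric in SPECS[new]
    }


if __name__ == "__main__":
    for baseline in ("V100 PCIe", "V100 SXM2"):
        print(baseline, gains_over(baseline))
```

Because the PCIe card's rated power stays at 250W, the performance-per-watt gain over the older PCIe card is the same as the raw performance gain.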

Final Words

The new NVIDIA Tesla V100S is a step forward, but the V100 itself has been out for a long time. STH tested an 8x Tesla V100 PCIe system almost a year ago in our Inspur Systems NF5468M5 Review 4U 8x GPU Server. For buyers of similar systems, an updated GPU offering 16-17% more compute and 26% more memory bandwidth per GPU is very attractive.

Still, NVIDIA needs a PCIe Gen4 part, as competitive deep learning cards are already coming out with PCIe Gen4. AMD, IBM, and Huawei all have PCIe Gen4 platforms available, and Intel is now saying Ice Lake with 10nm and PCIe Gen4 will be a second half of 2020 product, although timelines have slipped recently. Sticking with the Intel cadence and waiting for competition means that NVIDIA has been able to sell the Tesla V100 for a longer-than-normal product cycle. Now that there is some competition from the likes of Cerebras, Graphcore, and Habana Labs, we are seeing NVIDIA update its Tesla V100 in what has to be a late-cycle refresh.
