One of the AI inferencing products we hear a lot about in our discussions with companies is the NVIDIA Tesla T4, a low-profile, single-slot card based on the NVIDIA Turing architecture. At Supercomputing 2018, we first heard that Google would be adding NVIDIA Tesla T4 GPUs to GCP in an early access capacity. Today, Google Cloud Platform is moving that into beta with more deployments and in more regions.

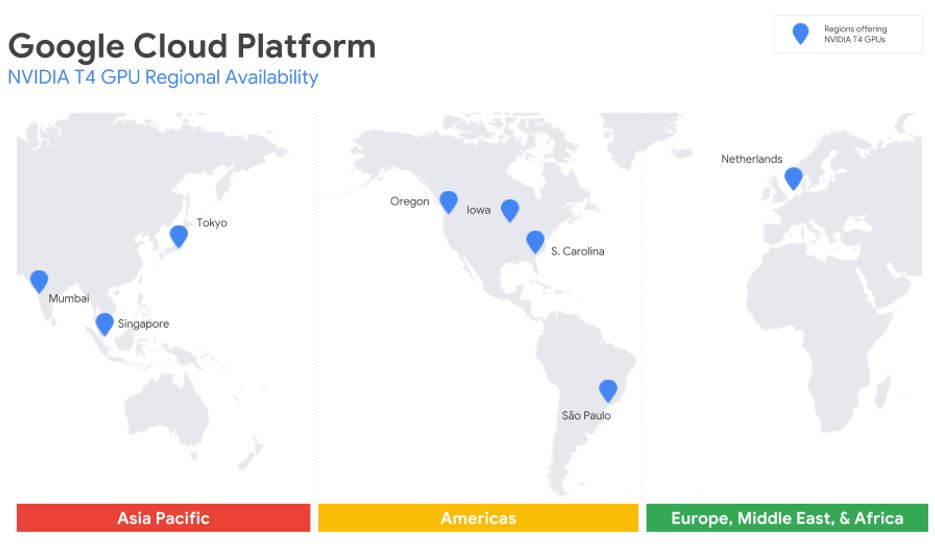

Google Cloud is launching a public beta with the NVIDIA Tesla T4 GPU in eight GCP regions worldwide. This makes NVIDIA Tesla T4 GPU instances available in three US regions plus the Netherlands, Brazil, India (Mumbai), Japan, and Singapore.

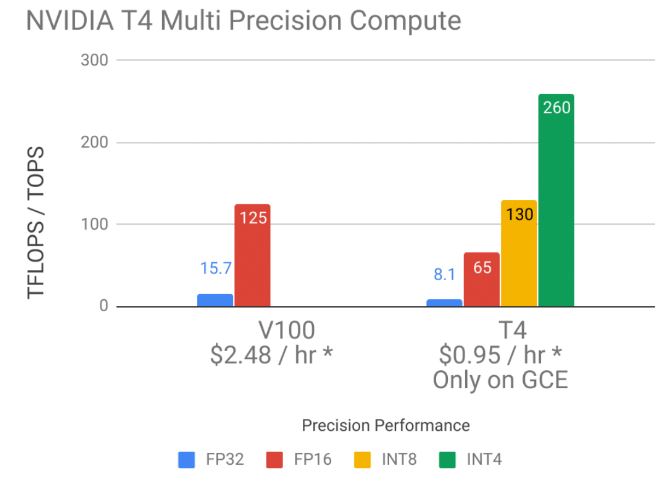

As part of the announcement, Google Cloud is highlighting the relative cost of single-precision (FP32), half-precision (FP16), and INT8 / INT4 compute on the new NVIDIA Tesla T4 powered instances.

The on-demand price of $0.95 per hour for the NVIDIA Tesla T4 can drop by up to 30% with sustained use discounts. One can also attach the Tesla T4 to preemptible VM instances, where it can be used for as low as $0.29 per hour.
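To put those figures side by side, here is a minimal Python sketch of the arithmetic. The three prices come from the paragraph above; the 730-hour month and the helper names `sustained_use_rate` and `monthly_cost` are our own assumptions for illustration, and we assume the quoted rates cover only the GPU, not the VM it is attached to.

```python
# Prices quoted in the article, in USD per GPU-hour.
ON_DEMAND_PER_HOUR = 0.95
PREEMPTIBLE_PER_HOUR = 0.29
MAX_SUSTAINED_USE_DISCOUNT = 0.30  # "up to 30%", so this is the best case

# Assumption (not from the article): an average month is about 730 hours.
HOURS_PER_MONTH = 730


def sustained_use_rate(on_demand: float, discount: float) -> float:
    """Return the hourly rate after applying a fractional discount."""
    return on_demand * (1.0 - discount)


def monthly_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Return the cost of running one GPU for the given number of hours."""
    return hourly_rate * hours


if __name__ == "__main__":
    rates = [
        ("On-demand", ON_DEMAND_PER_HOUR),
        ("Sustained use (best case)",
         sustained_use_rate(ON_DEMAND_PER_HOUR, MAX_SUSTAINED_USE_DISCOUNT)),
        ("Preemptible", PREEMPTIBLE_PER_HOUR),
    ]
    for label, rate in rates:
        print(f"{label:<26} ${rate:.3f}/hr  ${monthly_cost(rate):,.2f}/month")
```

With the full 30% discount, the on-demand rate works out to roughly $0.665 per hour, which is still more than double the preemptible rate. Preemptible instances can be reclaimed at any time, so the lower price mainly suits batch or fault-tolerant inference jobs.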

Each NVIDIA Tesla T4 is equipped with 16GB of GPU memory and delivers up to 260 TOPS of INT4 computing performance. Google Cloud will also support virtual workstations using the Tesla T4, and one can use Turing’s RT cores for ray tracing.

Final Words

We expect to see more NVIDIA Tesla T4 roll-outs this year, both from major cloud providers and from major server OEMs. It seems like this card has a lot of people excited due to its Turing architecture and its low-power, single-slot, low-profile form factor. This is a GPU that can fit into almost any 1U or 2U server out there, which gives plenty of deployment options, whether on purchased hardware or on capacity leased through companies like Google Cloud.