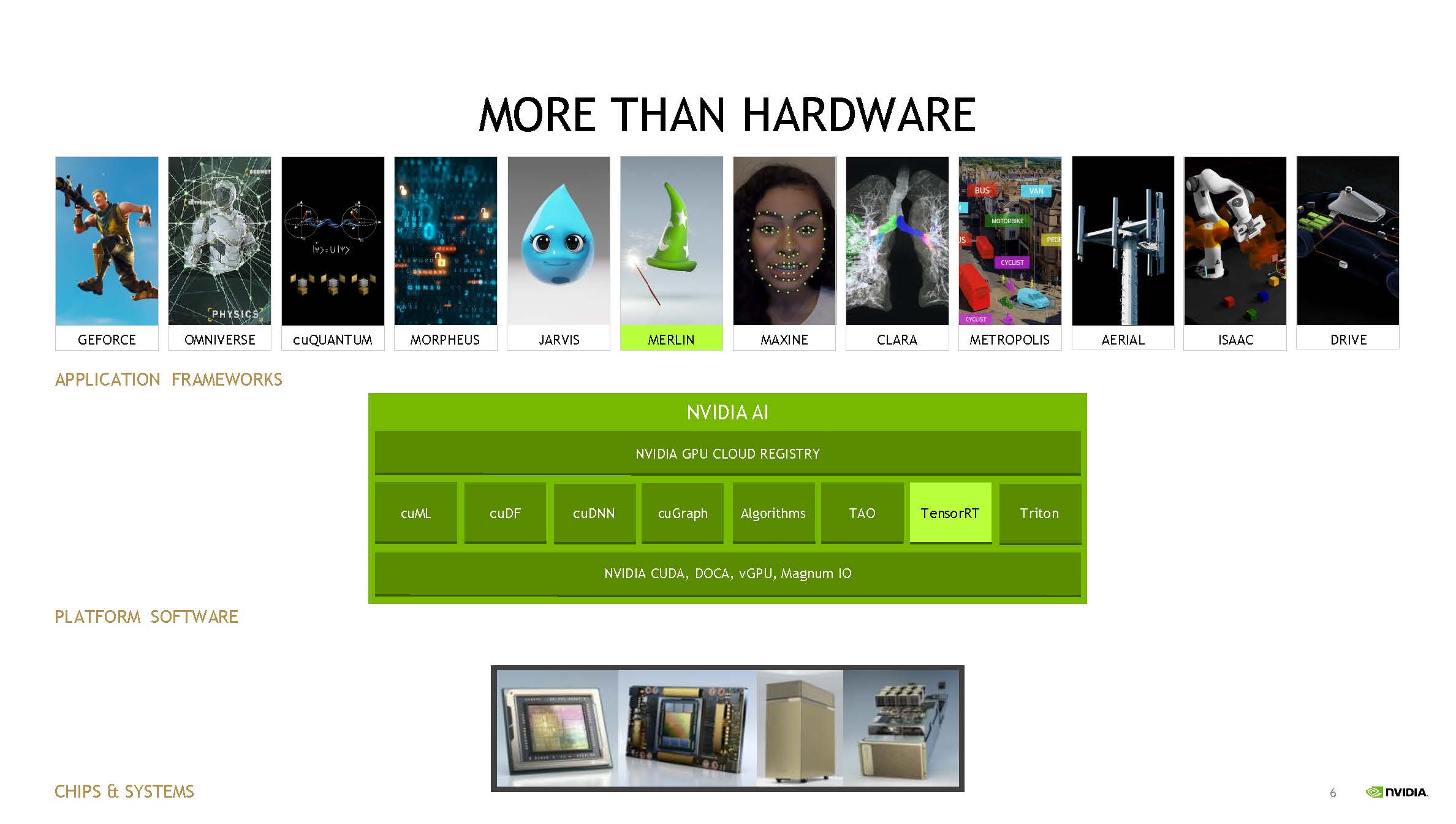

Today, NVIDIA is making a major announcement with TensorRT 8. We often cover NVIDIA's hardware, but its software side is just as important to the company. What currently sets NVIDIA apart in the market is the breadth of its combined hardware and software ecosystem.

As with major CUDA releases, major TensorRT releases matter because TensorRT is one of the foundational technologies driving the adoption of AI in new market segments.

NVIDIA TensorRT 8 and RecSys Announcements

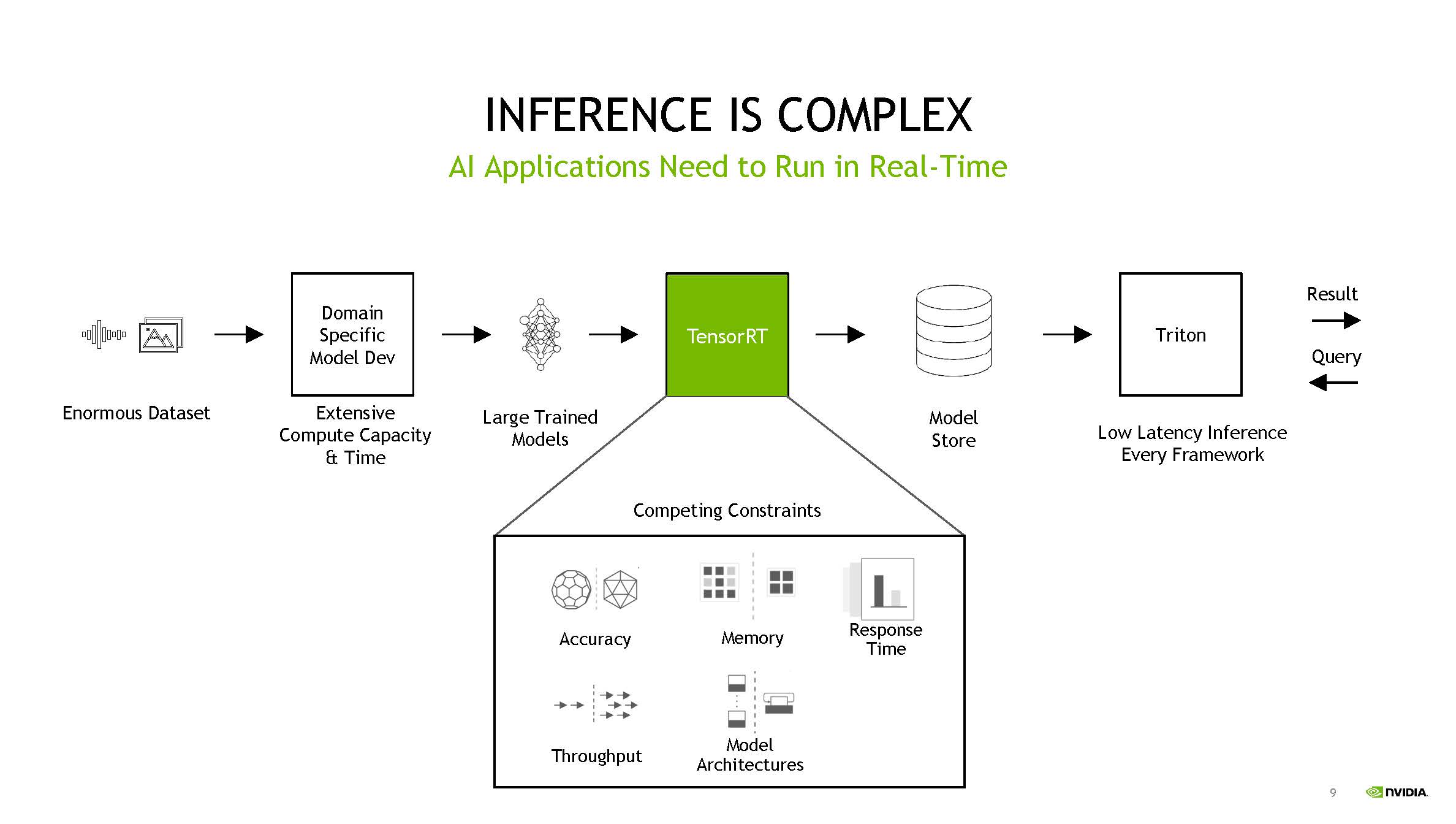

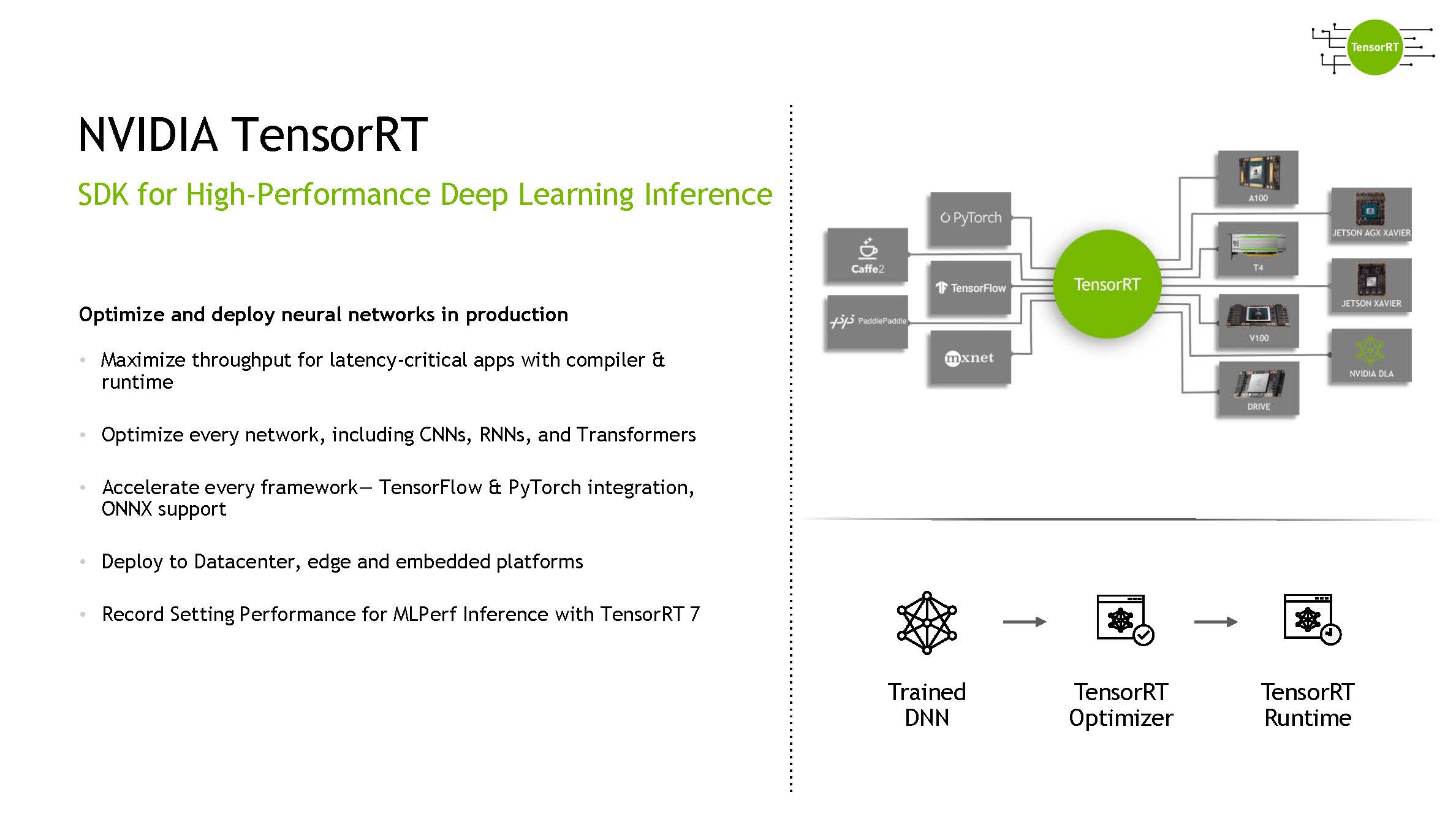

TensorRT is an SDK for high-performance inference on NVIDIA GPUs. While NVIDIA has a major lead in the data center market for training large models, TensorRT is designed to let those trained models be deployed at the edge and in devices, where they can be put to practical use. Example TensorRT workloads include conversational AI, natural language processing, and computer vision.

When NVIDIA discusses its inference platforms for automotive, robotics, manufacturing, the data center, and elsewhere, a key part of the story is that TensorRT runs across everything from Jetson SOMs to NVIDIA A100 GPUs.

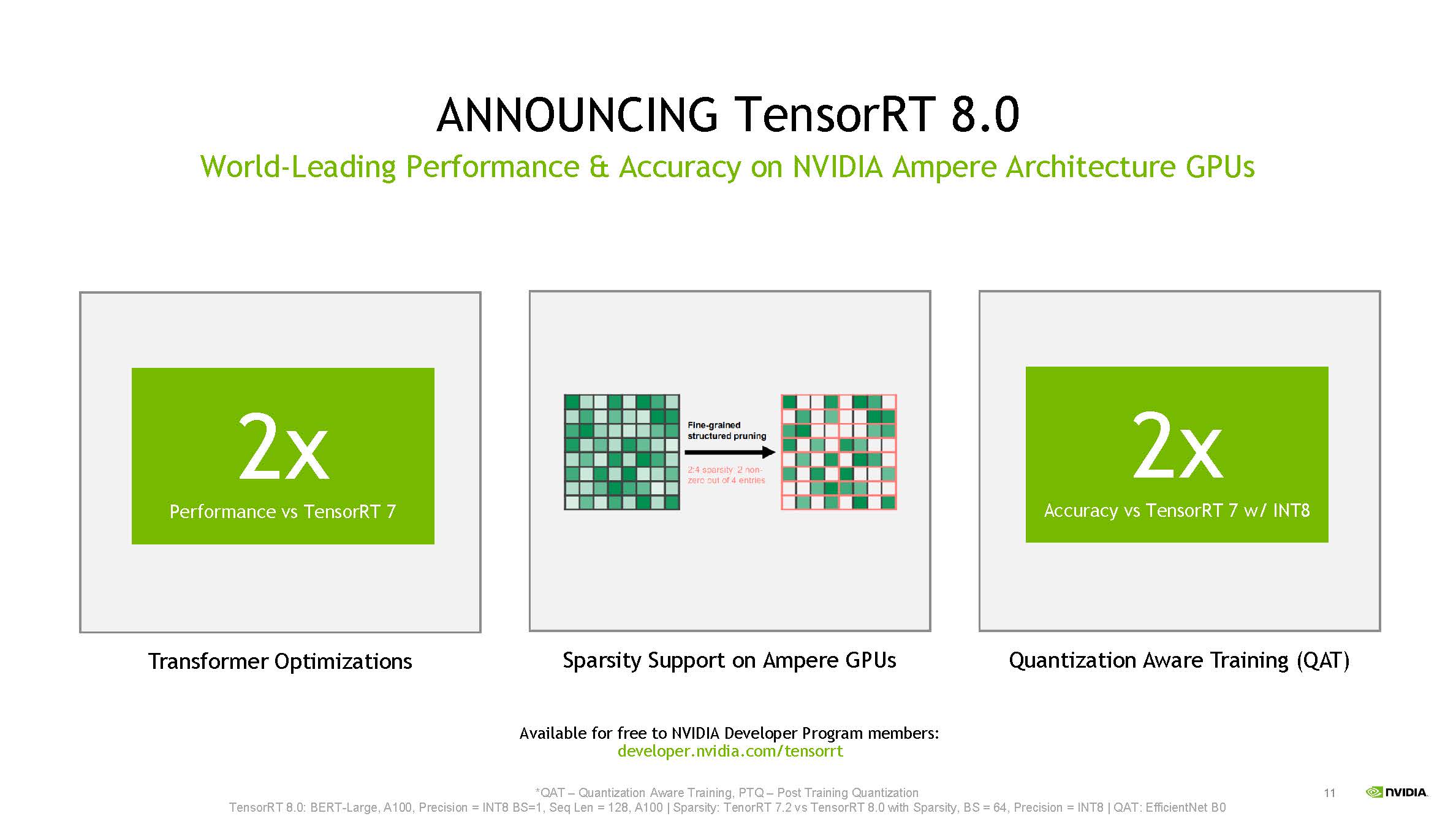

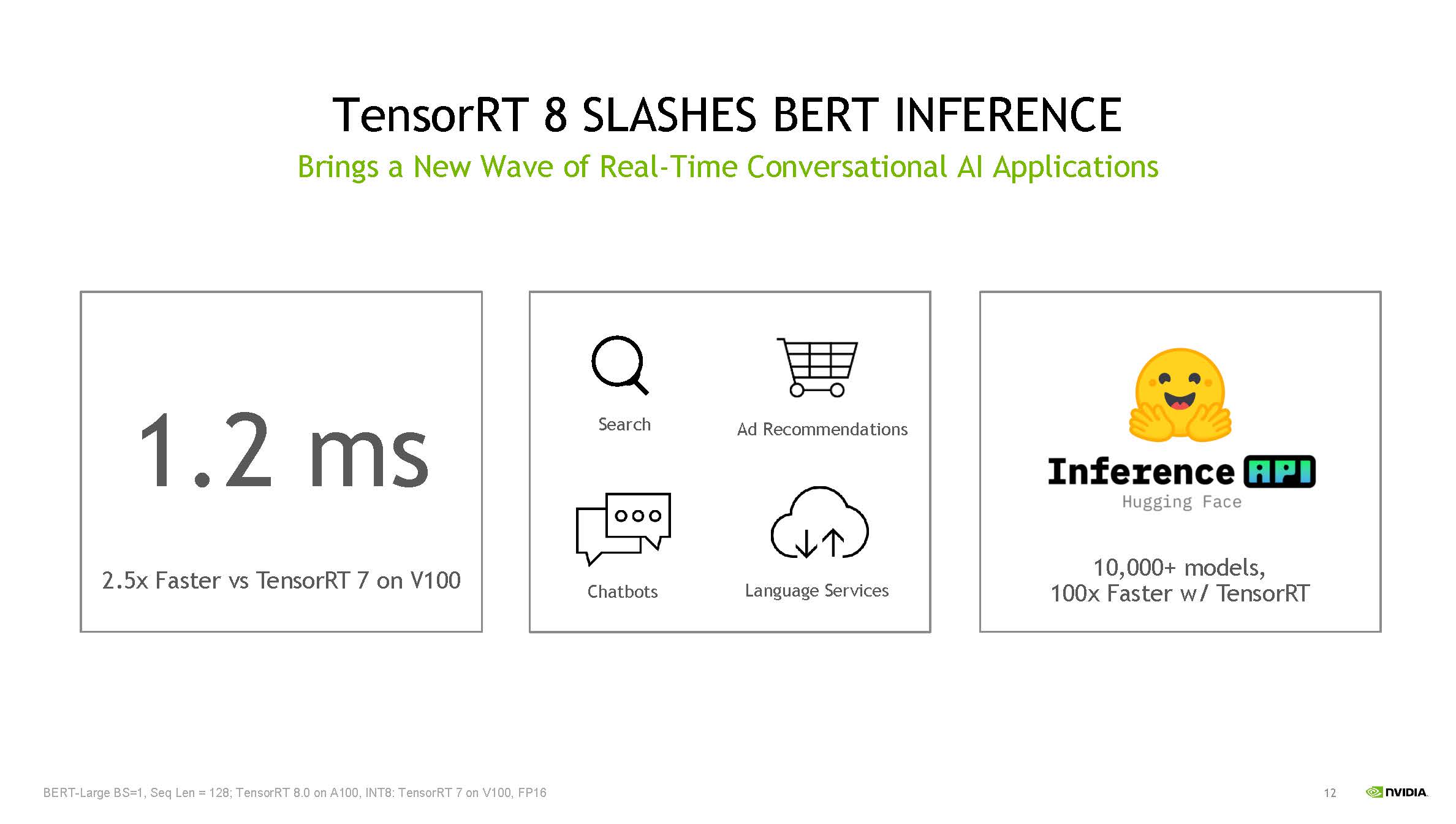

TensorRT 8 brings the next level of optimizations, including sparsity support on Ampere-generation GPUs and improved INT8 accuracy. NVIDIA regularly releases new versions of its software with new optimizations, which means higher performance and potentially higher accuracy. Inference optimizations can also mean fitting models into smaller memory footprints, which is extremely important for edge inference.
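To make the sparsity and INT8 ideas a bit more concrete, here is a minimal pure-Python sketch. It assumes symmetric per-tensor INT8 quantization with a max-abs scale, and the 2:4 structured sparsity pattern (at most two nonzero values in every group of four) that Ampere's sparse Tensor Cores accelerate. The function names are hypothetical and this is not how TensorRT implements either feature internally; TensorRT handles calibration and sparse kernel selection itself.

```python
from typing import List, Tuple

def prune_2_4(values: List[float]) -> List[float]:
    """Zero the two smallest-magnitude values in every group of four (2:4 pattern)."""
    if len(values) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    out = list(values)
    for start in range(0, len(out), 4):
        group = range(start, start + 4)
        # Pick the indices of the two smallest magnitudes in this group of four.
        drop = sorted(group, key=lambda i: abs(out[i]))[:2]
        for i in drop:
            out[i] = 0.0
    return out

def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization using a max-abs scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Map the largest magnitude to 127; guard against an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Python's round() rounds half to even; real kernels may round differently.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate float values."""
    return [x * scale for x in q]

def footprint_bytes(num_params: int, bytes_per_param: int) -> int:
    """Raw weight storage, ignoring sparse metadata and per-tensor scales."""
    return num_params * bytes_per_param

weights = [0.9, -0.1, 0.05, -0.7, 0.3, 0.2, -0.25, 0.01]
sparse = prune_2_4(weights)
print(sparse)  # [0.9, 0.0, 0.0, -0.7, 0.3, 0.0, -0.25, 0.0]
q, scale = quantize_int8(sparse)
print(q)       # [127, 0, 0, -99, 42, 0, -35, 0]
print(footprint_bytes(1_000_000, 4) // footprint_bytes(1_000_000, 1))  # 4
```

The last line shows the memory angle: storing each weight as one INT8 byte instead of a four-byte FP32 value cuts raw weight storage by 4x, before any savings from sparsity.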

There is one item on this slide that we are a bit sad to see. Quantization Aware Training (QAT) is not the same QAT that Intel uses. Intel's QuickAssist Technology (QAT) has been around for years and is largely a set of accelerators for functions that NVIDIA's new Mellanox-powered gear can also accelerate. See our "Intel QuickAssist Technology and OpenSSL Benchmarks and Setup Tips" and "Intel QuickAssist at 40GbE Speeds: IPsec VPN Testing" pieces. We first saw Intel market QAT in the data center space with the Atom C2000 series (the Rangeley line) in 2013, and it now runs at 100Gbps. It is unfortunate that two silicon providers in the data center use the same acronym for two functions that can be running at the same time in the same server.
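Since the acronym clash is the issue here, it may help to show what the machine learning QAT generally does. A common approach inserts "fake quantization" (quantize, then immediately dequantize) into the forward pass so the model learns weights that tolerate INT8 rounding, while gradients pass through the rounding step as if it were the identity (the straight-through estimator). The sketch below is a generic illustration under those assumptions: it fits a single weight for y = w * x, and all names and values are hypothetical rather than NVIDIA's QAT tooling or TensorRT's API.

```python
from typing import List, Tuple

QMAX = 127  # symmetric INT8 range [-127, 127]

def fake_quantize(x: float, scale: float) -> float:
    """Quantize to INT8 and immediately dequantize back to float."""
    q = max(-QMAX, min(QMAX, round(x / scale)))
    return q * scale

def train_qat(xs: List[float], ys: List[float], scale: float,
              lr: float = 0.1, steps: int = 200) -> Tuple[float, float]:
    """Fit y = w * x with a fake-quantized weight; returns (float w, quantized w)."""
    w = 0.0
    for _ in range(steps):
        wq = fake_quantize(w, scale)  # forward pass only sees an INT8-representable weight
        # Gradient of the mean squared error with respect to wq.
        grad = sum(2.0 * (wq * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        # Straight-through estimator: treat d(wq)/dw as 1 inside the INT8 range, 0 outside.
        if abs(w / scale) > QMAX:
            grad = 0.0
        w -= lr * grad
    return w, fake_quantize(w, scale)

w, wq = train_qat([1.0, 2.0, 3.0], [0.5, 1.0, 1.5], scale=0.01)
print(w, wq)
```

Because the loss is computed on quantized weights, training already accounts for INT8 rounding error, which is why QAT can recover accuracy that simply quantizing a finished FP32 model may lose.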

Getting back to TensorRT 8, we get better performance, as we would expect. NVIDIA can deliver both hardware and software advancements over time, which compounds into very large gains across generations in the CUDA ecosystem.

The other side of the announcement is around recommender systems. We see recommender systems all around us, which makes them a huge driver of investment.

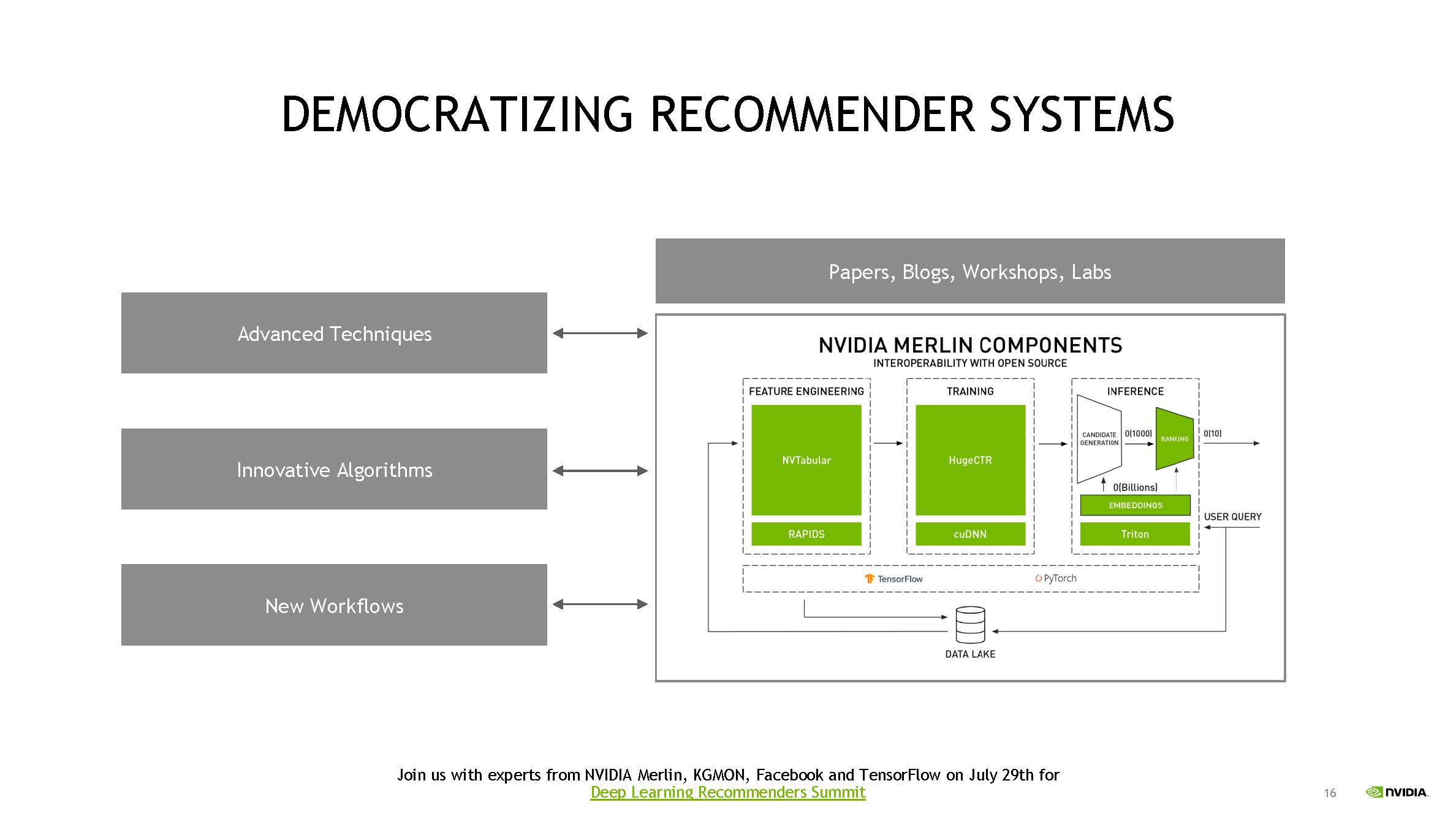

NVIDIA has announced a number of wins in the recommender systems space over the past few months, and with Merlin it is showing that it is focused on driving performance in this space.

Final Words

For most of our readers, TensorRT 8 is going to be the more impactful of the two announcements. While we often focus on the hardware, one of the big reasons NVIDIA has maintained its edge in the AI space is that it has delivered years of generational improvements in both hardware and software. Its hardware also keeps expanding into new power and performance envelopes, which extends the reach of the ecosystem and makes it just as important.
