NVIDIA RTX 6000 Ada Compute Benchmarks

At STH, we do not test games on GPUs, whether the product is a professional or a gaming-focused part. We also paused this series over the past few years when GPU prices spiked and availability was tight. Now that the GPU market is more regular, we are rebooting the series and adding new benchmarks. We do not have the NVIDIA RTX A6000, the immediate previous generation, in these results, although we do have the RTX 6000 and RTX 8000. We also have the RTX 3090 if you want a proxy for Ampere performance. That is not exactly on point, but in many benchmarks it is close enough to ballpark the performance delta.

This is the start of our new 2023 GPU benchmark suite, which we will use going forward. We have a few more benchmarks to include, but they are not ready yet.

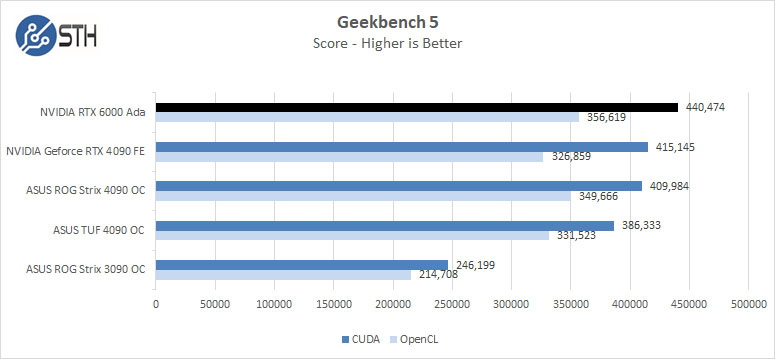

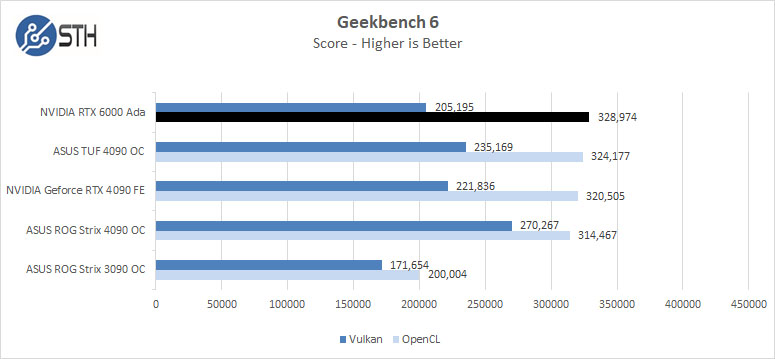

Geekbench 5 and 6

Geekbench 5 and 6 measure the compute performance of your GPU using workloads that range from image processing and computer vision to number crunching.

The top result is Geekbench 6, while all below are from Geekbench 5 runs.

Geekbench 6 was released while we were testing these GPUs. It does not include CUDA benchmarks, which I hope they bring back. Instead, it uses OpenCL and Vulkan.

These benchmarks show the RTX 6000 Ada leading everywhere except in the Vulkan results, where it falls behind the 4090s in both Geekbench 5 and 6.

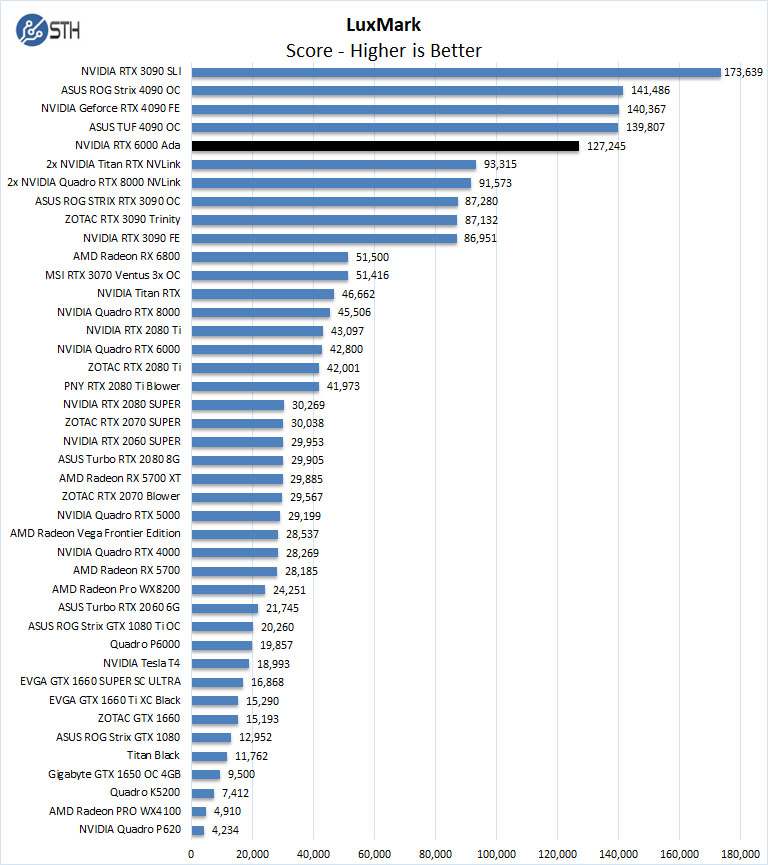

LuxMark

LuxMark is an OpenCL benchmark tool based on LuxRender.

The RTX 6000 Ada falls behind the 4090s in LuxMark by 10,000 points, which is a large delta. On the other hand, it delivers almost 3x the performance of the two-generation-old RTX 6000. That is the most likely upgrade point for many of our readers.

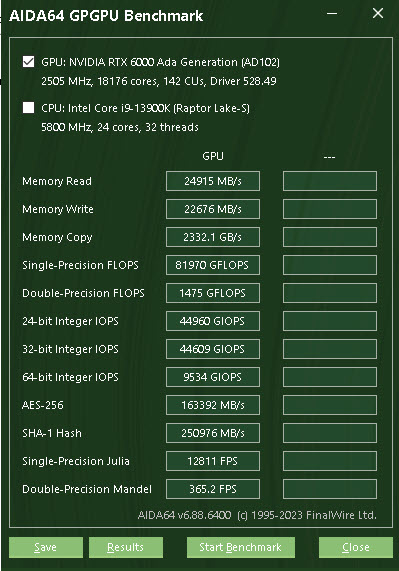

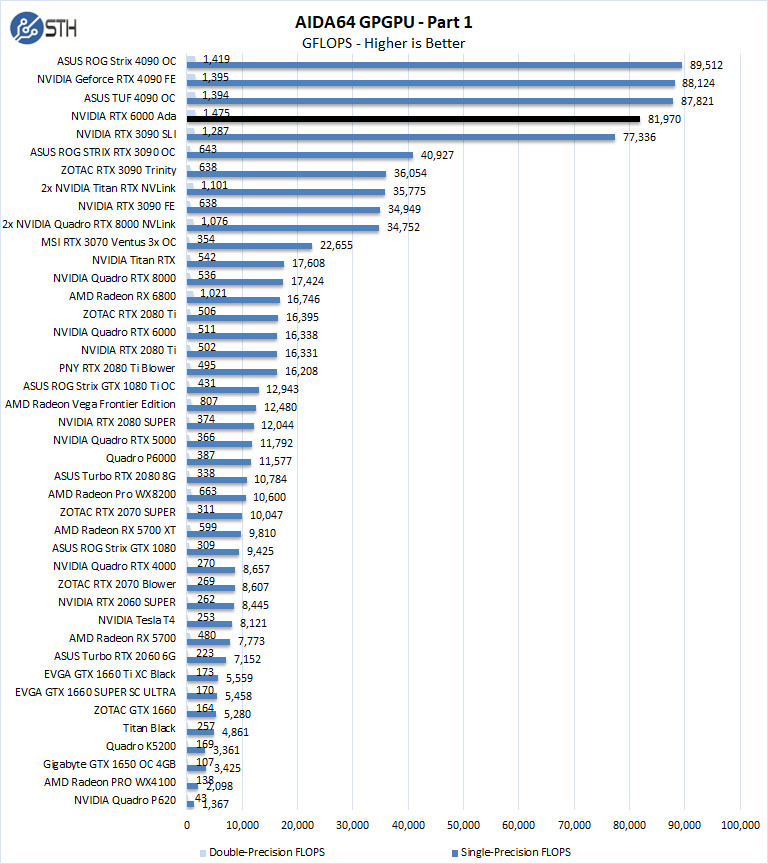

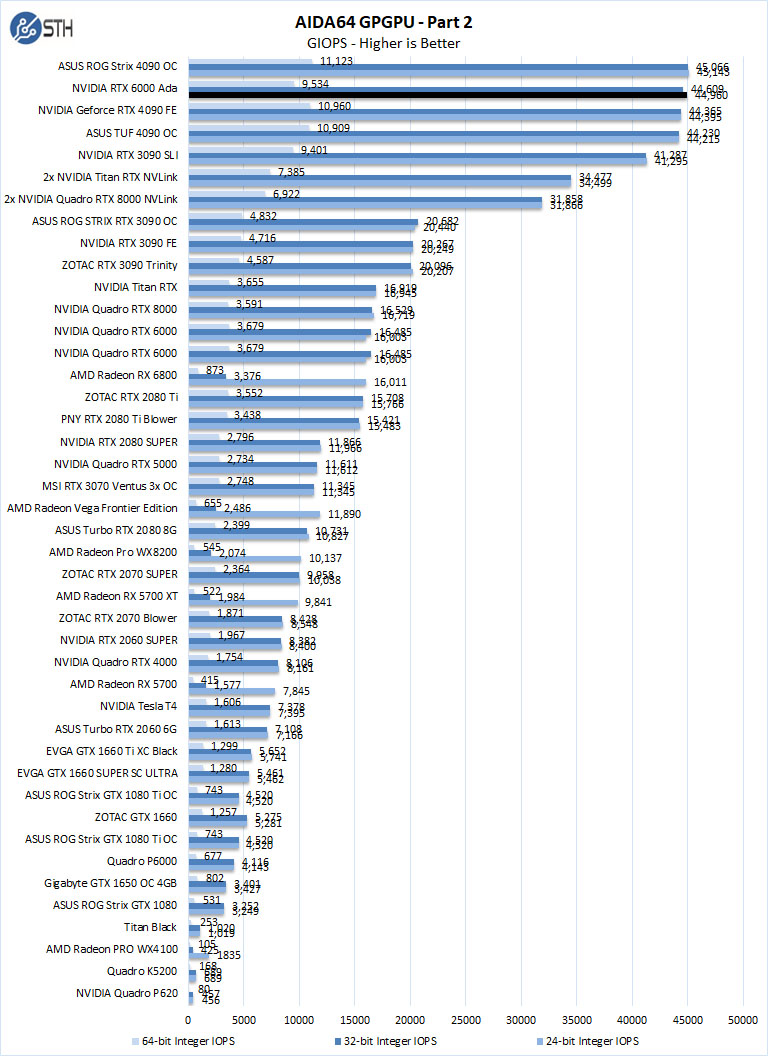

AIDA64 GPGPU

These benchmarks are designed to measure GPGPU computing performance via different OpenCL workloads.

- Single-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with single-precision (32-bit, “float”) floating-point data.

- Double-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with double-precision (64-bit, “double”) floating-point data.
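As a rough illustration of how a MAD-based score turns into a FLOPS figure, here is a minimal CPU-side sketch. It assumes the common convention that one multiply-add counts as two floating-point operations. The function names `mad_throughput` and `run_mad_loop` are hypothetical, and this is not how AIDA64 itself is implemented.

```python
import time


def mad_throughput(n_mads: int, elapsed_s: float, ops_per_mad: int = 2) -> float:
    """Operations per second, counting each MAD as `ops_per_mad` operations.

    The factor of 2 (one multiply plus one add) is an assumed convention.
    """
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    return n_mads * ops_per_mad / elapsed_s


def run_mad_loop(iterations: int) -> float:
    """Time a chain of dependent multiply-adds and return the elapsed seconds."""
    a, b, c = 1.0000001, 0.9999999, 0.5
    start = time.perf_counter()
    for _ in range(iterations):
        c = a * c + b  # one multiply-add per iteration
    return time.perf_counter() - start


if __name__ == "__main__":
    n = 1_000_000
    elapsed = run_mad_loop(n)
    print(f"{mad_throughput(n, elapsed) / 1e6:.1f} MFLOPS (interpreted Python, single thread)")
```

A GPU benchmark runs the same kind of loop across thousands of threads at once, so the absolute numbers are not comparable. Only the accounting is the same.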

The next set of benchmarks from AIDA64 is:

- 24-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 24-bit integer (“int24”) data. This particular data type is defined in OpenCL on the basis that many GPUs are capable of executing int24 operations via their floating-point units.

- 32-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 32-bit integer (“int”) data.

- 64-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 64-bit integer (“long”) data. Most GPUs do not have dedicated execution resources for 64-bit integer operations, so instead, they emulate the 64-bit integer operations via existing 32-bit integer execution units.
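To make the 64-bit emulation point concrete, the sketch below builds a 64-bit multiply-add out of 32-bit pieces. It is only an illustration of the general technique under the assumption of unsigned wraparound arithmetic. The helper names are hypothetical, and real GPU compilers emit their own instruction sequences.

```python
MASK32 = 0xFFFFFFFF
MASK64 = 0xFFFFFFFFFFFFFFFF


def split64(x: int) -> tuple[int, int]:
    """Split an unsigned 64-bit value into (low, high) 32-bit halves."""
    return x & MASK32, (x >> 32) & MASK32


def mul64_via_32(a: int, b: int) -> int:
    """Low 64 bits of a * b using only products of 32-bit halves."""
    a_lo, a_hi = split64(a)
    b_lo, b_hi = split64(b)
    # Full 64-bit product of the low halves (a mul.lo / mul.hi pair on hardware).
    lo_lo = a_lo * b_lo
    # Cross terms are shifted left by 32, so only their low 32 bits survive.
    cross = (a_lo * b_hi + a_hi * b_lo) & MASK32
    # a_hi * b_hi is shifted left by 64 and vanishes modulo 2**64.
    return (lo_lo + (cross << 32)) & MASK64


def add64_via_32(a: int, b: int) -> int:
    """64-bit wraparound add using two 32-bit adds and a carry."""
    a_lo, a_hi = split64(a)
    b_lo, b_hi = split64(b)
    lo = a_lo + b_lo
    carry = lo >> 32
    hi = (a_hi + b_hi + carry) & MASK32
    return (hi << 32) | (lo & MASK32)


def mad64_via_32(a: int, b: int, c: int) -> int:
    """Emulated 64-bit multiply-add: (a * b + c) mod 2**64."""
    return add64_via_32(mul64_via_32(a, b), c)
```

One emulated 64-bit MAD here costs three 32-bit multiplies plus several adds, which is consistent with 64-bit integer throughput landing well below the 32-bit figure on most GPUs.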

The RTX 6000 Ada falls a bit behind in the first part of this test but still outperforms the 3090 SLI and NVIDIA RTX 8000 rigs of the past. In the second part of this test, the RTX 6000 Ada holds up very well compared to the 4090s we have tested so far.

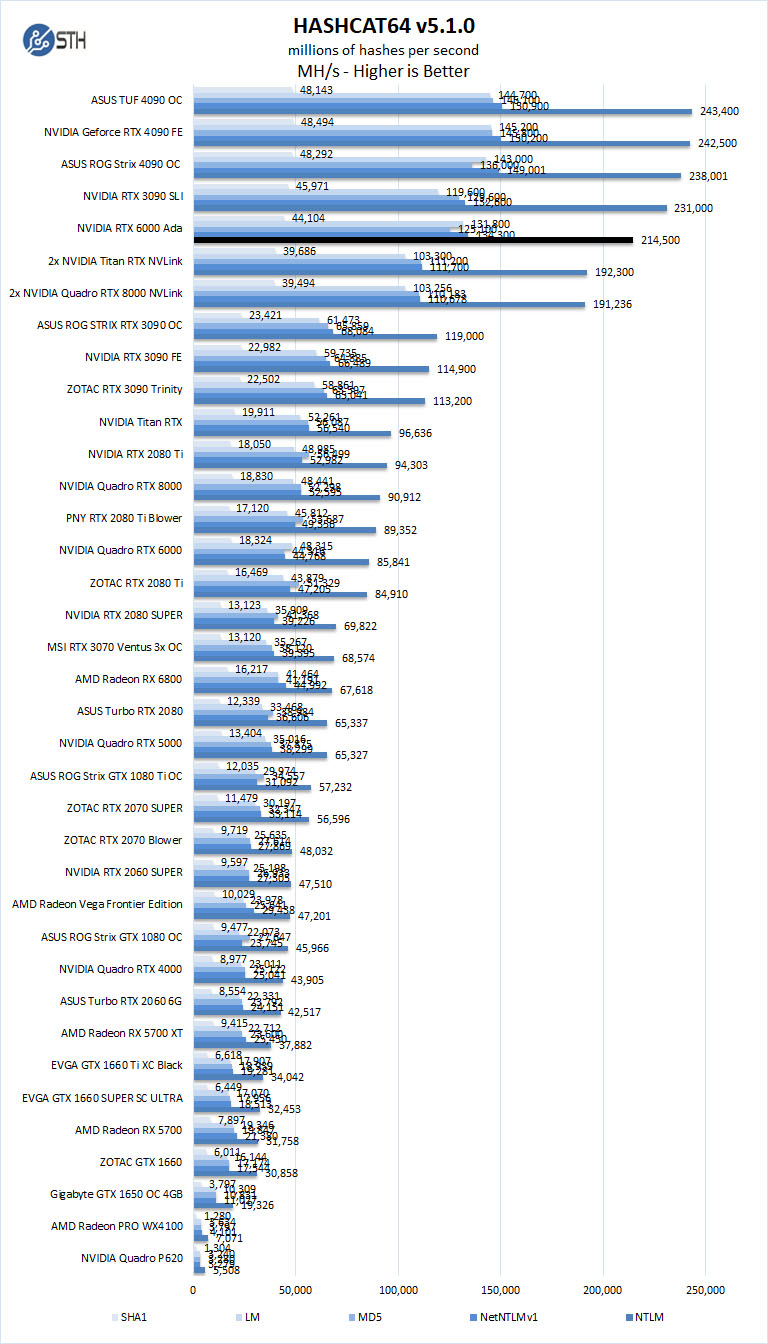

hashcat64

hashcat64 is a password-cracking benchmark that can run many different algorithms. We used the Windows version and the simple command hashcat64 -b. Of the results it produces, we chart five in the graph.

With hashcat64, the RTX 6000 Ada does not keep up well with the 4090s.
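For readers unfamiliar with what a hash-rate benchmark measures, the sketch below times a fixed number of hashes per algorithm on the CPU using Python's standard `hashlib`. It is not hashcat and says nothing about GPU performance. The function name `hash_rate`, the algorithm choices, and the candidate format are assumptions made purely for illustration.

```python
import hashlib
import time


def hash_rate(algorithm: str, n_hashes: int = 100_000, base: bytes = b"password") -> float:
    """Return hashes per second for `algorithm` on this CPU (single thread)."""
    start = time.perf_counter()
    for i in range(n_hashes):
        # Vary the input so every iteration hashes a distinct candidate.
        candidate = base + i.to_bytes(4, "little")
        hashlib.new(algorithm, candidate).digest()
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero reading
    return n_hashes / elapsed


if __name__ == "__main__":
    for name in ("sha1", "sha256", "sha512"):
        print(f"{name}: {hash_rate(name) / 1e6:.2f} MH/s")
```

A GPU benchmark run reports the same kind of per-algorithm rate, which is why the chart shows separate bars for each hash type.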

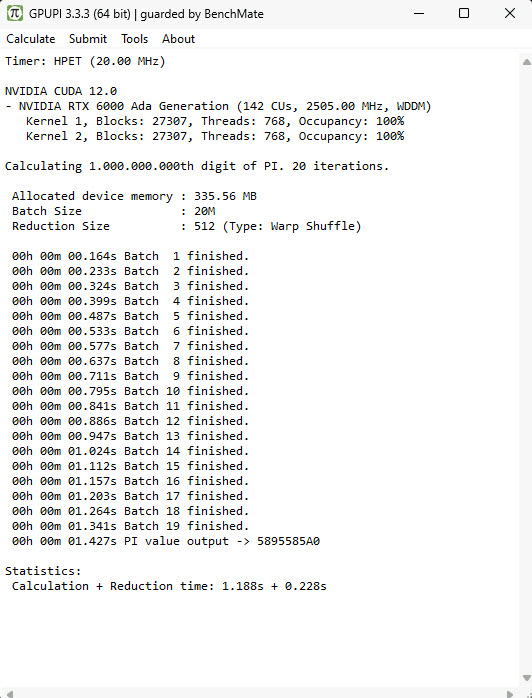

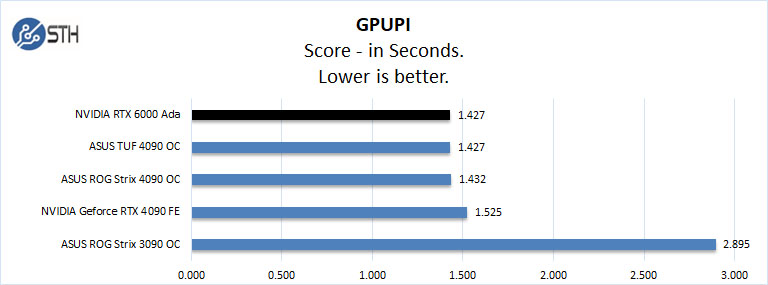

GPUPI

GPUPI calculates the mathematical constant Pi in parallel by optimizing the BBP (Bailey-Borwein-Plouffe) formula for OpenCL and CUDA-capable devices like graphics cards and main processors.

The NVIDIA RTX 6000 Ada completes this task in 1.427 seconds, which is equal to the fastest RTX 4090s we have tested. All of these are about 2x as fast as the previous generation.

Next, we will look at SPECviewperf 2020 performance tests.

nice review, was wondering why the RTX A6000 wasn’t taken into the comparisons, the “original” RTX A6000 is a generation before that, the A6000 is one generation old

edit: the “original” RTX 6000 (minus the A), and i ment it is 2 generations old,

sorry for the typo

Did you use Nvidia’s “Studio Driver” for the 4090 tests? That driver has optimizations for some professional applications. I’m particularly interested to see if the 4090 gets a performance increase for the specviewperf tests.

junk in price/performance terms. But we don’t have other choice if we want to use +24GB memory over the 3090/4090 memory (for 3x the price – wow sooo expensive extra 24G GDDR-6). The premium price is clearly usage of the monopoly situation of Nvidia.