At Hot Chips 34 we got to see the NVIDIA Orin at a new level of detail during one of the talks. This is NVIDIA’s Arm-based SoC with an integrated Ampere GPU, aimed at edge markets.

Note: We are doing this piece live at HC34 during the presentation so please excuse typos.

NVIDIA Orin Brings Arm and Ampere to the Edge at Hot Chips 34

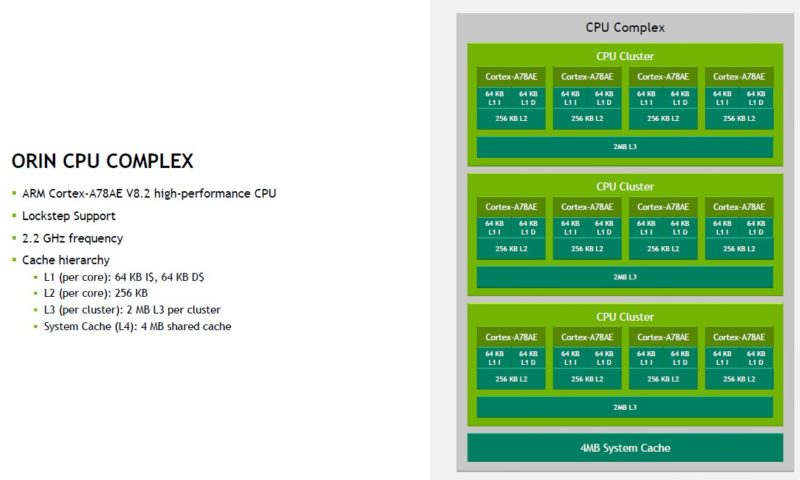

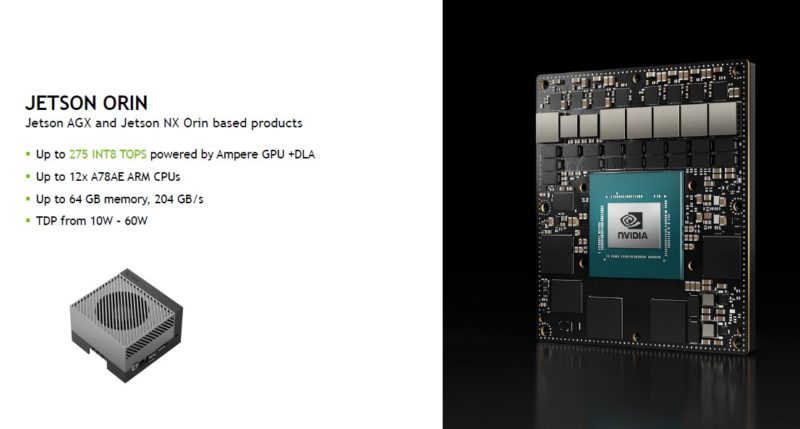

NVIDIA Orin has 12 Arm Cortex-A78AE cores based on Armv8.2. It incorporates an Ampere GPU and LPDDR5 memory, and it adds more I/O connectivity. The real goal of the platform is to bring NVIDIA AI inference to the edge in various form factors from automotive to robotics.

The Arm Cortex-A78AE cores operate at 2.2GHz. In the 12-core configuration they are arranged in three clusters, each with 2MB of L3 cache.

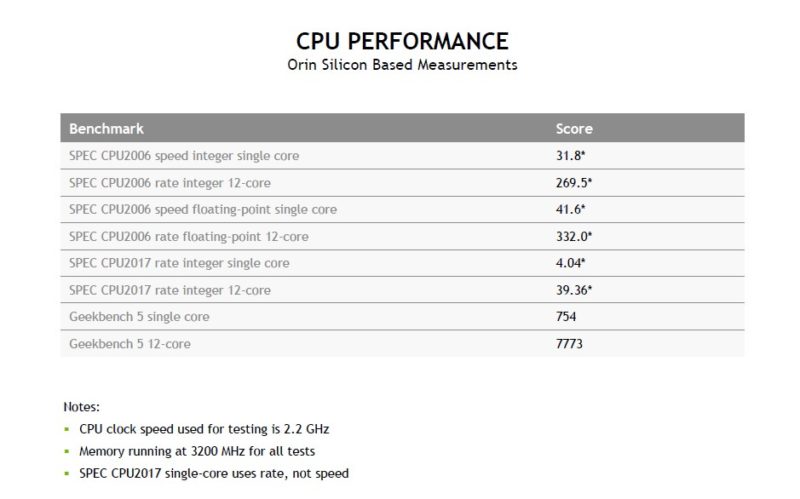

The Orin figures here are estimates, not official results, but for some perspective, a SPEC CPU 2017 integer rate score of 39.36 is strong for a 12-core edge part. An 80-core 3.0GHz Ampere Altra Arm data center CPU scores 301 and a 64-core AMD Milan-X is around 440. Again, those two are official results being compared to an estimated Orin figure.
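To put those three numbers on a more comparable footing, here is a quick sketch that divides each rate score by its core count. The scores and core counts are the ones quoted above. The per-core framing is our own rough normalization, not something from the talk, and it ignores differences in clocks, SMT, memory, and power budgets.

```python
# SPEC CPU 2017 integer rate figures quoted in the text above.
# The Orin score is an estimate; the Milan-X score is approximate ("around 440").
results = {
    "NVIDIA Orin (estimated)": {"score": 39.36, "cores": 12},
    "Ampere Altra 80-core": {"score": 301.0, "cores": 80},
    "AMD Milan-X 64-core": {"score": 440.0, "cores": 64},
}


def per_core(score, cores):
    """Rate score divided by core count, as a rough normalization only."""
    return score / cores


per_core_scores = {
    name: per_core(entry["score"], entry["cores"])
    for name, entry in results.items()
}

for name, value in per_core_scores.items():
    print(f"{name}: {value:.2f} per core")
```

On this rough measure, Orin's estimated per-core figure lands a bit below the Altra and well below Milan-X, which is not surprising given the very different power envelopes.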

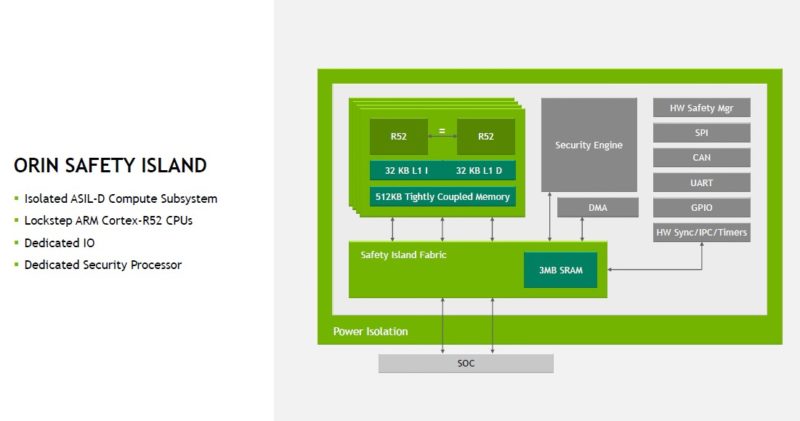

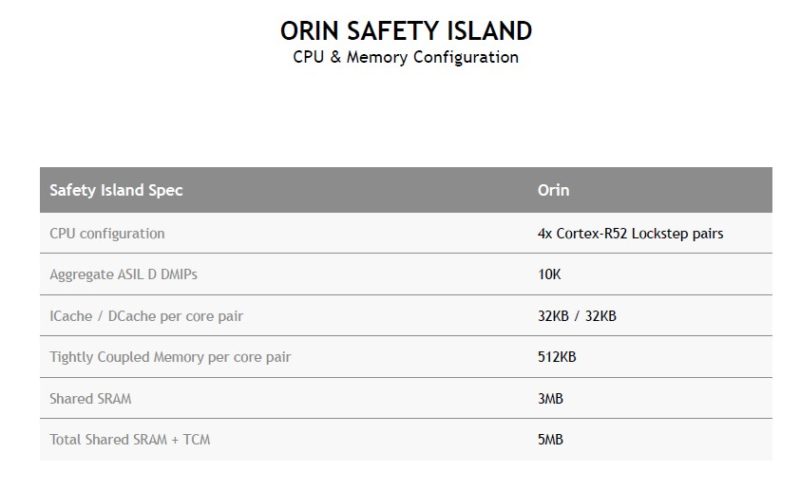

Orin has a safety island complex with Arm Cortex-R52 cores.

Here are the specs on that. The lockstep pairs are perhaps the most interesting and important part.

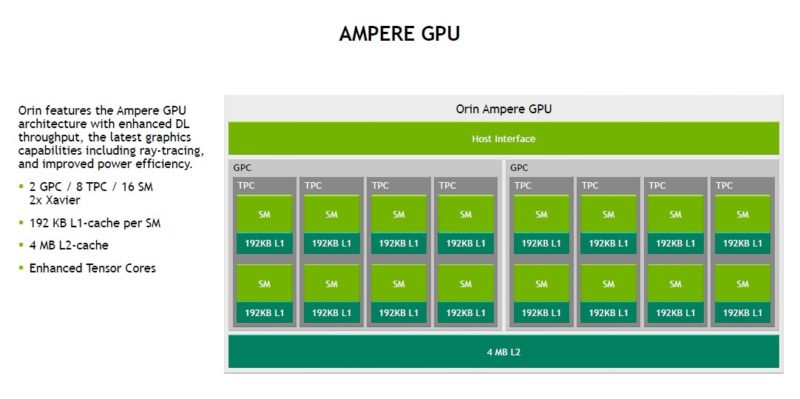
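For readers who have not run into lockstep before, the general idea is that two cores execute the same work and their outputs are compared, so a mismatch flags a fault. The snippet below is only a software analogy of that idea. Real lockstep cores do the comparison in hardware, and the function names and the checksum workload here are hypothetical, not anything NVIDIA or Arm showed.

```python
class LockstepFault(Exception):
    """Raised when the two redundant executions disagree."""


def run_in_lockstep(primary, checker, data):
    """Run the same computation on both 'cores' and compare the outputs."""
    result_primary = primary(data)
    result_checker = checker(data)
    if result_primary != result_checker:
        raise LockstepFault(
            f"mismatch: primary={result_primary!r}, checker={result_checker!r}"
        )
    return result_primary


def checksum(data):
    """Hypothetical workload: a simple 8-bit checksum."""
    return sum(data) % 256


def checksum_with_bit_flip(data):
    """Same workload with a simulated single-bit fault in the result."""
    return checksum(data) ^ 0x01


# Both executions agree, so the result is passed through.
healthy = run_in_lockstep(checksum, checksum, [1, 2, 3])

# One execution is corrupted, so the comparison catches it.
try:
    run_in_lockstep(checksum, checksum_with_bit_flip, [1, 2, 3])
    fault_detected = False
except LockstepFault:
    fault_detected = True
```

The cost of this approach is that two cores do the work of one, which is why it tends to be reserved for safety-critical parts of a design.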

Another big feature is that Orin uses Ampere GPU IP. The embedded products skipped Turing so they went from Volta in Xavier to Ampere in Orin.

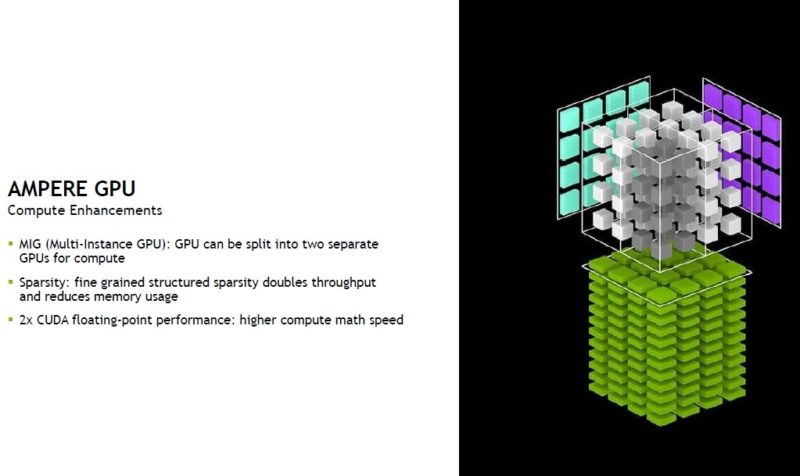

This GPU can split into two GPUs using MIG, like higher-power Ampere GPUs. This is a feature of the chip, but during the talk NVIDIA said it is not supported in software, which is probably why we had not heard of it before.

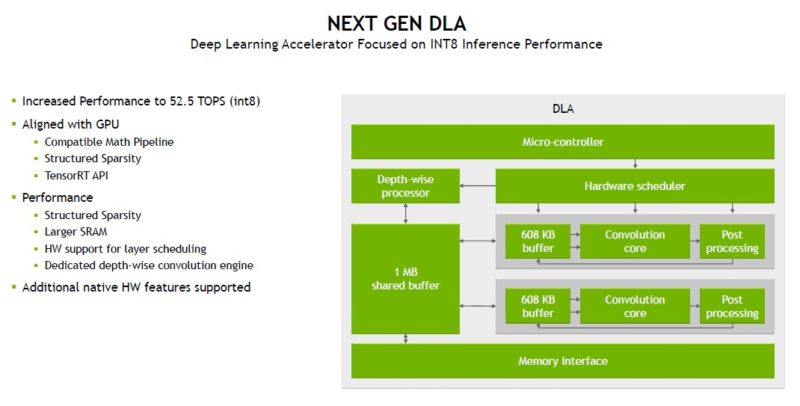

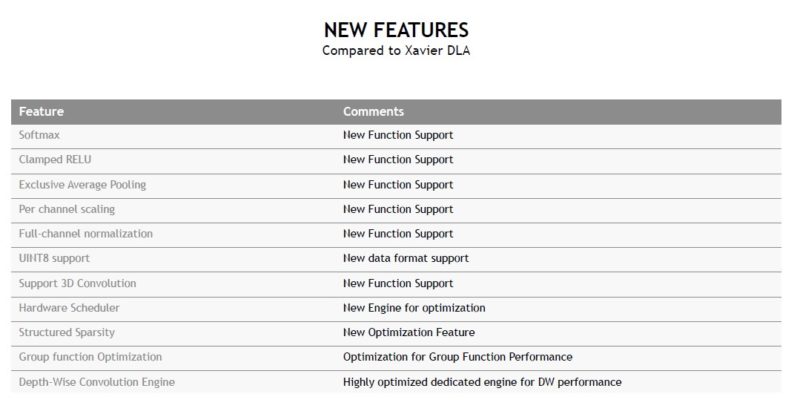

NVIDIA has its Deep Learning Accelerator (DLA) with an emphasis on INT8 for AI inferencing since that is a key capability of this line. Training happens on bigger chips and systems. The DLA is designed to run well-understood AI inference models at lower power and with lower area overhead. As a result, FP16 was removed in this generation in favor of INT8 optimization, with power efficiency as the reason.
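As a refresher on what running inference in INT8 involves, here is a minimal sketch of symmetric per-tensor quantization, where floating point values are mapped to 8-bit integers with a single scale factor. This is a generic textbook scheme we are using for illustration. It is not NVIDIA's DLA implementation or its calibration method, and the example weights are made up.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero tensor, where any scale works.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the INT8 codes."""
    return [q * scale for q in quantized]


# Made-up example weights, chosen so the largest magnitude maps to 127.
weights = [0.02, -0.5, 0.31, 1.27, -1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

for original, code, approx in zip(weights, q, restored):
    print(f"{original:+.3f} -> {code:+4d} -> {approx:+.3f}")
```

Each value is stored in one byte instead of two for FP16, and the math runs on integer units, which is where the power and area savings come from. The tradeoff is a small rounding error per value, bounded by half the scale factor.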

Here are the new Orin features:

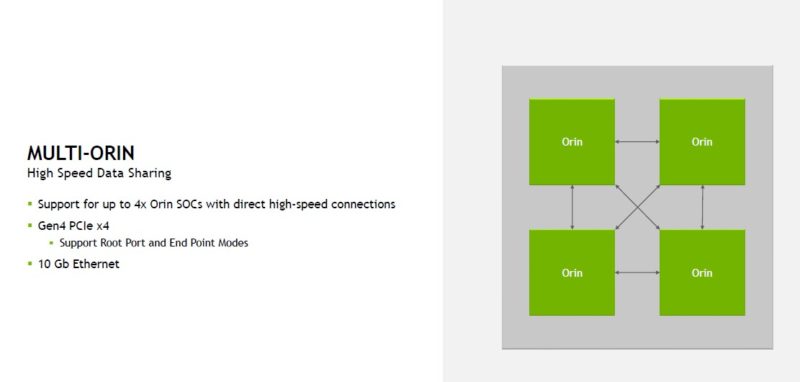

Something that I did not know before this talk was that there is a multi-Orin topology available. This can happen either with PCIe x4 links or with 10GbE.

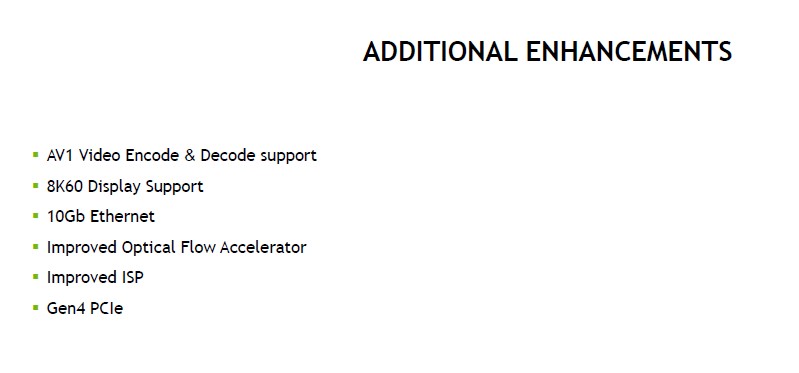

There has been a lot of talk about AV1 video encode/decode. Orin has this along with support for camera functions, and it even has 10GbE.

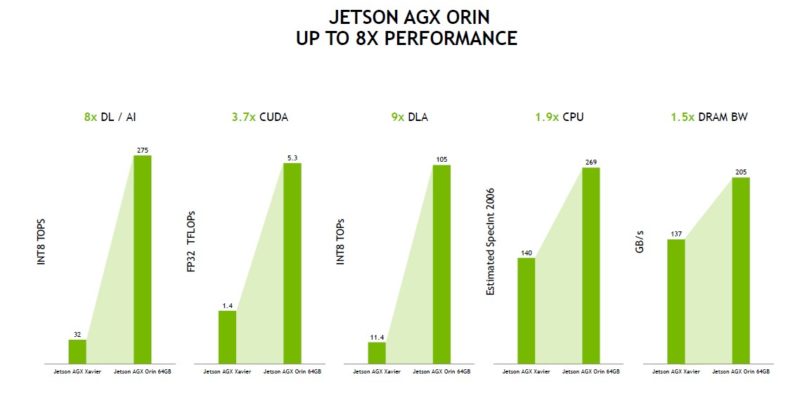

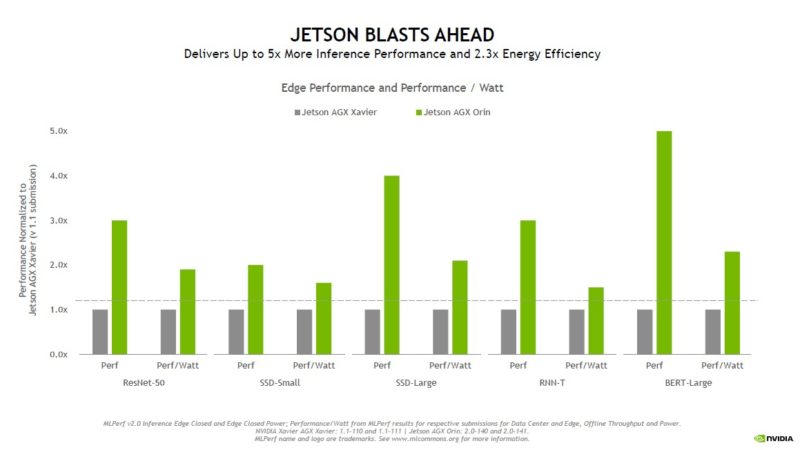

Performance is up with the more modern platform, but the main focus is really on accelerators on a platform like this.

In terms of energy efficiency, the new chips are faster and use more power, but they end up doing more work per watt.

The NVIDIA Jetson Orin is the line that will power robotics and has a 10W-60W TDP range.

For automotive, there is the Drive Orin Automotive. This is the one that scales to four Orin chips.

This is a big step function for NVIDIA and also an area where having more compute and energy efficiency is always welcome.

Final Words

We actually have a video for the NVIDIA Jetson AGX Orin that was going to go live next week but we are probably going to add in a bit from the HC34 presentation. This video was used as a bit of a test for a slightly new angle that took some time (and failures) to get together.

Stay tuned for more on this as it is a very exciting platform.