At SC20 in November 2020, we covered the NVIDIA Mellanox NDR 400Gbps InfiniBand announcement. Now NVIDIA is detailing its new switches as part of its ISC 21 launch announcements.

NVIDIA NDR InfiniBand 400Gbps Switches

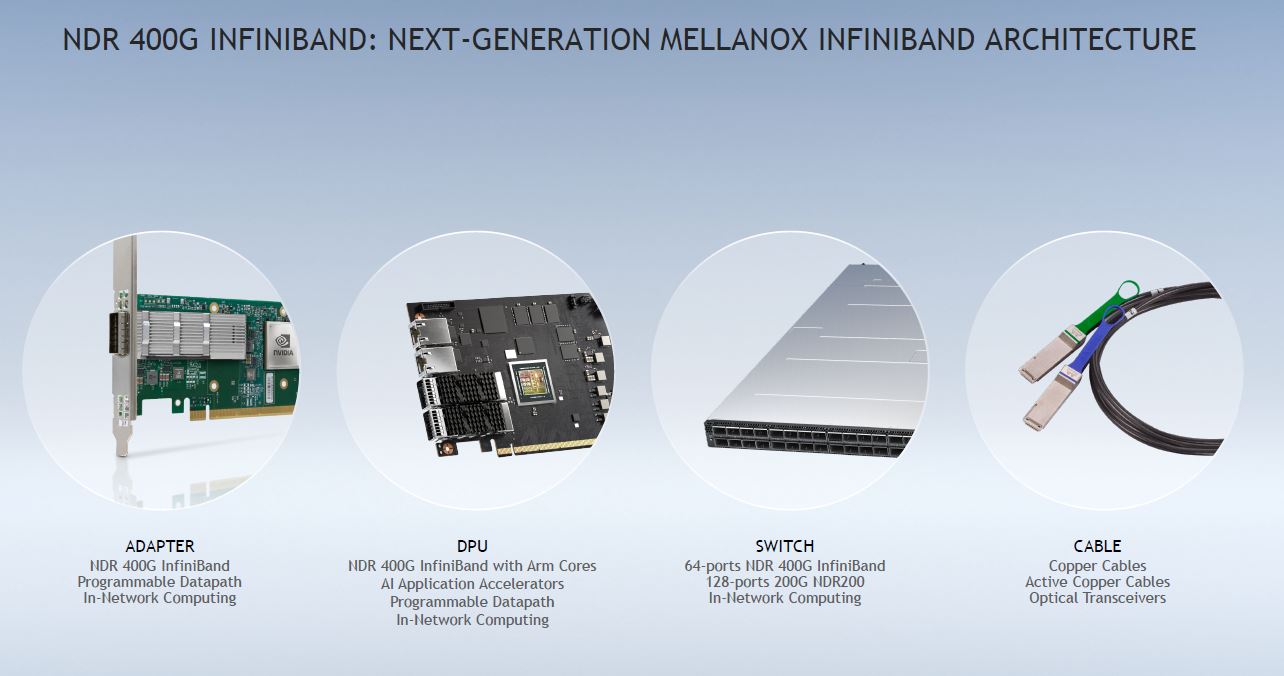

NVIDIA makes more than just switches. Its ConnectX adapters are expected to start delivering 400Gbps from the PCIe Gen5 x16 slots of next-gen servers. The company also makes BlueField DPUs, and an NDR BlueField DPU is on its future roadmap. Mellanox also made its own network cables, and that will continue now that it is part of NVIDIA. One of the most highly touted features of NDR is the ability to use copper cables even at 400Gbps NDR InfiniBand speeds.

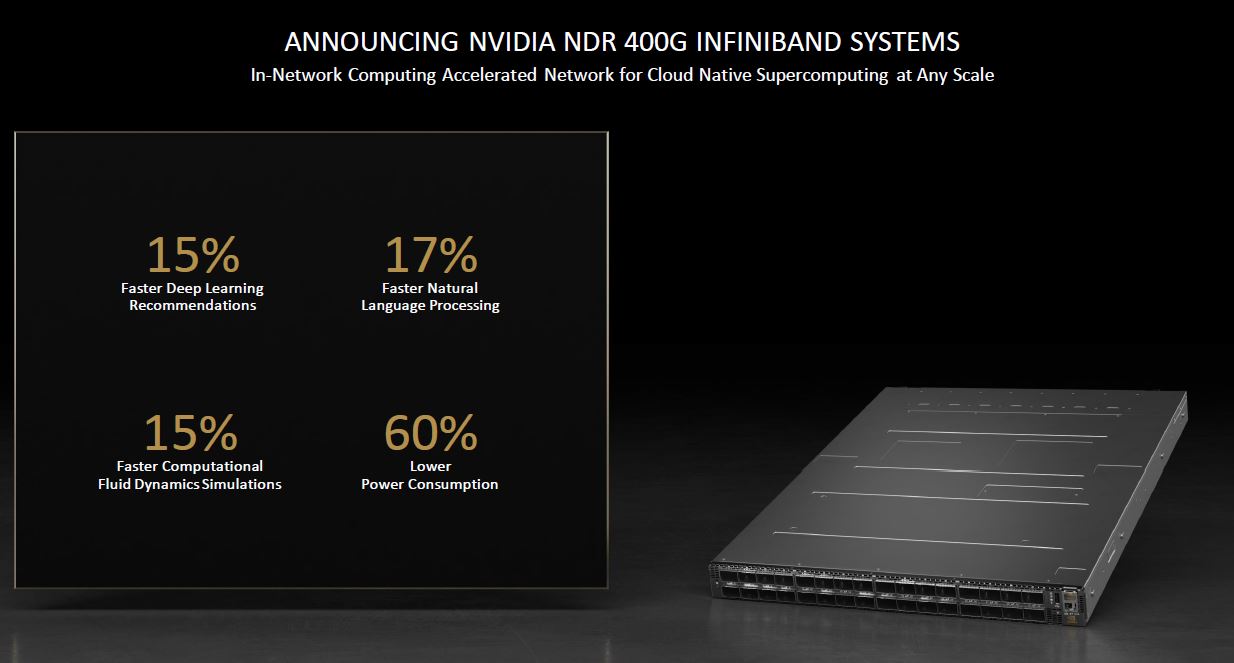

NVIDIA is now announcing its next-gen NDR InfiniBand switch systems. Aside from the faster fabric, one of the key features is that NVIDIA is looking to offload some distributed computing tasks onto the switch itself.
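To make the idea of switch-side compute a bit more concrete, here is a toy Python model of an in-network sum reduction, similar in spirit to the collective offloads NVIDIA's SHARP technology targets. It is a sketch under simplifying assumptions, not NVIDIA's implementation: the function names are hypothetical, the switch is modeled as a single aggregation point, and the host-based comparison assumes a classic ring allreduce.

```python
from typing import List


def switch_allreduce(host_vectors: List[List[float]]) -> List[List[float]]:
    """Toy sum-allreduce performed 'in the switch' (hypothetical model).

    Each host sends its vector up once; the switch sums the vectors
    element-wise and sends the same reduced vector back to every host.
    """
    reduced = [0.0] * len(host_vectors[0])
    for vec in host_vectors:  # aggregation happens at the switch
        for i, value in enumerate(vec):
            reduced[i] += value
    return [list(reduced) for _ in host_vectors]  # broadcast back down


def ring_allreduce_steps(num_hosts: int) -> int:
    """Communication steps per host for a host-based ring allreduce:
    (n - 1) reduce-scatter steps plus (n - 1) allgather steps."""
    return 2 * (num_hosts - 1)


# In the toy switch model each host does one send up and one receive down,
# no matter how many hosts participate.
SWITCH_ALLREDUCE_STEPS = 2

if __name__ == "__main__":
    print(switch_allreduce([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]))
    print(ring_allreduce_steps(8), "ring steps vs", SWITCH_ALLREDUCE_STEPS)
```

The takeaway is that the switch-side step count stays constant as hosts are added, while a host-based ring grows linearly. Real fabrics add latency, bandwidth, and aggregation-tree depth effects that this model ignores.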

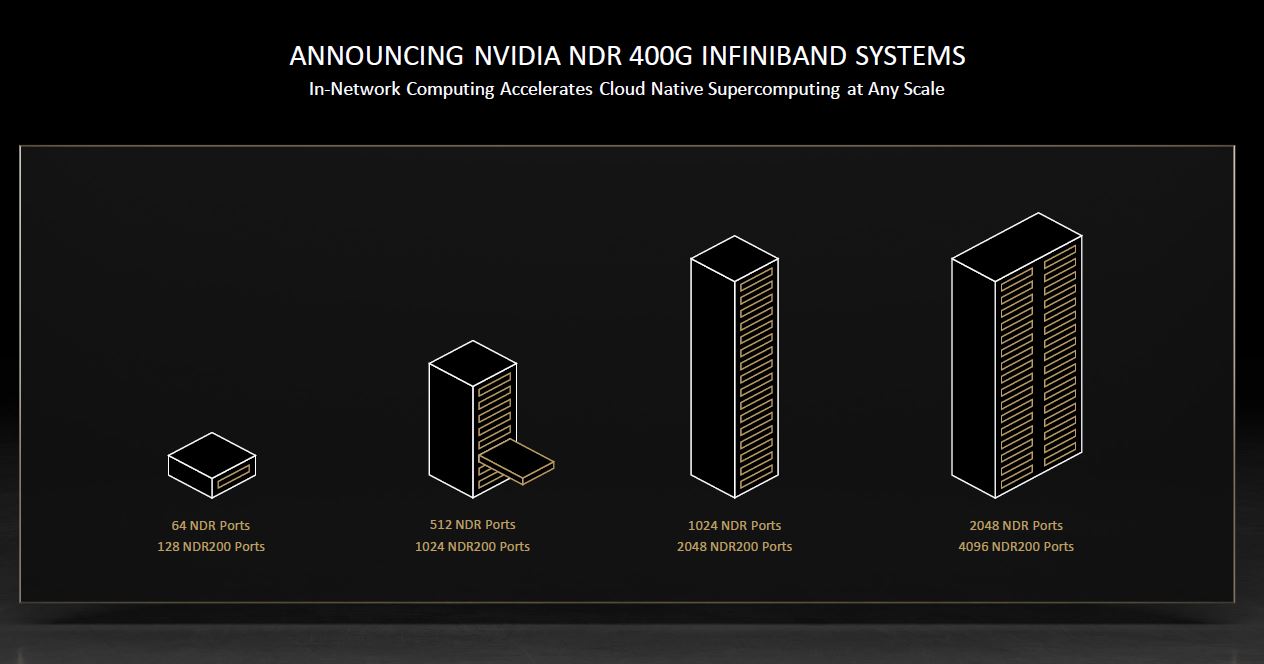

In addition to the 32-port model above, NVIDIA is showing 64-, 512-, 1024-, and 2048-port NDR systems. Each can double its effective port count by running its ports at 200Gbps NDR200 speeds.
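The port-doubling math is simple, but a quick sketch shows what it trades. This assumes each NDR port splits into exactly two NDR200 ports and uses nominal per-direction rates with no encoding overhead; the port counts are the ones listed above, and the helper names are hypothetical.

```python
NDR_GBPS = 400     # nominal rate of one NDR port
NDR200_GBPS = 200  # nominal rate of one NDR200 port after splitting


def ndr200_port_count(ndr_ports: int) -> int:
    """Ports available when every NDR port is split into NDR200 ports."""
    return ndr_ports * (NDR_GBPS // NDR200_GBPS)


def aggregate_gbps(ports: int, speed_gbps: int) -> int:
    """Total per-direction bandwidth across all ports, in Gbps."""
    return ports * speed_gbps


if __name__ == "__main__":
    for ndr_ports in (32, 64, 512, 1024, 2048):
        split = ndr200_port_count(ndr_ports)
        # Splitting doubles the port count but leaves total bandwidth unchanged.
        assert aggregate_gbps(split, NDR200_GBPS) == aggregate_gbps(ndr_ports, NDR_GBPS)
        print(f"{ndr_ports} x NDR -> {split} x NDR200, "
              f"{aggregate_gbps(ndr_ports, NDR_GBPS) / 1000:g} Tbps per direction")
```

Splitting does not add switching capacity; it spreads the same bandwidth across more endpoints, which is useful when nodes only need 200Gbps each.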

These larger fabric switches are important for keeping supercomputer and AI cluster topologies as flat as possible, which reduces latency.
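The latency argument largely comes down to how many switch tiers a packet has to cross. As a rough sketch, assume a non-blocking fat tree (folded Clos) built from identical switches of radix k, which supports at most 2(k/2)^L hosts with L tiers. The radix values below are illustrative and are not tied to specific NVIDIA products.

```python
def fat_tree_hosts(radix: int, tiers: int) -> int:
    """Max hosts in a non-blocking fat tree built from `radix`-port
    switches with `tiers` levels: 2 * (radix / 2) ** tiers."""
    return 2 * (radix // 2) ** tiers


def tiers_needed(radix: int, hosts: int) -> int:
    """Smallest number of switch tiers that can attach `hosts` endpoints."""
    tiers = 1
    while fat_tree_hosts(radix, tiers) < hosts:
        tiers += 1
    return tiers


def worst_case_switch_hops(tiers: int) -> int:
    """Switches crossed going up to the top tier and back down."""
    return 2 * tiers - 1


if __name__ == "__main__":
    hosts = 2048
    for radix in (40, 64):  # hypothetical switch radices for comparison
        t = tiers_needed(radix, hosts)
        print(f"radix {radix}: {t} tiers, up to {worst_case_switch_hops(t)} switch hops")
```

Under these assumptions, 2048 hosts need three tiers (up to five switch hops) with 40-port switches but only two tiers (up to three hops) with 64-port switches. Large modular systems push the same idea further by collapsing tiers into one chassis.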

Final Words

What is fun here is that NDR InfiniBand at 400Gbps is faster than a current-gen PCIe Gen4 x16 slot can handle. Technologies such as multi-host adapters could let one card use a PCIe Gen4 x16 link from each CPU in a current-gen dual-socket system to help utilize that bandwidth. NVIDIA has not publicly announced such cards, but we have seen them in previous generations.
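A quick back-of-the-envelope check shows why a single Gen4 x16 slot falls short. This sketch uses the standard PCIe line rates (16 GT/s per lane for Gen4, 32 GT/s for Gen5) and 128b/130b encoding, but ignores packet-level protocol overhead, so real throughput is somewhat lower. The "2 x Gen4 x16" case assumes a multi-host style adapter that can aggregate one x16 link from each socket.

```python
NDR_GBPS = 400


def pcie_gbps(gt_per_s: float, lanes: int) -> float:
    """Per-direction PCIe bandwidth in Gbps after 128b/130b line encoding.

    Ignores packet (TLP/DLLP) overhead, so real throughput is lower still.
    """
    return gt_per_s * lanes * 128 / 130


if __name__ == "__main__":
    gen4_x16 = pcie_gbps(16, 16)  # PCIe Gen4: 16 GT/s per lane
    gen5_x16 = pcie_gbps(32, 16)  # PCIe Gen5: 32 GT/s per lane
    print(f"Gen4 x16: {gen4_x16:.1f} Gbps, enough for NDR? {gen4_x16 >= NDR_GBPS}")
    print(f"2 x Gen4 x16: {2 * gen4_x16:.1f} Gbps, enough? {2 * gen4_x16 >= NDR_GBPS}")
    print(f"Gen5 x16: {gen5_x16:.1f} Gbps, enough? {gen5_x16 >= NDR_GBPS}")
```

Roughly 252Gbps per direction for one Gen4 x16 link is well under 400Gbps, while either two Gen4 x16 links or a single Gen5 x16 link clears it with some headroom.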

We know many of our readers use the latest-generation technology at work and previous-generation technology, including InfiniBand, in their labs, so we hope readers look forward to NDR regardless of where they encounter it.

At some point they are going to run out of letters in the alphabet. Why not call it Infiniband400 or something?

What is the signaling type on an insanely high-bandwidth copper cable like this? Are they doing QAM-over-copper already? Or is it PAM4 like GDDR6X?

0.5 m at 400G is not much copper… look at the LinkX presentation from GTC.

I hope they are going to sell a behemoth load of them and then we’ll start to see used 100Gbits/sec hardware on eBay at more reasonable prices :-)