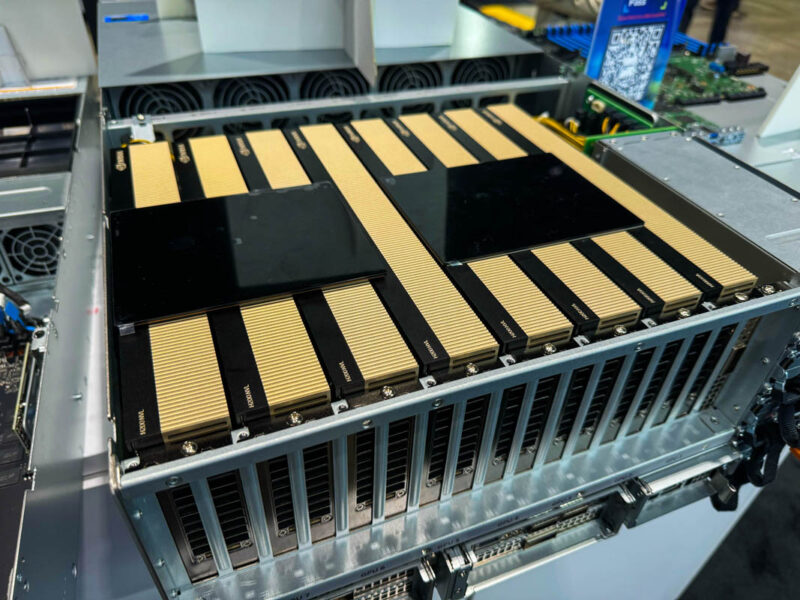

NVIDIA showed off its new PCIe form-factor GPU, the NVIDIA H200 NVL, at OCP Summit 2024. We saw these GPUs in a number of MGX systems, as NVIDIA focuses on bringing lower-cost, lower-power (600W maximum TDP) inference-focused solutions to market.

NVIDIA H200 NVL 4-Way Shown at OCP Summit 2024

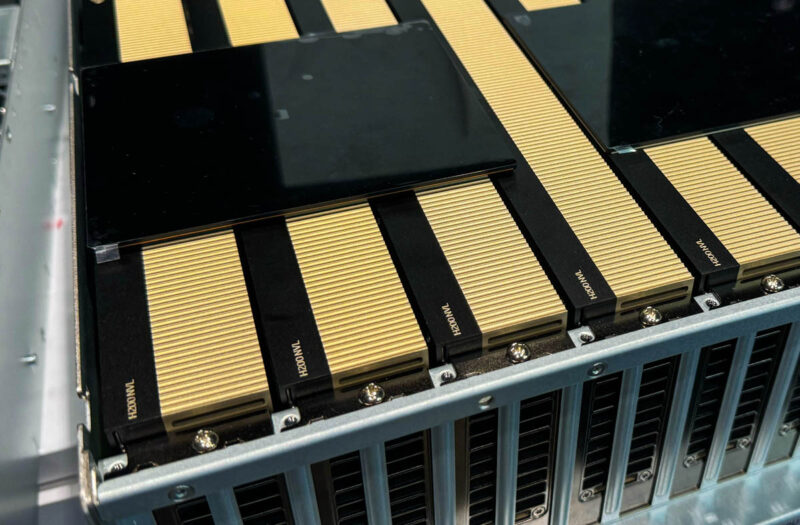

The NVIDIA H200 NVL was shown in a number of systems. Note that these cards are marked “H200 NVL,” not just “H200” as we might have seen with a card like the NVIDIA A100. The other big feature is a 4-way NVLink bridge connecting the GPUs, a nice jump over a 2-way link. With the bridge, the GPUs keep their PCIe connection but also gain an NVLink interconnect without relying on NVLink switches, which lowers both power consumption and cost.

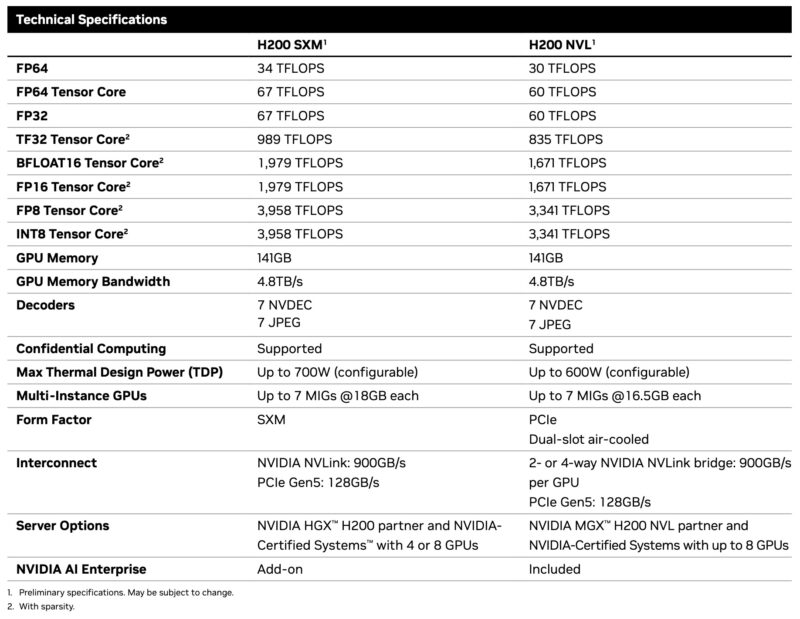

NVIDIA lists the H200 NVL on its website. Per-card performance is somewhat lower than that of the H200 SXM parts, since the PCIe cards are limited to 600W. NVIDIA also says that NVIDIA AI Enterprise is included with the H200 NVL cards.

Given the 141GB spec of each card, we get 564GB of HBM across the four cards, which is formidable for inferencing workloads.
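The capacity math above is simple multiplication. As a minimal sketch, the snippet below computes aggregate HBM for one NVLink-bridged group; the 141GB per-card figure and the group size of four come from the article, while the function and constant names are illustrative only.

```python
# Aggregate HBM across one NVLink-bridged group of H200 NVL cards.
# Per-card capacity (141 GB) and group size (4) are taken from the article;
# names here are illustrative, not from any NVIDIA tool.
HBM_PER_CARD_GB = 141
CARDS_PER_NVLINK_GROUP = 4


def group_hbm_gb(cards: int = CARDS_PER_NVLINK_GROUP,
                 per_card_gb: int = HBM_PER_CARD_GB) -> int:
    """Return total HBM (GB) across `cards` bridged GPUs."""
    return cards * per_card_gb


print(group_hbm_gb())  # 564
```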

Final Words

Servers that hold eight double-width GPUs have been around for many years, so two of the 4-GPU H200 NVL groups can be installed in one of these server designs.
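To make the slot arithmetic concrete, here is a hypothetical helper that counts how many complete 4-way H200 NVL groups fit in a server with a given number of double-width slots, along with the resulting HBM and worst-case GPU power. It assumes every card runs at the 600W maximum TDP and that only full 4-card groups are counted; leftover slots are ignored for simplicity.

```python
# Hypothetical sizing helper for 4-way H200 NVL groups in a PCIe server.
# Assumptions: 141 GB HBM and 600 W max TDP per card (from the article);
# only complete 4-card bridged groups are counted, leftover slots ignored.
HBM_PER_CARD_GB = 141
MAX_TDP_PER_CARD_W = 600
CARDS_PER_GROUP = 4


def server_config(double_width_slots: int) -> dict:
    """Summarize how many bridged groups a server can host."""
    groups = double_width_slots // CARDS_PER_GROUP  # full 4-way groups only
    cards = groups * CARDS_PER_GROUP
    return {
        "nvlink_groups": groups,
        "cards": cards,
        "total_hbm_gb": cards * HBM_PER_CARD_GB,
        "max_gpu_tdp_w": cards * MAX_TDP_PER_CARD_W,
    }


print(server_config(8))
# {'nvlink_groups': 2, 'cards': 8, 'total_hbm_gb': 1128, 'max_gpu_tdp_w': 4800}
```

For the common eight-slot design, this yields two bridged groups, each of which behaves as its own NVLink island rather than one eight-GPU NVLink domain.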

These cards are certainly a step up from the NVIDIA L40S in performance, but also in cost and power consumption. Still, for organizations that use PCIe servers to scale inference at lower cost, without NVLink switches, the NVIDIA H200 NVL can make a lot of sense. Some organizations also prefer deploying PCIe GPUs, even higher-spec 600W models, because they fit neatly into many server designs.