We are in San Jose, California today, covering the NVIDIA GTC 2024 keynote live. This is going to be a big year for the company as its current Hopper and Ampere generation parts are getting older in the market, and there is enormous momentum behind its AI components. GTC 2024 is the company’s opportunity to put distance between its offerings and those of all the competitors who have been trying to take share. There is going to be a TON here, so get ready for a tidal wave of announcements.

We are going to be covering this live, so please excuse typos. Here is the live stream:

NVIDIA GTC 2024 Keynote Coverage New CPUs, GPUs, and More

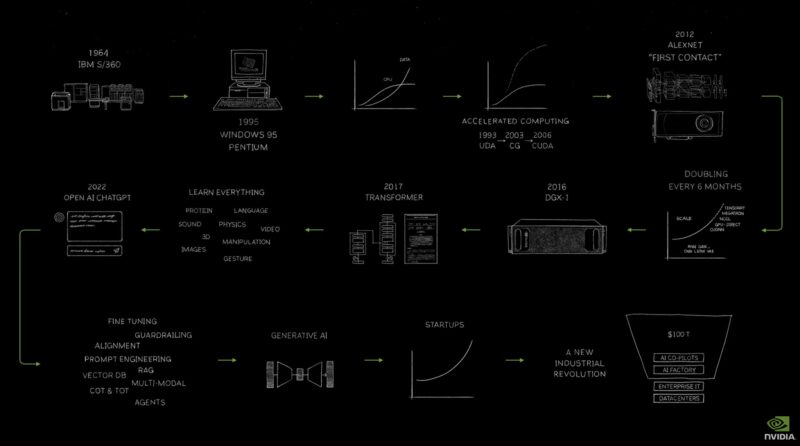

Jensen’s keynote is starting with the company’s journey over the last two decades or so.

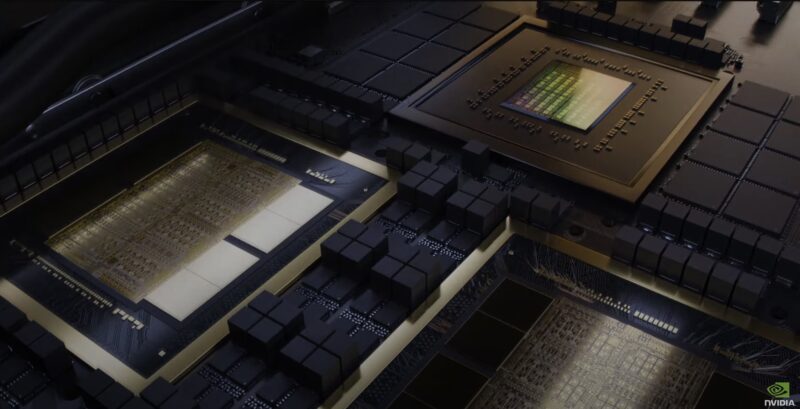

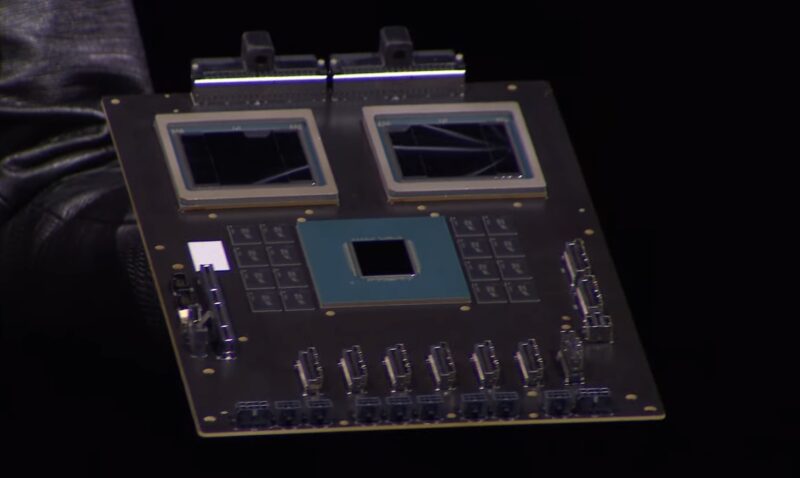

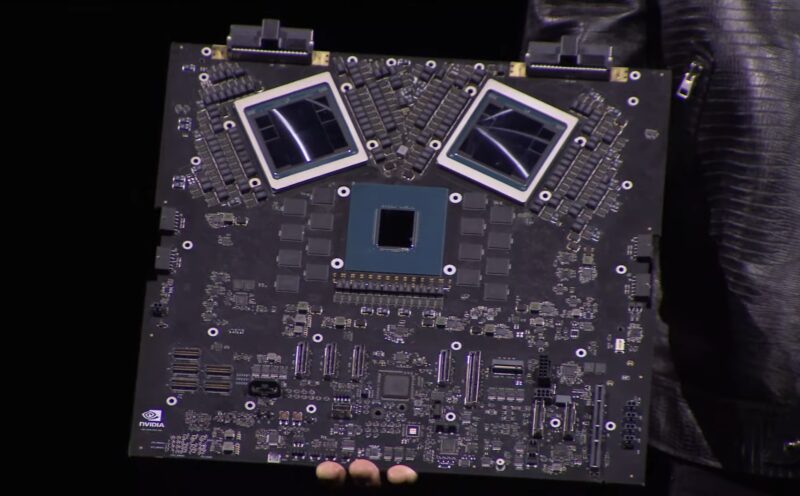

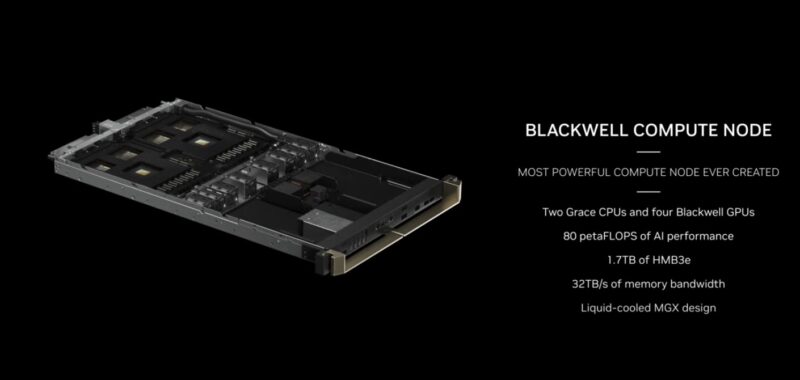

The NVIDIA Grace Blackwell is making an appearance early in the keynote. Here we can see an Arm CPU flanked by DRAM packages, and two linked next-gen GPUs. Each of the Blackwell GPUs seems to have eight HBM packages and two compute dies in this image.

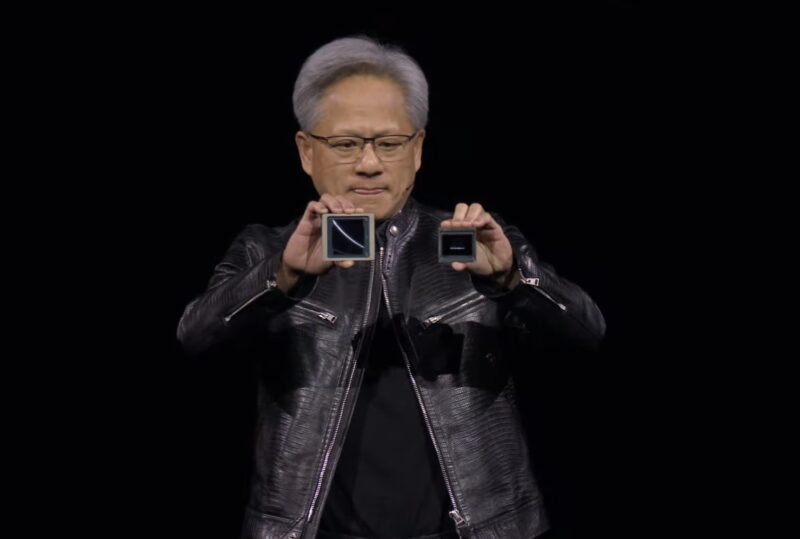

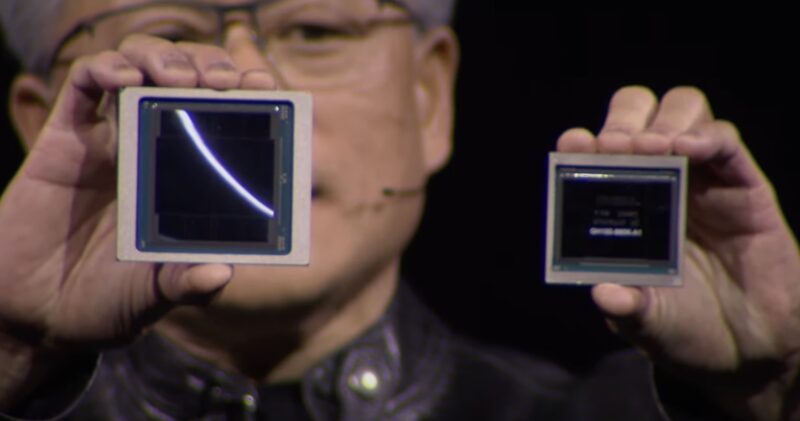

This is Blackwell and Hopper next to one another.

Here is a close up.

NVIDIA has a Blackwell GPU design for existing DGX/HGX systems. The DGX B100 and HGX B100 are for x86 servers. The GPU is designed for ~700W so it can work in existing systems.

Here is the NVIDIA Grace Blackwell with two Blackwell GPUs, and one Grace CPU with memory for each. There is NVLink at the top of the board and PCIe at the bottom of the board.

This is the future of Arm and NVIDIA. Here is the development board below with the production board above.

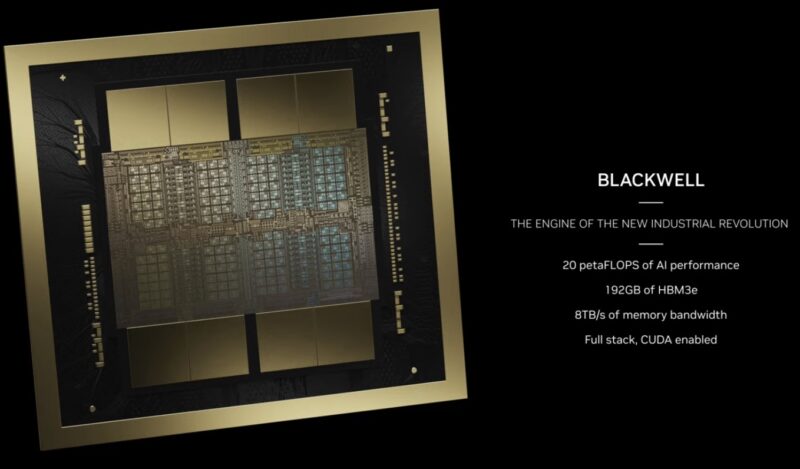

Here are specs on the Blackwell GPU with 192GB of HBM3e, which sounds like 8x 8-hi HBM stacks.
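As a quick sanity check on that guess, here is a minimal sketch of the capacity math. The 24Gb-per-die density is our assumption, not something NVIDIA stated on stage, and the function name is just for illustration.

```python
# Back-of-the-envelope check: does 8x 8-hi HBM3e add up to 192GB?
# Assumption (not from the keynote): each DRAM die in a stack is 24Gb (3GB).
GBIT_PER_DIE = 24      # assumed die density, in gigabits
DIES_PER_STACK = 8     # "8-hi"
STACKS_PER_GPU = 8     # HBM packages around the GPU


def hbm_capacity_gb(stacks, dies_per_stack, gbit_per_die):
    """Total HBM capacity in gigabytes (8 bits per byte)."""
    return stacks * dies_per_stack * gbit_per_die // 8


capacity_gb = hbm_capacity_gb(STACKS_PER_GPU, DIES_PER_STACK, GBIT_PER_DIE)
print(f"{capacity_gb} GB of HBM3e")
```

With those assumed numbers the total matches the 192GB on the slide. Other stack heights or die densities could reach the same figure, so this does not confirm the configuration.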

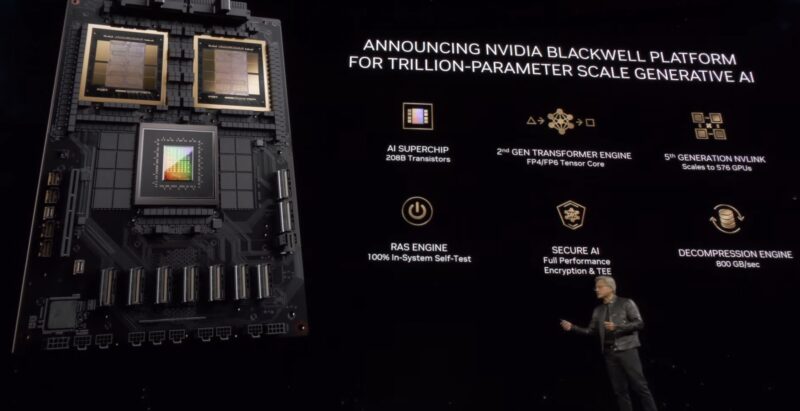

There are new features such as a new transformer engine that can handle FP4 and FP6, 5th Gen NVLink, RAS, Secure AI, and a decompression engine.

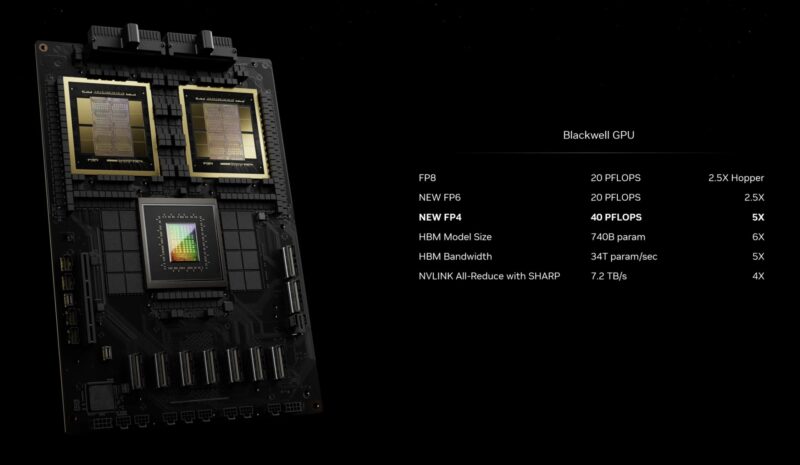

FP4 and FP6 add a ton of performance over Hopper.
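To give a feel for how coarse 4-bit floating point is, here is a rough sketch that snaps a small block of weights onto an FP4 grid. We are assuming an E2M1-style layout (1 sign bit, 2 exponent bits, 1 mantissa bit) with a single shared scale per block. The keynote did not detail the exact encoding or scaling scheme, so `FP4_MAGNITUDES` and `quantize_fp4` are illustrative, not NVIDIA's implementation.

```python
# Assumption: an E2M1-style FP4 format whose representable magnitudes are
# the eight values below. Real hardware details may differ.
FP4_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_fp4(values):
    """Scale a block so its largest magnitude maps to 6.0, then snap each
    value to the nearest representable FP4 magnitude (ties go to the
    smaller magnitude here; hardware rounding rules may differ)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / FP4_MAGNITUDES[-1] if max_abs > 0 else 1.0
    quantized = []
    for v in values:
        target = abs(v) / scale
        nearest = min(FP4_MAGNITUDES, key=lambda m: abs(m - target))
        quantized.append((nearest if v >= 0 else -nearest) * scale)
    return quantized, scale


weights = [0.12, -0.6, 0.31, 0.05]
approx, scale = quantize_fp4(weights)
for original, rounded in zip(weights, approx):
    print(f"{original:+.2f} -> {rounded:+.2f}")
```

Each value takes 4 bits instead of the 8 bits of FP8, so in principle twice as many values move through the same memory bandwidth and math units. The tradeoff is the rounding error visible in the printed output.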

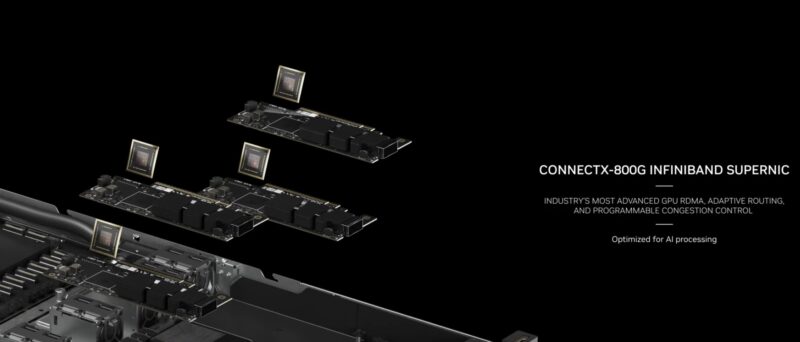

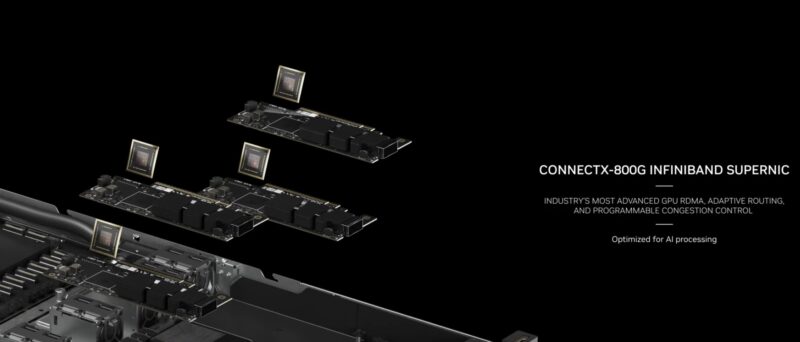

NVIDIA also announced the ConnectX-8 / ConnectX-800G InfiniBand. 800Gbps networking is here, so this has to be PCIe Gen6.
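The PCIe Gen6 inference comes from simple bandwidth math. Here is a small sketch comparing raw link rates against an 800Gbps (gigabits per second) port. It assumes a standard x16 slot and ignores encoding and protocol overhead, so real usable bandwidth is lower than these figures.

```python
# Raw per-lane signaling rates in GT/s (roughly Gbps per lane, per direction,
# before encoding and protocol overhead).
PCIE_GTS_PER_LANE = {"Gen4": 16, "Gen5": 32, "Gen6": 64}
NIC_GBPS = 800   # one 800Gbps network port
LANES = 16       # assumption: a standard x16 slot


def raw_link_gbps(gen, lanes=LANES):
    """Raw one-direction link rate in Gbps for a PCIe generation and width."""
    return PCIE_GTS_PER_LANE[gen] * lanes


for gen in PCIE_GTS_PER_LANE:
    link = raw_link_gbps(gen)
    verdict = "enough" if link >= NIC_GBPS else "too slow"
    print(f"PCIe {gen} x{LANES}: {link} Gbps raw -> {verdict} for {NIC_GBPS} Gbps")
```

A Gen5 x16 link tops out at 512Gbps raw, which is why a single x16 slot would need Gen6. A wider Gen5 connection (for example 32 lanes) would also get there on paper, so treat Gen6 as the likely answer rather than the only one.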

Interestingly, NVIDIA is still using BlueField-3 DPUs, not a newer generation. This feels strange given that everything else saw a revision.

Here is the 800Gbps NVIDIA Quantum InfiniBand switch.

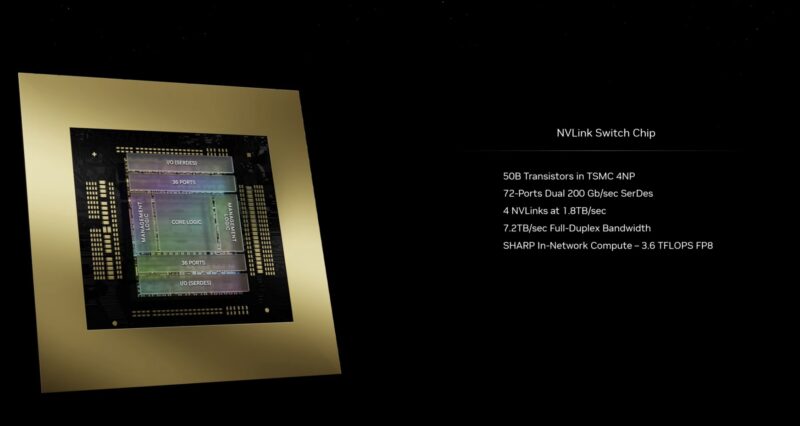

Here is the new NVLink switch chip. This is a big deal as others in the industry do not have a scaling solution like this.

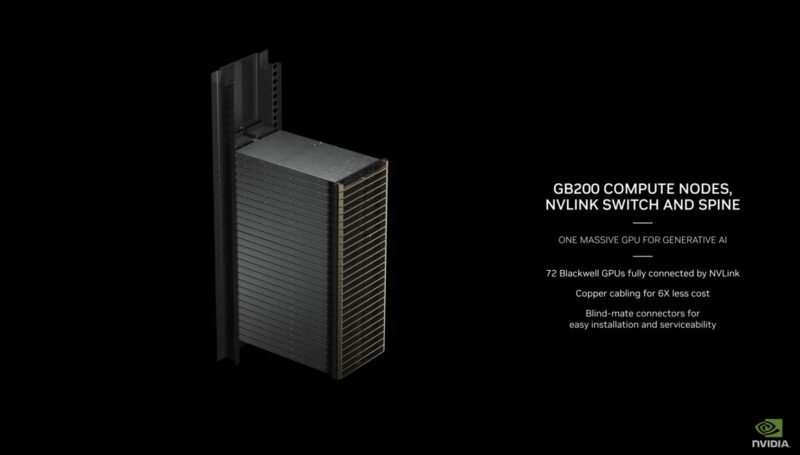

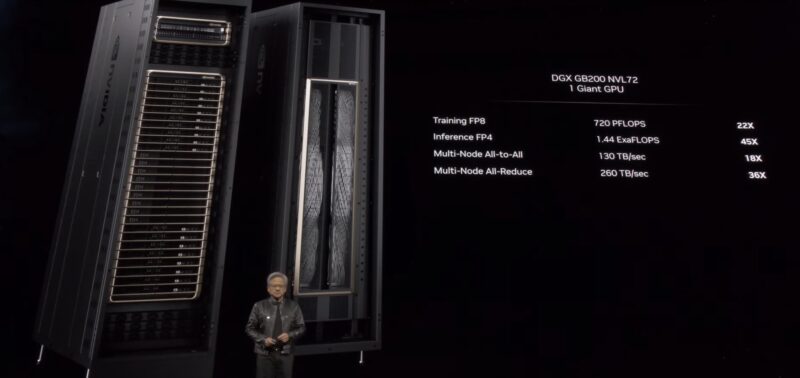

This is the new NVIDIA DGX GB200 NVL72, with 72 Blackwell GPUs connected by NVLink.

This is a liquid-cooled, NVLink-enabled DGX. It is way bigger than current DGX systems, at around 120kW.

Here is the NVLink spine, which uses copper rather than optical links, saving around 20kW of power.
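To put those rack numbers in perspective, here is a rough sketch using only the figures quoted in this coverage: 72 GPUs, two GPUs per Grace CPU, around 120kW for the rack, and around 20kW saved by the copper spine. The per-GPU figure is a share of total rack power, which also covers CPUs, switches, and everything else, so it is not a GPU TDP.

```python
GPUS_PER_RACK = 72
GPUS_PER_GB200 = 2        # two Blackwell GPUs per Grace CPU, as described above
RACK_POWER_KW = 120       # "around 120kW", approximate
COPPER_SAVINGS_KW = 20    # "around 20kW", approximate

# Number of Grace CPUs implied by pairing two GPUs with each CPU.
grace_cpus = GPUS_PER_RACK // GPUS_PER_GB200

# Share of total rack power per GPU (includes CPUs, switches, and the rest).
kw_per_gpu_share = RACK_POWER_KW / GPUS_PER_RACK

# Copper savings expressed as a fraction of the rack's power budget.
savings_fraction = COPPER_SAVINGS_KW / RACK_POWER_KW

print(f"Grace CPUs in the rack: {grace_cpus}")
print(f"Rack power per GPU (all-in share): {kw_per_gpu_share:.2f} kW")
print(f"Copper savings vs. rack power: {savings_fraction:.0%}")
```

The takeaway is that the copper savings are roughly a sixth of the rack's power budget, which helps explain why NVIDIA made a point of it on stage.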

There are also switches in the NVIDIA GB200 chassis with two of the GB200 complexes.

Here is the overview of the NVIDIA Blackwell Compute Node.

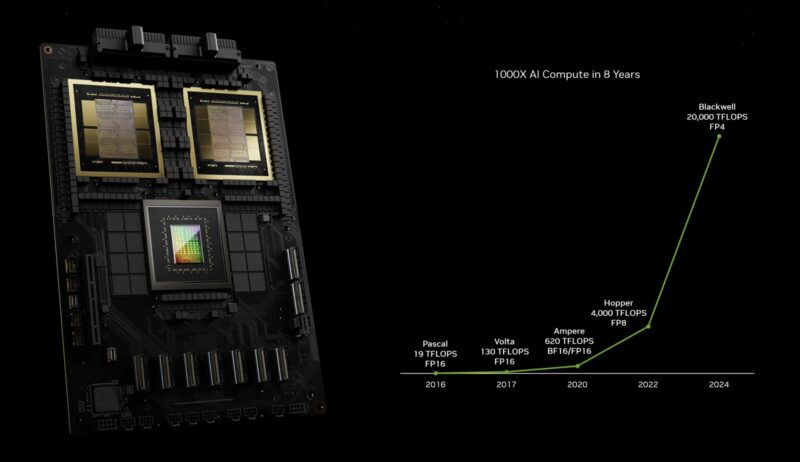

Here is the impact of just the GPUs on performance from Pascal. NVIDIA has done a lot of things like adding tensor cores, changing precision, and more to achieve this, in addition to process shrinks and just cramming more transistors onto a chip.

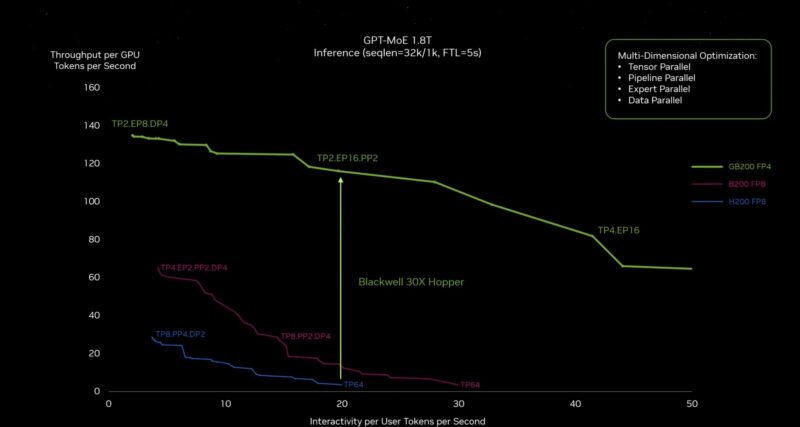

NVIDIA says Blackwell is around 30x the performance of Hopper because of the new NVSwitch and the new FP4 transformer engine. The purple line would be what would happen if NVIDIA just built a bigger Hopper with more transistors.

If you are another company making AI accelerators about the size of NVIDIA’s, this should make you nervous. AWS, Google, Microsoft, and the OEMs/ODMs are all Blackwell customers.

NVIDIA just showed Apple Vision Pro working in Omniverse. That will unlock a big market for Apple.

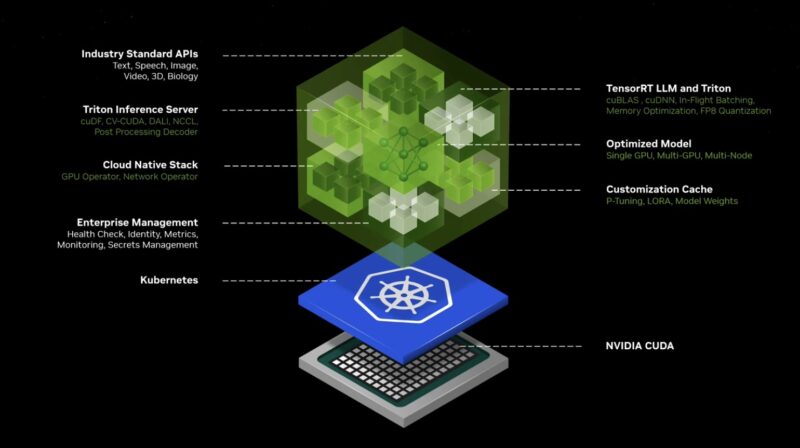

NVIDIA is packaging up pre-trained models with their dependencies as easy-to-deploy microservices it calls NVIDIA Inference Microservices, or NIMs. This is not just CUDA. This is making models easy to implement.

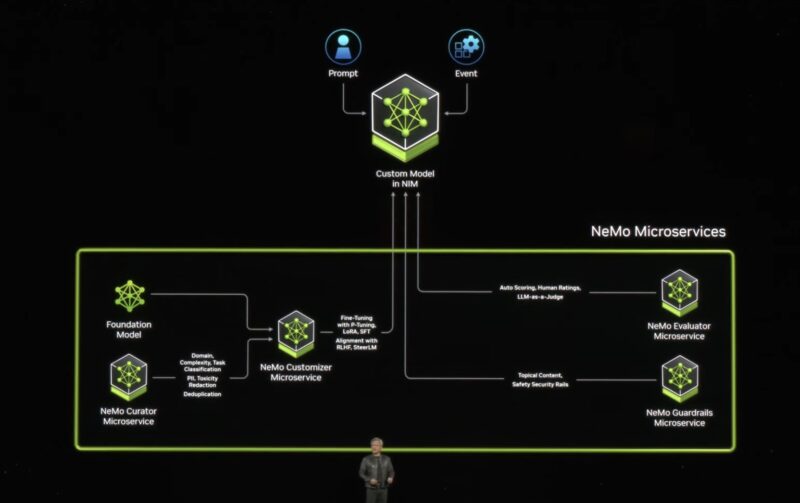

NVIDIA will help fine-tune or customize models for companies and applications.

With the NVIDIA DGX Cloud, NVIDIA wants to become the AI foundry, or like TSMC for AI.

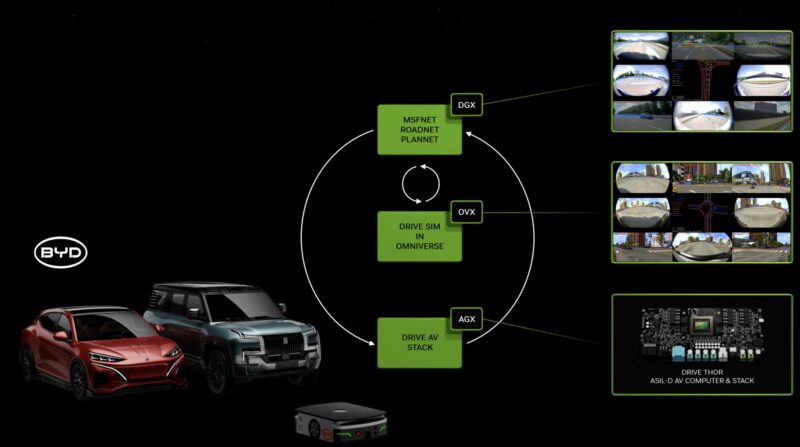

NVIDIA Drive Thor is going to be adopted by BYD among others.

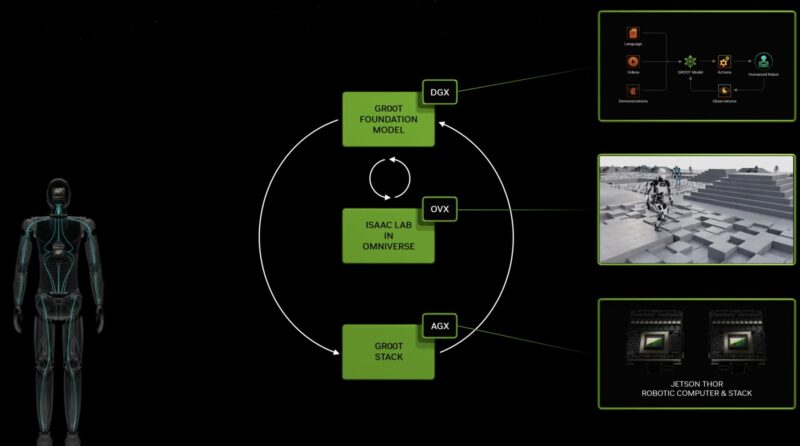

NVIDIA Jetson Thor is for robotics, succeeding the NVIDIA Jetson Orin. One of the big bets NVIDIA is making is on this next generation being able to control humanoid robots. NVIDIA is building a software stack for this as well.

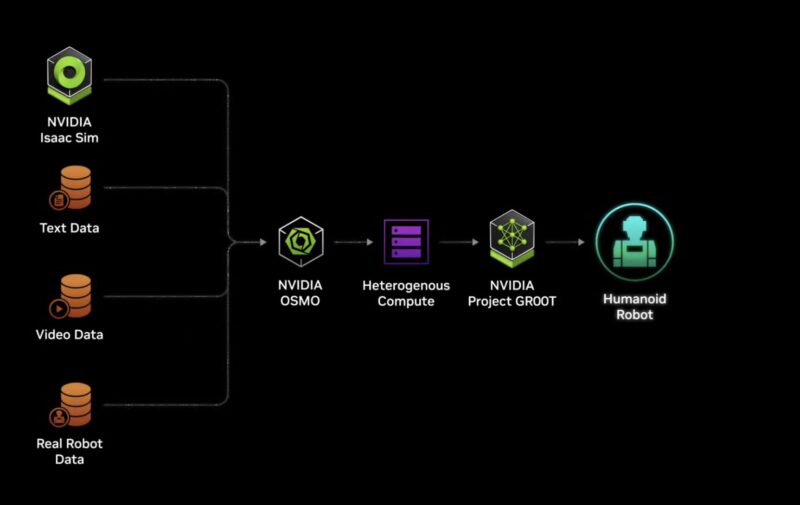

The world is made for humans, so the idea is that new humanoid robots will use NVIDIA Thor, with the software from NVIDIA Project GR00T to train and manage them.

Jensen got on stage with humanoid robots powered by the company, including smaller robots from Disney that learned to walk in NVIDIA Isaac Sim.

NVIDIA is trying to bridge the digital world to the physical world via robotics, going all the way from the company’s data center products to the edge. That is the vision from this GTC.

Final Words

NVIDIA has now outlined its new portfolio across networking, high-speed AI interconnect, data center CPUs, GPUs, clusters, edge AI, robotics, healthcare, and more. Over the next few weeks and months, all of NVIDIA’s competitors will need to respond. For years we have discussed how hard it is to compete against NVIDIA by doing the same or something similar. That is why we have said startups like Cerebras might have a future, but it would be hard for an AI startup like Graphcore to compete in a similar form factor.

If you were wondering about last weekend’s Jetson Arm + NVIDIA piece, hopefully the direction for NVIDIA has become clearer.