NVIDIA GTC 2017 comes on the heels of a blockbuster earnings release from NVIDIA, buoyed in large part by its data center group. It is well known that AI, deep learning, and VR are the current-generation killer applications. NVIDIA is currently leading in all three areas, and at GTC 2017 we expected to hear more about the future from NVIDIA’s CEO.

Talking Compute Scaling

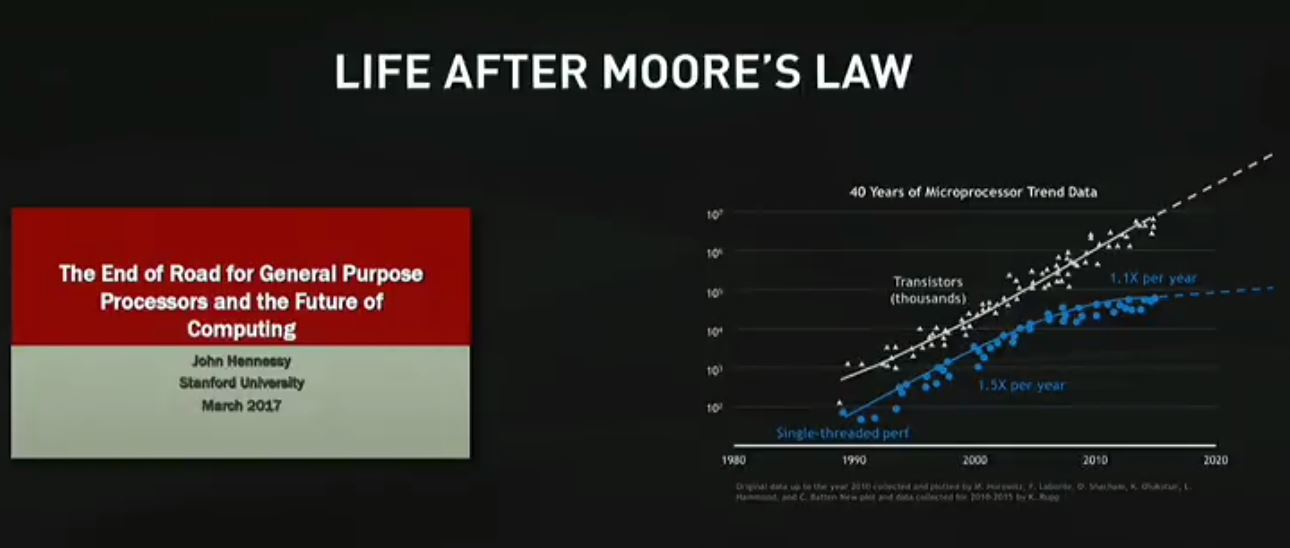

The first point is that Moore’s Law is dead. For those unaccustomed to these presentations, NVIDIA’s chief competition is Intel, so the company takes a shot at Intel at each show.

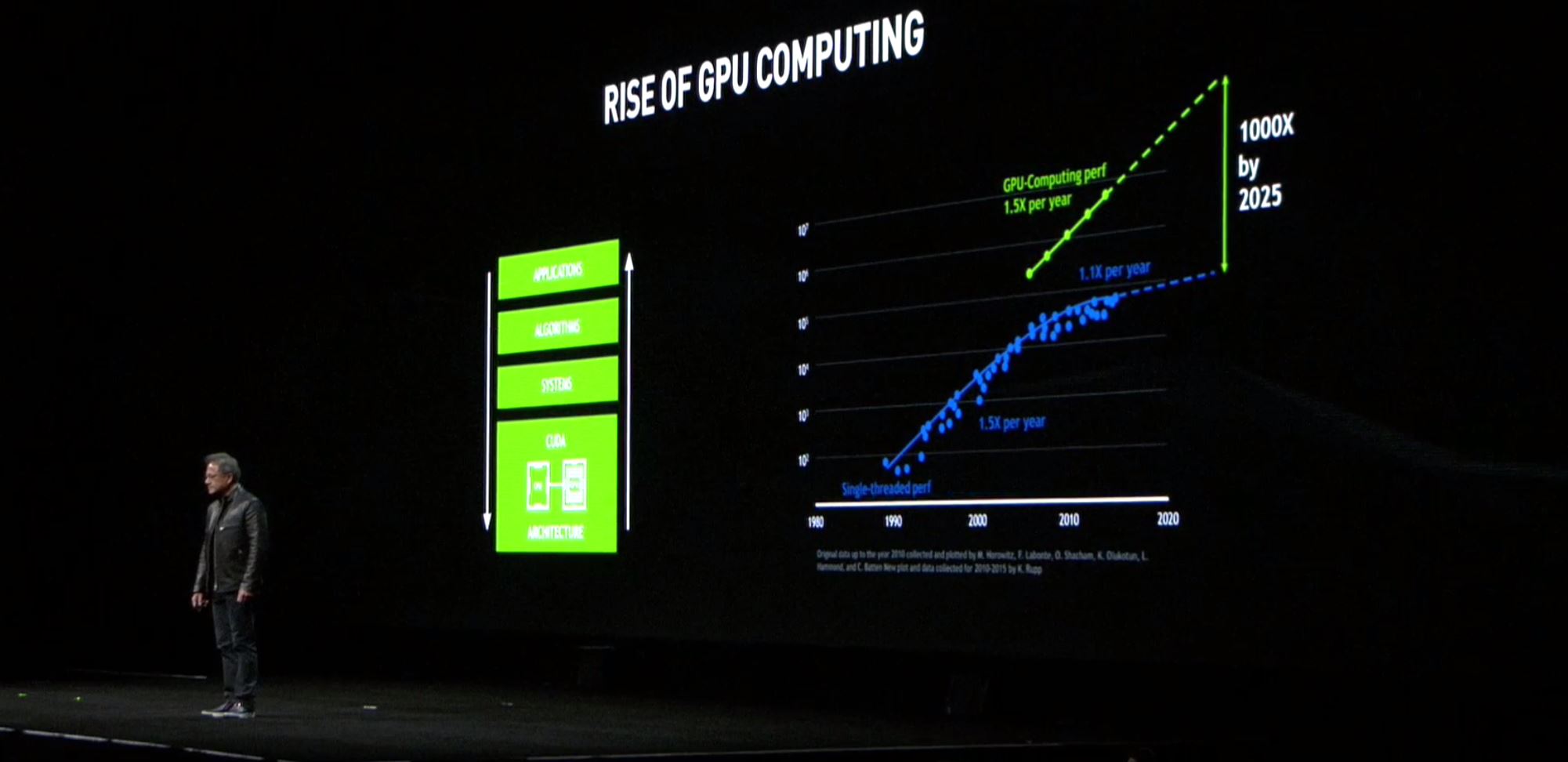

One of the big reasons behind that claim is that extracting parallelism through CUDA and GPU architectures leads to enormous performance gains. With each GPU generation, we see performance gains that back up these claims.

Although we do not yet see GPUs running general-purpose software, next-generation compute-heavy workloads are running on GPUs.

Project Holodeck

NVIDIA is making a major push to get its products into design collaboration tools and virtual reality. Project Holodeck is one such effort.

Our highlight from the demo was seeing the Koenigsegg walkaround.

If you have seen VR showroom demos, this is an amazing capability. You can even use capabilities you would not have in a physical showroom.

This is a very cool demo.

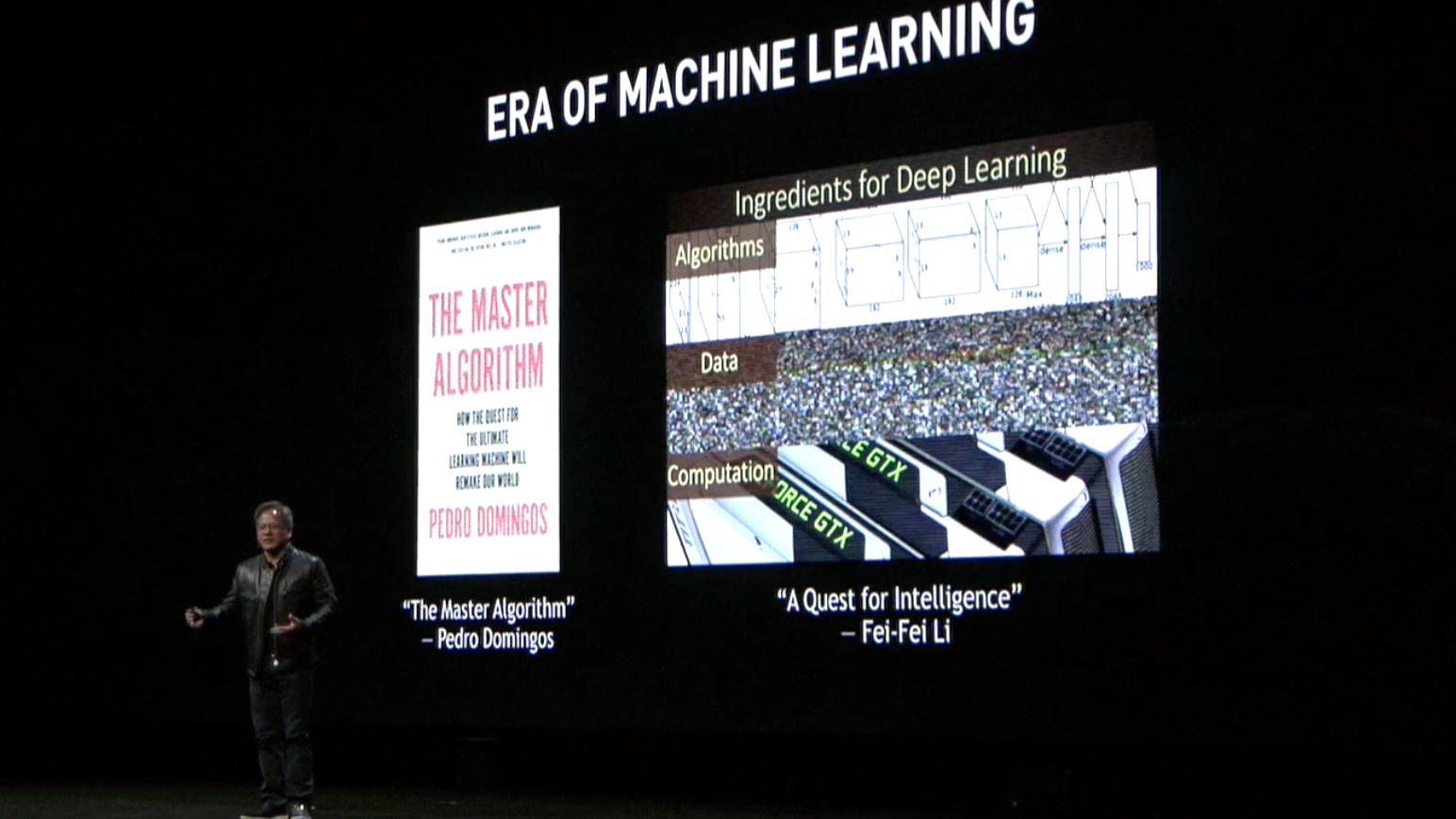

The Era of Machine Learning

If you want the big revenue driver for NVIDIA over the past few years, it is machine learning/AI.

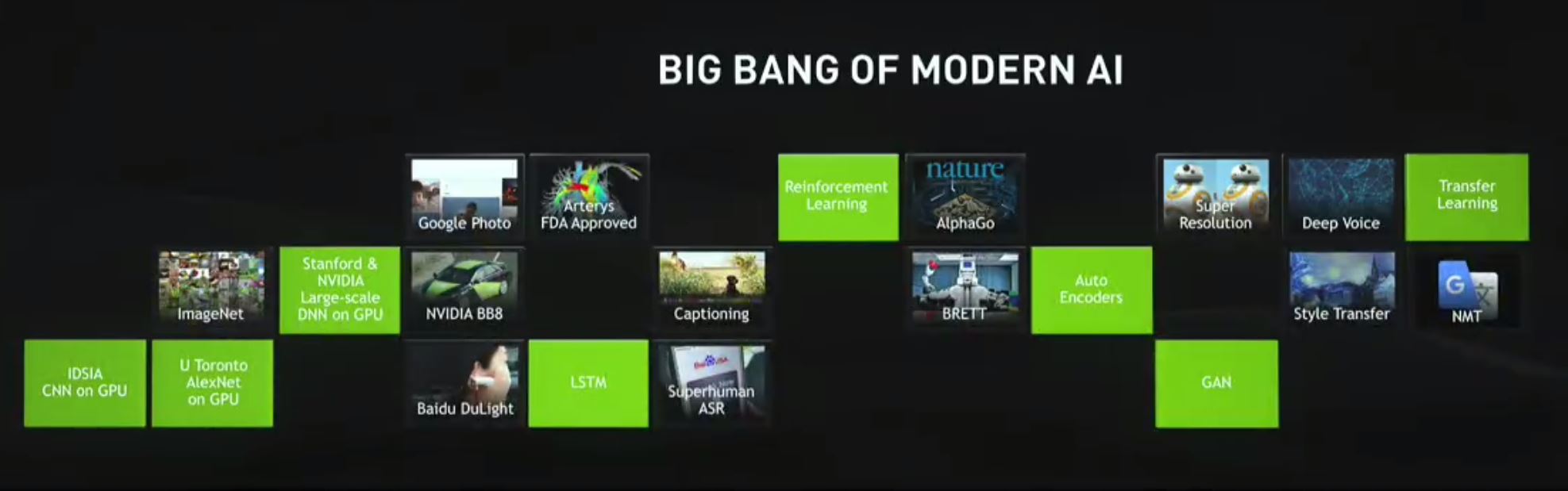

The keynote continued by showing the amazing pace of modern AI and highlighting some of the new techniques and capabilities.

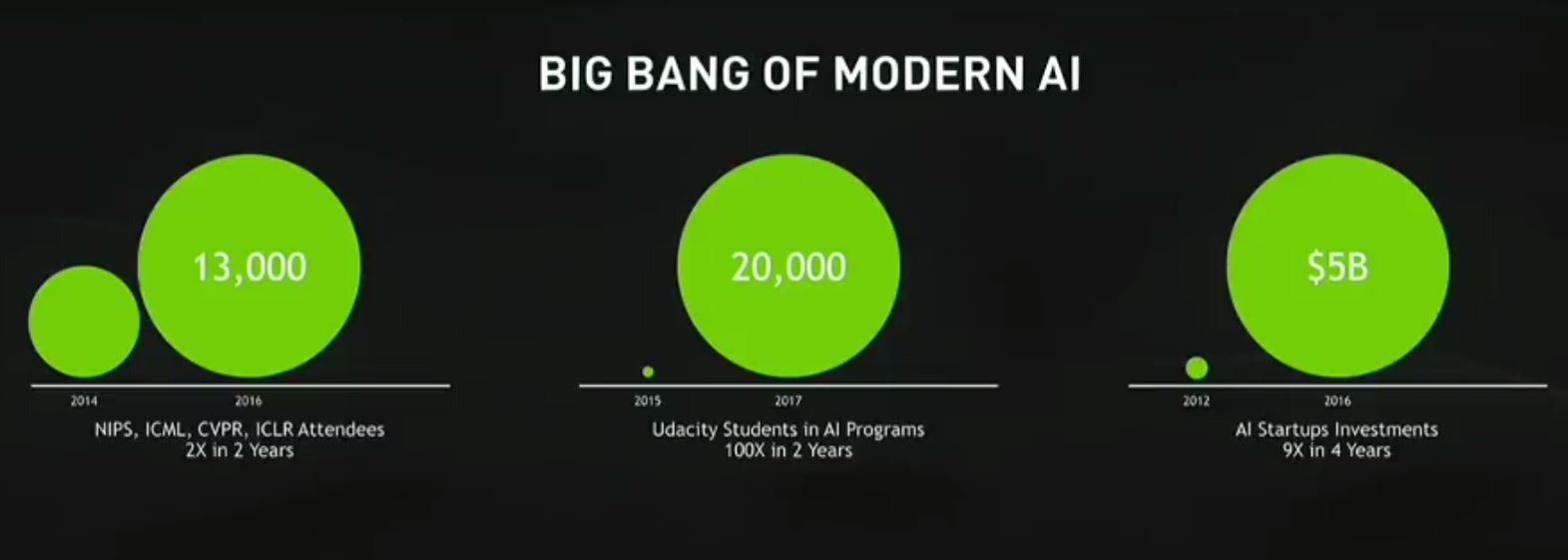

Here is a view of the growth of the modern AI/deep learning field using a few market facts.

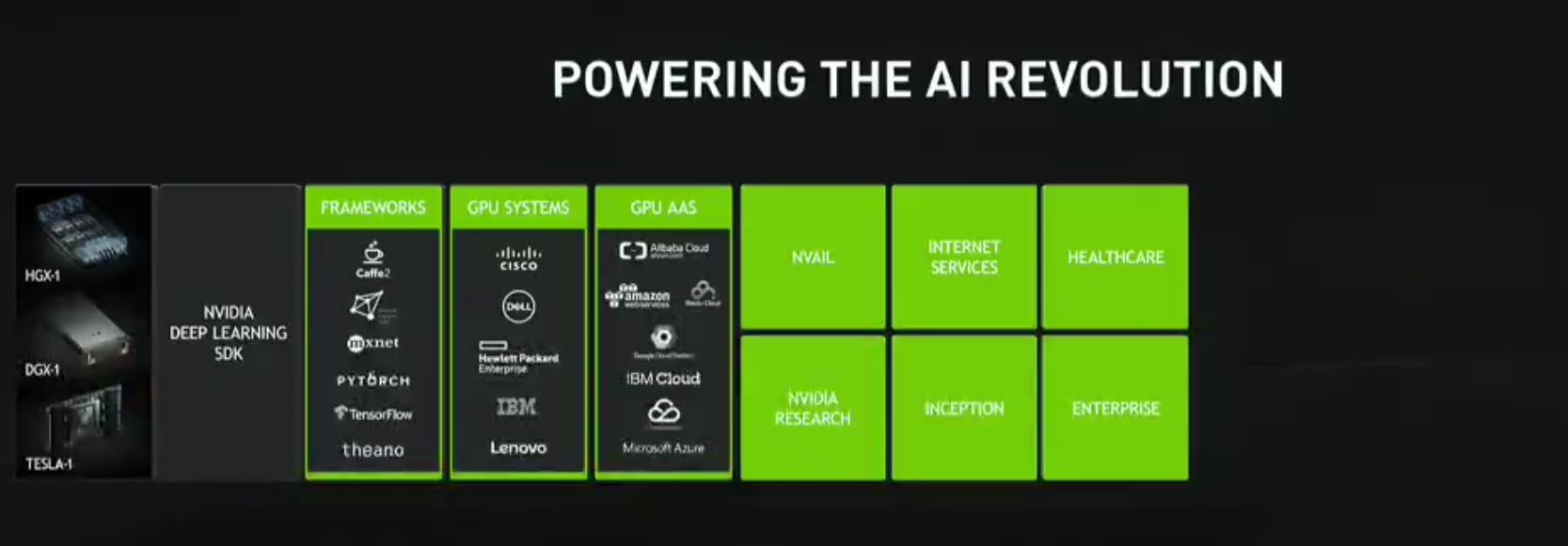

How does NVIDIA power this? NVIDIA “supports every major framework”, is available in systems from every major OEM, and is available on every major cloud.

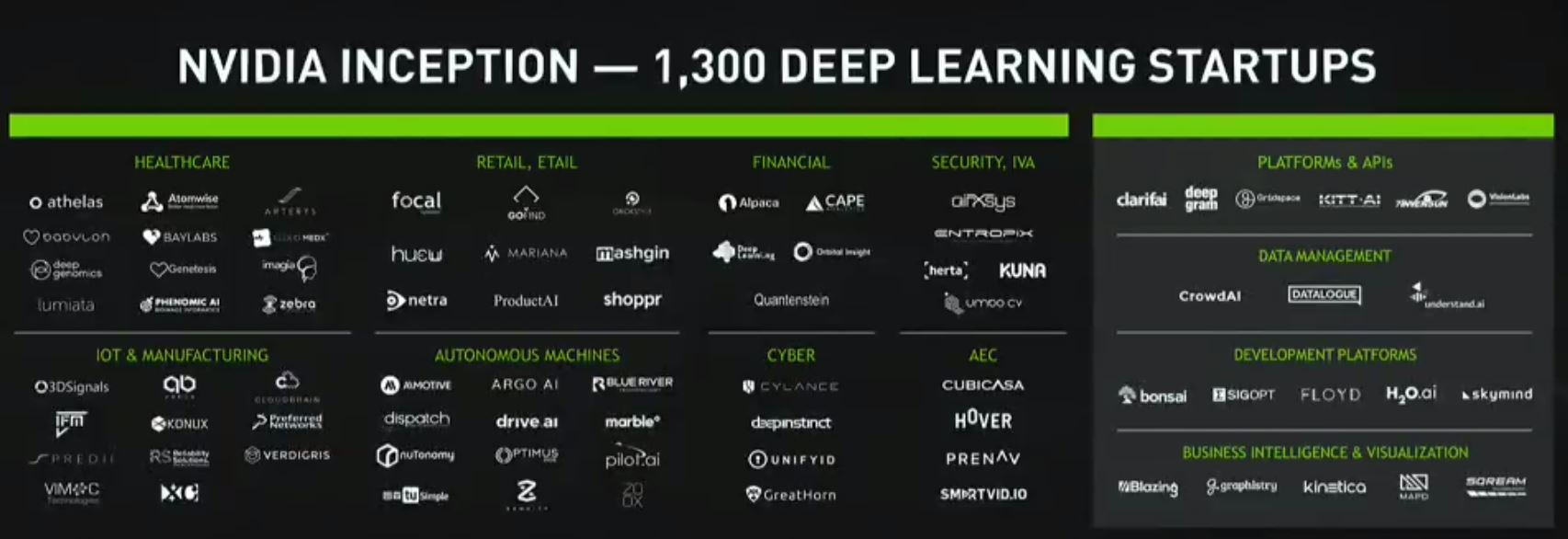

In terms of scale, NVIDIA Inception is an 18-month-old program to help deep learning startups, and it now counts 1,300 of them.

Beyond startups, NVIDIA is working in the enterprise space.

NVIDIA AI and SAP for Enterprise

A very cool application NVIDIA showed off with its SAP collaboration was the ability to recognize brand impact in video.

This can be used by the advertising industry to evaluate the amount of exposure a brand got from a given sponsorship placement.

One can see that the partnership is looking to expand well beyond this brand impact application.

Introducing NVIDIA Tesla V100

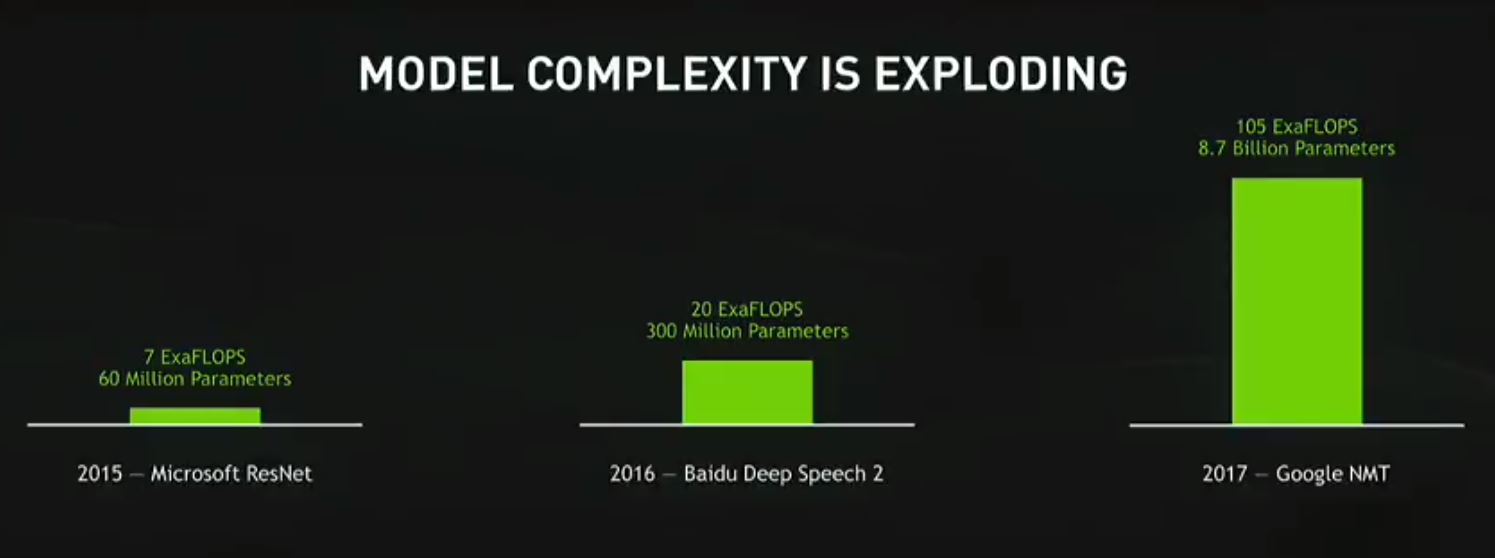

The setup for the new chip is that models are getting bigger.

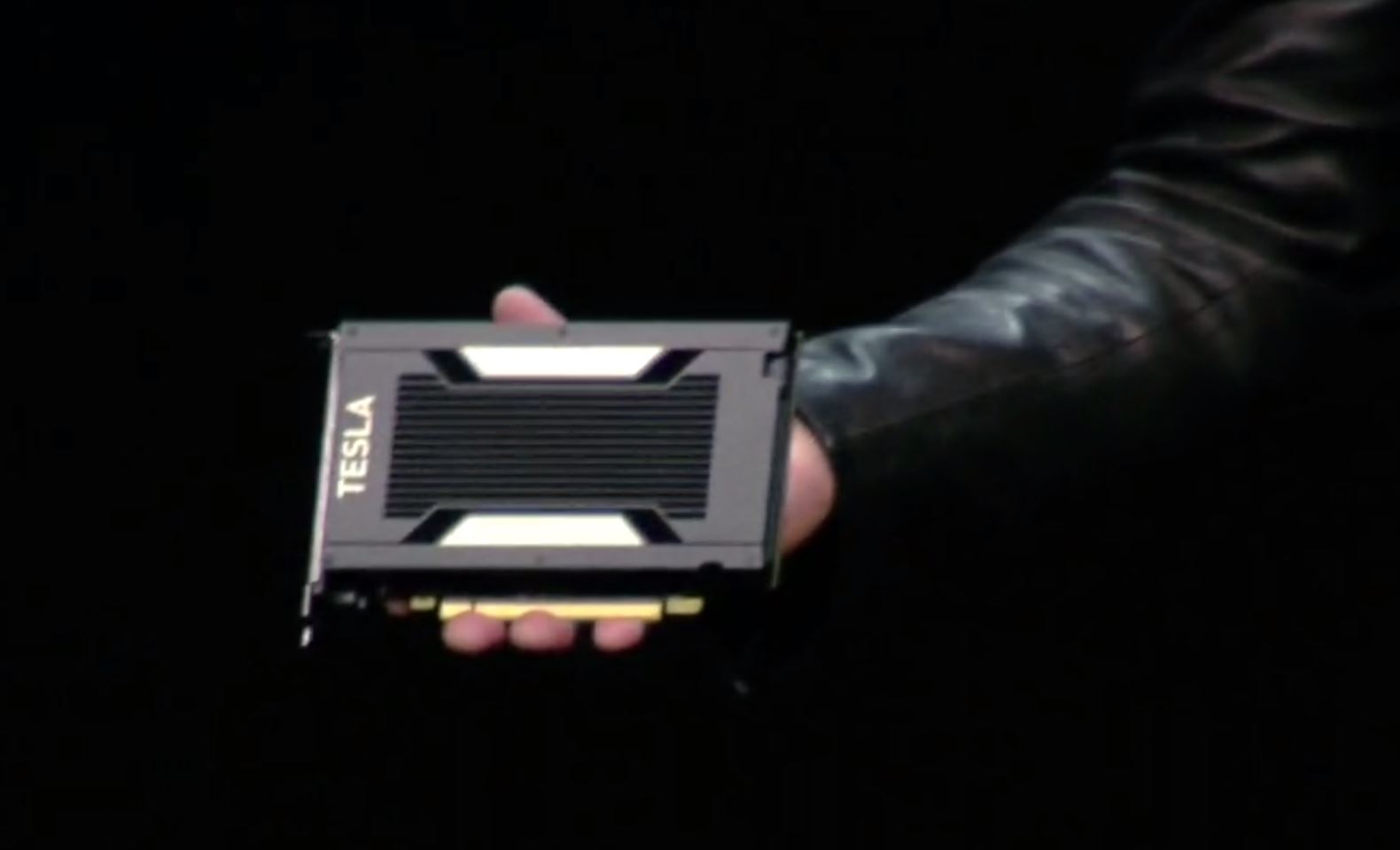

Here is the shot for the new NVIDIA Tesla V100 introduction. The NVIDIA Tesla V100 arrives one year after the Pascal-based P100 it succeeds.

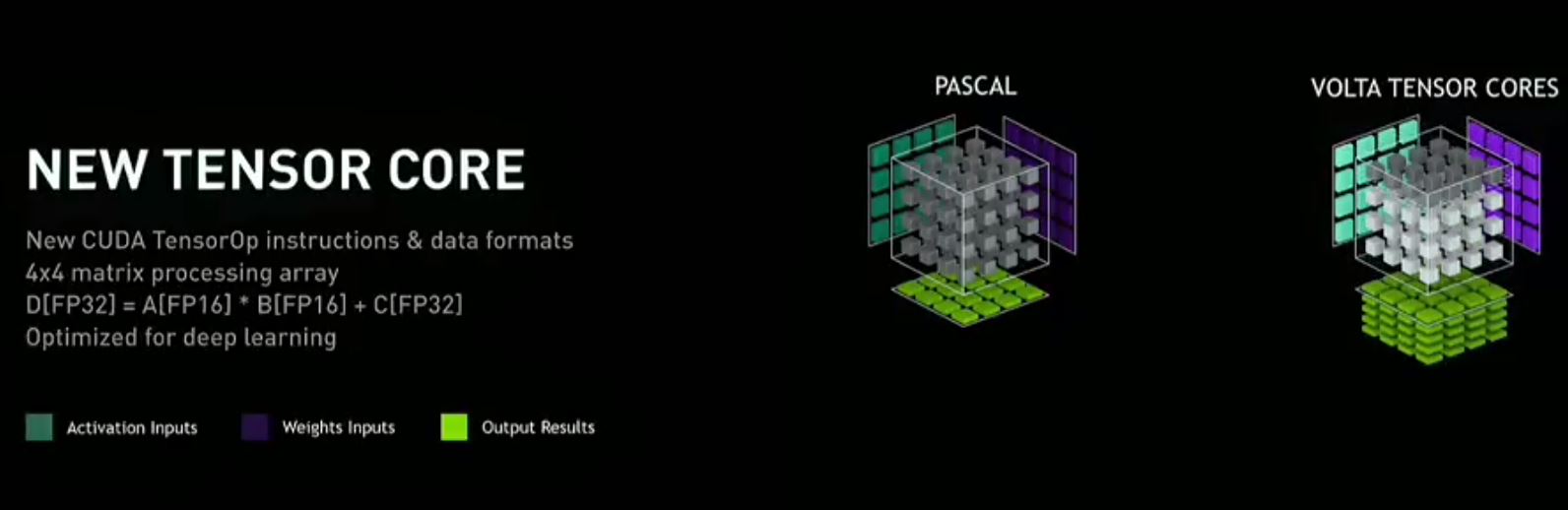

The new chip is clearly targeted at servers and HPC workloads. One of the key points is memory: a 20MB “huge” register file, 16MB of cache, and 16GB of HBM2 providing 900GB/s. Just to give an idea of the performance improvement using the new Tensor Core:

Tensor Core is the new compute construct for Volta. It essentially accelerates matrix processing. Here is the comparison between the two:

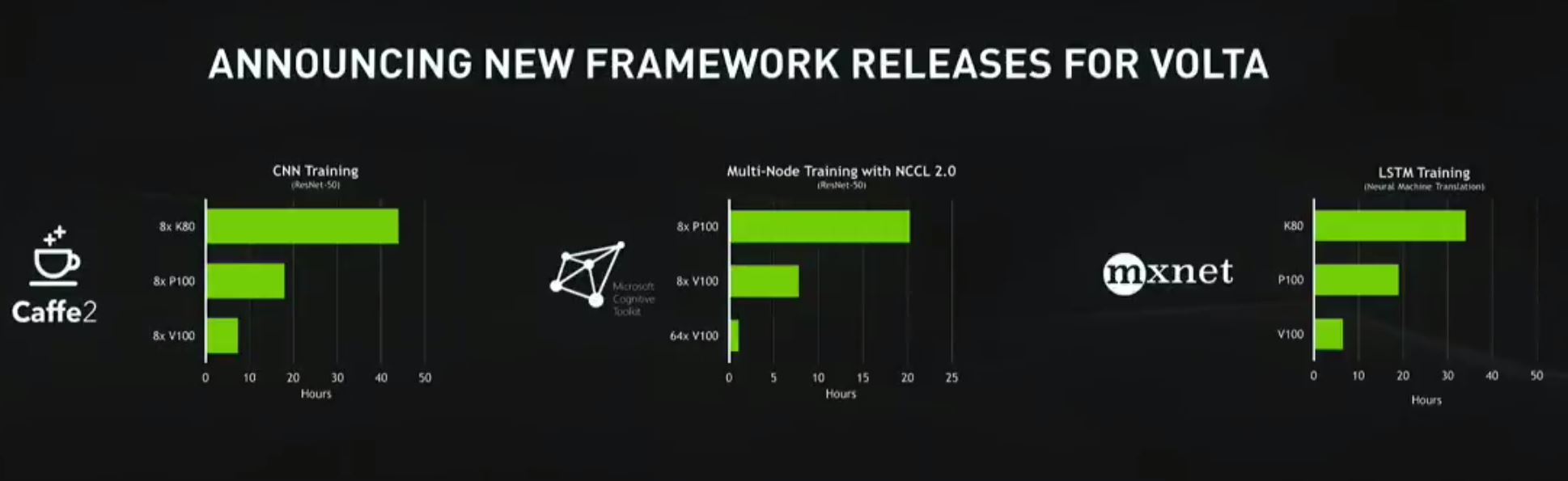
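To make the "accelerates matrix processing" point concrete, here is a minimal Python sketch of the operation a Tensor Core is publicly described as performing: a 4x4 matrix multiply-accumulate, D = A x B + C, with FP16 inputs and FP32 accumulation. That description comes from NVIDIA's Volta material rather than from the slides above. The function names are hypothetical, and rounding to FP32 after every add is an assumption of this sketch, not a statement about how the hardware orders its arithmetic.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def tensor_core_mma(a, b, c):
    """Emulate D = A x B + C on 4x4 tiles (hypothetical helper).

    Inputs A and B are rounded to FP16; the running sum is kept in FP32.
    Rounding after each add is an assumption made for illustration.
    """
    n = 4
    d = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            acc = to_fp32(c[i][j])
            for k in range(n):
                # The product of two FP16 values is exactly representable in FP32.
                prod = to_fp16(a[i][k]) * to_fp16(b[k][j])
                acc = to_fp32(acc + prod)
            d[i][j] = acc
    return d


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
b = [[float(4 * i + j) for j in range(4)] for i in range(4)]
ones = [[1.0] * 4 for _ in range(4)]

d = tensor_core_mma(identity, b, ones)
print(d[0])  # [1.0, 2.0, 3.0, 4.0]
```

Each call performs 64 multiply-adds (4 x 4 x 4). Doing that much work as one fused operation, instead of issuing the multiply-adds separately, is where the matrix throughput gain comes from.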

Here is an example of the K80 to the P100 to the V100 performance improvement in popular frameworks:

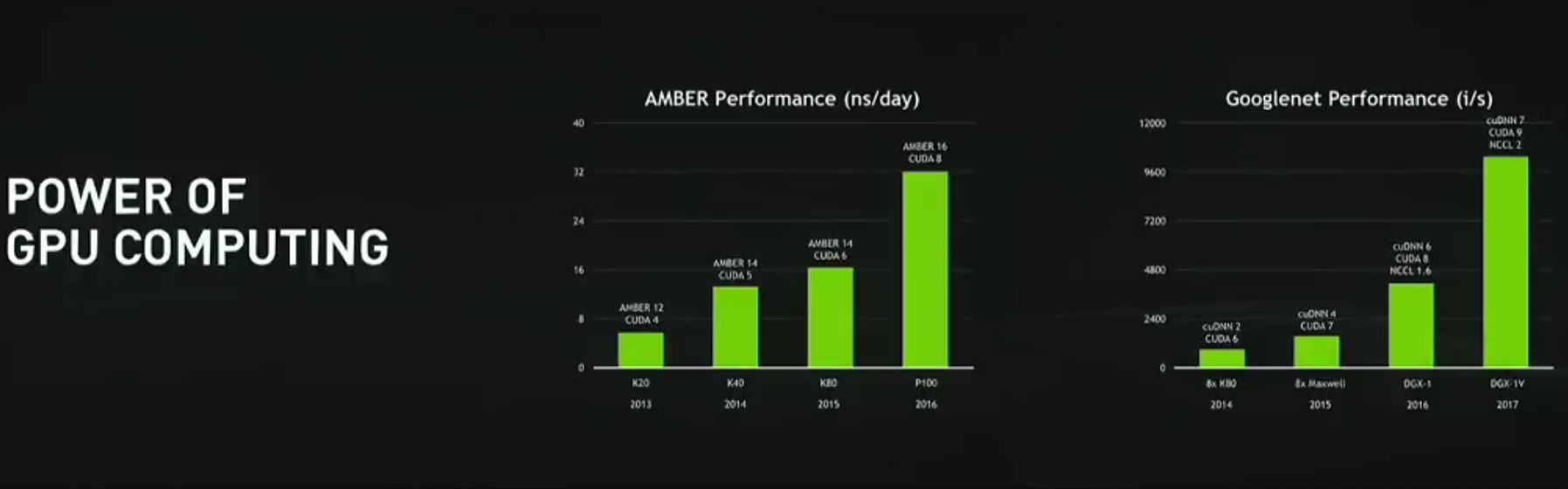

Here are the Amber (molecular dynamics) and GoogLeNet benchmarks across generations:

The NVLink servers are aimed at HPC markets where NVIDIA is battling the Intel Xeon Phi x200 series. We covered Intel Xeon Phi Updates at SC16 and how Intel was taking a lot of market share. These NVLink parts are the high-end parts, so we expect NVIDIA to push these to market as fast as possible.

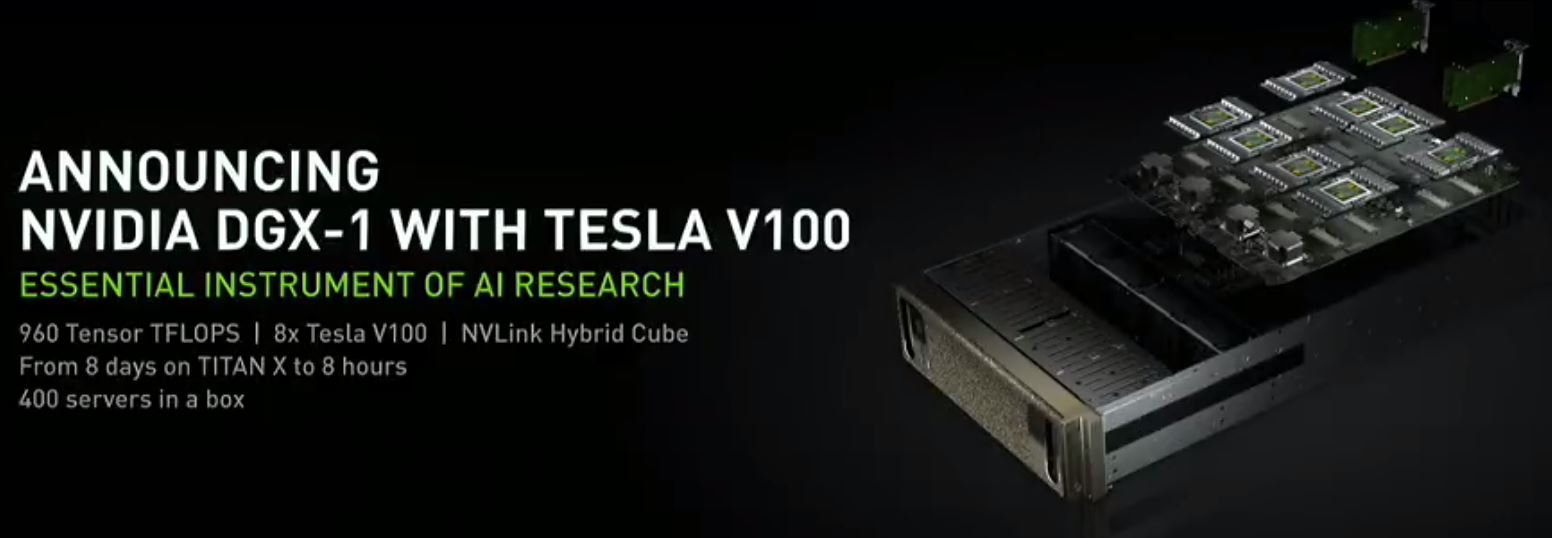

These will be part of the new DGX-1 V with 8x NVLink Volta GPUs.

NVIDIA will be shipping in Q3 (OEM partners in Q4) and will upgrade systems bought today to Volta when it comes out.

For hyperscalers, the HGX-1 will bring 8x NVIDIA Volta based Tesla V100 GPUs for their clouds.

Finally, for developers, a 4x Volta GPU NVIDIA DGX Station:

This will also be available in Q3 and is even water cooled. One item you can notice in the NVIDIA DGX Station is that it will include NVLink, not just PCIe 3.0, to connect its GPUs.

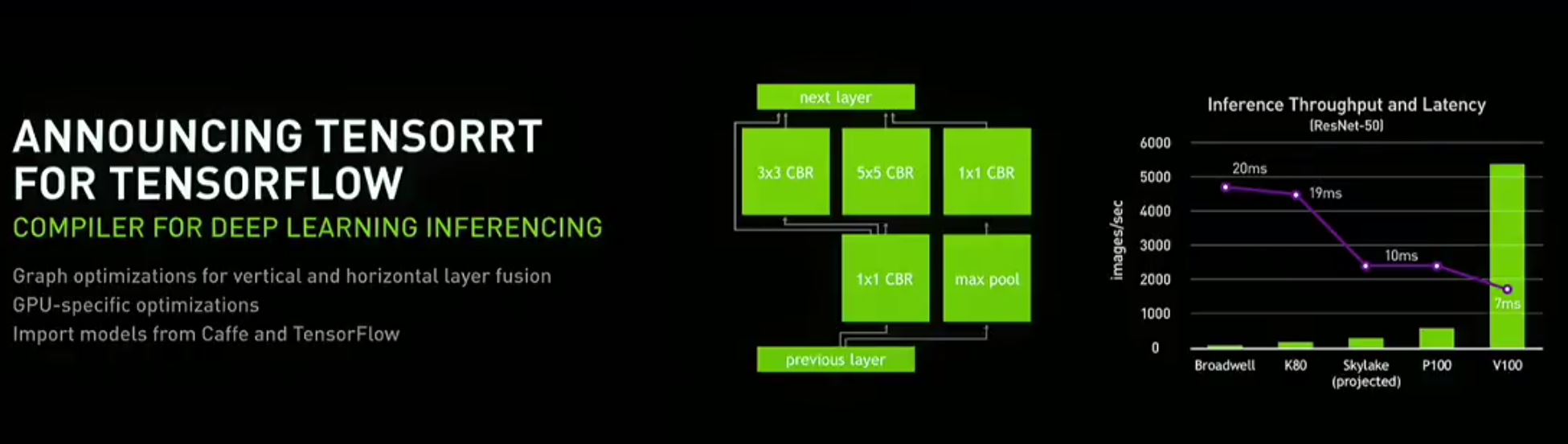

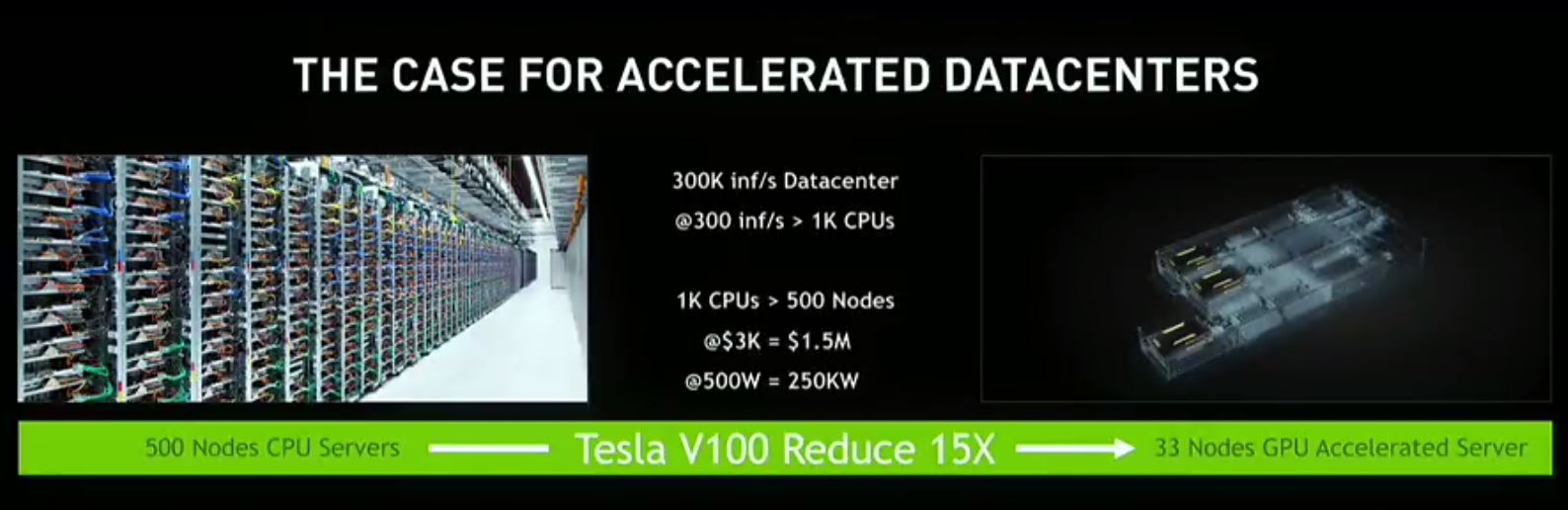

Inferencing Speedup Volta v. Skylake Claims

In terms of inferencing support, Volta is expected to deliver a major speedup.

The 150W FHHL single-slot inferencing card is designed to be added to commodity PCIe nodes.

The claim was made that you can move 500 CPU based inferencing servers down to 33 nodes with new Volta Tesla cards.
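As a quick sanity check on what that claim implies, here is a small Python sketch that works out the consolidation ratio from the two figures quoted above. The function name is hypothetical, and the calculation treats the claim at face value, ignoring differences in cost and power per node.

```python
def consolidation_ratio(cpu_servers, gpu_nodes):
    """Return how many CPU servers each GPU node replaces (hypothetical helper)."""
    return cpu_servers / gpu_nodes


cpu_servers = 500  # CPU-based inferencing servers, per the keynote claim
gpu_nodes = 33     # nodes with Volta Tesla cards, per the keynote claim

ratio = consolidation_ratio(cpu_servers, gpu_nodes)
reduction_pct = 100 * (cpu_servers - gpu_nodes) / cpu_servers

print(f"{ratio:.1f} CPU servers replaced per Volta node")  # 15.2
print(f"{reduction_pct:.1f}% fewer nodes")                 # 93.4
```

In other words, the claim amounts to roughly a 15x reduction in node count for the same inferencing workload.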

We still have some time before we expect Volta to hit the mainstream GPU market.

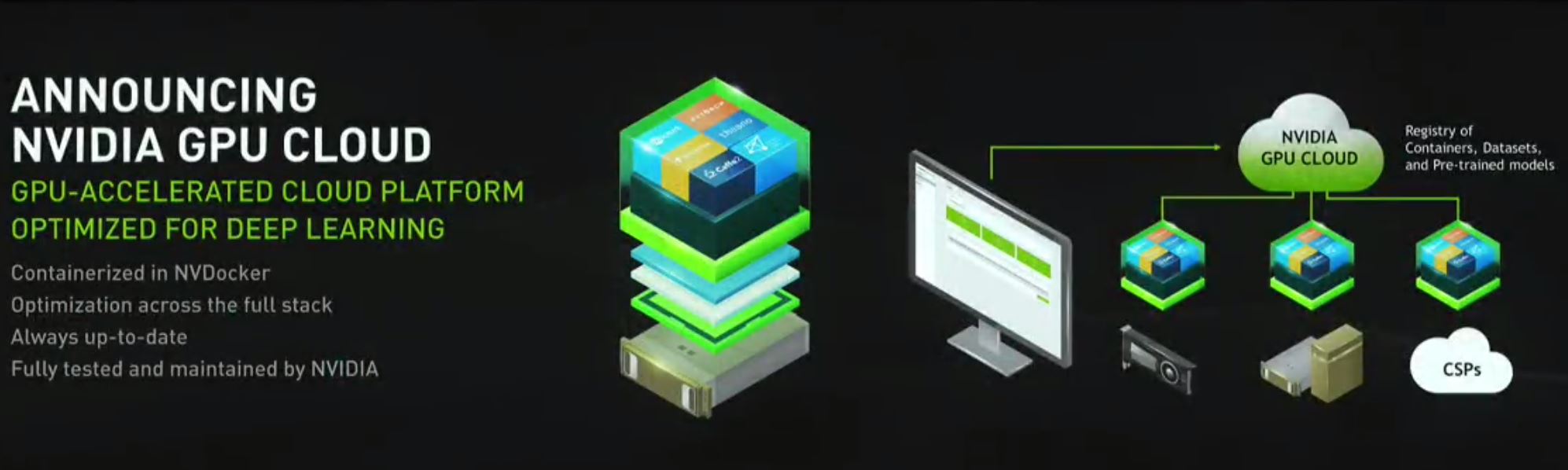

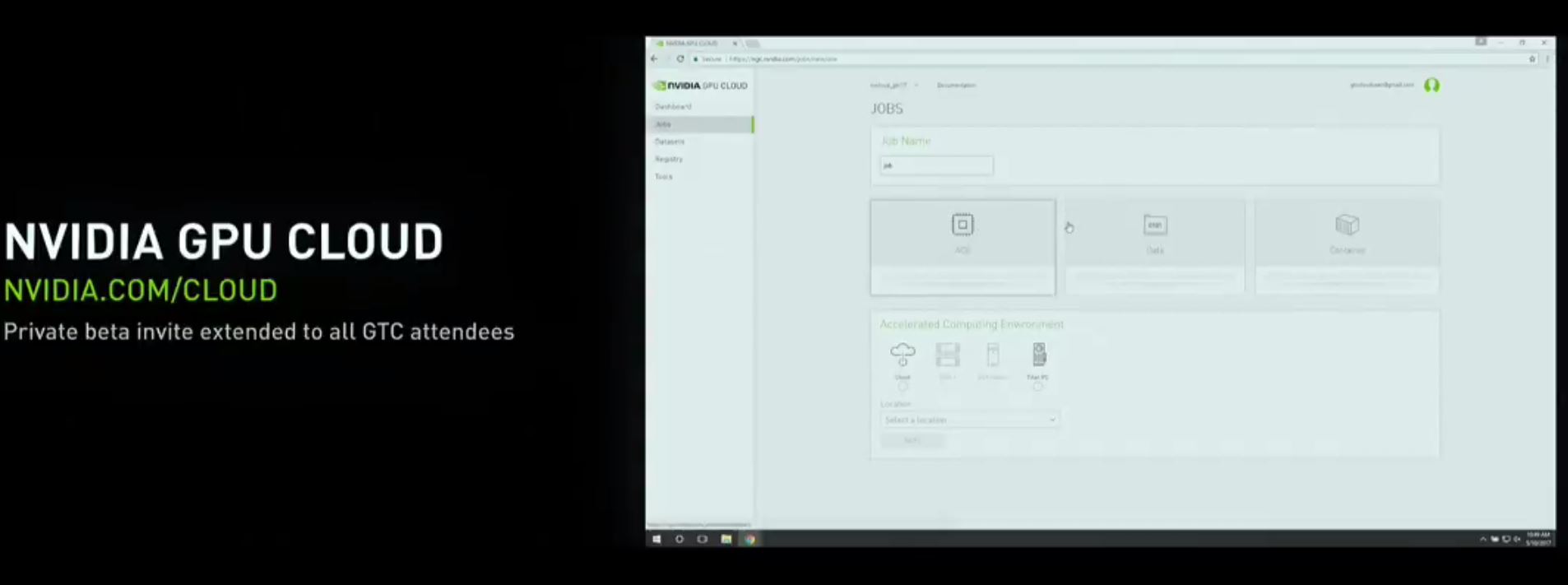

NVIDIA Cloud! Containerized Deep Learning

NVIDIA just announced a containerized, GPU-accelerated deep learning platform.

NVIDIA will provide a registry with the popular frameworks, datasets and pre-trained models. You can then run workloads locally or in the cloud using their web interface:

At STH, we have been using nvidia-docker for some time. We even have Monero and ZCash cryptocurrency mining instances using nvidia-docker.

Final Words

If you have been noticing more GPU content on STH, that is for a good reason. We are going to be covering the space increasingly in the future. If you are looking for the key driver of today’s coolest workloads, this is it.

Isn’t V100 a recycled product name? Wasn’t the V100 the chip powering the last generation of 3DFX graphics cards (3DFX 3 3000 etc …) before it went under and Nvidia acquired it?

3dfx was VSA-100 per Wikipedia https://en.wikipedia.org/wiki/3dfx_Interactive