At the 2017 Supercomputing conference (SC17) we were able to attend the NVIDIA press and analyst event. We had a pre-briefing, so we knew what to expect, but there were still a number of announcements. By far the most impactful was that the NVIDIA GPU Cloud is expanding to HPC applications. Here is a quick overview of what NVIDIA announced.

NVIDIA Tesla V100 Volta Everywhere

The first announcement should come as little surprise to anyone following STH coverage of NVIDIA: the next-generation NVIDIA Tesla V100 is now available just about everywhere.

On the SC17 show floor, just about every vendor (Dell EMC, Hewlett Packard Enterprise, Huawei, Cisco, IBM, Lenovo, and Supermicro) had solutions with the V100 GPU. Some systems held four GPUs per box, some held eight, and some were submerged in liquid for cooling, but they were everywhere.

Beyond the hardware vendors, Alibaba Cloud, Amazon Web Services (with AWS P3 instances), Baidu Cloud, Microsoft Azure, Oracle Cloud, and Tencent Cloud have also announced Volta-based cloud services.

HPC and AI Applications in the NVIDIA GPU Cloud

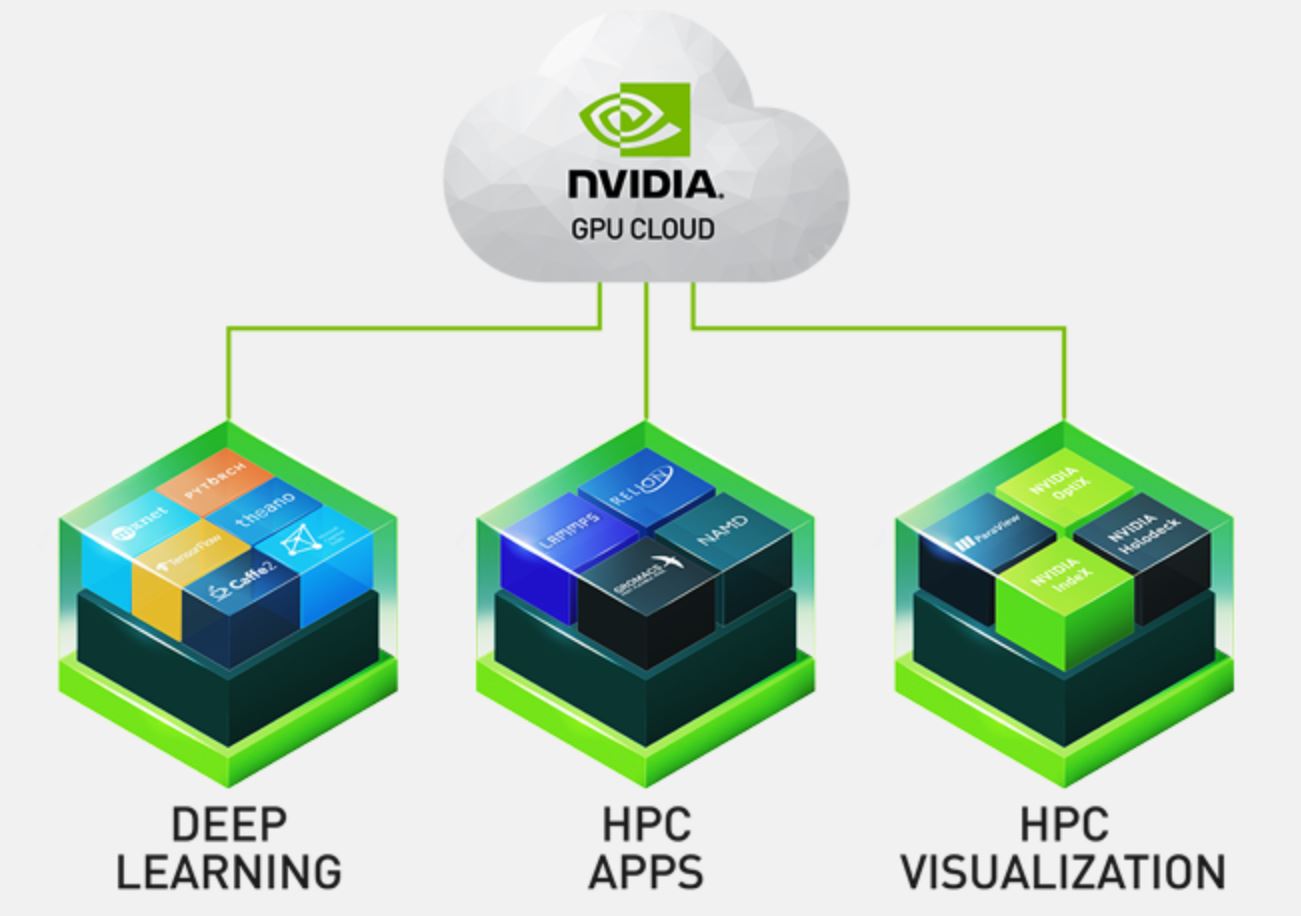

The NVIDIA GPU Cloud (NGC) is NVIDIA’s offering in which frameworks and applications are packaged and distributed by NVIDIA as containers. We have been working with nvidia-docker, the precursor to this service, for quite some time, and it is awesome. We have recently been packaging our own applications using these NVIDIA-optimized containers. Essentially, NVIDIA is taking away much of the “getting the stack running” work for deep learning/AI and HPC applications.

At SC17, NVIDIA added HPC applications and visualization to the platform. This allows users to get up and running quickly with GPU-accelerated applications without having to match CUDA versions and other dependencies to specific application versions. The real impact is that this can shave days or weeks off the time it takes to deploy systems.

We are already working with NGC at STH and will have more on this offering soon. It is interesting for a few reasons. First, it is so easy to use that we wish Intel offered something similar: distill the vast expanse of GitHub and Docker Hub down to a few select, best-known configurations. Second, we can see this being offered for VDI in the future, and also making NVIDIA the hub for on-demand GPU compute.

Final Words

We have been talking about the NVIDIA Tesla V100 “Volta” for some time, and we have numbers for 8x Tesla V100 systems in the editing queue. From what we have heard, these only started shipping in volume over the last two months, yet they are already a somewhat known quantity. The NVIDIA GPU Cloud is the big story here. NVIDIA has the opportunity to build an “app store” model for both on-prem GPU compute (DGX-1 and DGX Station along with partner systems) and cloud-based, on-demand GPU compute.

We are looking forward to running the Gromacs and NAMD benchmarks on DeepLearning10.