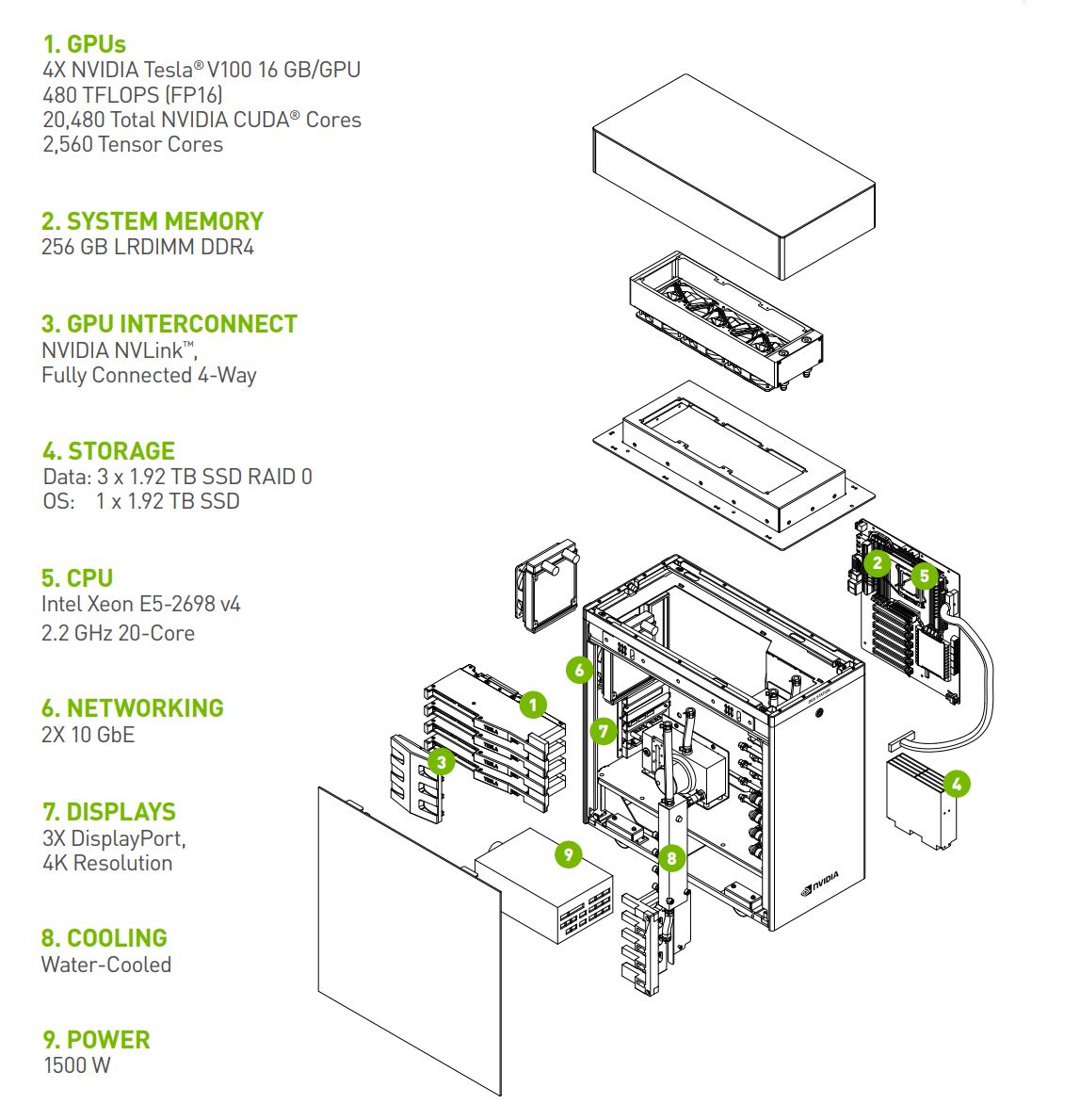

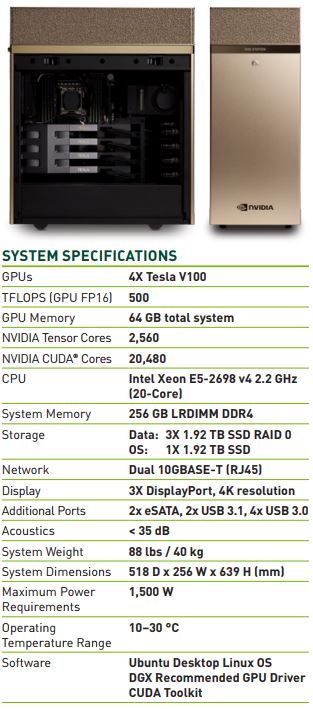

One of NVIDIA’s biggest advantages in the deep learning / AI space today is its rich CUDA-optimized ecosystem, which can be accessed from a wide variety of machines, including those with consumer GPUs. The NVIDIA DGX Station is designed to put that power (and more) on your desktop. At launch, it was powered by four NVIDIA Tesla P100 GPUs, but it is now powered by four NVIDIA Tesla V100 GPUs. Here are the key specs of the updated machine.

NVIDIA DGX Station Specs

The DGX Station sports the new NVIDIA Tesla V100 with Tensor Core technology. For $69,000, you can own a dedicated system for about the same price as one year of cloud instance pricing. For example, an AWS p3.8xlarge instance with all up-front pricing is $68,301 for one year.
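To make that comparison concrete, here is a minimal sketch of the break-even arithmetic. It uses only the two prices quoted above. The function name is ours, and the model deliberately ignores power, cooling, resale value, and utilization, so treat it as a rough illustration rather than a full cost analysis.

```python
# Prices quoted in the article (USD).
DGX_STATION_PRICE_USD = 69_000
AWS_P3_8XLARGE_1YR_UPFRONT_USD = 68_301


def months_to_break_even(purchase_price: float, cloud_cost_per_year: float) -> float:
    """Return the months of cloud rental that cost as much as buying outright.

    Assumption: cloud cost is spread evenly across the year, and the
    purchased system has no running costs (a simplification).
    """
    cloud_cost_per_month = cloud_cost_per_year / 12
    return purchase_price / cloud_cost_per_month


months = months_to_break_even(DGX_STATION_PRICE_USD, AWS_P3_8XLARGE_1YR_UPFRONT_USD)
print(f"Purchase matches cloud spend after about {months:.1f} months")
```

Under these simplified assumptions, the purchase price is matched by cloud spend just past the one-year mark, which is what "about the same price" amounts to here.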

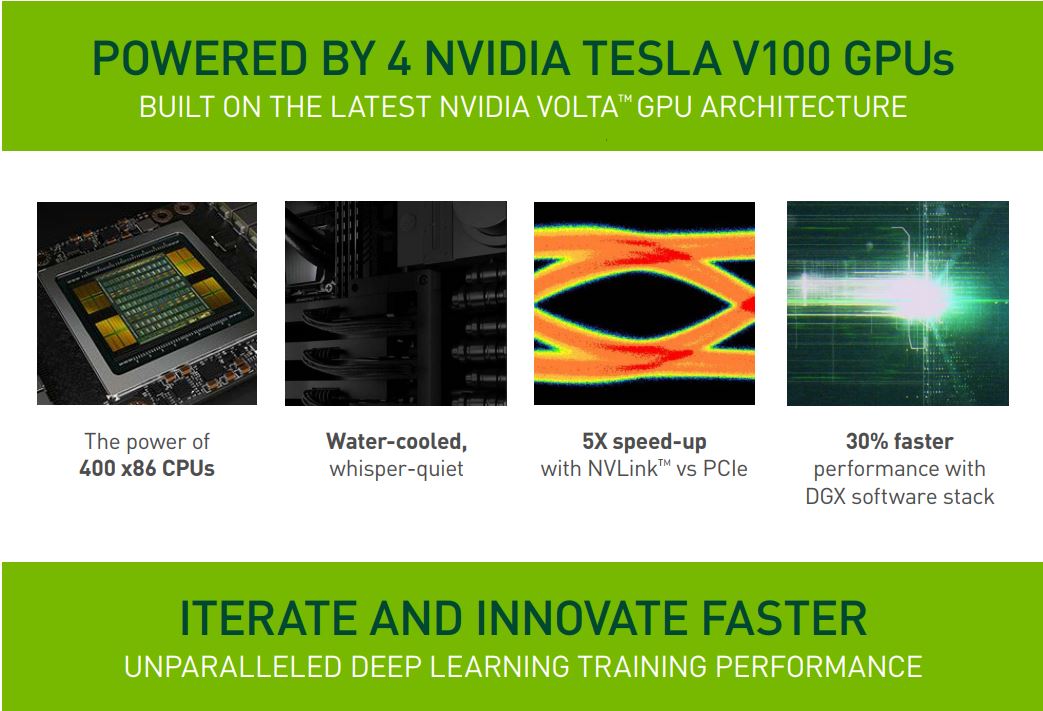

The centerpiece of the NVIDIA DGX Station offering is its four NVIDIA Tesla V100 GPUs. NVIDIA adds NVLink via the top connectors (i.e., where the SLI connectors are found on consumer GPUs).

As for the rest of the system, the DGX Station has an Intel Xeon E5-2698 V4 CPU along with 256GB of RAM, giving you a bit more than a p3.8xlarge.

One of the more impressive claims is that the DGX Station runs at under 35dB, making it practical to use in an office setting. This is achieved through water cooling.

NVIDIA is betting that companies competing for the top AI talent will be willing to provide the best tools. For some, this means access to massive clusters in data centers. For others, it is an Ubuntu Desktop environment at work with a massive amount of power under the hood.

We do want to point out that the DGX Station will likely need some special power. It is rated for 1500W maximum, which is more than is safe to load on a standard 15A 110V / 120V circuit found in many office settings. The hidden cost may be that if you have many engineers with DGX Stations, you are likely going to have to upgrade your power and cooling at the office.
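The power concern can be checked with a quick calculation. The sketch below assumes the common rule of thumb that a continuous load should stay at or below 80% of a circuit's rating. That derating factor and the function name are our assumptions, not figures from NVIDIA, and local electrical codes may differ.

```python
DGX_STATION_MAX_WATTS = 1500  # maximum rating quoted in the article
BREAKER_AMPS = 15             # standard office circuit discussed above


def usable_watts(volts: float, amps: float, continuous_derating: float = 0.8) -> float:
    """Return the wattage considered safe for a continuous load.

    Assumption: continuous loads are limited to 80% of the circuit
    rating, a common rule of thumb rather than a universal requirement.
    """
    return volts * amps * continuous_derating


for volts in (110, 120):
    usable = usable_watts(volts, BREAKER_AMPS)
    shortfall = DGX_STATION_MAX_WATTS - usable
    print(f"{volts}V x {BREAKER_AMPS}A: usable {usable:.0f}W, "
          f"short by {shortfall:.0f}W at full load")
```

Under this assumption, a single DGX Station at full load exceeds the usable budget of a 15A circuit at either voltage, before anything else is plugged into the same circuit.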

Some of the claims are quite lofty.

Final Words

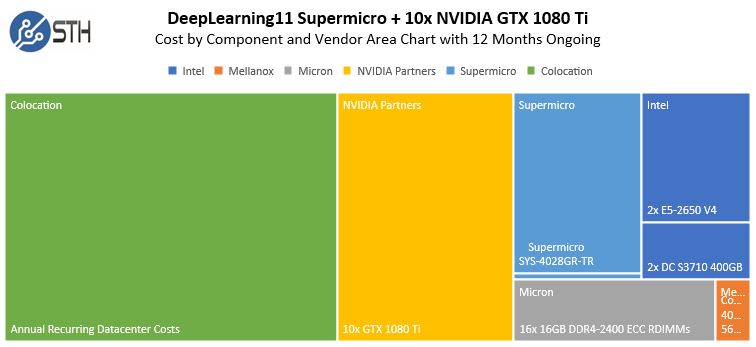

The ability to bring meaningful CUDA-based training to the desktop is an important advantage that NVIDIA has over Intel and others. The NVIDIA DGX Station is certainly something cool to behold. On the other hand, $69,000 is a lot of money. A current favorite of ours, the 10x GTX 1080 Ti configuration we highlighted in our DeepLearning11 build, costs under $30,000 including a year of operating costs. We are also seeing more companies involved with deep learning / AI opt for data center clusters that can be shared and run at a higher utilization rate. The flip side is that a 10x NVIDIA GTX 1080 Ti system carries nowhere near the panache of a DGX Station for employers seeking to retain the best talent.

From the exploded CAD diagram, it appears as though the NVIDIA DGX Station, an attractive albeit expensive integration of parts, is based on an ASUS X99-E-10G WS motherboard or similar.

https://www.asus.com/us/Motherboards/X99-E-10G-WS/

Why no Intel Scalable CPU!?