NVIDIA DGX-2 Scaling to 16 GPUs

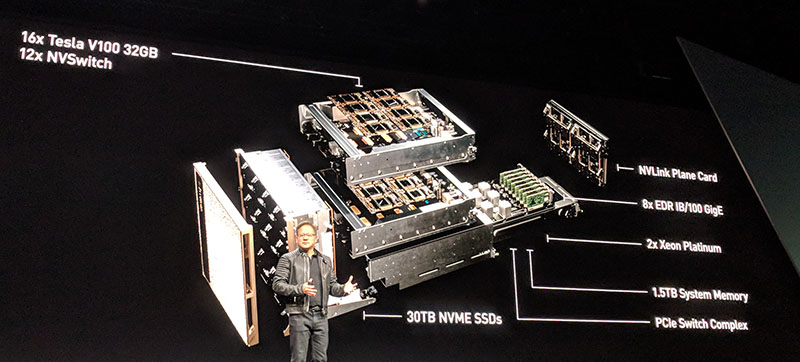

There are a number of really interesting facets to the NVIDIA DGX-2 system. First and foremost, the new solution holds 16x NVIDIA Tesla V100 32GB GPUs. For those keeping count, that means there is a total of 512GB of high-speed GPU-attached memory in the new architecture. More GPU compute resources and more onboard memory mean that one can hold larger models and train them faster. NVIDIA claims up to 2 petaflops from the single machine.

NVIDIA is likewise scaling other system components, such as main system RAM, which goes up to 1.5TB. This makes sense, as the system appears to hold a 3:1 ratio of main system RAM to GPU memory. Doubling the number of GPUs means that NVIDIA can likewise scale system RAM.
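For those who want to check the arithmetic, here is a minimal sketch using only the figures quoted above. The function and constant names are hypothetical, 1TB is assumed to be 1024GB, and the per-GPU throughput is just the claimed 2 petaflops split evenly across 16 GPUs, not a measured number.

```python
# Figures quoted in the article
NUM_GPUS = 16
GPU_MEMORY_GB = 32
SYSTEM_RAM_TB = 1.5
CLAIMED_PETAFLOPS = 2


def dgx2_summary(num_gpus, gpu_memory_gb, system_ram_tb, claimed_petaflops):
    """Derive totals and ratios from the quoted DGX-2 figures.

    Assumes 1TB = 1024GB and an even split of the claimed
    petaflops across all GPUs (an illustrative simplification).
    """
    total_gpu_memory_gb = num_gpus * gpu_memory_gb
    system_ram_gb = system_ram_tb * 1024
    return {
        "total_gpu_memory_gb": total_gpu_memory_gb,
        "system_ram_gb": system_ram_gb,
        "ram_to_gpu_memory_ratio": system_ram_gb / total_gpu_memory_gb,
        "implied_tflops_per_gpu": claimed_petaflops * 1000 / num_gpus,
    }


if __name__ == "__main__":
    summary = dgx2_summary(
        NUM_GPUS, GPU_MEMORY_GB, SYSTEM_RAM_TB, CLAIMED_PETAFLOPS
    )
    for name, value in summary.items():
        print(f"{name}: {value}")
```

With these inputs, total GPU memory comes out to 512GB and the RAM to GPU memory ratio to 3.0. Using 1TB = 1000GB instead would put the ratio slightly under 3:1.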

Networking was a point NVIDIA highlighted. In this generation, the NVIDIA DGX-2 is going to utilize either 100Gbps EDR InfiniBand or 100GbE. NVIDIA said that it has some customers who keep everything on 100GbE instead of mixing InfiniBand and Ethernet in their datacenters. Mellanox has its VPI technology that allows the same ConnectX-4 and later cards to support either InfiniBand or 100GbE through the same ports. One simply selects which type of networking to configure, and a simple software switch changes between them. This was a no-brainer for NVIDIA to add in terms of capability.

On the competitive front, this is again extremely interesting. As NVIDIA increasingly moves into larger systems, it is in direct competition with its channel partners such as HPE, Lenovo, and others. With the original DGX-1 launch, we heard that NVIDIA’s partners were having difficulty getting NVIDIA’s highest-end GPUs in enough quantity because DGX customer orders were being fulfilled first. This is a great move to increase TAM and revenue for NVIDIA, but it also means that channel partners are going to look for alternatives. At this point, true alternatives are few and far between, so it makes sense that NVIDIA is going in this direction with the DGX-2 and other members of the DGX line.

If you are wondering how much these cost, the DGX-2 is priced at $399K, with availability in Q3 2018.