When I spoke at the ThunderX2 launch, a lot in the Arm server ecosystem had improved over the previous two years. One could, at that point in 2018, install an NVIDIA GPU in a ThunderX2 server. Taking the next step and accelerating CUDA applications, as one does on the x86 side, was simply not a reality. Earlier in 2019, we covered the news in NVIDIA Announces Arm Support for GPU Accelerated Computing. At SC19, we are now seeing the fruits of that effort with Marvell ThunderX2 CPUs attached to NVIDIA Tesla V100 GPUs, along with a list of other Arm server CPU vendors NVIDIA is enabling.

NVIDIA CUDA on Arm

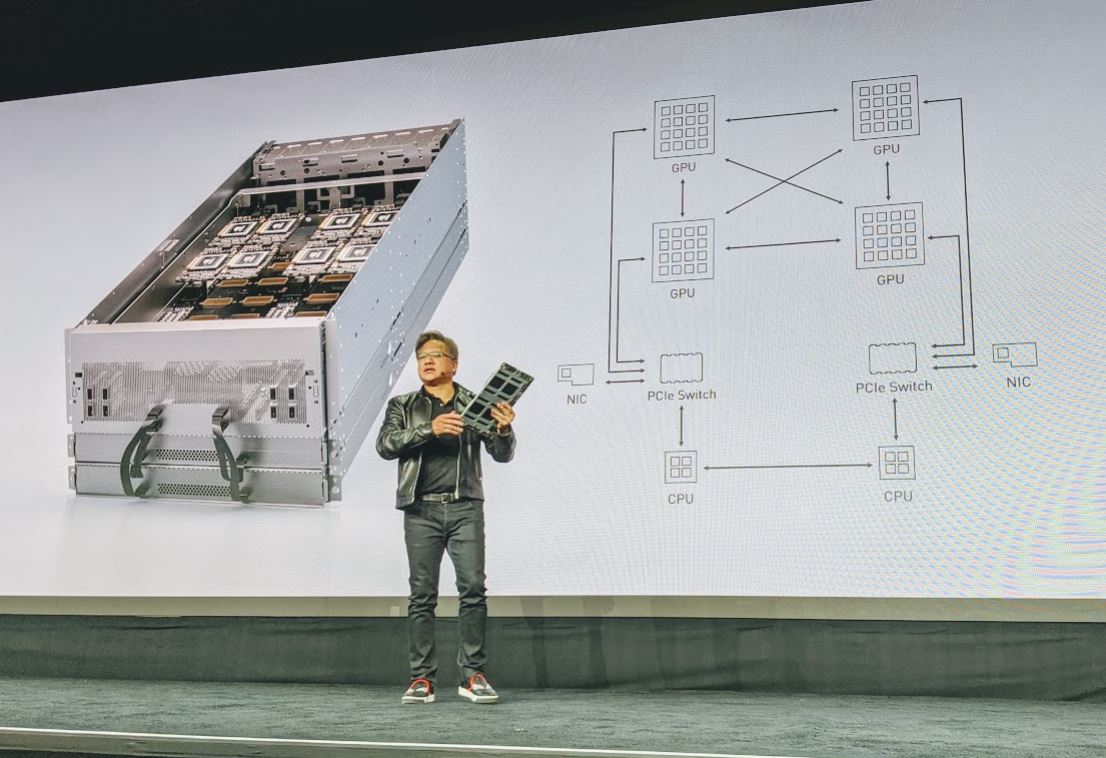

At the SC19 NVIDIA keynote, the company showed off a platform built in conjunction with OSS. This platform has four NVIDIA Tesla SXM2 GPUs that have NVLink connections to each other. Each GPU is also connected to one of two PCIe switches, which in turn connect to NICs via rear I/O slots. This is similar to half of our DeepLearning12 8x NVIDIA Tesla Server. We asked OSS, and they said the GPU platform was one that they have had for some time.

Each PCIe Gen3 switch is connected to a CPU tray via rear cables that run on the outside of the chassis. In the 4U chassis, there are two of these complexes for eight GPUs in the GPU tray and four NICs. One can then use standard PCIe to connect to the CPUs.

Taking a step back, it seems strange to be discussing the idea of NVIDIA CUDA on Arm in 2019. We have been following the Arm-based NVIDIA Jetson CUDA platform for years. Yet here we are. Hopefully, the current NVIDIA CUDA on Arm project leads to a more standard stack that takes CUDA on Arm from low-power Jetson devices to data center CPUs.

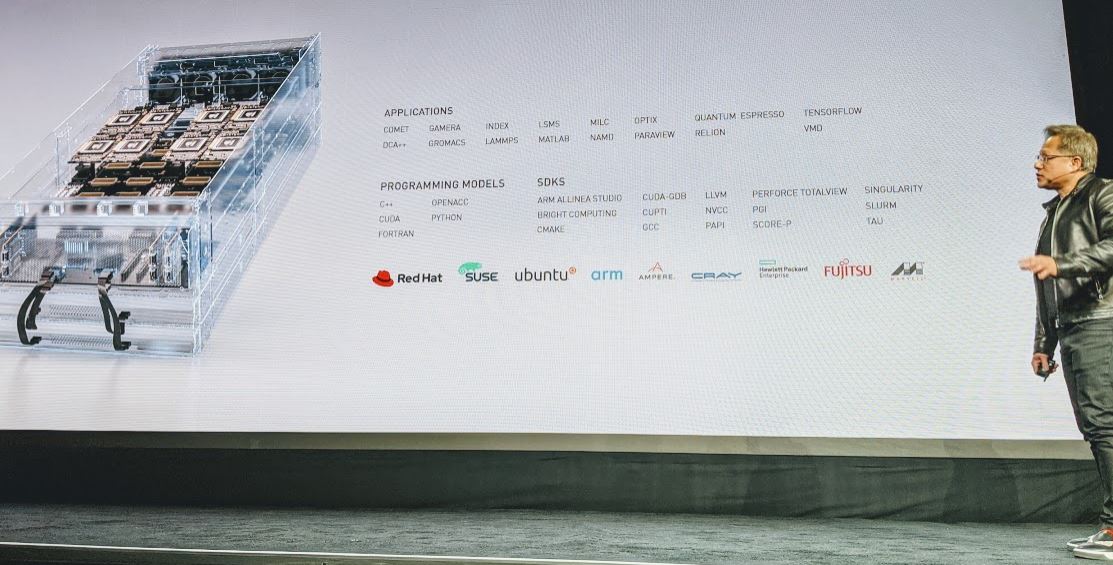

In terms of ecosystem, NVIDIA has companies like Ampere, Fujitsu, and Marvell on board, along with Linux OS vendors and HPE/Cray. Cray recently announced that it is going to sell Fujitsu A64FX systems, so we expect to see more Arm in the HPC data center over the next few years.

Final Words

This is a great opportunity for Arm to get a foothold in the more mainstream HPC market. Many accelerated applications can benefit from Arm in the future. With only the beta out, we are not yet at mainstream NVIDIA CUDA on Arm. Still, this is a great first step in the process.