We are going to be covering the NVIDIA Computex 2023 keynote live, focusing mostly on the data center gear announced during the keynote. Since we are doing this live (with substantial jet lag), please excuse typos.

NVIDIA Grace Hopper Update with NVIDIA DGX GH200

One big announcement is that NVIDIA Grace Hopper is in full production.

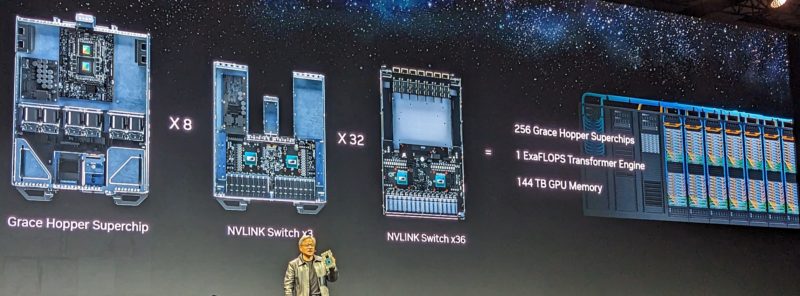

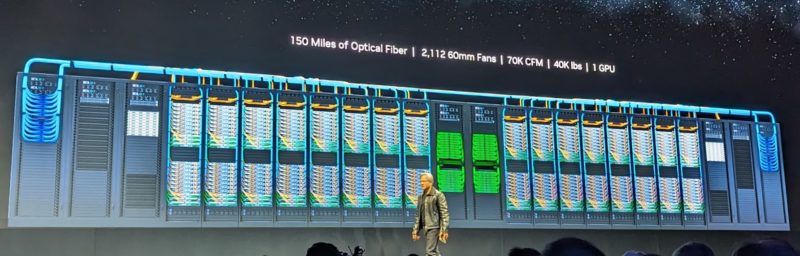

Part of the reason that is important is that NVIDIA has a new solution called the NVIDIA DGX GH200. This will have 150 miles of optical fiber, 144TB of memory, over 2,000 fans, and more.

This offers 256 GH200 Grace Hopper chips and 36 NVSwitches to create a large supercomputer. Google Cloud, Meta, and Microsoft are the early customers. AWS is notably absent from the early list. ZT Systems is making the GH200 compute pods.
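As a quick sanity check on the memory figure, the 144TB can be divided across the 256 GH200 chips to get a per-chip share. This sketch assumes "TB" is meant in binary units (1 TB = 1024 GB). With decimal units the same division gives 562.5 GB. It also does not break out how that memory splits between the CPU and GPU sides of each chip.

```python
# Back-of-envelope: per-chip share of the DGX GH200's 144TB of memory.
# Assumption: "TB" is binary here (1 TB = 1024 GB).
TOTAL_MEMORY_TB = 144
SUPERCHIPS = 256
GB_PER_TB = 1024  # assumption: binary units


def memory_per_superchip_gb(total_tb: float, chips: int, gb_per_tb: int = GB_PER_TB) -> float:
    """Return each superchip's share of the total memory, in GB."""
    return total_tb * gb_per_tb / chips


per_chip = memory_per_superchip_gb(TOTAL_MEMORY_TB, SUPERCHIPS)
print(f"{per_chip:.0f} GB per GH200")  # prints "576 GB per GH200"
```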

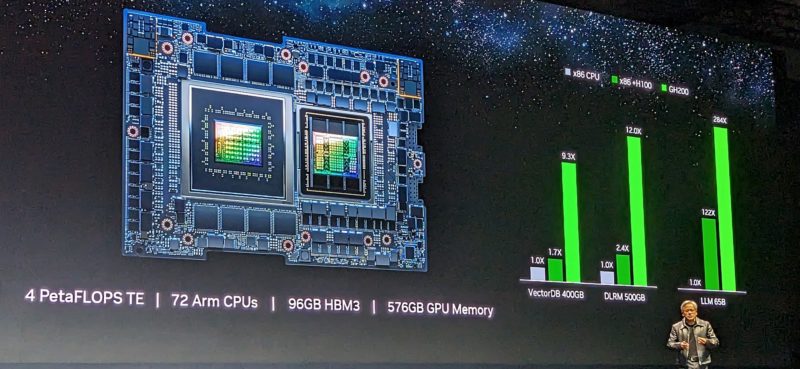

Here is the NVIDIA DGX GH200 speedup for large memory models. NVIDIA does a lot of research on boundary cases of AI models, and this system is designed for models with higher memory requirements.

Something that NVIDIA is not saying in this release, and which is really telling, is that the NVIDIA DGX GH200 is fast enough to be a Top500 supercomputer. It currently takes ~48-64 Hopper-class GPUs to run Linpack fast enough to make the bottom of the Top500. That NVIDIA is focusing solely on AI here, not HPC, really shows the company's priorities.
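The Top500 point is simple arithmetic. Each GH200 carries one Hopper GPU, so the DGX GH200 has 256 of them. Comparing that to the rough 48-64 GPU entry estimate shows the headroom. The sketch assumes Linpack performance scales roughly linearly with GPU count, which real systems only approximate because interconnect and efficiency matter.

```python
# Assumption: Linpack throughput scales roughly linearly with GPU count.
DGX_GH200_GPUS = 256               # one Hopper GPU per GH200 superchip
TOP500_ENTRY_GPU_RANGE = (48, 64)  # rough entry estimate from the article


def headroom_vs_entry(system_gpus: int, entry_range: tuple) -> tuple:
    """Return the (min, max) multiple of the Top500 entry GPU count."""
    low, high = entry_range
    return system_gpus / high, system_gpus / low


lo_x, hi_x = headroom_vs_entry(DGX_GH200_GPUS, TOP500_ENTRY_GPU_RANGE)
print(f"{lo_x:.1f}x to {hi_x:.1f}x the entry-level GPU count")  # prints "4.0x to 5.3x ..."
```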

Another fun nugget: the NVIDIA HGX H100 costs $200,000, weighs 60 lbs, and has over 35,000 components.

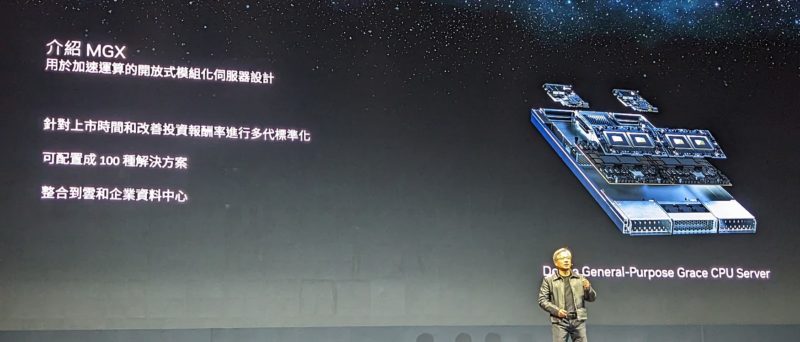

NVIDIA MGX

The NVIDIA MGX is interesting. NVIDIA is effectively standardizing a server platform for multi-generation compute and acceleration, designed to house, power, and cool different NVIDIA products. There are so many configurations that it is almost as if NVIDIA is making its own 19″ server specification, akin to an OCP spec.
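To illustrate why a modular reference design multiplies into so many configurations, consider a platform with a few independent module slots. The number of possible builds is the product of the option counts per slot. The slot names and options below are hypothetical placeholders, not NVIDIA's actual MGX catalog. Real platforms also rule out some combinations, so the product is an upper bound.

```python
from itertools import product

# Hypothetical module slots and options, for illustration only.
# These are NOT NVIDIA's published MGX options.
MODULE_OPTIONS = {
    "chassis": ["chassis-A", "chassis-B", "chassis-C"],
    "cpu": ["cpu-A", "cpu-B"],
    "accelerator": ["gpu-A", "gpu-B", "gpu-C", "none"],
    "network": ["nic-A", "dpu-A"],
    "cooling": ["air", "liquid"],
}


def enumerate_configs(options: dict):
    """Yield every combination of one choice per module slot."""
    slots = list(options)
    for combo in product(*(options[s] for s in slots)):
        yield dict(zip(slots, combo))


configs = list(enumerate_configs(MODULE_OPTIONS))
print(len(configs))  # 3 * 2 * 4 * 2 * 2 = 96
```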

What is a bit interesting here is who is making the boxes. These are designed to be common computing blocks, so we see vendors focused on scale-out, industry-standard solutions instead of companies like HPE, Dell, and Lenovo. Hopefully, we can get an MGX system to review and show you the platform.

Something that came up in our ConnectX-7 review was that we could not get power specs for the card from NVIDIA. We are wondering if that is because the card's power draw is actually over the PCIe spec. That would lead us to think that NVIDIA is looking to build servers that do not have to conform to PCIe specs. That would make sense, since the power consumption of PCIe devices, like that of other components, is going up.
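The PCIe question comes down to a power budget. Nominally, a PCIe x16 slot supplies up to 75W, a 6-pin auxiliary connector adds 75W, and an 8-pin adds 150W. A card without an auxiliary connector has to live within the slot's 75W. The sketch below checks a card against that budget. NVIDIA has not published the ConnectX-7's actual draw, so the 90W figure is purely hypothetical.

```python
# Nominal PCIe CEM power sources, in watts.
SLOT_X16_W = 75   # x16 slot alone, no auxiliary connector
AUX_6PIN_W = 75   # each 6-pin auxiliary power connector
AUX_8PIN_W = 150  # each 8-pin auxiliary power connector


def card_power_budget(six_pin: int = 0, eight_pin: int = 0) -> int:
    """Nominal watts available to an add-in card from the slot plus aux cables."""
    return SLOT_X16_W + six_pin * AUX_6PIN_W + eight_pin * AUX_8PIN_W


def within_budget(card_watts: float, six_pin: int = 0, eight_pin: int = 0) -> bool:
    """True if the card's draw fits the nominal budget for its connectors."""
    return card_watts <= card_power_budget(six_pin, eight_pin)


# Hypothetical NIC drawing 90 W with no aux connector exceeds the slot budget.
print(within_budget(90))  # prints "False"
```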

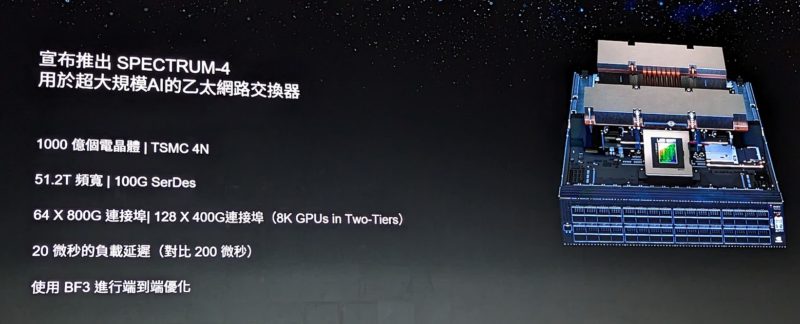

NVIDIA Spectrum-X and NVIDIA Spectrum-4

One of the big changes NVIDIA is going through is in its network stack. While NVIDIA bought Mellanox for both InfiniBand and Ethernet, there has always been tension between the two. InfiniBand was NVIDIA's moat, but Ethernet is what all of the cloud providers want to use. Ethernet also doubles in speed every 18 months or so; we just reviewed a 64-port 400GbE switch and the NVIDIA ConnectX-7. NVIDIA is announcing NVIDIA Spectrum-X, which is effectively NVIDIA Ethernet for AI.
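To put the "doubles every 18 months or so" rule of thumb in numbers, here is a trend-line projection starting from 400GbE. This is a sketch of the rule of thumb only. Real Ethernet generations arrive in discrete steps, so this is not a product roadmap.

```python
def projected_speed_gbps(base_gbps: float, months: float, doubling_months: float = 18) -> float:
    """Speed after `months` if bandwidth doubles every `doubling_months`."""
    return base_gbps * 2 ** (months / doubling_months)


for months in (0, 18, 36):
    print(months, projected_speed_gbps(400, months))  # 400.0, 800.0, 1600.0
```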

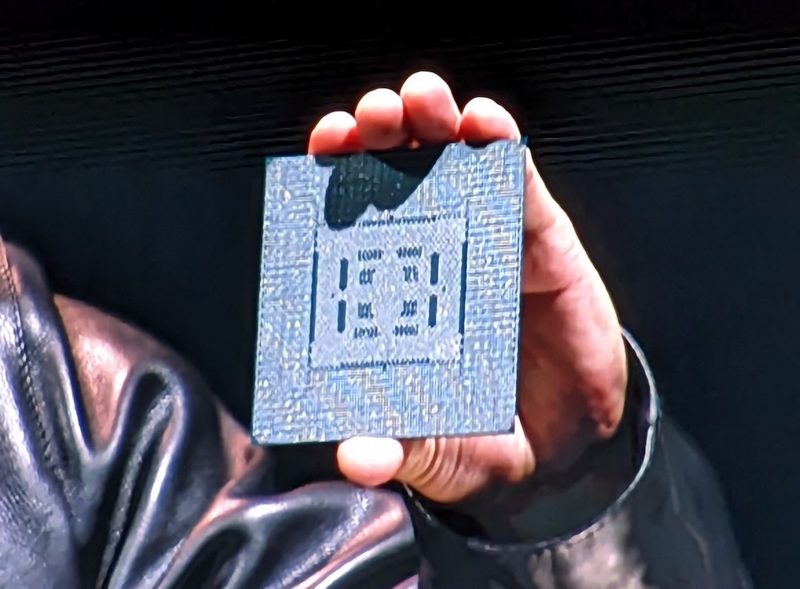

NVIDIA also says that the NVIDIA Spectrum-4 Ethernet switch is available now. This is a 64x 800GbE or 128x 400GbE switch, twice the throughput of the Broadcom Tomahawk 4 switch we looked at. NVIDIA says it uses a 500W chip and 48 PCBs, and draws over 2kW of power.
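The "twice the throughput" claim follows directly from port math. The sketch assumes the Tomahawk 4 comparison point is the 64-port 400GbE switch we reviewed. Both Spectrum-4 port layouts work out to the same aggregate capacity, just split into a different number of ports.

```python
def aggregate_gbps(ports: int, gbps_per_port: int) -> int:
    """Total switching capacity across all ports, in Gb/s."""
    return ports * gbps_per_port


spectrum4_800g = aggregate_gbps(64, 800)   # 51,200 Gb/s
spectrum4_400g = aggregate_gbps(128, 400)  # 51,200 Gb/s: same capacity, more ports
reviewed_400g = aggregate_gbps(64, 400)    # 25,600 Gb/s: 64-port 400GbE class

print(spectrum4_800g / 1000, "Tbps")     # 51.2 Tbps
print(spectrum4_800g // reviewed_400g)   # 2
```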

A big part of this push is handling congestion control for RoCE and so forth to make an Ethernet fabric perform well. The benefit is that organizations can use Ethernet switches instead of InfiniBand. The big challenge for NVIDIA is that if it says Ethernet is acceptable for AI scale-out, organizations may opt for Ethernet instead of InfiniBand, opening the market to other players.
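For context on what "congestion control for RoCE" involves: RoCE fabrics commonly pair ECN marking in the switches with DCQCN-style rate control at the sender. The sketch below is a simplified, textbook DCQCN sender. It is not NVIDIA's Spectrum-X mechanism, which the keynote did not detail. Real DCQCN runs the alpha decay and the rate-increase logic on separate timers and byte counters, and those are merged into one "quiet period" step here. The parameter values (g, the additive-increase step, the number of fast-recovery steps) are illustrative.

```python
class DcqcnSender:
    """Simplified DCQCN-style rate control for one RoCE sender (illustrative)."""

    def __init__(self, line_rate_gbps: float, g: float = 1 / 256,
                 rai_gbps: float = 5.0, fast_recovery_steps: int = 5):
        self.line_rate = line_rate_gbps
        self.g = g                        # EWMA gain for the congestion estimate alpha
        self.rai = rai_gbps               # additive-increase step (illustrative value)
        self.fast_recovery_steps = fast_recovery_steps
        self.current = line_rate_gbps     # Rc: current sending rate
        self.target = line_rate_gbps      # Rt: rate to recover toward
        self.alpha = 1.0                  # estimated congestion level
        self.quiet_periods = 0            # timer periods since the last CNP

    def on_cnp(self) -> None:
        """A congestion notification packet (CNP) arrived: cut the rate."""
        self.target = self.current
        self.current *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g
        self.quiet_periods = 0

    def on_quiet_period(self) -> None:
        """A period passed with no CNP: decay alpha and recover toward the target."""
        self.alpha *= 1 - self.g
        if self.quiet_periods >= self.fast_recovery_steps:
            # After fast recovery, probe for more bandwidth additively.
            self.target = min(self.target + self.rai, self.line_rate)
        self.quiet_periods += 1
        self.current = (self.current + self.target) / 2


sender = DcqcnSender(400.0)
sender.on_cnp()           # alpha is 1.0, so the rate halves from 400 to 200
sender.on_quiet_period()  # fast recovery: halfway back toward 400
print(sender.current)     # 300.0
```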

Final Words

There are many new technologies being shown at Computex. It was great to see some of the products we have heard about get time in the spotlight. It was also cool to see Jensen's family on hand in the front row (about 7 rows in front of me) to see him speak at a time when NVIDIA is doing very well.

Hopefully, we will get to show you some of these technologies during our show coverage.
