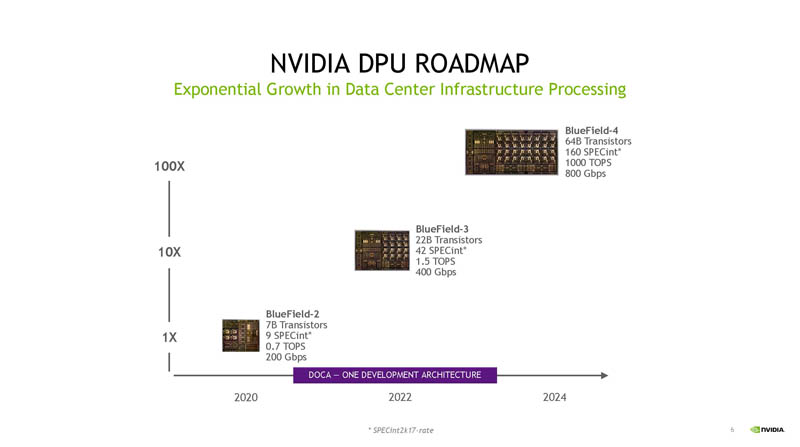

At STH we have been covering DPUs a lot. One of the upcoming DPUs that we are excited about is the 2022 NVIDIA BlueField-3, a follow-up to the BlueField-2 DPU we have been using in the lab. At Hot Chips 33, the company started talking more about the BlueField-3 DPU generation. As with all of these, we are doing them live at Hot Chips 33, so please forgive typos.

NVIDIA BlueField-3 DPU Architecture at Hot Chips 33

At STH, we have a “What is a DPU? A Data Processing Unit Quick Primer” piece as well as the STH NIC Continuum Framework to help you understand what a DPU is and how it fits in the overall network accelerator market.

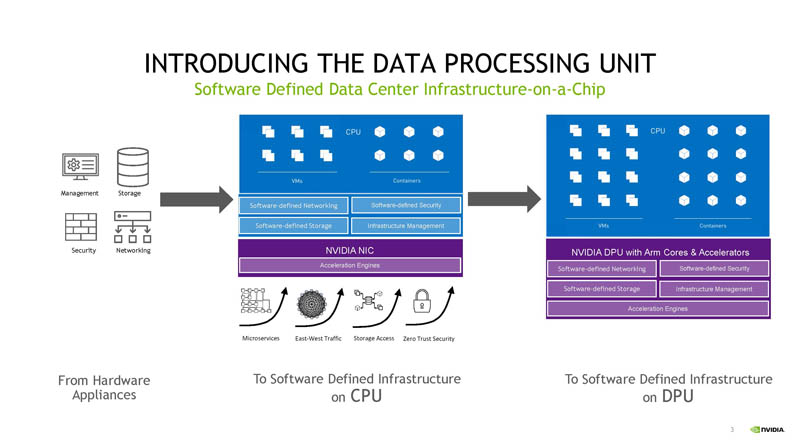

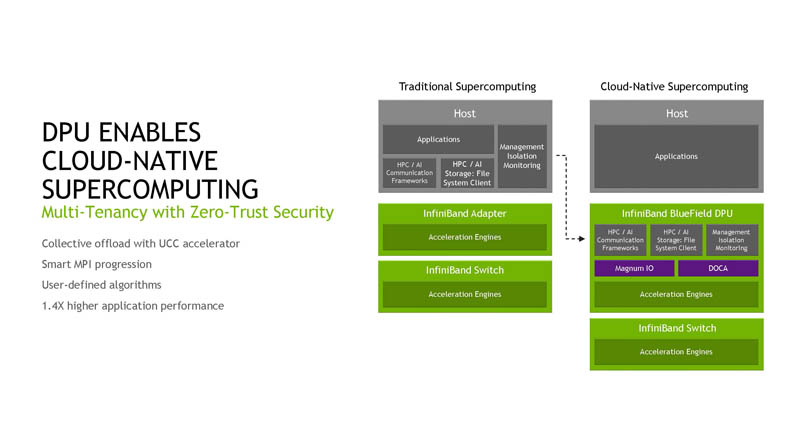

NVIDIA is building what it believes is the chip and accelerator needed to run the infrastructure portion of the data center while providing more security and deployment flexibility.

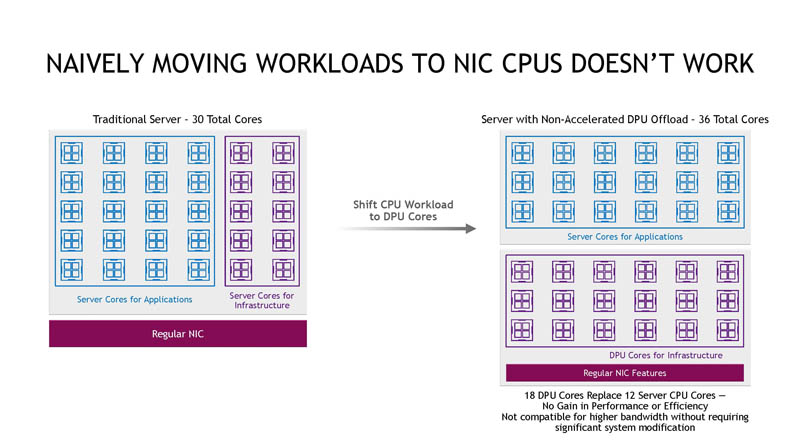

Some of the lower-end SmartNICs looked to simply move CPU workloads directly to Arm cores on the SmartNIC. That approach has fallen out of favor because often the SmartNIC cores are slower than the high-end CPU cores. At some point, one simply needs to add too many cores for this model to work.
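To make the core-count argument concrete, here is a minimal back-of-the-envelope sketch. The function name and the relative per-core speeds are hypothetical illustration values, not figures from NVIDIA or from our testing. It simply shows how quickly the required SmartNIC core count grows as each SmartNIC core gets slower relative to a host core.

```python
import math


def smartnic_cores_needed(host_cores: int, relative_core_speed: float) -> int:
    """Estimate SmartNIC cores needed to replace `host_cores` host CPU cores.

    `relative_core_speed` is the assumed per-core throughput of a SmartNIC
    core as a fraction of a host core (hypothetical value, e.g. 0.5 = half).
    """
    if host_cores < 0:
        raise ValueError("host_cores must be non-negative")
    if relative_core_speed <= 0:
        raise ValueError("relative_core_speed must be positive")
    return math.ceil(host_cores / relative_core_speed)


# Hypothetical example: offloading the work of 8 host cores.
for speed in (1.0, 0.5, 0.25):
    print(f"relative speed {speed}: {smartnic_cores_needed(8, speed)} SmartNIC cores")
```

Under these assumed ratios, the core count scales inversely with per-core speed, which is why a pure "move it to Arm cores" model runs out of room on a power- and area-constrained card.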

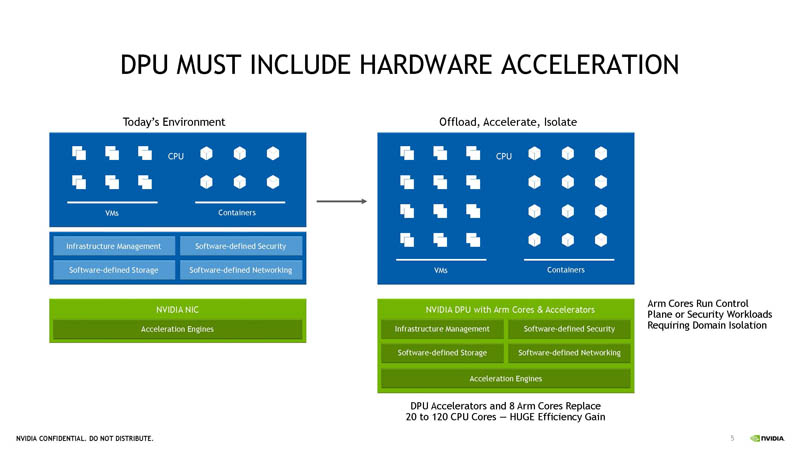

NVIDIA sees network acceleration as important for getting gains from added accelerators, beyond what simply adding Arm cores provides.

NVIDIA is looking to double the networking performance and roughly quadruple the CPU performance in each generation. Eventually, NVIDIA AI acceleration for inferencing will make its way onto the DPU.
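As a rough illustration of what that cadence compounds to, the sketch below projects relative performance over a few generations. It assumes exactly 2x networking and 4x CPU per generation, normalized to 1.0 for a baseline generation. The function name and the fixed multipliers are our simplification of "double" and "roughly quadruple", not NVIDIA roadmap numbers.

```python
def projected_scaling(generations: int, net_factor: float = 2.0, cpu_factor: float = 4.0):
    """Return (generation, relative networking, relative CPU) tuples.

    Generation 0 is the normalized baseline (1.0, 1.0). The per-generation
    factors are assumptions taken from the stated "double" and "roughly
    quadruple" goals.
    """
    if generations < 0:
        raise ValueError("generations must be non-negative")
    return [(g, net_factor ** g, cpu_factor ** g) for g in range(generations + 1)]


for gen, net, cpu in projected_scaling(3):
    print(f"gen +{gen}: networking x{net:g}, CPU x{cpu:g}")
```

Under these assumptions, CPU performance grows as the square of the networking gain, so the Arm complex catches up to the network pipe over time rather than falling behind it.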

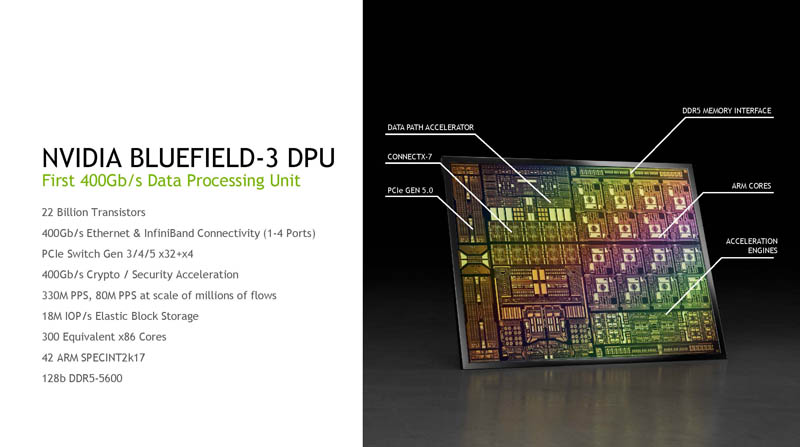

We will let our readers go through the specs on this slide. However, this is a huge amount of performance and a big jump from generation to generation. The “300 equivalent x86 cores” figure includes workloads moving from CPU cores to accelerators.

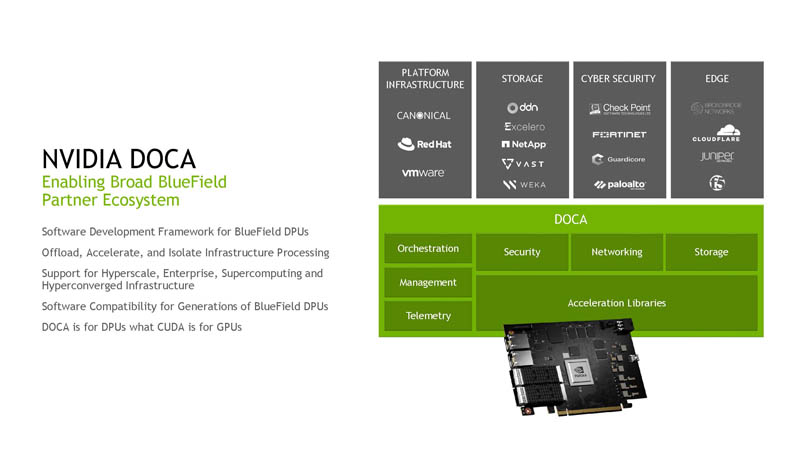

NVIDIA hopes DOCA will become the CUDA of the networking and infrastructure world.

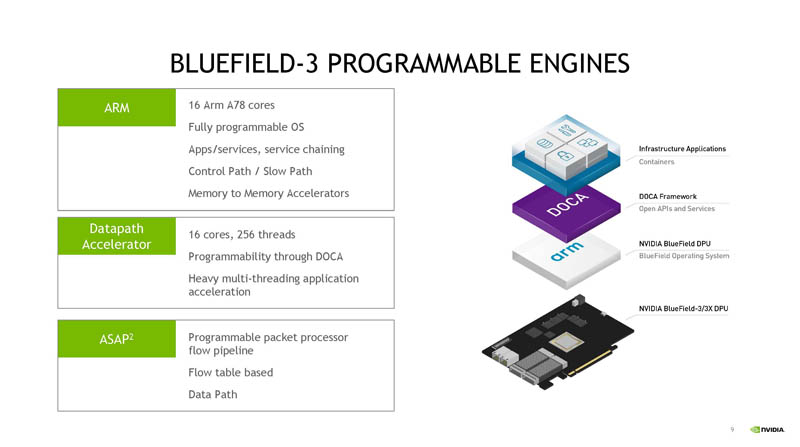

BlueField-3 uses 16x Arm A78 cores. From a CPU perspective, that should mean it is slower than the Marvell Octeon 10 with 36 N2 cores. NVIDIA also has data path accelerators with 16 cores and 256 threads. The company also has programmable packet processor pipelines. In some of the other DPUs out there, P4 programmability would be mentioned on a slide like this.

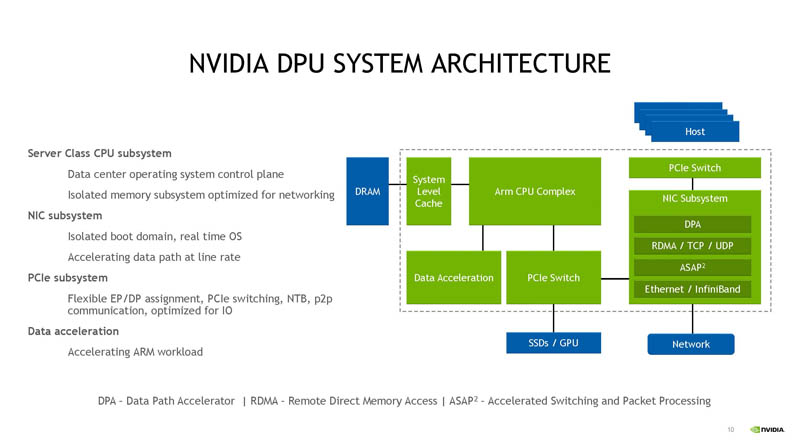

This is the overall architecture. As an example of the data center operating system, we use Ubuntu Server. One will see that the NIC subsystem is independent of the Arm system and runs its own real-time OS. As a result, one can reboot the Arm side and not reboot the NIC side. In current BlueField-2 DPUs, as an example, one can set the DPU up so that the Arm complex sits as a bump in the wire between the host and the NIC, or the two can run independently. With BlueField-2, the CPU capabilities are much more primitive, so there is a larger performance penalty for the bump-in-the-wire setup.

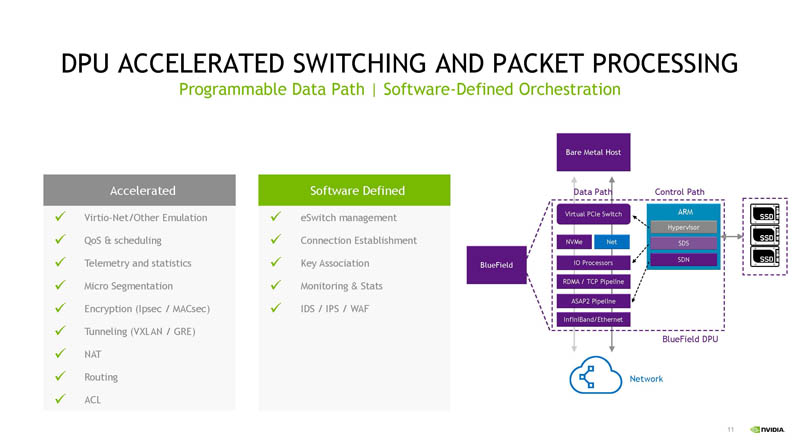

This is how NVIDIA is separating the data path and the control path, along the lines of what is heavily hardware-accelerated and what is software-defined.

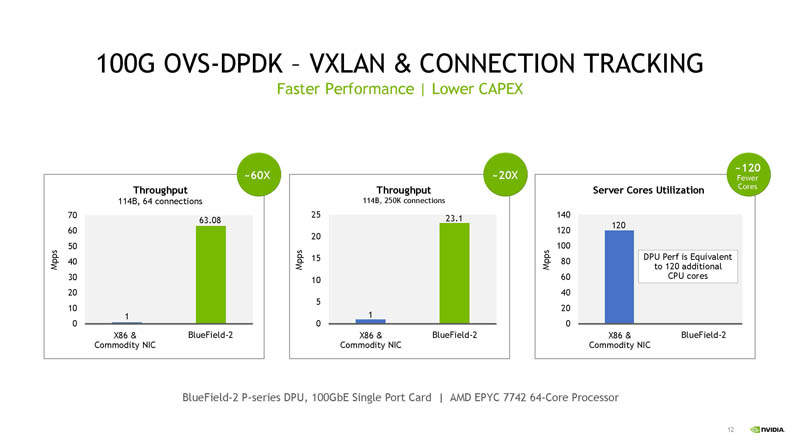

NVIDIA has a number of examples. One is OVS and DPDK performance.

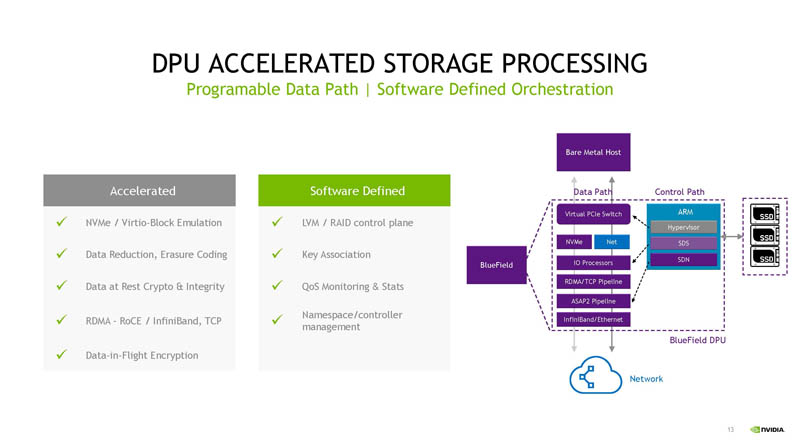

Likewise, for accelerated storage, we can see that the DPU can be connected to NVMe SSDs. LVM or software RAID can be run on the DPU and the storage can be attached directly to the DPU. We tried setting this up several quarters ago and it was not ready for public demo yet. The impact is that instead of a server with a RAID card and a high-speed NIC, the BlueField DPU can take over those workloads without necessarily needing a host.

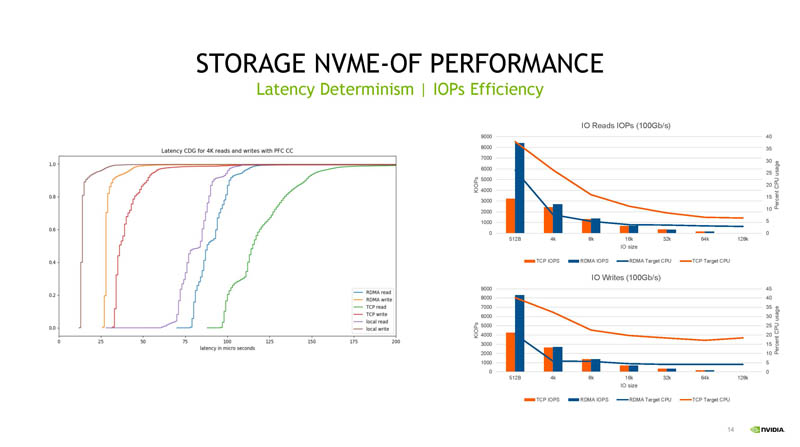

The NVMeoF stack is not overly difficult to accelerate. Very low-power early SmartNICs could run this without an issue. Still, the idea here runs in the opposite direction: SSDs can be accessed over the network and exposed to the host without needing to have local SSDs. Intel has been talking about diskless servers with Mount Evans, and this is NVIDIA showing this use case with hardware acceleration.

NVIDIA can also offload some HPC functions. Eventually, this is heading toward fabric-attached GPUs like Grace. In the meantime, BlueField can act as Ethernet or InfiniBand.

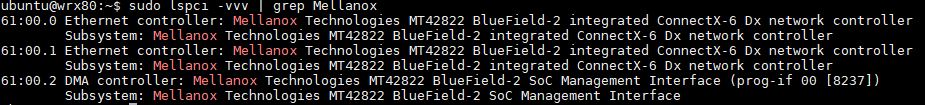

That is actually a point many do not realize with the BlueField-2: the NICs we have are ConnectX-6 VPI NICs.

As a result, one can switch their high-speed ports from InfiniBand to Ethernet networking.
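For readers who have not done this before, the port protocol on ConnectX VPI adapters is typically changed with the `mlxconfig` tool from the NVIDIA firmware tools by setting `LINK_TYPE_P1` or `LINK_TYPE_P2` (1 for InfiniBand, 2 for Ethernet). The small helper below only builds that command line and does not run it. The function name is hypothetical, and the device path is a placeholder you would replace with the device shown on your own system. The change generally takes effect only after a firmware reset or reboot.

```python
from typing import List

# Link type values used by mlxconfig on VPI adapters.
LINK_TYPE = {"infiniband": 1, "ethernet": 2}


def link_type_command(device: str, port: int, mode: str) -> List[str]:
    """Build (but do not execute) an mlxconfig command to set a port's protocol.

    `device` is a placeholder for the adapter's device path on your system.
    `port` is 1 or 2; `mode` is "infiniband" or "ethernet".
    """
    if port not in (1, 2):
        raise ValueError("port must be 1 or 2")
    key = mode.lower()
    if key not in LINK_TYPE:
        raise ValueError("mode must be 'infiniband' or 'ethernet'")
    return ["mlxconfig", "-d", device, "set", f"LINK_TYPE_P{port}={LINK_TYPE[key]}"]


# "<device>" is a placeholder, not a real device path.
print(" ".join(link_type_command("<device>", 1, "ethernet")))
```

Building the argument list separately from running it makes it easy to review the exact command before touching firmware settings.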

Final Words

Overall, we are very excited for BlueField-3. We do wish that NVIDIA had opted to use N2 cores instead of A78 for that product, but NVIDIA is looking to make major jumps in its DPU performance over time, and much faster than the rates we have seen on the server side.

With large vendors like NVIDIA, Intel, Marvell, VMware, Amazon AWS, and others all pushing DPUs, this is a technology transition that is happening and will become more apparent over the next few quarters.
