It has taken over thirteen months for the deal to close since NVIDIA emerged victorious over Intel in the competitive bidding process for Mellanox. At the time, we went into detail in our piece NVIDIA to Acquire Mellanox a Potential Prelude to Servers. The central theme was that with Mellanox, NVIDIA has the opportunity to connect GPUs to a high-speed fabric without needing a traditional x86 CPU. Over the past few months, there has been a lot of change, potentially highlighting the need for this acquisition.

Changes in NVIDIA and Mellanox HPC Fortunes

With Intel halting Omni-Path development after the first-generation OPA100, Mellanox was looking unstoppable in high-end clusters. Since the NVIDIA-Mellanox deal was announced, however, HPE-Cray has won the first three exascale supercomputers with one all-Intel and two all-AMD designs, all using the Cray Slingshot interconnect rather than InfiniBand. Effectively, NVIDIA-Mellanox, a key player in today’s high-end HPC space, was shut out of the first three exascale systems.

There is plenty of opportunity beyond those first three systems. For one, it is unlikely that every supercomputer announced over the next few years will continue this trend of HPE-Cray dominance, so there are still plenty of opportunities out there for NVIDIA-Mellanox. Make no mistake, though: these three wins are a big endorsement of Slingshot, and they will come up in every NVIDIA-Mellanox deal.

Another big development is that we have seen NVIDIA CUDA on Arm Becoming a Reality. We will likely hear more on this development at the NVIDIA GTC 2020 keynote, set for May 14. Bringing CUDA to Arm does a few things. It helps solve NVIDIA’s biggest weakness in traditional HPC markets today by adding CPU options. While Intel and AMD are pitching future x86 CPUs paired with their own GPUs, NVIDIA did not have a real high-end CPU option. We have heard that Marvell ThunderX3 has been doing well with NVIDIA GPUs.

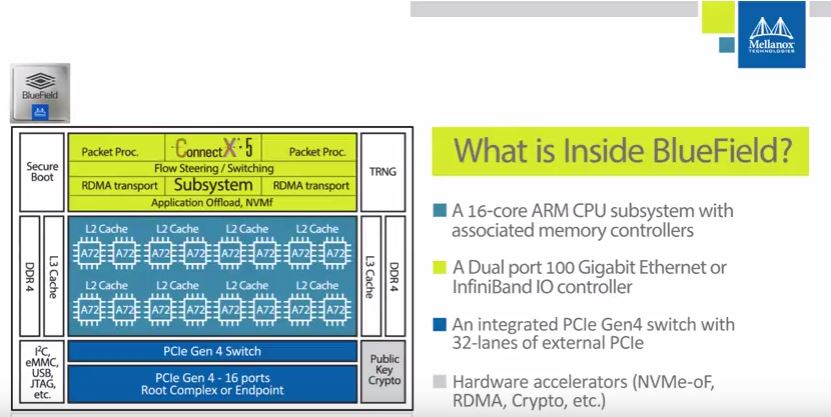

Next, NVIDIA now effectively has the IP to put GPUs directly on the fabric without even needing a host x86 CPU. Mellanox BlueField gives NVIDIA Arm cores to handle management, a ConnectX-5 NIC with offload engines, local RAM for caching, and a PCIe Gen4 switch.

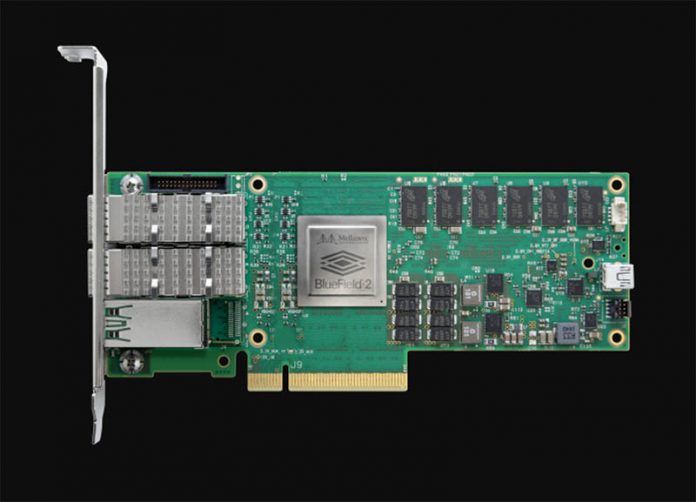

We have already seen demos of Mellanox BlueField-2 IPU SmartNIC Bringing AWS-like Features to VMware. Running GPU host functions on one of these NICs is very feasible, even if VMware is not known as a powerhouse in the HPC market.

In larger AI systems, storage is a big challenge. Adding BlueField-2 NICs, and future NICs with a full set of NVMe-oF offloads, allows one to integrate storage onto the fabric as well.

What is interesting is that with Mellanox, NVIDIA now holds the key to putting storage and its PCIe accelerators directly on high-performance fabrics. We say “fabrics” here because Mellanox is a big player in both InfiniBand networking and Ethernet NICs.

Final Words

We will certainly hear more about this in the coming weeks and months. Still, the acquisition closing is a big deal for NVIDIA, Mellanox, and the industry. In recent channel checks, we have been hearing more stories of Mellanox lead times increasing over the past few weeks. Hopefully, NVIDIA’s greater scale helps strengthen that supply chain.

What is perhaps most exciting is seeing where NVIDIA plans to take this technology beyond the above. If NVIDIA’s plan is indeed to liberate GPUs from traditional servers, this may mark one of the biggest architectural changes in a while. One could even imagine startups with racks of GPUs in the office and no x86 servers.

In a world where AMD and Intel have both CPUs and accelerators, NVIDIA now has accelerator and fabric along with a plan for (Arm) CPUs.

What about future POWER? As far as I know, IBM has its custom process nodes at (now) GlobalFoundries with SOI and on-chip eDRAM. Wouldn’t that be the CPU of choice if you want to keep NVLink integration?

“In a world where AMD and Intel have both CPUs and accelerators, NVIDIA now has accelerator and fabric along with a plan for (Arm) CPUs.”

Arm would take the place of the Xeons that are usually paired with NVIDIA GPUs; even the DGX systems use Xeons. The CPU is more or less a traffic cop.

Intel has both CXL and Gen-Z. Sure, AMD will support those as well, but there is no need to discuss low-production niche products.

Gen-Z (inter-rack) is not much different from IB and looks to have been created for the same purpose: high-speed, low-latency interconnection.

-In Slack We Trust-

On the Mellanox lead time note, yes, it takes us anywhere from 4 to 8 weeks to acquire network adapters, cables, and switches.

It’s been that way for at least 8 to 12 months.