The Top500 list has seen waning interest from submitters for years, but this list had some interesting shifts, with big changes in the CPUs deployed, accelerators, and even interconnects. One of the biggest stats is that 9 of the top 25 systems are new to this list. That is a huge 36% turnover in the top 25, compared with only about 8% across the remaining 475 spots.
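The turnover percentages are simple ratios. As a sketch, assuming the 48 total new systems reported for this list are split into 9 in the top 25 and the rest below it, the arithmetic looks like this (the function name `turnover_split` is hypothetical, just for illustration):

```python
# Top-25 vs. rest-of-list turnover, using this article's figures:
# 9 new systems in the top 25 and 48 new systems on the whole list.
TOP_N = 25
LIST_SIZE = 500

def turnover_split(new_in_top, new_total, top_n=TOP_N, list_size=LIST_SIZE):
    """Return (top-N turnover %, rest-of-list turnover %)."""
    rest_slots = list_size - top_n          # 475 remaining spots
    new_in_rest = new_total - new_in_top    # new systems ranked below the top N
    return 100 * new_in_top / top_n, 100 * new_in_rest / rest_slots

top_pct, rest_pct = turnover_split(new_in_top=9, new_total=48)
print(f"top {TOP_N}: {top_pct:.0f}%  rest: {rest_pct:.1f}%")
```

Under that assumption, 39 new systems across 475 spots works out to roughly 8.2%, which matches the "about 8%" figure.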

For those wanting to take a trip down into the archives, you can find our previous pieces here: June 2023, November 2022, June 2022, November 2021, June 2021, November 2020, June 2020, November 2019, June 2019.

Top500 New System Trends

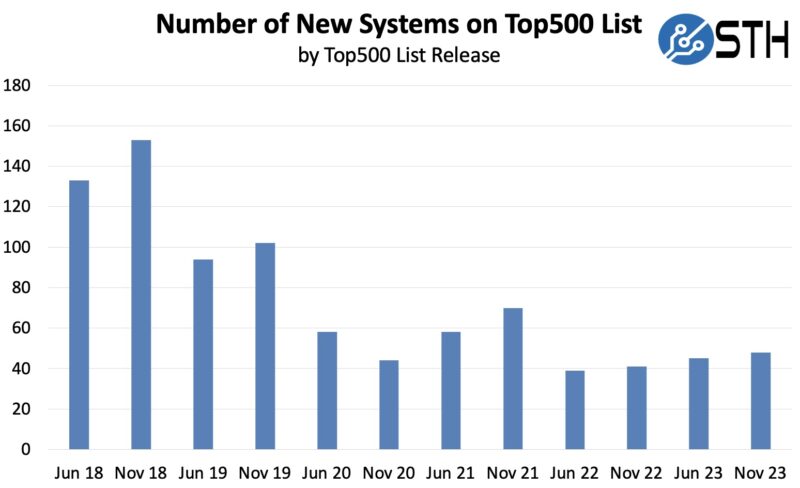

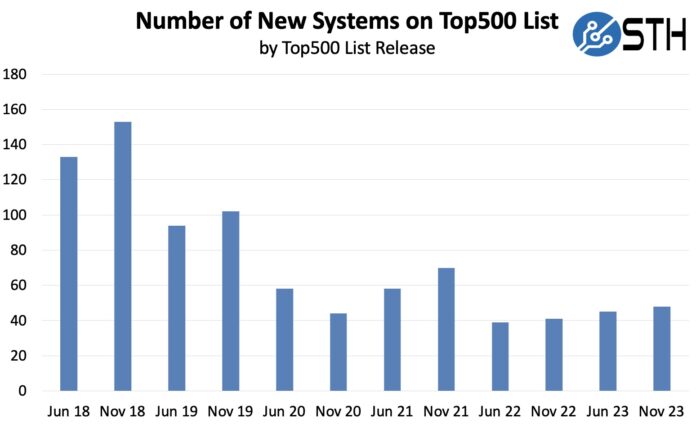

First, we highlight the sheer amount of turnover in the Top500. When we started this analysis in 2018, more than a quarter of the list turned over with each new publication. In 2020, the industry attributed a decrease in new systems to the pandemic. It seems we are now on a new trendline, with this list slightly below the average since 2020.

In this list, we have 48 new systems, up from 44 in June. Both figures are up from 2022’s lists, which had 41 and 39 new systems. Another notable change in this list is that Lenovo had fewer web hosting-style clusters submitting Linpack runs.
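To put those counts in list-share terms, here is a minimal sketch that converts each new-system count into a percentage of the 500-system list. The two 2022 counts are left unlabeled because the text does not say which list each belongs to, and `turnover_pct` is a hypothetical helper name:

```python
LIST_SIZE = 500

# New-system counts quoted in this article. The two 2022 figures are kept
# unlabeled because the text does not say which 2022 list each belongs to.
counts = {"Nov 2023": 48, "Jun 2023": 44, "2022 (a)": 41, "2022 (b)": 39}

def turnover_pct(new_systems, list_size=LIST_SIZE):
    """Share of the list occupied by newly submitted systems, in percent."""
    return 100 * new_systems / list_size

for label, n in counts.items():
    print(f"{label}: {n} new -> {turnover_pct(n):.1f}% of the list")

# The 2018-era "more than a quarter" turnover implies more than 125 new systems.
quarter_threshold = LIST_SIZE // 4
print(f"quarter-turnover threshold: more than {quarter_threshold} new systems")
```

Even the strongest recent list, at 48 new systems (9.6%), is well under half of the 2018-era quarter-turnover level.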

It seems as though the Top500 list is dying, and perhaps a Top 25, Top 50, or Top 100 would be more relevant these days. Part of the reason is that China is not submitting its domestic systems, while a Chinese vendor, Lenovo, is submitting systems outside of China.

Top500 New System CPU Architecture Trends

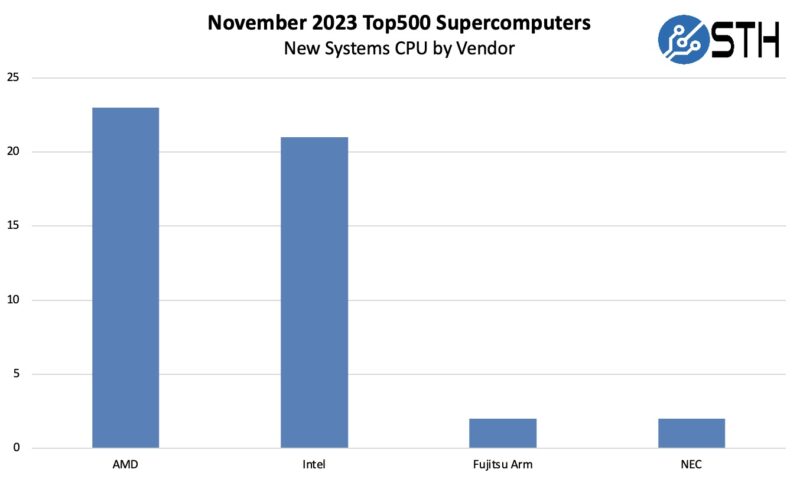

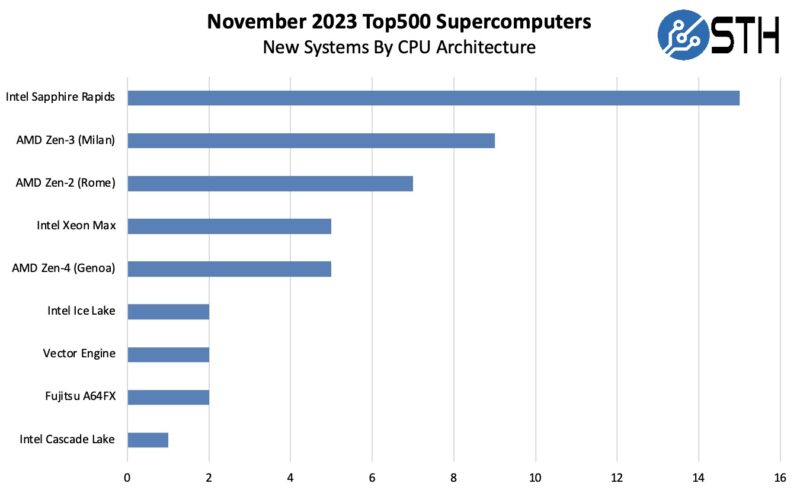

In this section, we look at CPU architecture trends by examining the new systems that entered the Top500 and the CPUs they use. Let us start with the vendor breakdown.

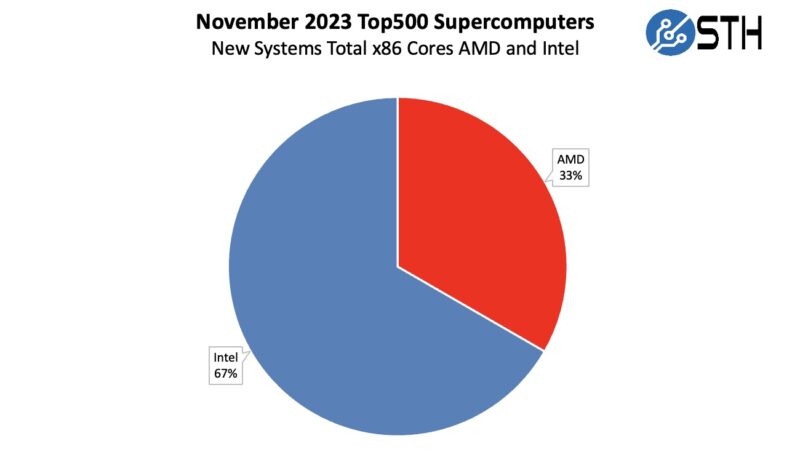

AMD edged out Intel again based on the number of systems, but that only tells part of the story. While AMD had more systems, Intel had more cores deployed.
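How can one vendor lead on system count while the other leads on cores? It depends on whether you count each system once or weight it by size. The sketch below uses invented records (they are NOT Top500 data) purely to show the two aggregations diverging:

```python
from collections import Counter

# Hypothetical new-system records as (CPU vendor, total CPU cores).
# These values are invented to illustrate the counting; they are NOT Top500 data.
systems = [
    ("AMD", 20_000), ("AMD", 15_000), ("AMD", 12_000),
    ("Intel", 90_000), ("Intel", 40_000),
]

# Count each system once per vendor.
by_count = Counter(vendor for vendor, _ in systems)

# Weight each system by its core count instead.
by_cores = Counter()
for vendor, cores in systems:
    by_cores[vendor] += cores

print(by_count.most_common(1))  # vendor with the most systems
print(by_cores.most_common(1))  # vendor with the most cores
```

With these made-up numbers, AMD wins on systems (3 vs. 2) while Intel wins on cores, because a few large deployments can outweigh several smaller ones.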

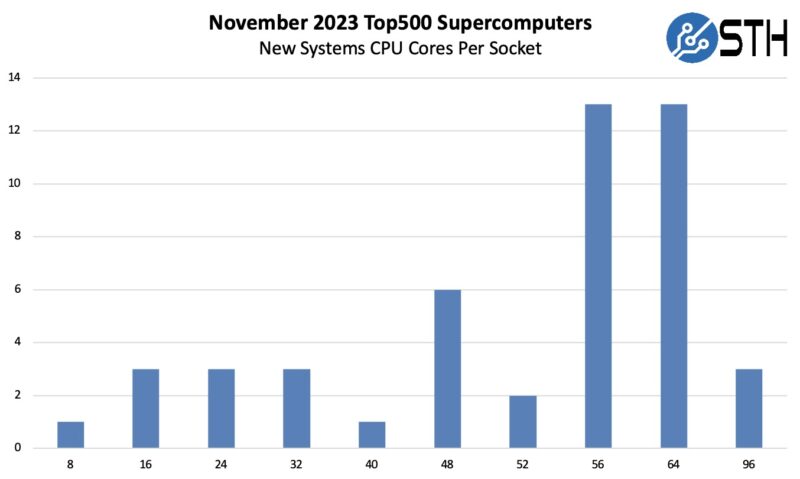

Turning to CPU cores per socket, the big change is the drop-off in 32-core systems. This list’s systems generally use higher core-count CPUs than those on previous lists.

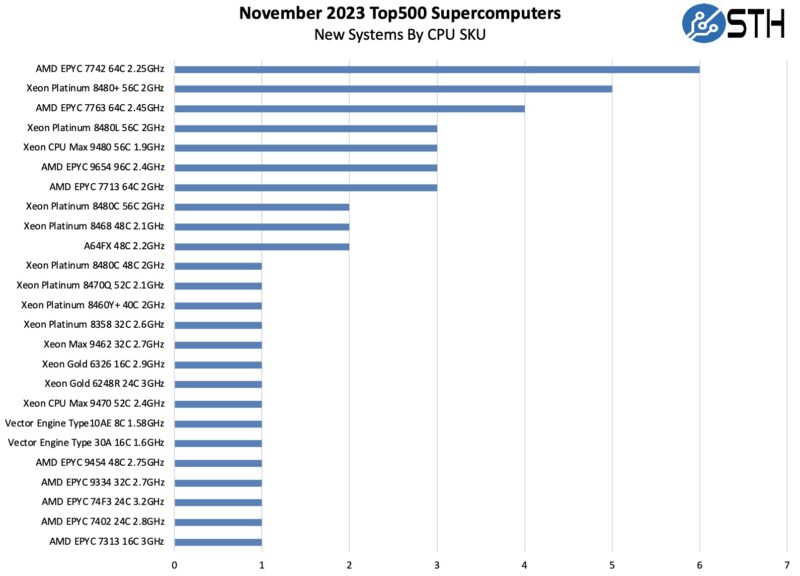

We finally see 96-core parts with the AMD EPYC 9004 “Genoa” series showing up. We also see 56-core parts, which are generally newer Intel Sapphire Rapids Xeon parts.

In the June 2023 list, the most popular architecture was AMD’s Zen 3, or 3rd Gen AMD EPYC. In this list, 4th Gen Intel Xeon Scalable “Sapphire Rapids” did really well.

The wrinkle is that instead of appearing as “Sapphire Rapids HBM”, these parts are now listed as Xeon Max. Xeon Max alone is keeping pace with AMD’s current-generation Zen 4 “Genoa” architecture. If you want to learn more about Xeon Max, check out our Intel Xeon MAX 9480 Deep-Dive.

Here are the actual SKUs that are being used:

It is somewhat crazy that the 2019-era AMD EPYC 7002 series “Rome” CPUs are the most popular on a November 2023 list.

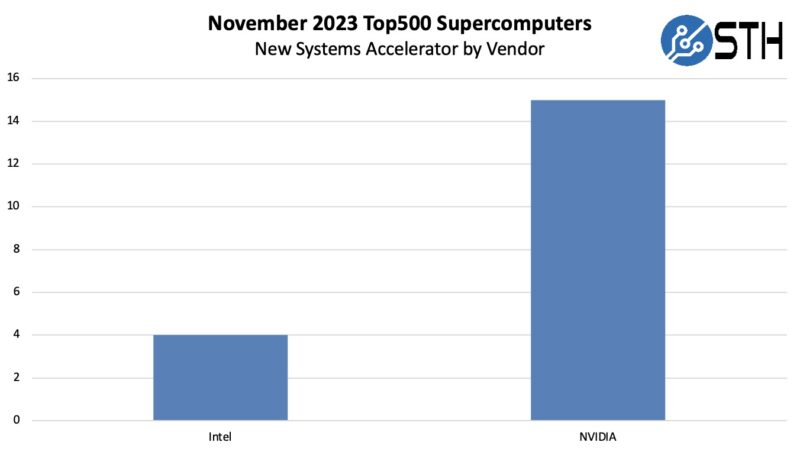

Accelerators or Just NVIDIA?

NVIDIA has been so dominant in the HPC accelerator market that this section’s title addresses it directly. In this list, NVIDIA was not quite the only accelerator vendor, but it was close:

Here is the big question: Where is AMD? For four lists, the AMD MI250X accelerator has powered the #1 system on the Top500, alongside several smaller deployments. Yet among this list’s new systems, AMD is absent.

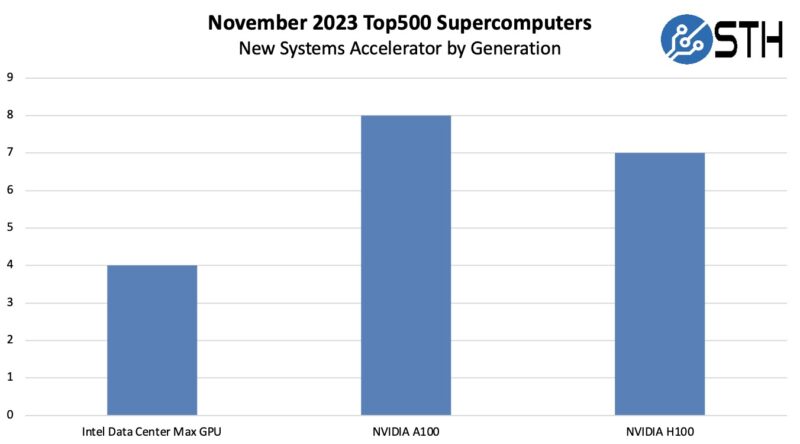

What perhaps makes more sense is that the NVIDIA A100 is doing relatively well. The NVIDIA H100 has sold above MSRP in many big deals this year. The H100 is faster, but its performance advantage over the A100 is much larger for AI than for FP64.

Putting this into a bit of perspective, any AI installation that has 10 or more DGX H100 or HGX H100 systems could make the Top500 list.
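A back-of-envelope check of that claim can be sketched as below. Several inputs are assumptions, not figures from this article: 67 TFLOPS FP64 Tensor Core peak per H100 SXM (NVIDIA’s datasheet figure), a hypothetical 70% HPL efficiency, and an entry-level Rmax of roughly 2 PFLOPS for the #500 spot, which should be checked against the published list. The helper names are hypothetical.

```python
GPUS_PER_NODE = 8        # DGX H100 / HGX H100 systems carry 8 H100 GPUs
FP64_TC_TFLOPS = 67.0    # assumption: H100 SXM FP64 Tensor Core peak per GPU
HPL_EFFICIENCY = 0.70    # assumption: fraction of peak achieved on HPL
ENTRY_RMAX_PFLOPS = 2.0  # assumption: approximate Rmax of the #500 system

def estimated_rmax_pflops(nodes):
    """Rough HPL Rmax estimate in PFLOPS for a cluster of 8-GPU H100 nodes."""
    rpeak_tflops = nodes * GPUS_PER_NODE * FP64_TC_TFLOPS
    return rpeak_tflops * HPL_EFFICIENCY / 1000

def min_nodes_to_qualify(threshold=ENTRY_RMAX_PFLOPS):
    """Smallest node count whose estimated Rmax meets the entry threshold."""
    nodes = 1
    while estimated_rmax_pflops(nodes) < threshold:
        nodes += 1
    return nodes

print(f"10 nodes: ~{estimated_rmax_pflops(10):.2f} PFLOPS estimated Rmax")
print(f"minimum nodes under these assumptions: {min_nodes_to_qualify()}")
```

Under these assumptions, 10 systems land around 3.75 PFLOPS, comfortably above a ~2 PFLOPS entry point, so the 10-system figure leaves margin for lower HPL efficiency or a rising threshold.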

Given NVIDIA’s data center revenues, it feels like NVIDIA’s customers are building systems and simply do not care about the Top500. In other words, the organizations deploying the newest and most popular HPC accelerators are not submitting to the Top500 to displace Lenovo’s web server clusters.

Fabric and Networking Trends

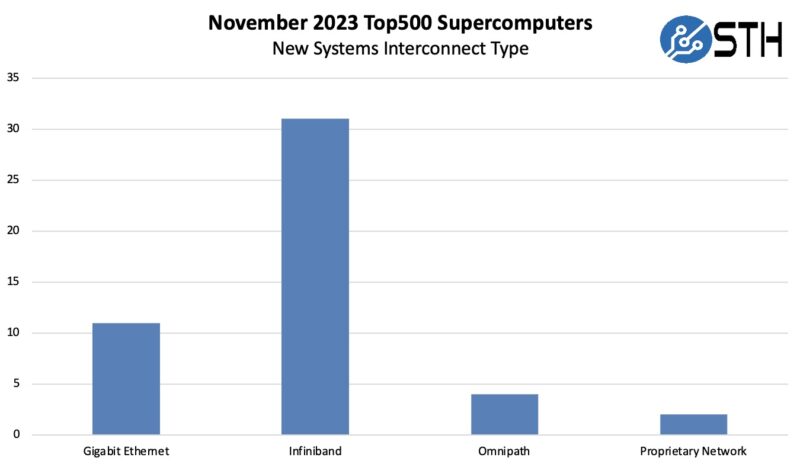

Here is one that regular readers of this piece will recognize. On the interconnect side, Ethernet had been by far the most common solution, but in 2023 InfiniBand made a huge comeback.

This is another big list for InfiniBand, especially after Ethernet had dominated many of the previous lists.

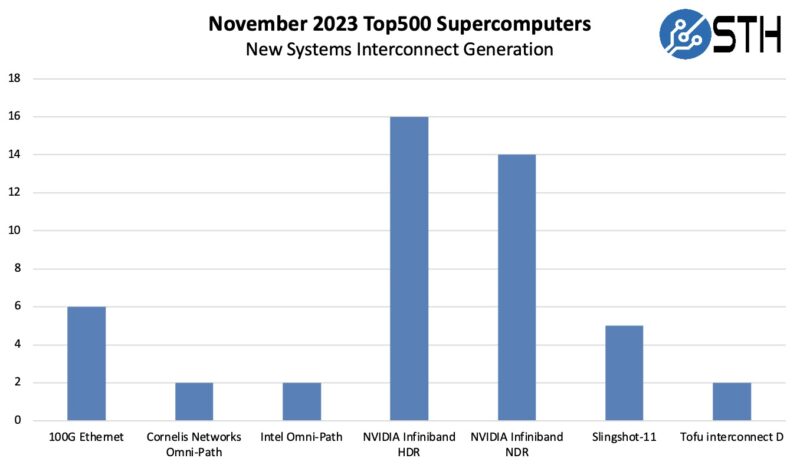

Here is a look at the interconnects broken down by generation.

We actually saw four Omni-Path systems, which is more than we expected.

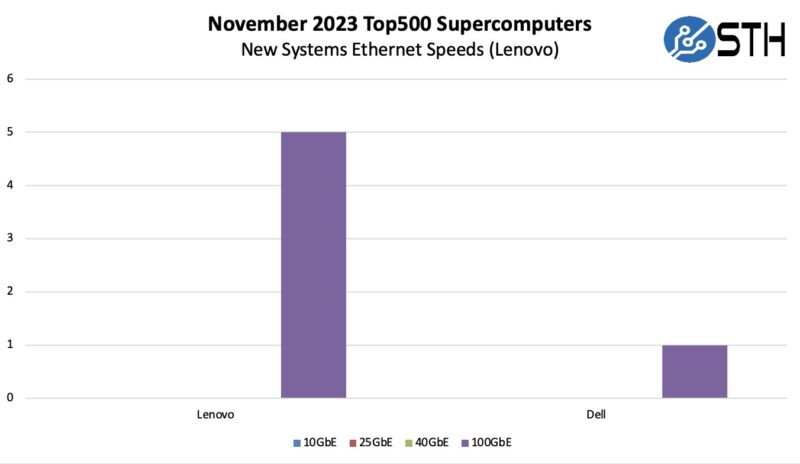

Lenovo submitted fewer list-stuffing systems than on previous lists. There were five CPU-only Ethernet clusters. Dell’s cluster used the PowerEdge R750xa with NVIDIA A100 PCIe GPUs and 100GbE.

Final Words

Seeing NVIDIA’s massive GPU sales, and knowing those AI systems have interconnects capable of supporting Top500-qualifying runs, really underscores a challenge for the Top500. At SC23, the big marketing dollars were behind AI solutions. AMD did not even hold an SC23 pre-brief; the company told us it is all-in on AI. Many of the SC23 talks were about how mixed-precision computing, rather than double precision, can help drive scientific advancement. One could argue that the goal of the Top500 was to track the largest systems for scientific computing. It would be hard to argue, however, that none of these untracked AI systems are making scientific advancements. We now have a list where many large AI systems are not submitted and Chinese domestically deployed systems are not submitted, yet web hosting clusters are submitting results. Maybe this is just the gap between Frontier and two new exascale systems hitting the list. Still, it feels like the Top500 is measuring something that fewer organizations find beneficial at this point. Perhaps that will change again over time.

Although the High-Performance Linpack (HPL) benchmark used to rank the Top500 is largely irrelevant from a scientific point of view, it is a wonderful stress test to ensure a new supercomputer works according to specification. Thus, running HPL makes sense as an engineering best practice when installing new systems, even those that will be used primarily for AI or cloud microservices. What doesn’t make sense is submitting such results to the Top500. Thankfully, many aren’t.

As to why there has not been much movement further down the list, my theory is that smaller sites have been more affected by post-pandemic inflation and can’t afford an upgrade. That is, at any rate, the case here.