Today, Microsoft showcased its newest NVIDIA GB200 systems through the official Microsoft Azure X account. The post shows its next-gen NVIDIA training and inferencing platform, and it is notable because it also reveals the giant cooling solution this rack needs. By giant, we mean that the coolant distribution unit, or CDU, takes up twice as much aisle space as the GB200 compute rack.

New Microsoft Azure NVIDIA GB200 Systems Shown

Here is Microsoft Azure’s post on X showing the GB200 system, in which it says Azure is the first NVIDIA Blackwell cloud.

Microsoft Azure is the 1st cloud running @nvidia‘s Blackwell system with GB200-powered AI servers. We’re optimizing at every layer to power the world’s most advanced AI models, leveraging Infiniband networking and innovative closed loop liquid cooling. Learn more at MS Ignite. pic.twitter.com/K1dKbwS2Ew

— Microsoft Azure (@Azure) October 8, 2024

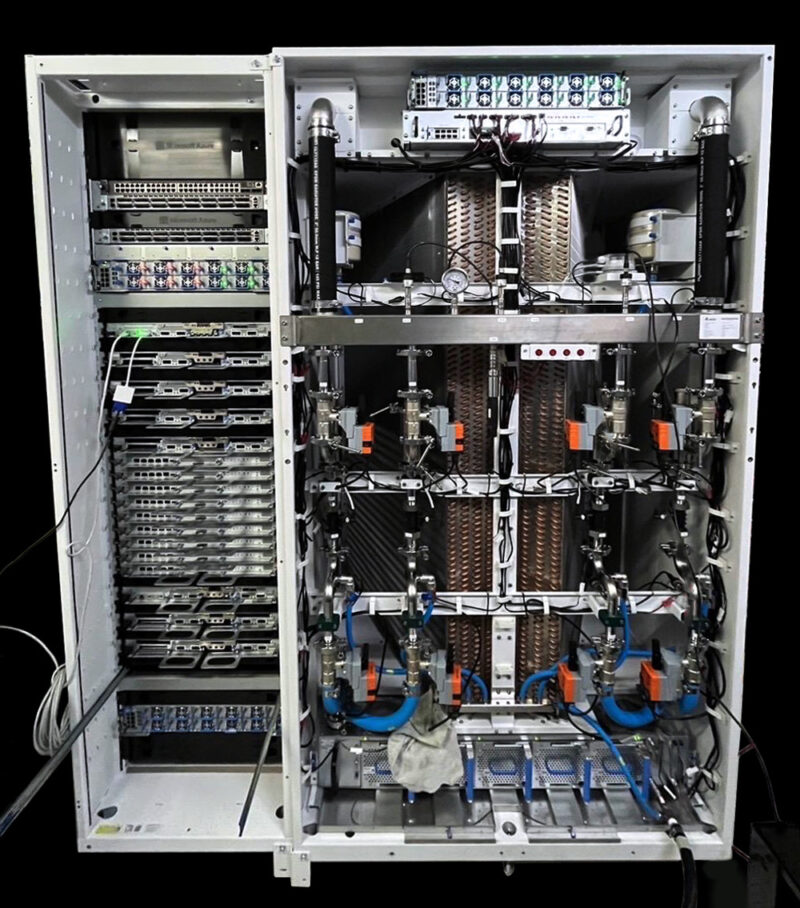

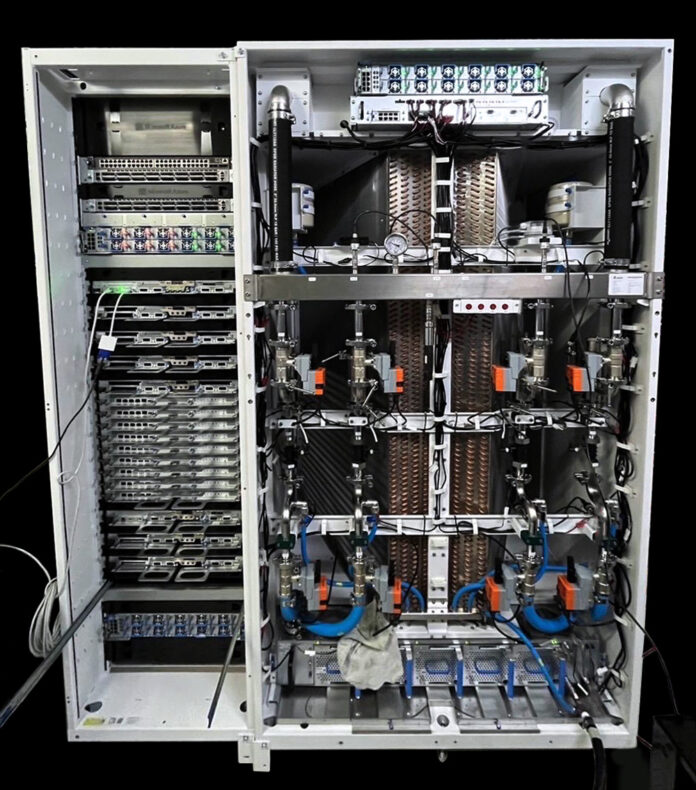

Taking a quick look at the rack, one thing is immediately obvious: the cooler dwarfs the compute rack, even though the compute rack has only eight GB200 trays installed.

The GB200 rack is on the left side of the image. The unit on the right is twice as wide, and in it we can see the giant liquid-to-air heat exchanger, essentially a fancy version of a car radiator, along with the pumps, power, and monitoring.

Final Words

Kudos to Microsoft Azure for being the first cloud with NVIDIA GB200 solutions. We also thought it was fun that Microsoft, unlike more secretive organizations such as AWS and Google, was proud enough to share not just its rack design but also its CDU.

For years, folks on the desktop side have used ever-larger air coolers and liquid coolers. This one is fun for computer enthusiasts because here the liquid cooler is roughly twice the size of the compute and networking rack itself.

Of course, this is necessary as the NVIDIA GB200 NVL designs are very dense.

Patrick’s Editor’s Note: Heat exchangers that are two racks wide usually serve multiple racks. Microsoft did not picture it, but I suspect this cooling might actually be for several racks, including some not shown, since it would be a huge unit for a single GB200 rack with eight compute trays installed. For reference, we have covered CoolIT in-row liquid-to-air CDUs that are two racks wide and handle 180 to 240kW in a similar footprint. CoolIT said its 240kW in-row CDU can handle up to four GB200 NVL72 racks. That does not appear to be the CDU Microsoft is showing, but it gives you a sense of how much cooling is normal in that footprint. Microsoft only showed one rack, which looks like a work in progress, next to the heat exchanger, but it is likely part of a larger installation. Who knows? What Microsoft shared, though, was just the two units side by side.
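As a rough sanity check on the CoolIT comparison, its stated figures imply an approximate per-rack cooling budget. This sketch simply divides CoolIT’s 240kW rating by the four NVL72 racks it quotes and assumes the load splits evenly across racks; it says nothing about the Microsoft unit, whose specs were not disclosed.

$$
\frac{240\ \text{kW}}{4\ \text{racks}} = 60\ \text{kW per GB200 NVL72 rack},
\qquad
\frac{180\ \text{kW}}{60\ \text{kW/rack}} = 3\ \text{racks}
$$

Under that even-split assumption, the low end of CoolIT’s 180 to 240kW range would cover about three such racks and the high end four.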

It is very interesting to see that such a large load is still liquid-to-air. I would imagine the CDU is cooling multiple racks; with such a large coil unit, it should handle two racks on each side. Even then, it’s crazy that they did not go liquid-to-chip on these. I especially like the Belimo valves they used on it.

That cooling unit is called the “Callan,” an evolution of the smaller “Sidekicks” they publicly announced last year. I will leave it to MS to release specific specs, but these units are quite the sight in person. Impressive tech!