At OCP Summit 2019, Intel had its new 100GbE adapters on display in the new OCP NIC 3.0 form factor. STH agreed to an embargo on information about these NICs during a recent briefing. However, Intel has now shown them publicly, so we are simply going to show what Intel is publicly displaying about these NICs. Intel has been largely absent from the 100GbE generation, even though vendors like Mellanox (soon NVIDIA) had adapters such as the ConnectX-4 in 2014/2015. Intel had the multi-host FM10420 but no mainstream successor to Fortville. Four or five years later, Intel is joining the 100GbE NIC ecosystem, about two years after it joined the 25GbE ecosystem with the Intel XXV710 adapters.

Intel 100GbE OCP NIC 3.0

The Intel 100GbE OCP 3.0 NIC uses the new OCP NIC 3.0 card form factor. Both the first and second generation OCP NICs used a mezzanine form factor. Here is an example from our Gigabyte H261-Z60 server review where a single-port 10GbE adapter is installed in the node:

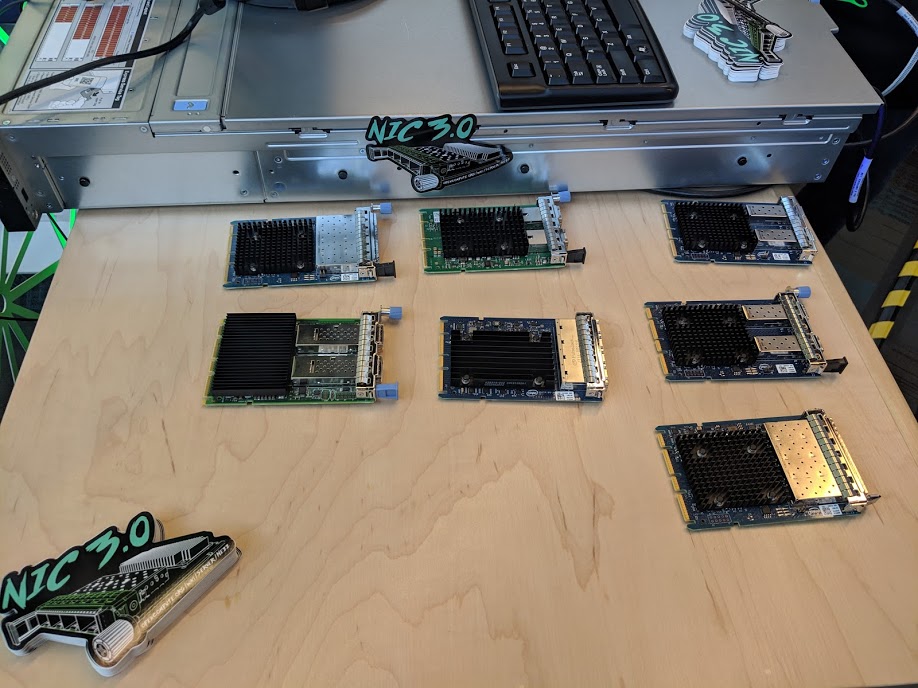

Intel is wholeheartedly supporting the new OCP NIC 3.0 form factor. Here is a spread of NICs that the company is showing off:

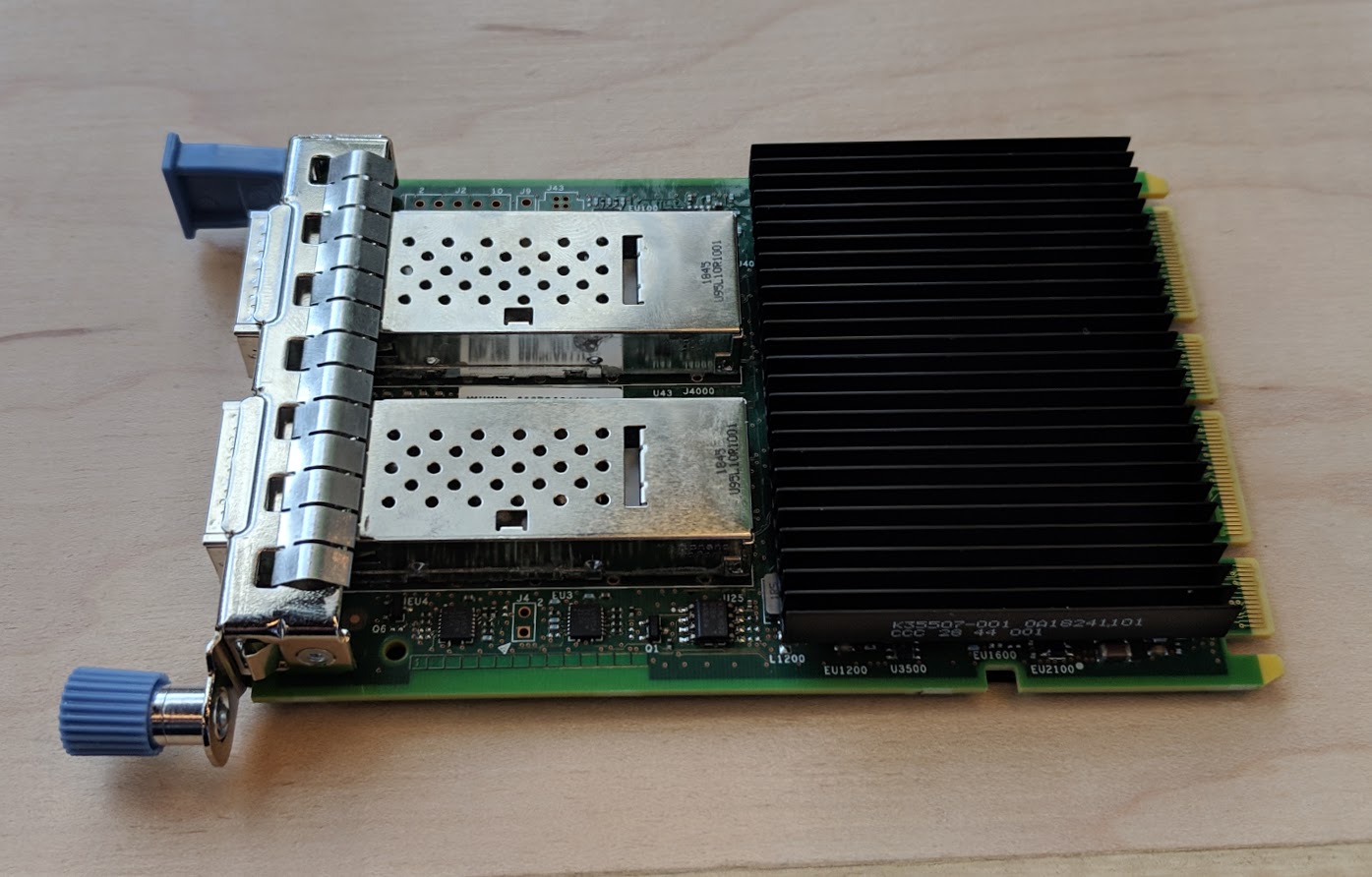

One of the cards is the Intel 100GbE OCP 3.0 NIC. Here is a better shot of that:

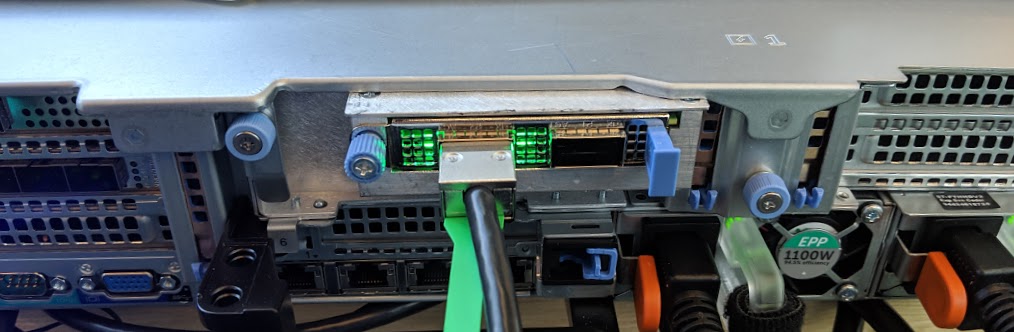

Intel also showed the 100GbE NIC in a Dell EMC PowerEdge server running a 100GbE demo.

This particular server had multiple NICs, including PCIe versions. The demo is not showing any sort of offload features, which we expect in 100GbE generations due to the processing power required at that line rate. It is simply showing 100Gbps speeds going over the wire.
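To put the processing power required at that line rate into rough numbers, here is a back-of-the-envelope sketch. It is our own illustration, not something Intel showed. It assumes standard Ethernet framing, where each frame carries 8 bytes of preamble and start-of-frame delimiter plus a 12-byte inter-frame gap on the wire, and the function names are hypothetical.

```python
# Rough packet-rate arithmetic for a 100GbE link.
# Assumption: standard Ethernet framing overhead per frame on the wire.
PREAMBLE_AND_SFD_BYTES = 8   # preamble + start-of-frame delimiter
INTERFRAME_GAP_BYTES = 12    # minimum idle gap between frames

LINK_BPS_100GBE = 100_000_000_000  # 100 Gbps


def packets_per_second(link_bps, frame_bytes):
    """Maximum frames per second at line rate for a given frame size."""
    wire_bits = (frame_bytes + PREAMBLE_AND_SFD_BYTES + INTERFRAME_GAP_BYTES) * 8
    return link_bps / wire_bits


def ns_per_packet(link_bps, frame_bytes):
    """Time budget per frame, in nanoseconds, at line rate."""
    return 1e9 / packets_per_second(link_bps, frame_bytes)


if __name__ == "__main__":
    # 64 bytes is the minimum Ethernet frame; 1518 is the standard maximum.
    for size in (64, 1518):
        pps = packets_per_second(LINK_BPS_100GBE, size)
        budget = ns_per_packet(LINK_BPS_100GBE, size)
        print(f"{size:>5}-byte frames: {pps / 1e6:8.2f} Mpps, {budget:7.2f} ns per packet")
```

With minimum-size 64-byte frames, that works out to roughly 148.8 million packets per second, or about 6.7 ns per packet. That leaves very little time for a host CPU to handle each packet, which is why offloads matter at this speed.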

Final Words

It is great to see that Intel is joining the 100GbE fray. We recently reviewed the Mellanox ConnectX-5 VPI 100GbE and EDR InfiniBand adapter, which was released in 2016 and will be replaced in 2019 with the ConnectX-6 version. For the market, it is great to see Intel providing another choice of vendor. We will have more details on this new card as we can release them.

Personally, I would have expected Intel to have added RDMA/iWARP features to the adapter. Faster speed is good, but RDMA can significantly reduce message latency.

They should have recognized that their OPA tech is running a distant 3rd in the high-speed networking space and started a beachhead into more accepted (non-proprietary) tech like iWARP (given that RoCE is a Mellanox thing).

BinkyTO – just to clarify, we are under embargo on the features of this adapter. We can only say it exists per the information shown at OCP.

I know that is a pain, but it is what we have when Intel slow-trickles the release information.

Patrick, I will read between the lines and await new details as they are released, though I find it odd that such details would be dribbled out.