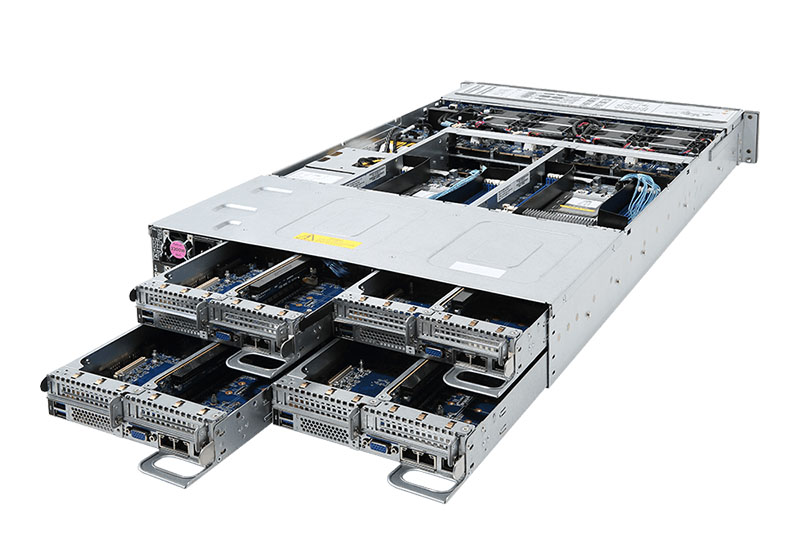

With four nodes in 2U, the new Gigabyte H261-Z60 offers a massive amount of compute power in a compact chassis. 2U4N designs are extremely popular because they double compute density versus standard 1U servers, have lower power and cooling costs, and are easy to service thanks to node trays in a shared chassis with redundant power supplies. This is a topic we have gone over several times at STH, and we even have a specific test methodology for these types of systems. You can read about that in our piece: How We Test 2U 4-Node System Power Consumption. Bringing this great form factor to the AMD EPYC 7000 series is a win for the market.

Gigabyte H261-Z60 Overview

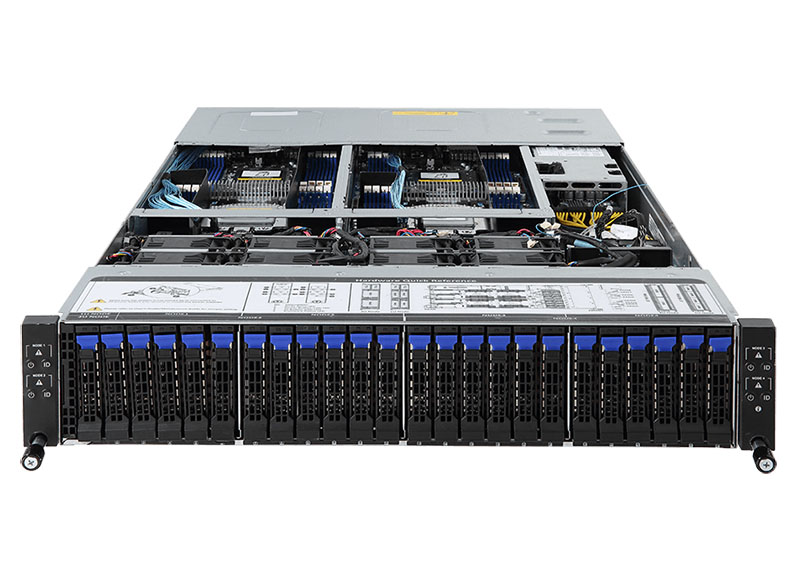

We like that Gigabyte uses a 2U chassis with 24x 2.5″ bays. Gigabyte has a flexible backplane that allows different storage configurations, which we discussed in our Gigabyte R281-G30 Versatile Compute Platform Review.

The rear of the chassis features four node sleds along with dual 2.2kW 80 Plus Platinum power supplies. The design allows for dual PCIe x16 (LP) expansion cards plus an OCP mezzanine networking card, which is an awesome amount of expansion capability in this form factor. Internally, there are dual M.2 NVMe SSD slots on each node as well.

With dual AMD EPYC 7000 series CPUs per node, the new Gigabyte H261-Z60 can handle up to 256 cores and 512 threads in a single 2U chassis. Compare this to the previous generation of servers, where the Intel Xeon E5-2699 V4 was the top bin at 22 cores. In a single generation we have gone from a maximum of 176 cores and 352 threads in 2U (22 cores * 8 sockets) to 256 cores and 512 threads, about a 45% increase in density. Each CPU is flanked by 8x DDR4 DIMM slots, and memory goes from 4 channels per CPU in the Xeon E5 era to 8 channels per CPU with the AMD EPYC 7000 series, or twice as many memory channels.
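The density arithmetic above can be checked with a small sketch. The `Chassis2U4N` class and its field names are hypothetical, made up for illustration. The figures (32 and 22 cores per CPU, 8 and 4 memory channels per CPU, two sockets per node, four nodes) come from the text, and two threads per core is assumed from the 256-core / 512-thread numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chassis2U4N:
    """Hypothetical model of a fully populated 2U 4-node chassis."""
    cores_per_cpu: int
    mem_channels_per_cpu: int
    threads_per_core: int = 2   # assumption: SMT / Hyper-Threading enabled
    sockets_per_node: int = 2
    nodes: int = 4

    @property
    def sockets(self) -> int:
        return self.sockets_per_node * self.nodes

    @property
    def cores(self) -> int:
        return self.cores_per_cpu * self.sockets

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core

    @property
    def mem_channels(self) -> int:
        return self.mem_channels_per_cpu * self.sockets


# Top-bin parts named in the article.
epyc = Chassis2U4N(cores_per_cpu=32, mem_channels_per_cpu=8)
xeon = Chassis2U4N(cores_per_cpu=22, mem_channels_per_cpu=4)

# Percentage increase in cores per 2U chassis.
increase_pct = (epyc.cores / xeon.cores - 1) * 100

print(f"EPYC 7000: {epyc.cores} cores / {epyc.threads} threads, "
      f"{epyc.mem_channels} memory channels")
print(f"Xeon E5 v4: {xeon.cores} cores / {xeon.threads} threads, "
      f"{xeon.mem_channels} memory channels")
print(f"Core density increase: {increase_pct:.1f}%")
```

The sketch only counts sockets, cores, and channels. It says nothing about per-core performance, clock speeds, or actual memory bandwidth.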

Final Words

At STH, we have the Supermicro 2U 4-node system (which is the basis for the HPE Apollo 35) with testing wrapping up, and the Cisco UCS C4200 and C125 M5 project hopefully starting next quarter. AMD EPYC 2U 4-node servers are an awesome product segment once you experience the density they achieve. Patrick saw the Gigabyte H261-Z60 during his visit to the Gigabyte Computex booth this year and said that he thinks it is a great design.

What’s the max CPU count on one of these babies? Also any room for GPUs? I would not mind having this setup mining during idle time.

Each node has two sockets and there are four nodes, so eight sockets in total. With the first generation of AMD EPYC 7000 that is 32 cores * 8 sockets = 256 cores / 512 threads.

GPU-wise, you cannot fit large double-width GPUs.

Please do a comparison of 2U 4-node systems from 2010 to 2018.

Can you include the prices too?

I want to build a 256-core server with 4x 64-core AMD CPUs. Is this suitable for that?