The GPU compute segment is rocking right now. Firms investing in deep learning and AI have an insatiable appetite for GPU compute platforms to train their models. While many workloads have moved to the cloud, on-prem deep learning servers tend to pay for themselves in 3-4 months compared with renting the same capacity in the cloud. We delved into this in our piece: DeepLearning11: 10x NVIDIA GTX 1080 Ti Single Root Deep Learning Server (Part 1). Gigabyte is capitalizing on this trend. The new Gigabyte G481-HA0 and Gigabyte G481-S80 GPU compute servers are optimized for PCIe 3.0 x16 add-in cards and SXM2 modules, respectively, to suit different compute models.
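To make the payback claim concrete, here is a minimal sketch of the arithmetic. The function name and every dollar figure are hypothetical placeholders chosen for illustration, not pricing from Gigabyte or any cloud provider; plug in your own quotes.

```python
def payback_months(server_cost, monthly_on_prem_opex, monthly_cloud_cost):
    """Months until the monthly savings versus cloud cover the server purchase.

    All inputs are in the same currency. This is a simple undiscounted
    payback calculation, not a full total-cost-of-ownership model.
    """
    monthly_savings = monthly_cloud_cost - monthly_on_prem_opex
    if monthly_savings <= 0:
        raise ValueError("on-prem never pays back: cloud is not more expensive per month")
    return server_cost / monthly_savings


# Hypothetical example: a 20,000 server costing 1,000/month to power and host,
# replacing cloud GPU instances that would cost 7,000/month.
months = payback_months(server_cost=20_000,
                        monthly_on_prem_opex=1_000,
                        monthly_cloud_cost=7_000)
print(f"Payback period: {months:.1f} months")
```

With these made-up inputs the result lands in the 3-4 month range; lower utilization (and therefore a lower equivalent cloud bill) stretches the payback period accordingly.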

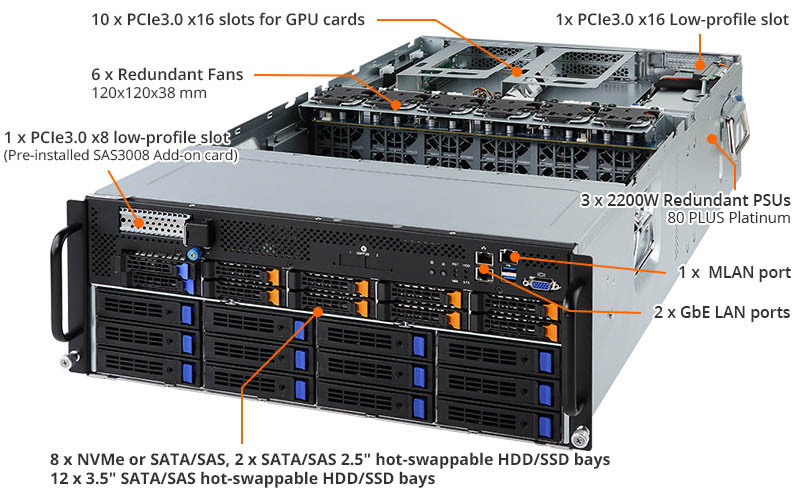

Gigabyte G481-HA0 GPU Compute Server

The Gigabyte G481-HA0 GPU compute server can handle up to 10x PCIe 3.0 x16 GPUs. The server is a dual-root design: up to 5x GPUs attach to each PCIe root, and each root is in turn attached to one of the Intel Xeon Scalable CPUs.
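A dual-root layout matters because GPUs under the same root can talk to each other without leaving that CPU's PCIe hierarchy, while traffic between roots generally has to cross the CPU-to-CPU link. The sketch below models that split. It assumes GPUs 0-4 sit on the first root and 5-9 on the second, which is a hypothetical numbering; on a real system, `nvidia-smi topo -m` reports the actual topology.

```python
from itertools import combinations

NUM_ROOTS = 2        # dual-root design, one root per CPU
GPUS_PER_ROOT = 5    # up to 5 GPUs per PCIe root
NUM_GPUS = NUM_ROOTS * GPUS_PER_ROOT


def pcie_root(gpu_index):
    """Return the root (0 or 1) a GPU hangs off, under the assumed numbering."""
    if not 0 <= gpu_index < NUM_GPUS:
        raise IndexError(f"GPU index {gpu_index} out of range")
    return gpu_index // GPUS_PER_ROOT


def same_root(gpu_a, gpu_b):
    """True if both GPUs share a PCIe root."""
    return pcie_root(gpu_a) == pcie_root(gpu_b)


# Split every GPU pair into same-root and cross-root groups.
pairs = list(combinations(range(NUM_GPUS), 2))
local_pairs = [p for p in pairs if same_root(*p)]
cross_pairs = [p for p in pairs if not same_root(*p)]

print(f"{len(local_pairs)} same-root pairs, {len(cross_pairs)} cross-root pairs")
```

Under this assumed numbering, more than half of all GPU pairs span the two roots, which is why frameworks that rely heavily on GPU-to-GPU transfers often favor single-root designs or pin jobs to the GPUs under one root.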

The platform has two GPUs that sit atop the main segment of 8x GPUs at the rear of the chassis. Perhaps one of the more defining features is the array of 8x NVMe, 2x SATA/SAS and 12x 3.5″ SATA/SAS drives. Storage in the deep learning field is still evolving, and many organizations look to retain datasets or cached data in the chassis for better performance.

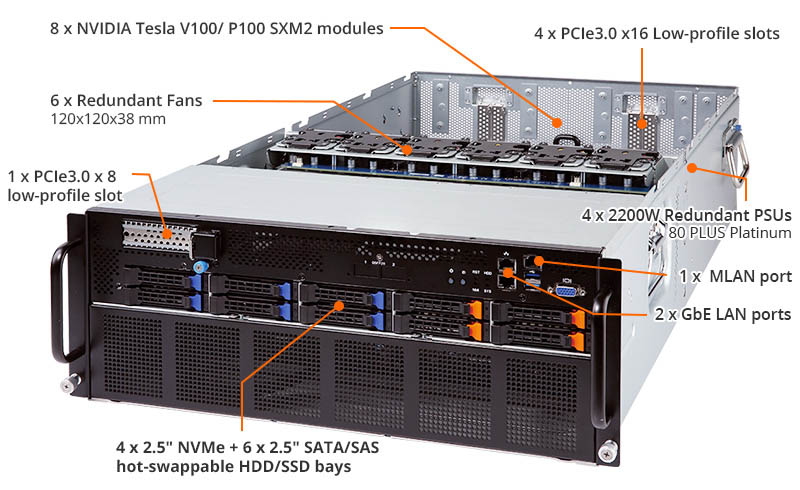

Gigabyte G481-S80 GPU Compute Server

The Gigabyte G481-S80 GPU compute server is designed to take advantage of NVIDIA SXM2 modules, either the NVIDIA Tesla V100 or Tesla P100. This is a popular option for deep learning data scientists because the server uses NVLink for high-speed GPU-to-GPU communication.

In terms of front drive expansion, there are four NVMe U.2 2.5″ drive bays and six SATA/SAS hot-swap bays. Previous generations of GPU compute servers utilized a single NVMe add-in card in the local chassis for high-speed storage. The 4x PCIe 3.0 x16 slots at the rear of the chassis are designed for high-speed 100GbE/EDR InfiniBand networking.

Gigabyte G481-S80 and G481-HA0 GPU Compute Server

Here are the specs for the two servers:

| Model | G481-S80 | G481-HA0 |
| --- | --- | --- |
| CPU | Dual Intel Xeon Scalable series processors (up to 205W TDP per socket) | Dual Intel Xeon Scalable series processors (up to 205W TDP per socket) |
| DIMM | 6-Channel RDIMM/LRDIMM/NVDIMM DDR4, 24 x DIMMs | 6-Channel RDIMM/LRDIMM/NVDIMM DDR4, 24 x DIMMs |
| LAN | 2 x 1GbE LAN ports (Intel® I350-AM2, front); 2 x 1Gb M-LAN ports (front & rear) | 2 x 10GbE LAN ports (Intel® X550-AT2, rear); 2 x 1GbE LAN ports (Intel® I350-AM2, front); 2 x 1Gb M-LAN ports (front & rear) |
| Storage | 4 x 2.5″ U.2 and 6 x 2.5″ SATA/SAS hot-swappable HDD/SSD bays | 8 x U.2 or SATA/SAS and 2 x SATA/SAS 2.5″ hot-swappable HDD/SSD bays; 12 x 3.5″ SATA/SAS hot-swappable HDD/SSD bays |
| Expansion Slots | 5 x low profile PCIe Gen3 expansion slots; 1 x OCP Gen3 x16 mezzanine slot | 12 x low profile PCIe Gen3 expansion slots; 1 x OCP Gen3 x16 mezzanine slot |
| GPU Supported | 8 x SXM2 form factor GPU modules (NVIDIA V100 or P100) | 10 x PCIe GPU cards |
| PSU | 4 x 80 PLUS Platinum 2200W PSU | 3 x 80 PLUS Platinum 2200W PSU |

Overall, these are exciting options for the deep learning community.
