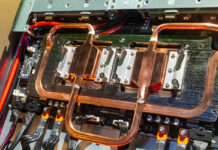

This is one of the most distinctive mini systems that we have seen in a long time. When we first heard of the 4-port 2.5GbE and 2-port 10GbE units, we were intrigued by the addition of SFP+ ports. That is something that, after countless mini-PC reviews, we have heard requests for from our readers and in the YouTube comments. When we reviewed the R86S, it had many of the features we wanted but lacked a bigger CPU and expandability. (Note we have a vastly upgraded R86S review in progress.) This unit has an Intel Core CPU and is based on a motherboard that we would not expect. In this review, do not miss the internal hardware overview because we were shocked at how these are built.

New 4x 2.5GbE and 2x 10GbE Intel Core Firewall and Virtualization Appliance Overview

For this, we have a video covering both the 10GbE model and the model that has eight 2.5GbE ports. We are going to be doing more of these multi-unit videos over the next quarter and then have written reviews for both. The 10GbE unit was the one the team picked to go live with first.

As always, we suggest watching in a tab, browser, or app for a better viewing experience.

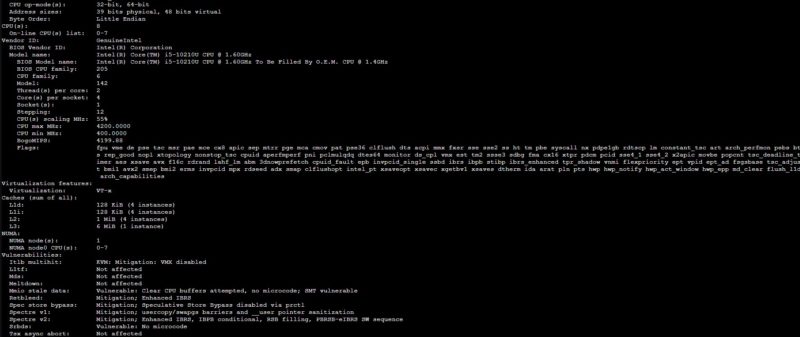

Since we had both units, we ordered the top two CPU options. One was an Intel Core i5-10210U that was in the 8x 2.5GbE model.

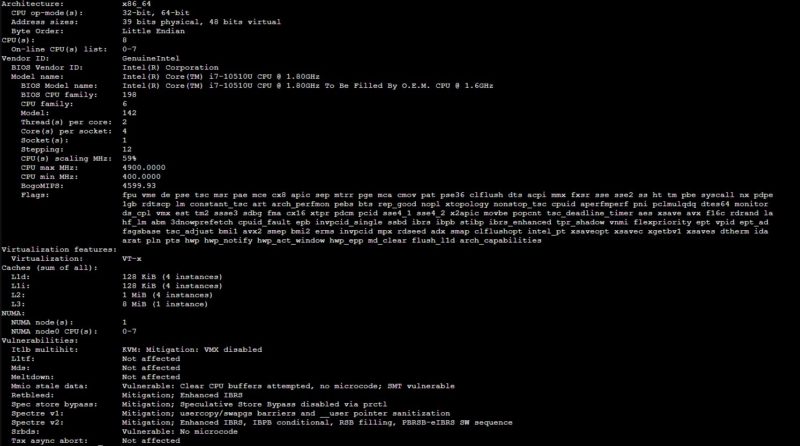

We also had this system with the Intel Core i7-10510U CPU. Since both CPUs are options available in both systems, and we successfully and easily did a motherboard swap, we are going to present results from both, along with an unexpected recommendation.

In our system, we got 16GB of DDR4 memory and a 256GB NVMe SSD. The pricing of the Topton unit was $512 at the time we ordered on AliExpress. Since then, prices have moved a bit: the same configuration can sometimes be found for less, and a barebones unit for around $400 with discounts. We also found the units for sale on Amazon under the “Moginsok” brand, albeit at a higher price.

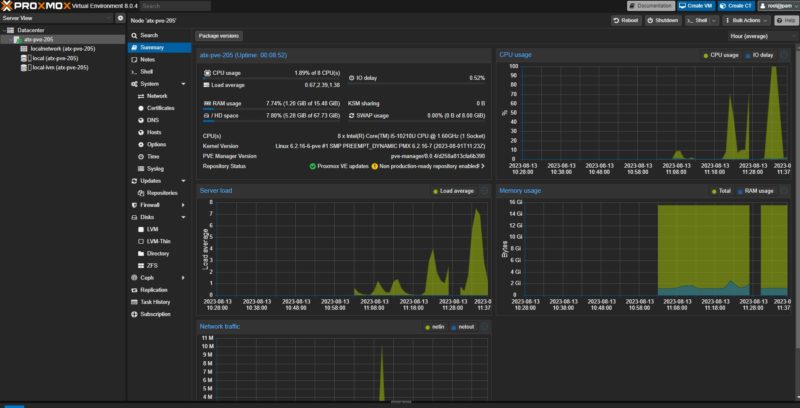

There is a decent premium for getting more capability than we see in some of the fanless 4-port and 6-port 2.5GbE units. We ended up running mostly Proxmox VE 8 as a base OS on these. They came pre-installed with pfSense Plus, likely to be compatible with the Intel i226-V before the latest pfSense CE 2.7 release. We also ran OPNsense on it, but Proxmox VE as a base hypervisor felt like a better use of the hardware.

Next, let us take a look at the outside of the system before getting to the crazy internal overview.

I’d honestly love to see the 10Gb units as Debian Kubernetes hosts.

Or even better, find a compatible 4-6 bay SSD chassis (making use of those onboard SATA ports). With how cheap second-hand ~250-500G enterprise SATA drives are, this is potentially looking like the most cost-effective way to make a strongly performant CephFS cluster.

I’m thinking the x520 would get the latency values low enough that one could realistically deploy high performance distributed storage in a home lab, without having to sacrifice their firstborn… I might have to give this a shot!!

As I read this article I thought I was reading another installment of “What’s absurd to you is totally normal for us” on the “Fanless tech” website.

So this device has SATA ports that you may or may not be able to use. That’s a waste of a dollar or more in costs. And those Molex ports – more waste.

And those “over the top” CPUs that make the case hot. Does the case get as hot as a Texas concrete road at the peak of summer heat when it doubles as a frying pan?

Ok, interesting product but…a perfect case of how to stuff 10 pounds of stuff in a 2 ounce baggie.

No thanks.

Do the SFP ports negotiate at lower multigig speeds? Like 5 and 2.5?

@Michael, not if they continue to be routed to an Intel 82599 / X520 series network controller.

Do they offer AMT management? Poor man’s KVM…

This is an excellent little box, add a bunch of SATA drives for bulk storage, and you got yourself an all-in-one node. Get 3 nodes, and now you have a Ceph cluster, and you can use DAC to have each node connect to two other nodes, not even going to need a switch. 2.5G for some inter-node communication and you’re essentially ready for Proxmox and Ceph or Rancher HCI.
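The switchless three-node idea above can be checked with a quick count. This is only a sketch: the node names and the `full_mesh` helper are made up for illustration, and the only assumption taken from the review is that each unit has two SFP+ ports.

```python
from itertools import combinations

SFP_PORTS_PER_NODE = 2  # each unit in the review has two SFP+ ports


def full_mesh(nodes):
    """Return the point-to-point links of a full mesh and ports used per node."""
    links = list(combinations(nodes, 2))
    ports_used = {n: sum(n in link for link in links) for n in nodes}
    return links, ports_used


# Hypothetical node names, for illustration only.
links3, ports3 = full_mesh(["node1", "node2", "node3"])
links4, ports4 = full_mesh(["node1", "node2", "node3", "node4"])

print("3 nodes:", len(links3), "DAC cables,", max(ports3.values()), "ports per node")
print("4 nodes:", len(links4), "DAC cables,", max(ports4.values()), "ports per node")
print("3-node mesh fits:", max(ports3.values()) <= SFP_PORTS_PER_NODE)
print("4-node mesh fits:", max(ports4.values()) <= SFP_PORTS_PER_NODE)
```

With three nodes, a full mesh needs three DAC cables and exactly two ports per node, so it fits. A fourth node would need three ports per node, which is where a switch (or a ring instead of a full mesh) comes in.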

@Patrick Did you try ESXi 8/8U1 during your testing? The VMware HCL lists a handful of X520-based NICs as compatible with 8, using the ixgben driver. Wondering if it could be coaxed into working if it’s not out of the box.

The review states, “We then tried the Core i7 system after it was done with weeks of stress-ng, and it failed Geekbench 6 as well. We did multiple tests, and it happened several times at different parts of the Geekbench 6 (6.0 and 6.10) multi-core tests.”

Since the system is not stable enough to complete one of the tests, how is this going to work as a server? Would extra cooling make it stable? Would down clocking the RAM fix the crashes? What about CPU speeds?

Without knowing why the system is unstable and how to fix it, I don’t see how the conclusions could be anything other than doesn’t work stay away.

Earlier today, the YouTube thumbnail was nauseatingly positive about these little units, faulty as they were. Then the article says they do not like the heat, the noise, the TDP, the stability, the chosen components…

Hey guys, running around today. Eric – we literally had stress-ng and iperf3 running on this unit for 30 days straight. The only thing that crashed it was Geekbench 6, but not 5. All we can do is say what we found. Steve – we tried 8. I usually try staying safe on VMware since the HCL is so much pickier than other OSes.

MOSFET – I can tell you that is completely untrue. This was scheduled to go-live at 8AM Pacific. I was heading to go film something in Oregon so everything was scheduled. The thumbnail only says 10GbE 2.5GbE and that is all it has said. The title has not changed either. I am not sure what is “nauseatingly positive” when it is just factually the speed of the ports.

Man, I literally just bought (and had delivered) a Moginsok N100 solution with 4 x 2.5Gbit ports. Not sure how I missed this, as it would have completely changed my calculus.

Since Geekbench crashes at different places each run, this points to a hardware malfunction. My suspicion is continuous stress-ng creates too predictable a load to crash the system.

If I were to guess, the on-board DC-to-DC power regulators aren’t able to handle sudden changes of load when a fast CPU switches back and forth from idle to turbo boost (or whatever Intel calls it). Such power transients likely happen again and again during Geekbench as it runs each different test.

Repeated idle followed by all cores busy may not happen frequently in real-world use, but crashing at all is enough to ruin most server deployments I’d have in mind.

I wonder if the system would be stable after decreasing the maximum CPU clock speed.

@Chrisyopher – Your N100 is great.

@Eric – we did not see it on the Linux-Bench scripts either. Those are pounding workloads over 24 hours. We only show a very small portion of those scripts here but those would be more short duration bursts and long bursts. There is something amiss, I agree, but it does not seem to be a simple solution.

I wonder if Geekbench is doing something with the iGPU that stress-ng doesn’t touch.

This whole thing looks like an interesting platform in completely the wrong case and cooling solution.

It also reinforces that Intel (or someone) needs a low-cost, low-power chip with a reasonable number of PCIe lanes. Ideally ECC too, although that’s getting close to Xeon-D and its absurd pricing.

The dual-port X520 on a PCIe 2.0 x4 interface is actually not able to run at full speed (it can only manage ~14Gbps when both ports are pulling traffic together). The older R86S with Mellanox is OK because the card is running at v3.0. This is a downgrade.
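For anyone who wants to sanity-check that figure, here is a rough back-of-the-envelope sketch. The per-lane rates and line encodings are the standard PCIe 2.0 and 3.0 values. The function name is just for illustration, and the PCIe 3.0 x4 row is an assumed comparison point, since the lane count of the R86S card is not stated above.

```python
def pcie_gbps(gt_per_s: float, payload_bits: int, total_bits: int, lanes: int) -> float:
    """Per-direction bandwidth in Gbps after line encoding, before packet overhead."""
    return gt_per_s * payload_bits / total_bits * lanes


# PCIe 2.0: 5 GT/s per lane with 8b/10b encoding.
gen2_x4 = pcie_gbps(5.0, 8, 10, 4)
# PCIe 3.0: 8 GT/s per lane with 128b/130b encoding (x4 assumed for comparison).
gen3_x4 = pcie_gbps(8.0, 128, 130, 4)
# Two 10GbE ports running at line rate in one direction.
dual_10gbe = 2 * 10.0

print(f"PCIe 2.0 x4: {gen2_x4:.1f} Gbps")
print(f"PCIe 3.0 x4: {gen3_x4:.1f} Gbps")
print(f"Dual 10GbE:  {dual_10gbe:.1f} Gbps")
print("PCIe 2.0 x4 can carry both ports:", gen2_x4 >= dual_10gbe)
```

The PCIe 2.0 x4 link tops out at 16 Gbps per direction even before TLP and other protocol overhead is subtracted, so a real-world number around 14Gbps with both ports loaded is plausible. A single 10GbE port still fits within that budget.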

@Michael, normally it is the transceiver which takes care of that – most 10GBaseT transceivers can do 2.5 and 5 GbE besides 10GbE.

Oh if only it had SFP28!

(just kidding)

Very interesting device with two SFP+ ports. I wonder if they have a new Alder Lake based NAS board coming out, so getting rid of the old stock.

Topton has been having issues with these with the barebones customers. Customers are putting in off-the-shelf components and they struggle in the heat and hang. I am not sure Topton’s supplied RAM/SSD are any better than anyone’s personal depot of parts, but these do run hot and the SSD should probably have a heat sink, along with adding a Noctua fan.

You have to admit, Topton and others have their ears to the home server/firewall market by adding the 10GbE ports. But to keep the prices down they are going through the back of the supplier warehouses looking for the right combination to complete the checklist.

With as hot as they run, I would surmise they don’t have a very long useful life.

I’d be interested in seeing packets-per-second benchmarks on these devices running pfSense or OPNsense. Having an understanding of how well the platforms perform with a standardized routing or NAT workflow would be interesting.

Something I’m missing in this review are detailed routing tests, since this device is clearly intended to be used as a router.

Many people would buy this to put pfSense on it, so it would be nice if you included stats on that too.

pfSense LAN -> LAN (2.5 and 10Gbit), LAN -> WAN (both 2.5 and 10Gbit with firewall), VPN (IPsec, WireGuard, etc.) speeds.

More technical people will understand that, due to the x4 link on PCIe 2.0, the 10Gbit experience will be mediocre at best, but non-technical people must also get this kind of info :-)

What units support Coreboot? What is the purpose of having a firewall that can be accessed at the chip level and defeated by a powerful adversary?

I bought many small PCs for pfSense installations. All the rackmounts have failed after 4 years (PSU or SSD). The fanless ones were much more reliable but are not recommended for non-technical users, because you never know if the unit is ON or OFF due to the disk and power LEDs sometimes being swapped! By default, it also tries to boot a Windows installation that does not exist, with Secure Boot, instead of pfSense. If you have customers using it at a remote location, that is an issue. Also, too many pfBlocker rules can make the unit run too hot and crash, especially when stupid users put the unit in a closed cabinet!

My policy is to use second-hand Dell R210, R220, and R230 servers with the iDRAC Enterprise option and a new SSD. You just have to choose a CPU with AES hardware acceleration in order to improve OpenVPN and IPsec speeds. The iDRAC option allows you to repair everything when a software update goes wrong: just ask the user to plug their mobile phone, in USB connection-sharing mode, into a PC on the same LAN and you are done.

Yes, a rackmount server is much bulkier than a mini PC, but these Dells have half the depth of other models like the R610, and the 3 GHz or 3.5 GHz CPU performs much better on single-core tasks than the more expensive models.

I tried to order from AliExpress, and my order was canceled. The i7 is out of stock and they informed me that “it is expected that we will launch an 11th generation cost-effective CPU in mid-September, you can buy it at that time.”

Happy with the previously reviewed firewall, I would love to see more reviews. I want SFP28 next, absolutely.

Nadiva – Recording that video in about a week and a half.

I wonder how hard it would be to put this thing in a little NAS case with 6×2.5″ bays and some fans… it’s pretty much ideal, but I definitely like the NAS option.

If anyone has some information where to buy the motherboard alone please write here

Looking forward to the updated R86S review!

Just got the new one with an Intel U300E and DDR5! Going to try it out with Proxmox VE later.

https://imgur.io/VO0PEMa

@Noobuser

Do you have any updates about this model? I’m looking for a future router with 10GbE SFP ports for home.

Is it stable? Does it crash in tests like the previous ones? Does it get hot?

Hi,

Would it be possible to know how the console port works on this device? What kind of operations are supported through the console port? Thank you very much.

Thank you