MSI RTX 3070 Ventus 3x OC Deep Learning benchmarks

Before we begin, we want to note that we expect performance on these cards to improve over time as NVIDIA’s drivers and CUDA infrastructure mature.

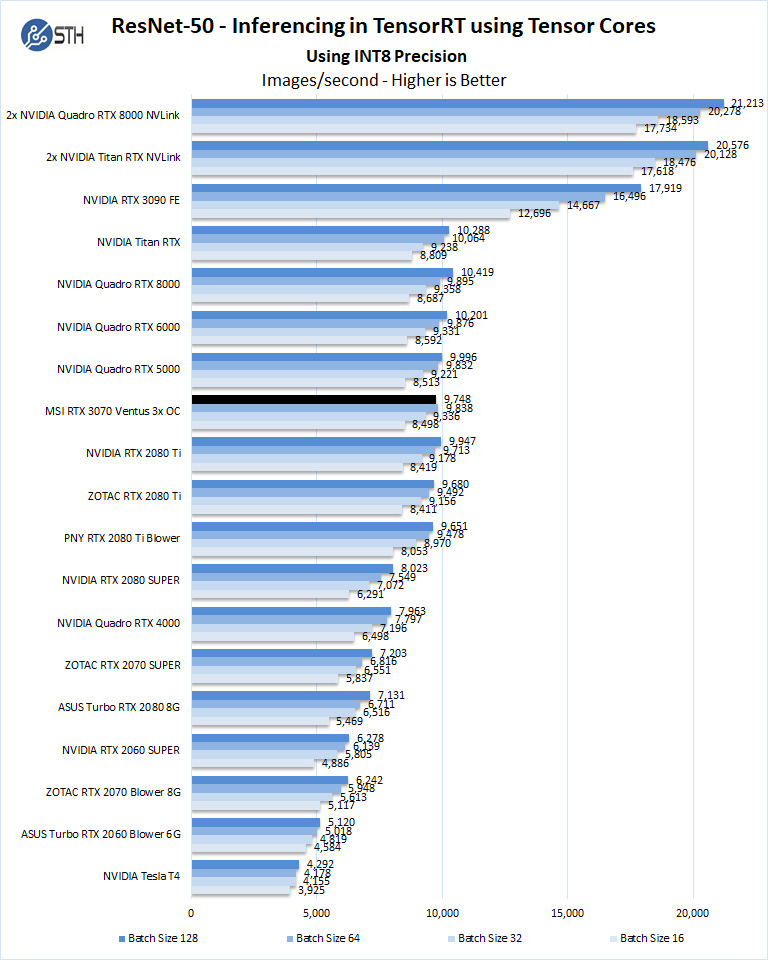

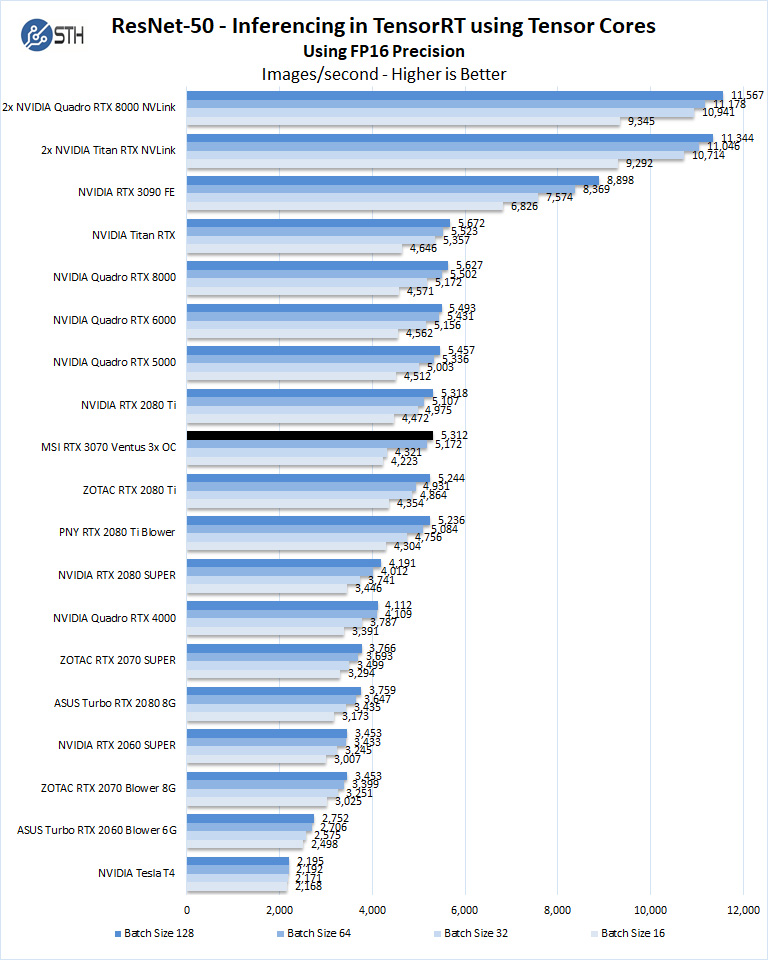

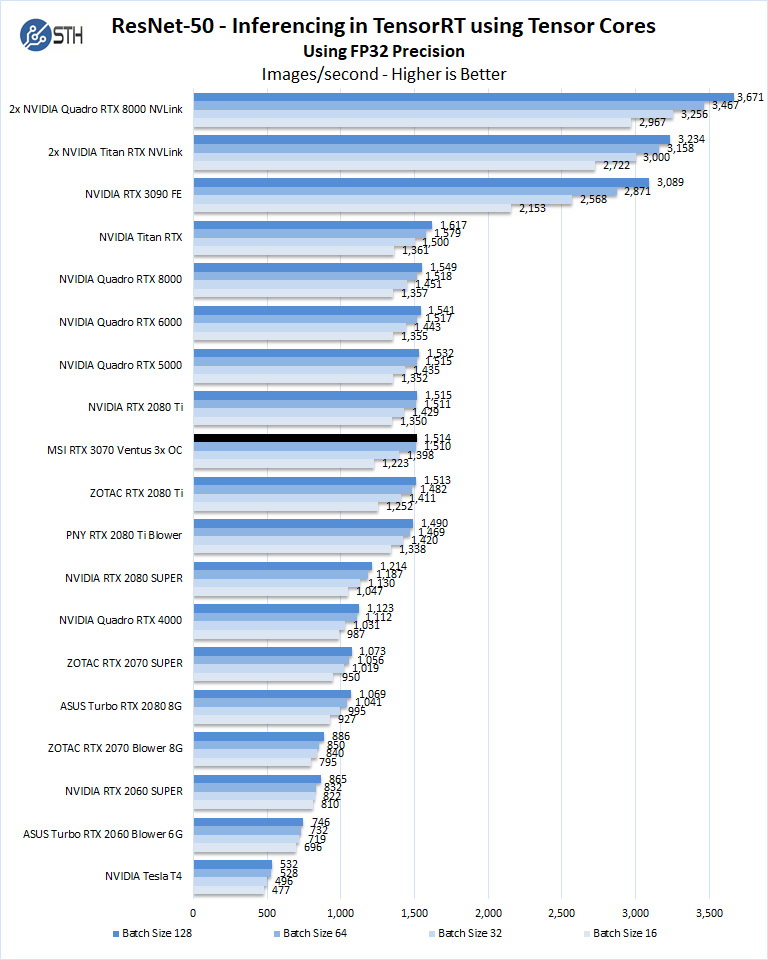

ResNet-50 Inferencing in TensorRT using Tensor Cores

ImageNet is an image classification database launched in 2007 designed for use in visual object recognition research. Organized by the WordNet hierarchy, hundreds of image examples represent each node (or category of specific nouns).

In our inferencing benchmarks, a ResNet-50 model trained in Caffe is run using the following command line:

nvidia-docker run --shm-size=1g --ipc=host --ulimit memlock=-1 --ulimit stack=67108864 --rm -v ~/Downloads/models/:/models -w /opt/tensorrt/bin nvcr.io/nvidia/tensorrt:20.11-py3 trtexec --deploy=/models/ResNet-50-deploy.prototxt --model=/models/ResNet-50-model.caffemodel --output=prob --batch=16 --iterations=500 --fp16

Options are:

--deploy: Path to the Caffe deploy (.prototxt) file used for training the model

--model: Path to the model (.caffemodel)

--output: Output blob name

--batch: Batch size to use for inferencing

--iterations: The number of iterations to run

--int8: Use INT8 precision

--fp16: Use FP16 precision (for Volta, Turing, or Ampere GPUs); if no precision flag is given, FP32 is used

We can change the batch size to 16, 32, 64, 128 and precision to INT8, FP16, and FP32.
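This sweep over batch size and precision can be scripted. The following is a minimal sketch, not the script used for these benchmarks: it only builds the trtexec argument lists from the flags described above and does not launch anything. The helper name `trtexec_args` is hypothetical; FP32 is selected by passing no precision flag.

```python
from itertools import product

BATCH_SIZES = [16, 32, 64, 128]
# Precision name -> extra trtexec flag; FP32 is the default when no flag is given.
PRECISION_FLAGS = {"int8": ["--int8"], "fp16": ["--fp16"], "fp32": []}


def trtexec_args(batch, precision, iterations=500):
    """Build the trtexec arguments for one run (hypothetical helper)."""
    return [
        "trtexec",
        "--deploy=/models/ResNet-50-deploy.prototxt",
        "--model=/models/ResNet-50-model.caffemodel",
        "--output=prob",
        f"--batch={batch}",
        f"--iterations={iterations}",
        *PRECISION_FLAGS[precision],
    ]


if __name__ == "__main__":
    # One line per (precision, batch size) combination, 12 in total.
    for precision, batch in product(PRECISION_FLAGS, BATCH_SIZES):
        print(precision, batch, " ".join(trtexec_args(batch, precision)))
```

Each printed line can be appended to the nvidia-docker invocation shown earlier in place of the original trtexec arguments.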

The results are Inference Latency (in sec).

If we take the batch size / Latency, that will equal the Throughput (images/sec) which we plot on our charts.
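As a small worked sketch of that conversion (the function name `throughput` is ours, and the numbers in the example are illustrative placeholders, not measured results):

```python
def throughput(batch_size, latency_sec):
    """Images per second when one batch of batch_size takes latency_sec seconds."""
    if latency_sec <= 0:
        raise ValueError("latency must be positive")
    return batch_size / latency_sec


if __name__ == "__main__":
    # Placeholder values only: a batch of 16 images taking 0.5 s.
    print(throughput(16, 0.5))
```

Because throughput divides batch size by latency, a larger batch improves throughput only if latency grows more slowly than the batch size does.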

We also found that this benchmark does not use two GPUs; it only runs on a single GPU.

You can, however, run a separate instance on each GPU using commands like:

```
NV_GPU=0 nvidia-docker run … &
NV_GPU=1 nvidia-docker run … &
```

With these commands, a user can scale workloads across many GPUs.

Alternatively, one can use the --device=0,1,2,3,4,… option to select which GPU to run on; more on this later.

We start with INT8 mode.

INT8 precision is by far the fastest inferencing method; if at all possible, converting code to INT8 will yield faster runs. Installed memory has one of the most significant impacts on these benchmarks. Inferencing on NVIDIA RTX graphics cards does not tax the GPUs a great deal. However, additional memory allows for larger batch sizes.

Let us look at FP16 and FP32 results.

We see these cards as a very affordable alternative to an NVIDIA T4 for inference, especially if they are installed in non-server edge nodes such as tower desktop form factors.

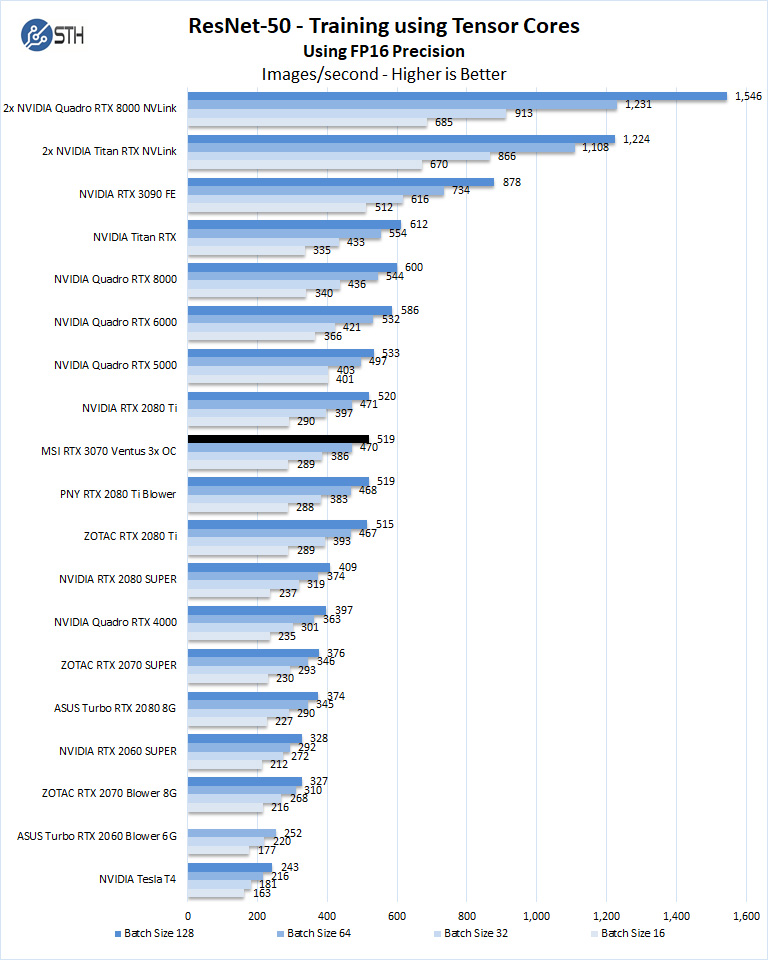

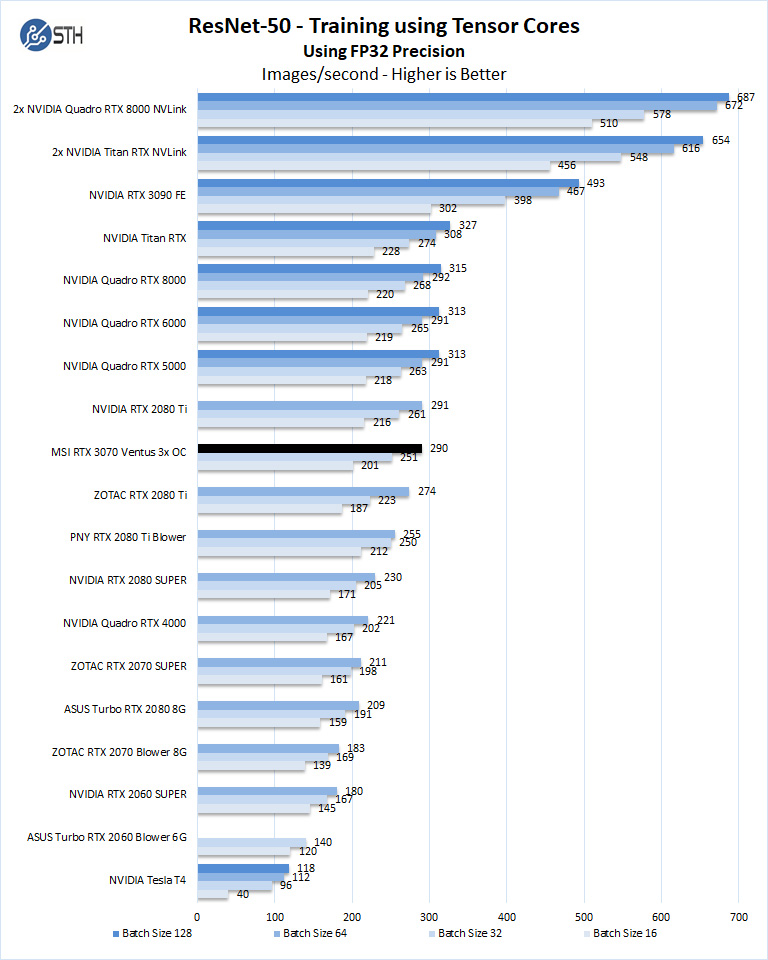

ResNet-50 Training, using Tensor Cores

We also wanted to train the venerable ResNet-50 using TensorFlow. During training, the neural network learns features of images (e.g., objects, animals, etc.) and determines which features are important. Periodically (every 1000 iterations), the neural network tests itself against the test set to determine training loss, which affects the accuracy of training the network. Accuracy can be increased through repetition (or running a higher number of epochs).

The command line we will use is:

nvidia-docker run --shm-size=1g --ipc=host --ulimit memlock=-1 --ulimit stack=67108864 -v ~/Downloads/imagenet12tf:/imagenet --rm -w /workspace/nvidia-examples/cnn/ nvcr.io/nvidia/tensorflow:20.11-tf2-py3 python resnet.py --data_dir=/imagenet --batch_size=128 --iter_unit=batch --num_iter=500 --display_every=20 --precision=fp16

Parameters for resnet.py:

--layers: The number of neural network layers to use, e.g. 50.

--batch_size or -b: The number of ImageNet sample images to use for training the network per iteration. Increasing the batch size will typically increase training performance.

--iter_unit or -u: Specify whether to run batches or epochs.

--num_iter or -i: The number of batches or epochs to run, e.g. 500.

--display_every: How frequently training performance will be displayed, e.g. every 20 batches.

--precision: Specify FP32 or FP16 precision; FP16 also enables Tensor Core math on Volta, Turing, and Ampere GPUs.
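To put the batch and epoch units in perspective, here is a rough arithmetic sketch. It assumes the data set is the ILSVRC-2012 (ImageNet-1k) training set of 1,281,167 images, which the article does not state explicitly; the function names are ours.

```python
# Assumption: ILSVRC-2012 (ImageNet-1k) training set size.
IMAGENET_TRAIN_IMAGES = 1_281_167


def batches_per_epoch(batch_size, dataset_size=IMAGENET_TRAIN_IMAGES):
    """Number of batches needed to see every image once (integer ceiling)."""
    return -(-dataset_size // batch_size)


def epoch_fraction(num_iter, batch_size, dataset_size=IMAGENET_TRAIN_IMAGES):
    """Fraction of one epoch covered by num_iter batches."""
    return num_iter * batch_size / dataset_size


if __name__ == "__main__":
    print(batches_per_epoch(128))    # batches in one full epoch at batch size 128
    print(epoch_fraction(500, 128))  # share of an epoch covered by 500 batches
```

Under that assumption, 500 batches of 128 images cover 64,000 images, or roughly 5% of one epoch, so a run of this length measures training throughput rather than training a usable model.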

While this TensorFlow script cannot specify individual GPUs to use, they can be selected by setting export CUDA_VISIBLE_DEVICES= to a comma-separated list of GPU indices (e.g. 0,1,2,3) within the Docker container workspace.
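The same variable can also be set from Python. The snippet below is a minimal sketch with a hypothetical helper, `env_with_visible_gpus`; it relies only on the fact that CUDA reads CUDA_VISIBLE_DEVICES when it initializes, so the variable has to be set before TensorFlow (or any other CUDA library) touches the GPUs.

```python
import os


def env_with_visible_gpus(gpu_ids, base_env=None):
    """Return a copy of the environment with CUDA_VISIBLE_DEVICES set."""
    env = dict(os.environ if base_env is None else base_env)
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    return env


if __name__ == "__main__":
    # Set this before importing TensorFlow so only GPUs 0 and 1 are visible.
    os.environ.update(env_with_visible_gpus([0, 1]))
    print(os.environ["CUDA_VISIBLE_DEVICES"])
```

The returned dictionary can also be passed as the `env` argument of `subprocess.run` to start one training process per GPU.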

We will run batch sizes of 16, 32, 64, 128, and change from FP16 to FP32.

Some GPUs will not show some batch runs because of limited memory.

The RTX 3070 compares well to similar GPUs like the RTX 2080 Ti. With only 8GB of memory, however, we could not complete the batch size = 128 runs on the card.

That limited memory had another impact. We could not complete the OpenSeq2Seq (GNMT) testing as we did in the recent NVIDIA Quadro RTX 6000 GPU Review because it requires more GPU memory.

Next, we will look at the GeForce RTX 3070 power and temperature tests and then give our final words.

Hey, that’s the card I was able to find, and I got it for $525 at Microcenter. Last one in stock, woot! It completed the missing piece to my build. Wish the card had lights though; don’t care for RGB, but a nice lit-up RTX would be cool.

Thanks William, an excellent comparison that we’ve been waiting for.

Shouldn’t the article have a Tag for “compute”, and it would be helpful (for search) to have “compute” in the article’s title.

Also, the “Arion v2.5” benchmark would benefit from adding a few CPUs for comparison.

We really appreciate seeing how much to decide to spend, and which card we should consider for compute applications.

I have this card, and though it is a good card, MSI really locked down the power consumption. There’s an interesting read on reddit about flashing this exact card and the resulting performance gain. I personally flashed this card with a BIOS from the MSI 3070 SUPRIM X, which raises the TDP to 300 watts. I’ve been running it for a few months with no issues and even mine with it when not playing games.

Google: reddit Flashing BIOS to RTX 3070 Ventus 3x OC