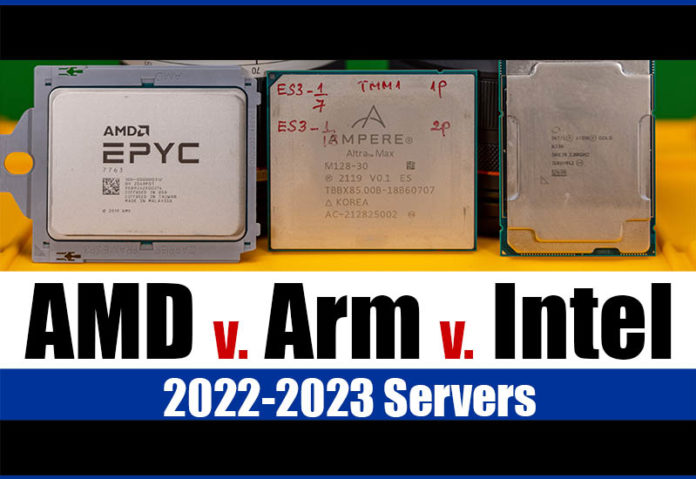

This article discusses the main server CPU ideologies that major vendors are pursuing in 2022-2023. Many of the chips we expected to be out by now have instead been delayed, so we are going to take a moment to give our readers a perspective on where the market is heading. Specifically, we will talk about the Arm, Intel, and AMD server CPU ideologies. We will also discuss why buying a server 18 months from now will look much different than it does today.

Video Version

We have a little video version of this one as well that you can get to here:

As always, opening the video in its own browser tab or app will make for a better viewing experience. This piece is more in the vein of our What is a DPU A Data Processing Unit Quick Primer, Compute Express Link or CXL What it is and Examples, and Server CPUs Transitioning to the GB Onboard Era videos, where we just chat about features coming to servers.

The Simple 2022-2023 Server CPU Framework

In 2022-2023, we have a simple framework that we are going to use:

- Arm – More Cores, More Better

- Intel – More Accelerators, More Better

- AMD – More Moderation, More Better

The grammar is deliberately incorrect so that the phrases stick and folks remember them over the next year and a half. We are also going to discuss how this has changed a lot recently.

Arm – More Cores More Better*

Arm CPUs are on a path of More Cores, More Better, with some big caveats.

Today’s Arm CPUs scale (publicly) to 128 cores. This is led by the Ampere Altra Max, which offers up to 128 cores, up from the 80-core Ampere Altra. Ampere’s CPUs have become the de facto standard for Arm CPUs that are not tied to a specific cloud service provider. Even Oracle Cloud, Microsoft Azure, and the lagging Google Cloud are using Ampere Altra (non-Max) CPUs. HPE and Inspur are two of the top three OEMs offering Ampere servers (Dell has become a laggard in CPU and GPU technology adoption, but we expect an announcement at some point). What Ampere managed, and the Marvell-Cavium ThunderX2 team did not, was making Arm server firmware more broadly usable. There is still a platform gap between Arm and x86 that is many times larger than the gap between Intel and AMD, but Ampere has done the work to close it significantly. Ampere is building high-core-count Arm CPUs for cloud service providers.

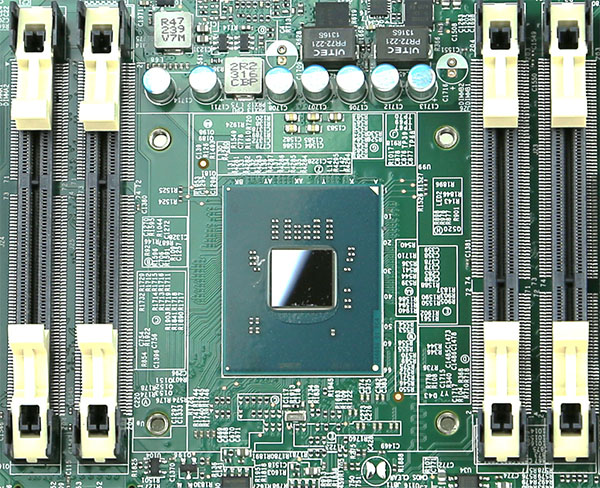

As an aside, some analysts like to point to the Huawei/HiSilicon Kunpeng 920 as a PCIe Gen4 Arm server CPU competitor. Having now used both, we can see why even the Chinese domestic market has shifted to preferring Ampere. The photo below will give away a project we have been working on.

Ampere’s next move in 2022-2023 will be AmpereOne (see Ampere Announces 5nm Arm Server CPU AmpereOne). We expect the 5nm process to drive adoption of higher core counts, along with new custom-designed cores.

While many like to compare Arm CPUs directly to x86 CPUs, there is an important caveat. Currently, a lot of the world’s enterprise software is x86-only, and much of it is licensed per core. For those markets, the goal is the highest possible per-core performance, since software license costs are so high relative to hardware costs. Enterprises with large numbers of x86-only workloads end up driving cost savings by having a larger x86-only footprint rather than mixing architectures. Sometimes that even means Intel-only, not a mix of Intel and AMD.
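To make the per-core licensing argument concrete, here is a minimal sketch that compares cost per unit of performance for two hypothetical servers. Every number in it (core counts, relative per-core performance, server price, license price) is an illustrative assumption, not vendor or licensing data.

```python
# Hypothetical illustration: all prices and performance figures are made-up
# assumptions chosen only to show how per-core licensing shifts the math.
from dataclasses import dataclass


@dataclass
class ServerOption:
    name: str
    cores: int
    perf_per_core: float  # relative throughput per core (arbitrary units)
    hardware_cost: float  # server price in USD (assumed)


def cost_per_unit_perf(opt: ServerOption, license_per_core: float) -> float:
    """Total cost (hardware plus per-core licenses) divided by total throughput."""
    total_cost = opt.hardware_cost + opt.cores * license_per_core
    total_perf = opt.cores * opt.perf_per_core
    return total_cost / total_perf


fewer_faster = ServerOption("fewer, faster cores", cores=32, perf_per_core=1.3, hardware_cost=20_000)
more_slower = ServerOption("more, slower cores", cores=64, perf_per_core=0.8, hardware_cost=20_000)

if __name__ == "__main__":
    for license_per_core in (0, 3_000):
        for opt in (fewer_faster, more_slower):
            cost = cost_per_unit_perf(opt, license_per_core)
            print(f"license ${license_per_core:,}/core, {opt.name}: ${cost:,.0f} per unit of performance")
```

With these assumed inputs, the 64-core option is cheaper per unit of performance when software is free, but once a per-core license is added the ranking flips toward fewer, faster cores.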

Many of the Arm server CPUs to date have taken a different approach. Instead of aiming for maximum per-core performance, they focus on delivering consistent vCPU performance. For a cloud service provider (CSP), that is usually a key value driver. Customers do not need AVX-512 to run Nginx web servers or Redis key-value stores. Shifting silicon priorities to integer workloads, and trading SMT for physical cores, lets Arm vendors provide more consistent performance across the chip. For a cloud provider trying to drive up utilization, that is the key metric. One reason we have not seen more 128-core deployments is memory and I/O bandwidth, and the challenge of striking the right balance for customers.
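The memory bandwidth point can be made concrete with a rough calculation. This sketch assumes 8 channels of DDR4-3200 per socket for both the 80-core Altra and the 128-core Altra Max (the commonly cited configuration; check Ampere's spec sheets before relying on it) and computes theoretical peak bandwidth as channels x MT/s x 8 bytes per transfer. Sustained bandwidth in practice is lower.

```python
# Rough sketch: theoretical peak DRAM bandwidth shared across more cores.
# Assumes 8 channels of DDR4-3200 per socket and a 64-bit (8-byte) channel.


def peak_bandwidth_gbs(channels: int, mega_transfers: int, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s: channels x MT/s x bytes per transfer."""
    return channels * mega_transfers * bus_bytes / 1000


def per_core_bandwidth_gbs(cores: int, channels: int, mega_transfers: int) -> float:
    """Share of theoretical peak bandwidth available to each core."""
    return peak_bandwidth_gbs(channels, mega_transfers) / cores


if __name__ == "__main__":
    for name, cores in (("80-core part", 80), ("128-core part", 128)):
        bw = per_core_bandwidth_gbs(cores, channels=8, mega_transfers=3200)
        print(f"{name}: {bw:.2f} GB/s per core")
```

The socket's peak stays at about 204.8 GB/s either way, so moving from 80 to 128 cores cuts each core's share from roughly 2.56 GB/s to 1.6 GB/s.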

AWS Graviton3 goes a step further. The 64-core part does not even chase maximum per-node performance, since AWS limits each node to a single CPU. AWS can then focus on hitting the right I/O and memory mix, along with the right power-per-rack metrics for its installations.

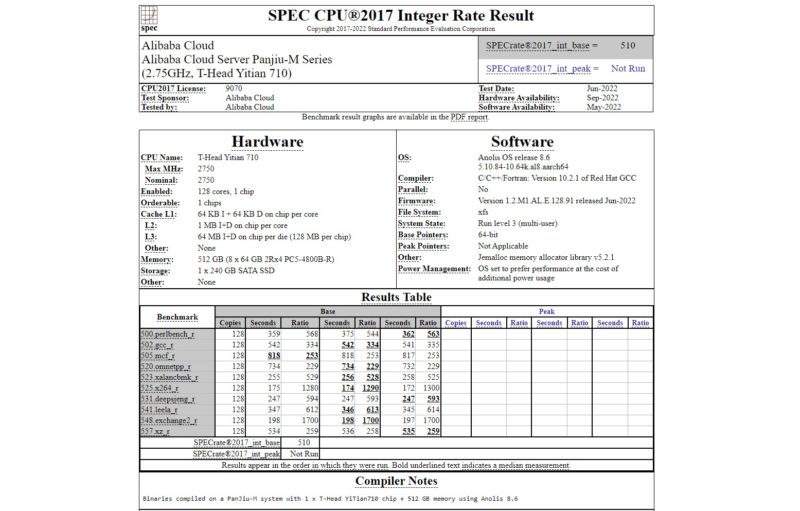

Recently we covered the Alibaba Cloud Panjiu-M T-Head Yitian 710, a new 128-core PCIe Gen5 processor that is expected to debut in late Q3 2022. It focuses even further on integer performance and is currently the top single-socket SPECrate2017_int_base result on SPEC.org’s website. Alibaba declined to submit floating-point results. Then again, many large workloads, even ones as simple as web servers, are integer-focused.

In 2023, we expect to see NVIDIA Grace and Grace Hopper. For now, these are focused on the HPC market. NVIDIA desperately needs Arm CPUs. It already offloads a lot of floating point and AI work to its GPUs, and network processing, crypto, and compression to its BlueField DPUs. Its main issue right now is that Intel and AMD have delayed their PCIe Gen5 parts.

Without CPUs and platforms, NVIDIA is sitting on technologies like its next-gen H100 GPUs, next-gen BlueField-3 DPUs, ConnectX-7 InfiniBand, and more. NVIDIA may say that it plans to keep partnering with Intel and AMD, but it is also in the position of having its products ready while its chief competitors hold up the platforms it needs to launch them. One can only imagine how, with each passing day, knowing that its competitors are holding up its business will erode those partnerships. Stay tuned: in a few weeks we have Ampere and A100 server content coming, because this is an industry trend.

While much of the Arm market is focused on “More Cores, More Better”, there will also be markets, such as supercomputers and simply providing next-generation connectivity (e.g. NVIDIA), that Arm servers will fill.

On the opposite end of the spectrum from “More Cores, More Better” is Intel with “More Accelerators, More Better.”

Intel – More Accelerators More Better

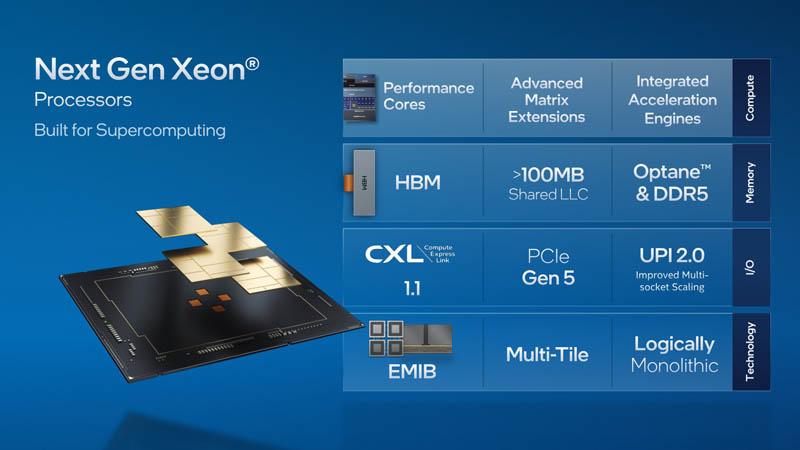

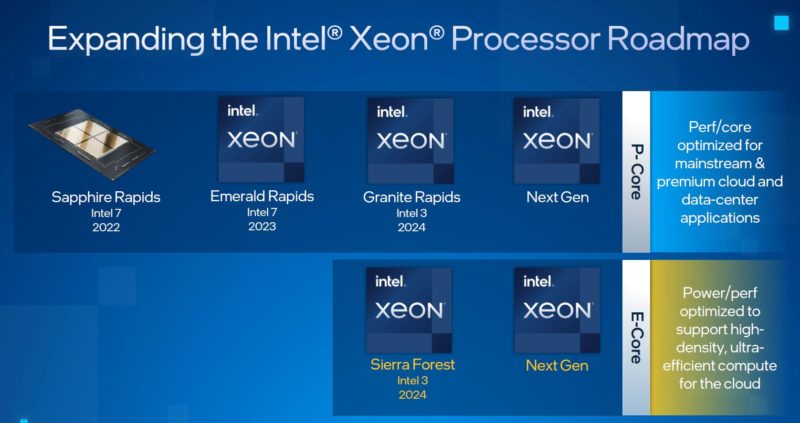

When one looks at the next-generation Intel Xeon codenamed “Sapphire Rapids” (4th Generation Intel Xeon Scalable processors), there is a key theme. Here is the slide from SC21 on the next-generation Intel Xeons:

Here is the updated slide from the Intel Investor Meeting 2022:

What one will quickly notice is that the share of the slide dedicated to acceleration is increasing. QuickAssist and Crypto Acceleration get mentions. In the next two weeks or so, we will have a piece using dedicated QuickAssist accelerator cards with Ice Lake. That will be followed by a look at the technology integrated into Ice Lake D. For longtime STH readers who know that we plan content quarters in advance, the progression from add-in card/chipset to integrated, along with the above slide, hints at where we believe this technology is headed.

We do not think Intel will have leadership core counts in 2022-2023. We do, however, think that Intel will end up with the highest per-core performance for many workloads. There is a difference between common benchmarks (e.g. one workload or one type of function) and real-world workloads that use many different functions. That is why, instead of just showing OpenSSL performance using Ice Lake’s crypto engines, we used a stack including a database, nginx web server, php-fpm, and OpenSSL in pieces like AWS EC2 m6 Instances Why Acceleration Matters.
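One way to reason about why a large gain on a single function shrinks in a full stack is Amdahl's law. This is our illustration, not the methodology used in that piece, and the inputs are hypothetical: crypto is assumed to be 20% of request time and to get 4x faster (a "300% gain") with acceleration.

```python
# Amdahl's law sketch: end-to-end speedup when only part of the work is accelerated.
# The 20% fraction and 4x component speedup below are hypothetical inputs.


def overall_speedup(accelerated_fraction: float, component_speedup: float) -> float:
    """Return the whole-task speedup when `accelerated_fraction` of the time runs `component_speedup`x faster."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if component_speedup <= 0:
        raise ValueError("component_speedup must be positive")
    remaining = (1.0 - accelerated_fraction) + accelerated_fraction / component_speedup
    return 1.0 / remaining


if __name__ == "__main__":
    print(f"{overall_speedup(0.20, 4.0):.2f}x end-to-end")
```

Under these assumptions the whole request gets about 1.18x faster. That is far less than 4x, but it is still a meaningful gain, and it is the kind of result a full-stack test captures while a single-function benchmark does not.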

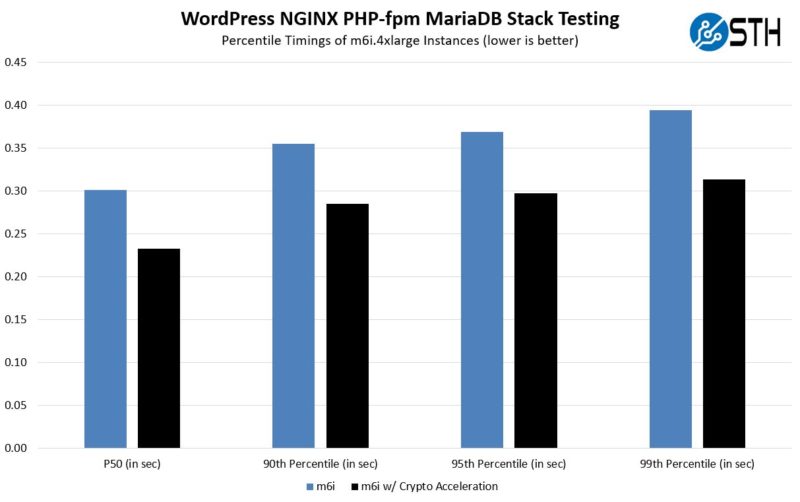

Taken in context, the impact is nowhere near as dramatic as a 300% gain, but the performance differences are still truly massive. Here is an example from that piece where crypto acceleration was turned on only for the small part of the chain that handles HTTPS termination:

Likewise, Intel has Sapphire Rapids HBM, which will bring massive amounts of high-bandwidth on-package memory. For applications that are memory bandwidth bound and cannot fit into Milan-X/Genoa-X style larger L3 caches, this is going to be a massive performance boost on a per-core basis.
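A common way to reason about "memory bandwidth bound" is the roofline model: attainable performance is the lower of peak compute and memory bandwidth times arithmetic intensity (FLOPs per byte moved). The sketch below uses purely hypothetical numbers for peak compute and for DDR-only versus HBM bandwidth. They are not Sapphire Rapids specifications.

```python
# Roofline model sketch. All peak and bandwidth numbers are hypothetical placeholders.


def attainable_gflops(peak_gflops: float, bandwidth_gbs: float, arithmetic_intensity: float) -> float:
    """Performance is capped by either compute (peak) or memory traffic (bandwidth x FLOPs/byte)."""
    return min(peak_gflops, bandwidth_gbs * arithmetic_intensity)


def ridge_point(peak_gflops: float, bandwidth_gbs: float) -> float:
    """Arithmetic intensity (FLOPs/byte) above which a kernel becomes compute bound."""
    return peak_gflops / bandwidth_gbs


if __name__ == "__main__":
    peak = 3000.0  # hypothetical peak GFLOP/s for one socket
    for label, bw in (("DDR only (hypothetical)", 300.0), ("with HBM (hypothetical)", 1000.0)):
        low_ai = attainable_gflops(peak, bw, 0.25)  # a streaming, bandwidth-bound kernel
        high_ai = attainable_gflops(peak, bw, 100.0)  # a cache-friendly, compute-bound kernel
        print(f"{label}: low-AI {low_ai:.0f} GFLOP/s, high-AI {high_ai:.0f} GFLOP/s, "
              f"ridge at {ridge_point(peak, bw):.1f} FLOPs/byte")
```

In this model, extra bandwidth lifts only the low-intensity kernel; the compute-bound kernel is unchanged, which is why HBM matters most for bandwidth-bound applications.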

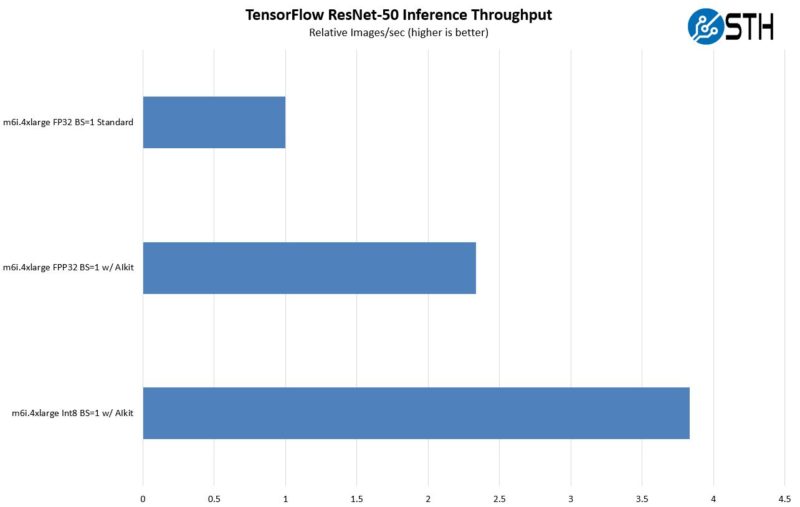

We have not talked about it much to date, but Intel is also pushing into areas such as AI acceleration. As we saw with Ice Lake Xeons, if your workload is not pure AI (just as serving WordPress is not pure crypto), then on-chip AI acceleration may be enough that you do not need a dedicated PCIe offload accelerator. Here is an inference example from Ice Lake:

What we will likely see in 2022-2023 is that Intel’s heavy use of accelerators, plus its existing technologies, gives it excellent per-core performance on heterogeneous sets of workloads. Remember, per-core performance is critical when per-core software license costs are high. Much of this will come down to offloading portions of work, like AI inference and crypto/compression, to onboard accelerators instead of using CPU cores.

Intel may end up with fewer cores, but it believes in More Accelerators, More Better. AMD, on the other hand, is going somewhere in the middle.

AMD – More Moderation More Better

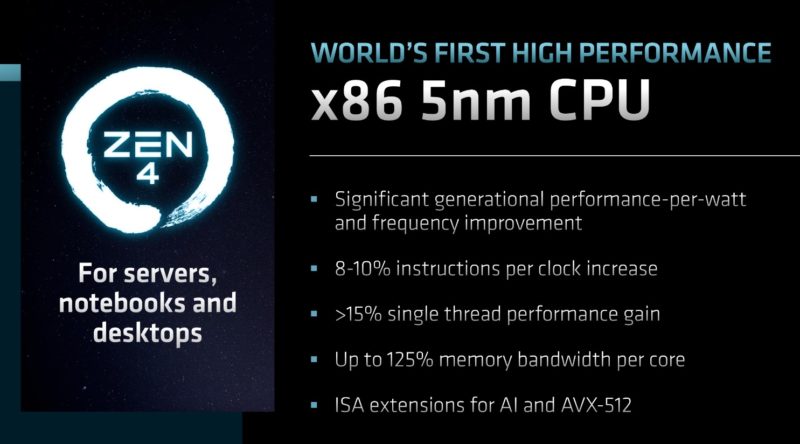

If you saw our AMD 2022-2024 Roadmaps the Video and our various AMD FAD 2022 articles, you probably learned about AMD’s next-gen parts for 2022-2023:

- Genoa / Genoa-X

- Bergamo

- Siena

These chips are going to increase core counts while also increasing acceleration capabilities.

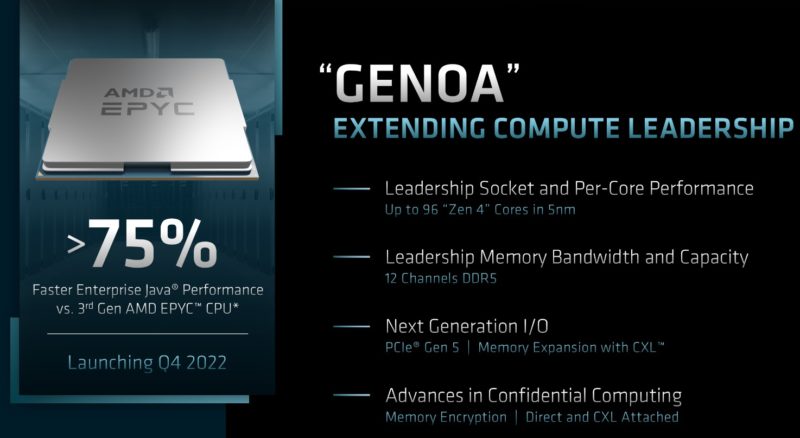

Genoa is AMD’s next-gen EPYC CPU, with up to 96 cores per socket. PCIe Gen5 and DDR5 will be supported, but we also think there will be some moderation, since 96-core chips with 12-channel DDR5 will be extremely challenging to fit into common 2U 4-node dense compute form factors.
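A quick tally shows why 12 memory channels strain dense chassis. This sketch assumes a 2U 4-node chassis with two sockets per node and one DIMM per channel, and compares a Milan-class socket (assumed 64 cores, 8 channels of DDR4-3200) with a Genoa-class socket (assumed 96 cores, 12 channels of DDR5-4800). These configurations are our assumptions for illustration.

```python
# Sketch: DIMM slots per 2U 4-node chassis and per-core peak bandwidth.
# Core counts, channel counts, DIMM speeds, and chassis layout are assumptions.
from dataclasses import dataclass


@dataclass
class Platform:
    name: str
    cores_per_socket: int
    channels_per_socket: int
    mega_transfers: int  # DIMM speed in MT/s

    def socket_bandwidth_gbs(self) -> float:
        """Theoretical peak: channels x MT/s x 8 bytes per transfer."""
        return self.channels_per_socket * self.mega_transfers * 8 / 1000

    def bandwidth_per_core_gbs(self) -> float:
        return self.socket_bandwidth_gbs() / self.cores_per_socket


def dimms_per_chassis(p: Platform, nodes: int = 4, sockets_per_node: int = 2, dimms_per_channel: int = 1) -> int:
    """Number of DIMM slots needed to populate every channel in the chassis."""
    return nodes * sockets_per_node * p.channels_per_socket * dimms_per_channel


milan = Platform("Milan-class (assumed 64c, 8ch DDR4-3200)", 64, 8, 3200)
genoa = Platform("Genoa-class (assumed 96c, 12ch DDR5-4800)", 96, 12, 4800)

if __name__ == "__main__":
    for p in (milan, genoa):
        print(f"{p.name}: {dimms_per_chassis(p)} DIMMs per 2U4N, "
              f"{p.bandwidth_per_core_gbs():.1f} GB/s peak per core")
```

Under these assumptions, per-core bandwidth improves (about 3.2 to 4.8 GB/s), but the chassis needs 96 DIMM slots instead of 64, which is hard to fit alongside CPUs and cooling in 2U.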

Still, with Zen 4, AMD is doing more than just going “More Cores, More Better.” AMD is also adding AI acceleration, AVX-512, and so forth. We do not expect AMD to match Intel in accelerators. Instead, AMD is letting development happen on Intel first, then following with support once there is a base of software. AVX-512 is a great example: one can get huge gains if the software supports it, but that support must be added first.
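"Support must be added first" usually means software checks CPU features at runtime and picks a code path. Here is a minimal sketch for Linux on x86, where `/proc/cpuinfo` lists feature flags such as `avx512f` and `avx2`. The kernel names it returns (`"avx512"`, `"avx2"`, `"scalar"`) are hypothetical placeholders, and Arm systems report features under a different key, so this sketch falls back to the scalar path there.

```python
# Minimal runtime-dispatch sketch for Linux x86. Code-path names are hypothetical.
from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the feature flags from the first 'flags' line of /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def choose_kernel(flags: set[str]) -> str:
    """Pick the widest available SIMD path, falling back to scalar code."""
    if "avx512f" in flags:
        return "avx512"
    if "avx2" in flags:
        return "avx2"
    return "scalar"


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    text = cpuinfo.read_text() if cpuinfo.exists() else ""
    print(choose_kernel(cpu_flags(text)))
```

The same binary then runs everywhere, and only CPUs that report the feature, from either Intel or AMD, take the faster path.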

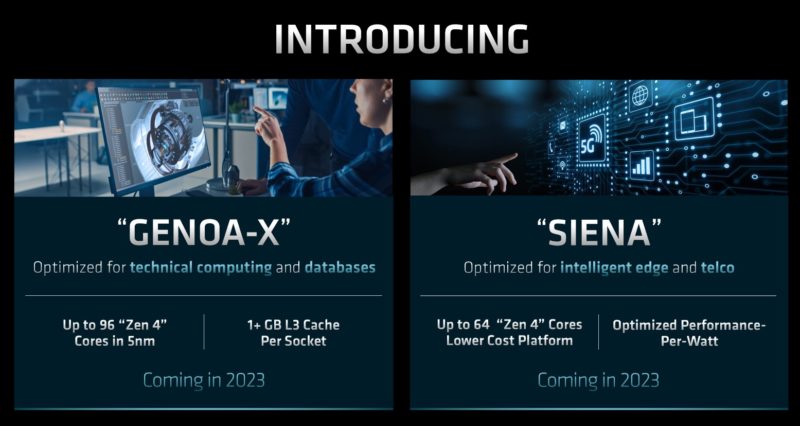

AMD also has Genoa-X, the next-gen successor to Milan-X with larger local caches, and Siena, a lower-power, smaller-footprint part.

In many ways, AMD is trying to straddle the Arm and Intel approaches with a “More Middle, More Better” strategy.

AMD and Intel Planning for More Cores, More Better

As Arm vendors conceive of ways to increase per-core performance and add acceleration to their CPUs, AMD and Intel also realize they need to play in More Cores, More Better.

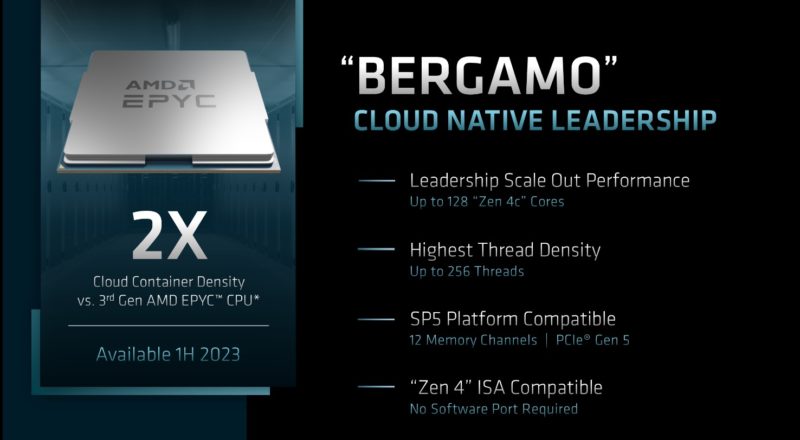

AMD has Bergamo coming in 2023. This will be a 128-core/256-thread part that increases core density while using the same socket as Genoa.

Intel, for its part, knows it needs a scale-out dense-core architecture. For that, it plans to have Sierra Forest. Because it is based on E-cores that are more akin to Arm’s current offerings, Sierra Forest should allow Intel to hit massive core counts that will dwarf 2022-era processors. Intel’s entry is slated for 2024.

This is the fun part of the market. The major players are all trying to address the market segments that the others are playing in.

Swapping Philosophies: Intel and Arm in a Decade

One small but fun point is that from 2012 to 2022, Intel and Arm have swapped philosophies.

In 2011-2013, Arm servers were just emerging as a new idea. Arm’s idea was that it may not have had the best cores, but low-power CPUs with accelerators would win large portions of the market. Arm vendors had two main issues. First, Intel in 2011-2013 was ahead of TSMC and others in process technology. That manufacturing advantage meant that the tens of percent better efficiency Arm designs could offer was offset by Intel’s better process. Second, Intel built an “Arm-killer” CPU with QuickAssist that effectively set Arm servers back a decade.

The Intel Atom C2000 series with Avoton and Rangeley was a direct counter to Arm. After it came out, we published a piece titled Intel can hold ARM (largely) out of the datacenter for 3 years. That was in 2014. By 2017 we had ThunderX2, and AWS had Nitro, but that prediction was largely spot on. It is 2022 and Arm is starting to make inroads, albeit in a different market segment. This was bound to happen; it has just taken much longer than we expected eight years ago, and the Atom C2000 series was really the root of that delay.

Still, perhaps the most interesting part is that in 2022, Intel’s plan is fewer cores with accelerators, while many Arm vendors are planning for many cores and going lighter on accelerators. Arm vendors will adopt some accelerators, as Intel did in that era, but it is fun to see that if you had picked “More Cores, More Better” and “More Accelerators, More Better” in 2012, they would have been assigned to the opposite parties from the ones we are assigning them to in 2022.

Final Words

2022-2023 will be full of large changes in the server market. By the time the 2024-2025 servers come around, the 2021 generation of Ice Lake and EPYC Milan servers will look as quaint as 2011-era Sandy Bridge Intel Xeon E5-2600 V1 servers look today. That kind of change is going to scare many people. We are heading into an era where, in three years, we are going to see a scale of change that previously took a decade in the server market.

Then again, that is perhaps the benefit of there being a more competitive market these days. As the industry has grown, we now have opportunities for solutions to address specific market segments in greater granularity. For those of us using servers, that competition means that we are going to get a lot of fun designs in the near future as participants expand their design philosophies to new segments.

Accelerators are well and good, but Intel needs to execute better on the software side, getting accelerator support integrated into standard libraries and shipped with standard Linux distributions. Otherwise this will remain a niche benefit for those who have the resources to custom-tailor their software to it (and maintain it), much like AVX-512.

The ARM Scalable Vector Extension (SVE), which first appeared in 2019 with the Fujitsu A64FX, then in Neoverse V1 and ARMv9, and is now extended as SVE2 to address other application domains, is in my opinion a better accelerator design than AVX-512. On the other hand, what Ampere has licensed and is doing with its own ARM-based CPUs appears notably different. Then there is Apple going in yet another direction. The point here is that the ARM ecosystem is really too diverse to characterise with a single CPU ideology.

