MLPerf is aiming to become the standard in AI benchmarking. There are two different sets of results that focus on two distinct areas, the MLPerf Training and Inferencing results. Today we have the MLPerf Inferencing v1.0 result. You can see our pieces on the v0.7 and v0.5 results discussions previously. One item that is becoming abundantly clear is that despite a vibrant ecosystem of AI chip companies, this is mostly a NVIDIA project at this point.

MLPerf Inference v1.0 Results

The MLPerf Inference results are broken into four main categories, there is the data center closed and open category set, and the edge closed and open sets. Each of these has subcategories depending on where the hardware is in its lifecycle from Available, Preview, and R&D/ Internal. Given the number of submissions, we are going to focus on the top-level segments.

MLPerf Inference v1.0 Datacenter Discussion

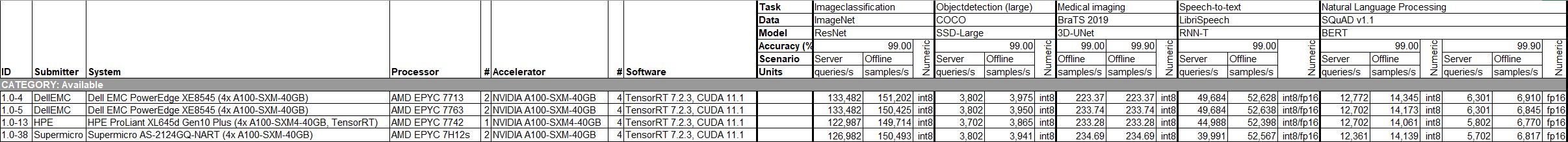

Perhaps the biggest challenge is this view (apologies given how much data is here you will want to open in another tab):

We have systems from Dell EMC, HPE, and Supermicro all with 4x NVIDIA A100 SXM4 cards. Supermicro and HPE use Rome generation CPUs, Dell is using Milan CPUs. Results are generally within a +/- 2% range. Except for a few outliers. By submitting MLPerf results, Dell and HPE have effectively shown that their hardware in the offline tests has negligible if any performance benefit over Supermicro’s, and sometimes falls behind. That is probably not the message they wanted to show to the market, but that is a good impact of MLPerf, it lets the industry see that data and evaluate accordingly. Of course, these companies offer different CPU configurations, so they can explain slightly different results, but basically, the value is being driven by NVIDIA in their submissions, not by the server OEM. Of course, there is service, support, and other vectors to compete on, but this format makes it harder for server vendors to really differentiate. Adding different accelerators is one good way to differentiate, but other accelerator vendors are not seriously competing in this space. Intel, for example, is not showing accelerator performance, even with partners, but simply Platinum 8380 and Platinum 8380H (4S/8S) performance and in only some of the tests.

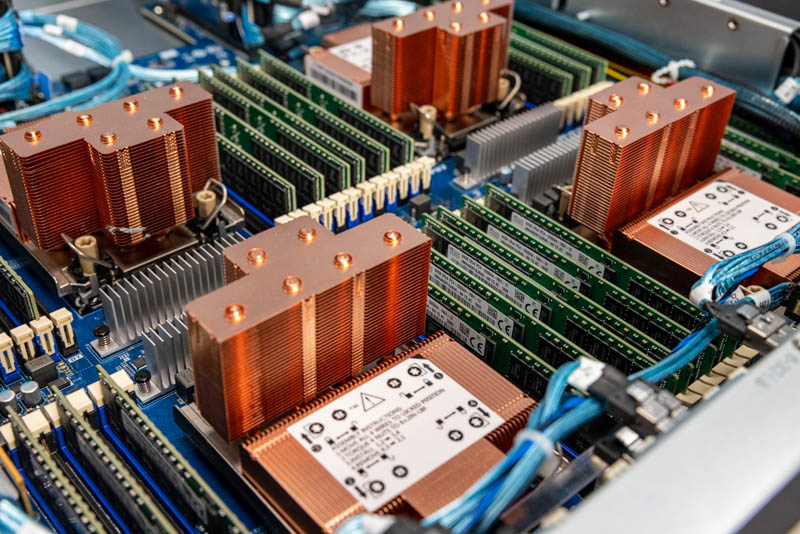

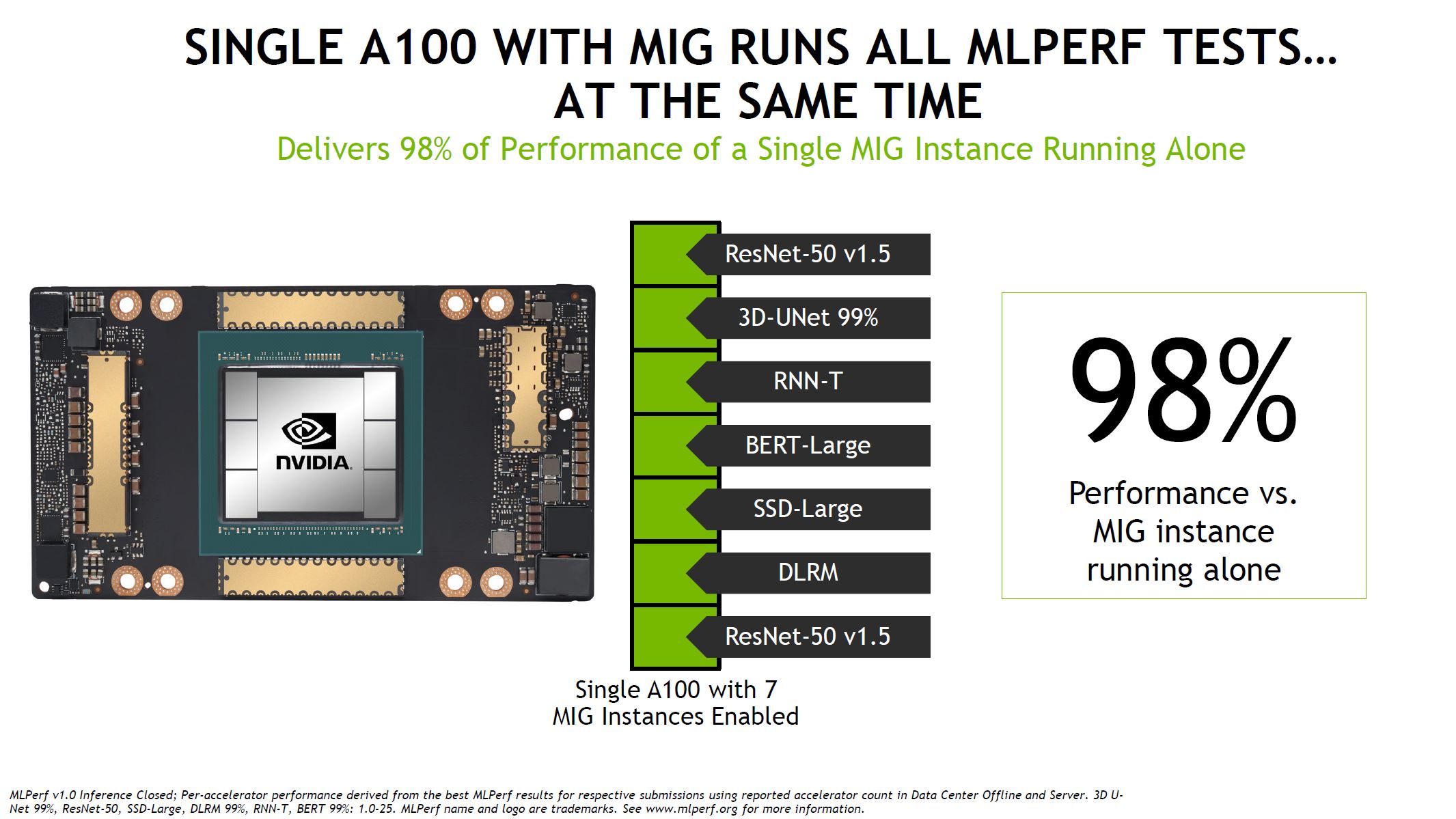

Perhaps the other useful part of this benchmark is that we can see the datacenter systems using A100 SXM, A100 PCIe, A40, A30, A10, and even T4 systems in different configurations. One other really interesting data point came out of this exercise. Specifically, NVIDIA split its A100 80GB GPU into 7x MIG instances or multi-instance-GPU.

Here it saw almost the same performance of running each of these workloads separately. Many of the AI inferencing workloads do not need a full NVIDIA A100, and have small memory footprints, so breaking these larger CPUs into smaller instances helps more efficiently distribute resources. We discussed this and showed a MIG instance being set up in our recent ASUS RS720A-E11-RS24U review with 4x NVIDIA A100 PCIe cards. Effectively, NVIDIA is allowing cards like the A100 and A30 to act like multiple inferencing accelerators in a system to improve performance.

MLPerf Inference v1.0 Edge Discussion

On the closed edge side, the results are still dominated by NVIDIA GPUs usually paired with AMD EPYC 7742 CPUs. Perhaps the most interesting results are the two Centuar AI Coprocessor submissions and the Qualcomm Cloud AI 100 results. Those along with the Edgecortix and Mobilint Xilinx Alveo results were not submitted across all of the tests, but there were some submissions adding to the diversity.

Otherwise, this is a lot of baseline Rockchip and Raspberry Pi 4 performance with a few interesting NVIDIA Jetson and other entries making up the majority of results.

New MLPerf Power Results

One aspect of the announcement that MLPerf did a great job on is adding power consumption figures. This is extremely important, especially on the inference side since so much of that is being done at the edge where there can be limited power and thermal budgets.

Here are a few key takeaways for the datacenter numbers:

- There were only six results in the closed datacenter category, zero in the open data center category. Of the closed datacenter results, AMD EPYC was used in systems at a 5:1 ratio here.

- The closed datacenter result that stuck out was the Qualcomm QAIC100 result. Qualcomm showed excellent power/ performance results. At the same time, it is also using much lower power processors in the Gigabyte R282-Z93 by using the AMD EPYC 7282 compared to other systems that have higher-end compute. Those CPUs are also the 4-channel optimized AMD EPYC 7002 “Rome” series parts.

- Aside from that one Qualcomm result, no other vendor submitted results with an accelerator vendor other than NVIDIA.

- From systems vendors, only NVIDIA and Dell EMC submitted results except for Qualcomm.

This is still an early result, and generally, these new types of tests require a cycle or two until more vendors participate.

On the edge side, the open category was all Raspberry Pi 4 results submitted by Krai, not to be confused with Xilinx’s Kria Edge AI SOMs launched yesterday. The closed division has nine results total but they have a pretty wide range and are not really of competitive parts. Dell EMC has a server, there are the Krai Raspberry Pi 4 results, and then NVIDIA has mostly Jetson AGX Xavier module results.

Hopefully, this is an area where the market picks up as it is something useful for the exercise.

Final Words

Overall, adding power numbers to the mix, even if optional, is great. It is also a positive step that we are seeing many vendors join in adding results for their systems as that will allow for some basis of using the results competitively. If results are available, RFPs can specify MLPerf levels much as SPECs numbers are used today.

Perhaps the biggest challenge looms. Intel has an AI silicon portfolio but it mostly focused on Cooper Lake parts. Xilinx discusses AI but has a handful of results. AMD is prominent on the CPU side only, but not on the accelerator slide here. Qualcomm’s datacenter submission only covered two of the models. Companies like Graphcore, Cerebras, and others are not submitting even a single result because they do not feel the need to compete in the NVIDIA-driven effort. Indeed, in our 2020 interview with Andrew Feldman CEO of Cerebras Systems we discussed its chip for MLPerf Training and the answer was it is more effective to spend resources getting customers on hardware than using MLPerf.

Google who was an early promoter of MLPerf is conspicuously absent from the results. We also do not have any of the China-market AI accelerators. The open edge side only had a single result outside of the ResNet Image Classification test. This is certainly looking like a mostly NVIDIA effort along with those that want to get some notoriety that comes from being on a list.

This brings us to the step forward and steps back of this release. Power is great, as are more OEMs competing. Still, aside from Krai’s stuffing result numbers with low-end boards, there is a trend. NVIDIA’s partner OEMs are starting to submit for MLPerf. Other accelerator vendors are largely staying away. This is getting close to being called simply “NVIDIA’s MLPerf Inference v1.0 that a few others submitted results to.” In many ways, this is going to be the more important of the two MLPerf benchmark areas (versus Training) so it is a shame that that is the impression one gets just by looking through the numbers on MLPerf/ MLCommons.

Intel isn’t in the market yet.

AMD does not support ROCm on RDNA & acquiring the two an mi50 or mi100 seems to require one to have relations with a systems integrator.

It should not surprise that nVidia dominates MLPerf. It has no actual competition.