A few months ago, Microsoft Azure published a blog post about MAIA. At Hot Chips 2024, the company went into a lot more detail about its custom AI accelerator. Let us get into it.

Please excuse typos. These are being written live.

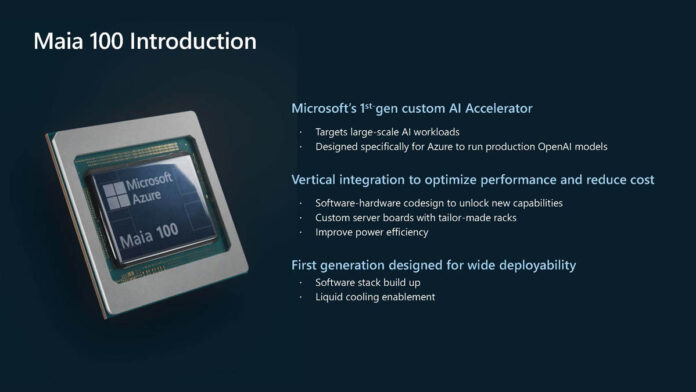

Microsoft MAIA 100 AI Accelerator for Azure

Microsoft built the MAIA 100 as a custom AI accelerator, specifically for running OpenAI models. It is telling that the middle point on the slide is clearly about reducing costs (versus using NVIDIA GPUs).

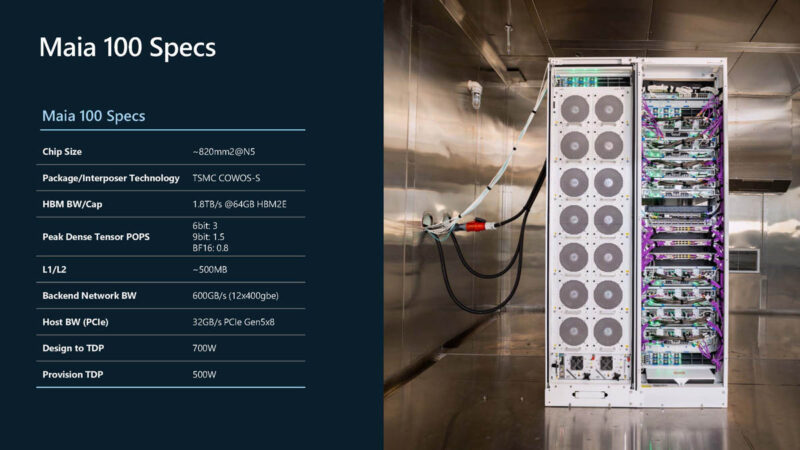

Here are the key specs. This is a TSMC 5nm part packaged with TSMC CoWoS-S. Each chip has 64GB of HBM2E. Using HBM2E means that Microsoft is not competing with NVIDIA and AMD for leading-edge HBM supply. Something surprising is the large 500MB L1/L2 cache. The chip also has 12x 400GbE of network bandwidth. This is a 700W TDP part, but for inference, Microsoft runs each accelerator at 500W in production.
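To put the networking and power figures in perspective, here is a quick back-of-the-envelope calculation. It only uses the numbers quoted above (12 ports, 400Gbps each, 700W TDP, 500W for inference) and treats them as raw line rate. Protocol overhead and whether the figure is per direction are not covered here, so read the result as an upper bound rather than a measured number.

```python
# Figures quoted in the talk; everything derived from them is simple arithmetic.
PORTS = 12
GBIT_PER_PORT = 400
TDP_WATTS = 700
INFERENCE_WATTS = 500


def aggregate_bandwidth(ports=PORTS, gbit_per_port=GBIT_PER_PORT):
    """Return raw aggregate line rate as (terabits per second, gigabytes per second)."""
    total_gbit = ports * gbit_per_port
    return total_gbit / 1000, total_gbit / 8


def inference_power_fraction(inference=INFERENCE_WATTS, tdp=TDP_WATTS):
    """Return the inference power level as a fraction of TDP."""
    return inference / tdp


tbps, gbytes = aggregate_bandwidth()
print(f"Aggregate Ethernet: {tbps:.1f} Tbps ({gbytes:.0f} GB/s raw line rate)")
print(f"Inference power: {inference_power_fraction():.0%} of TDP")
```

That works out to 4.8Tbps of raw Ethernet bandwidth per accelerator, with inference running at roughly 71% of the TDP.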

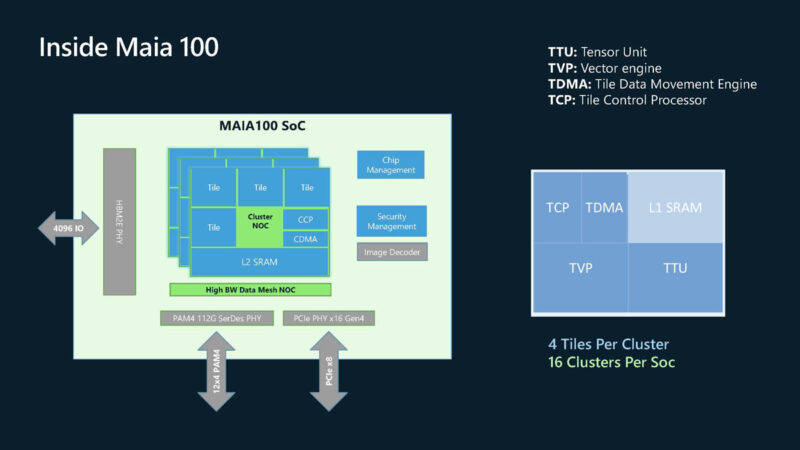

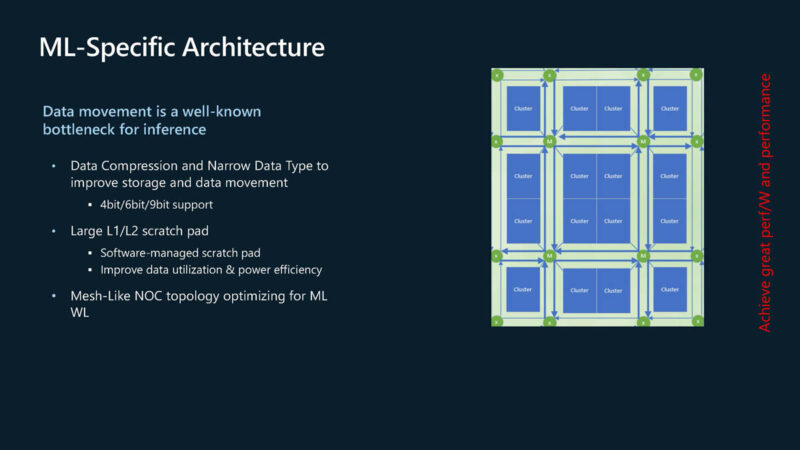

Here is a diagram of the tile. Each cluster has four tiles, and there are 16 clusters in each SoC. The SoC also has image decoders and confidential compute capabilities.

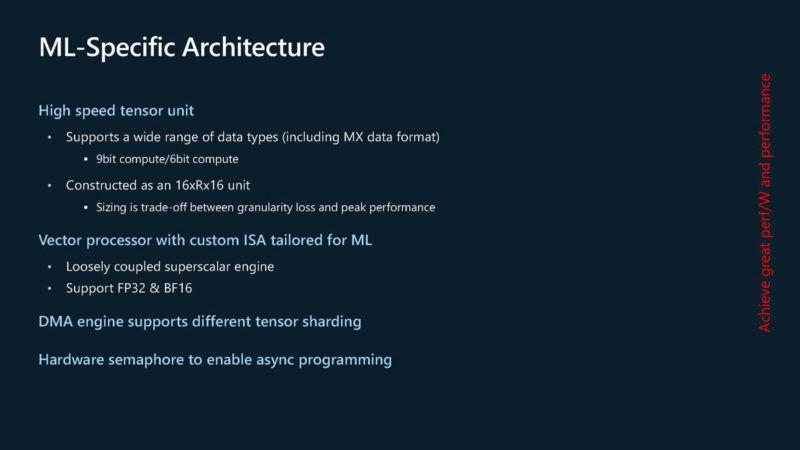

This accelerator supports a wide range of data types, including 9-bit and 6-bit compute.

Here are the sixteen clusters laid out with the NOC topology.

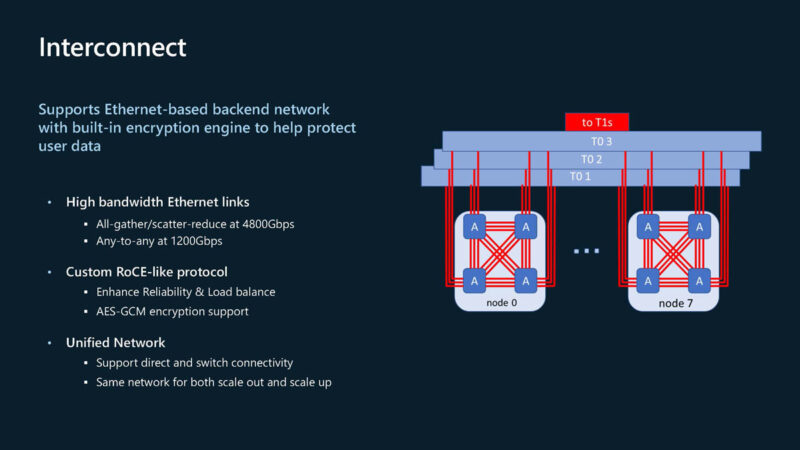

Microsoft wants to use an Ethernet-based interconnect. It uses a custom RoCE-like protocol over Ethernet instead of something like InfiniBand. Microsoft is also a promoter of the Ultra Ethernet Consortium (UEC), so it makes sense that this is Ethernet-based.

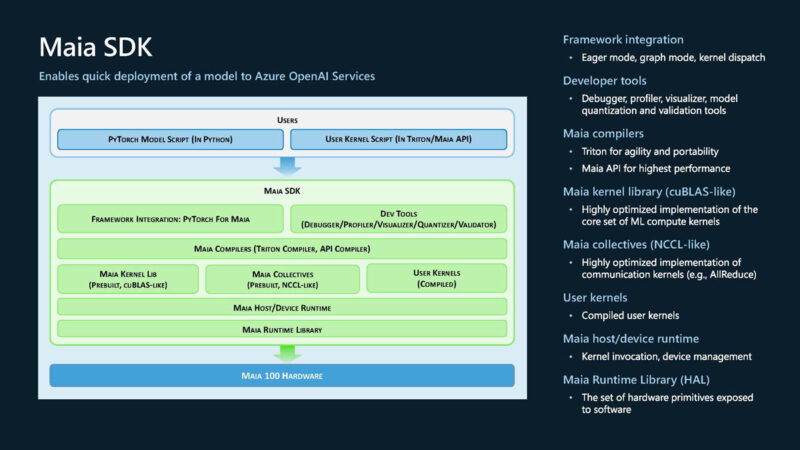

On the software side, here is the Maia SDK.

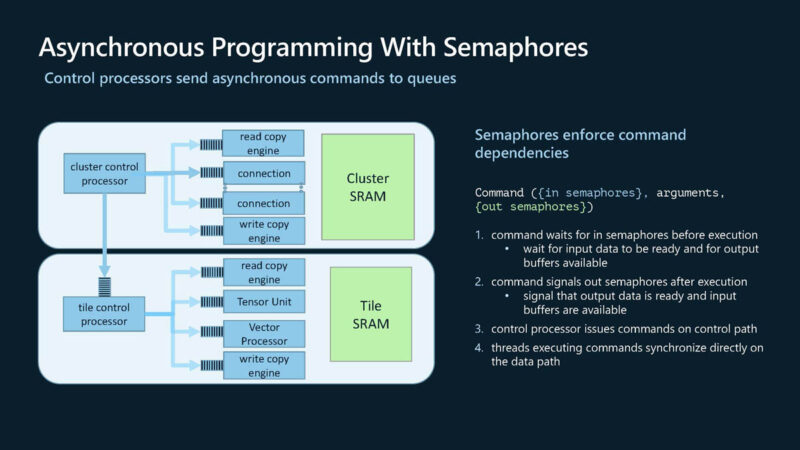

Here is the asynchronous programming model:

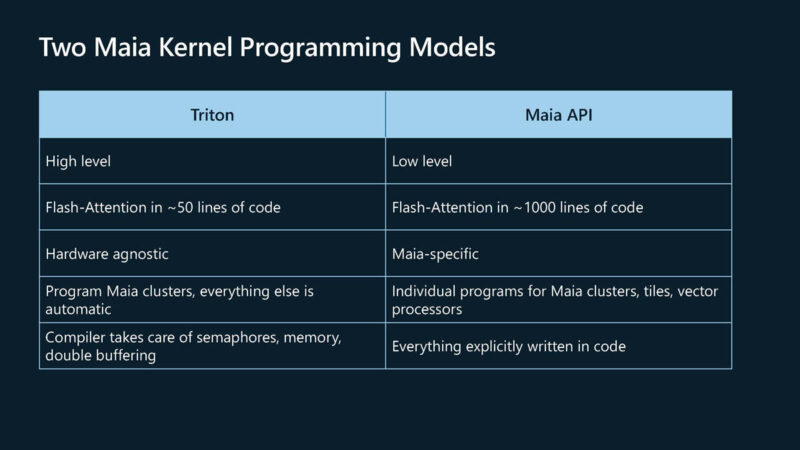

Maia supports programming via Triton or the Maia API. Triton sits at a higher level, while the Maia API gives more control.

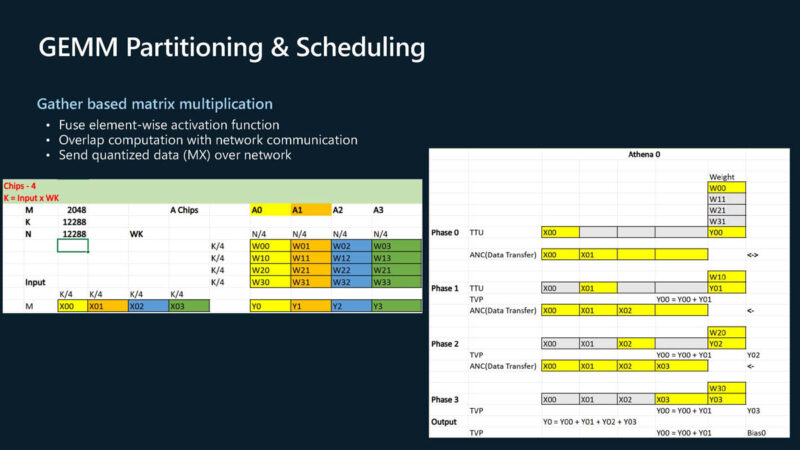

Here is the GEMM partition and scheduling. You have to love the Excel sheets.
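For readers who have not looked at GEMM partitioning before, here is a minimal sketch of the general idea: carve the output matrix into blocks and hand the blocks out to compute units. This is not Microsoft's scheduler. The round-robin assignment, the block sizes, and the function names `partition_gemm` and `run_gemm` are all assumptions for illustration. Only the 16 clusters and four tiles per cluster come from the talk.

```python
from itertools import product

NUM_CLUSTERS = 16       # from the talk
TILES_PER_CLUSTER = 4   # from the talk
NUM_TILES = NUM_CLUSTERS * TILES_PER_CLUSTER


def partition_gemm(m, n, block_m, block_n, num_workers=NUM_TILES):
    """Split an m x n output into blocks and assign them round-robin to workers.

    Round-robin is an assumption for illustration, not the real Maia policy.
    Each block is (row_start, row_end, col_start, col_end), end exclusive.
    """
    schedule = {worker: [] for worker in range(num_workers)}
    origins = product(range(0, m, block_m), range(0, n, block_n))
    for index, (r0, c0) in enumerate(origins):
        block = (r0, min(r0 + block_m, m), c0, min(c0 + block_n, n))
        schedule[index % num_workers].append(block)
    return schedule


def run_gemm(a, b, schedule):
    """Compute C = A x B by walking each worker's block list in turn."""
    k = len(b)
    c = [[0] * len(b[0]) for _ in range(len(a))]
    for blocks in schedule.values():
        for r0, r1, c0, c1 in blocks:
            for i in range(r0, r1):
                for j in range(c0, c1):
                    c[i][j] = sum(a[i][p] * b[p][j] for p in range(k))
    return c


a = [[1, 2], [3, 4], [5, 6]]
b = [[1, 0, 2], [0, 1, 3]]
schedule = partition_gemm(m=3, n=3, block_m=2, block_n=2)
print(run_gemm(a, b, schedule))
```

On real hardware the blocks would run in parallel and the block sizes would be chosen to fit the on-chip SRAM. Here they simply run one after another so the result is easy to check.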

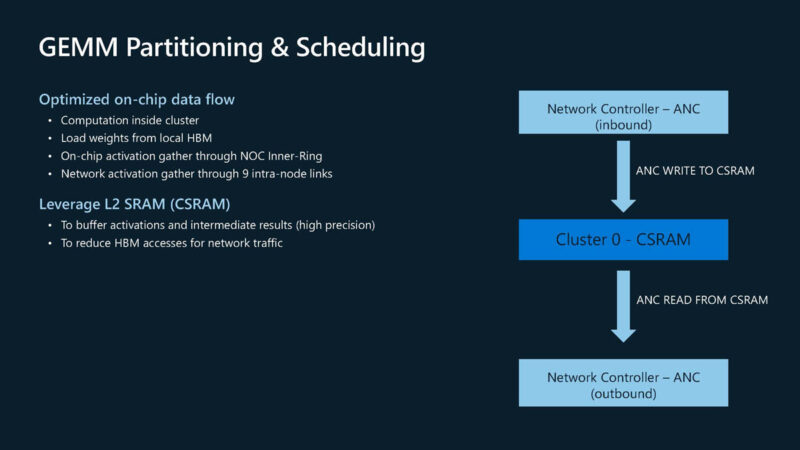

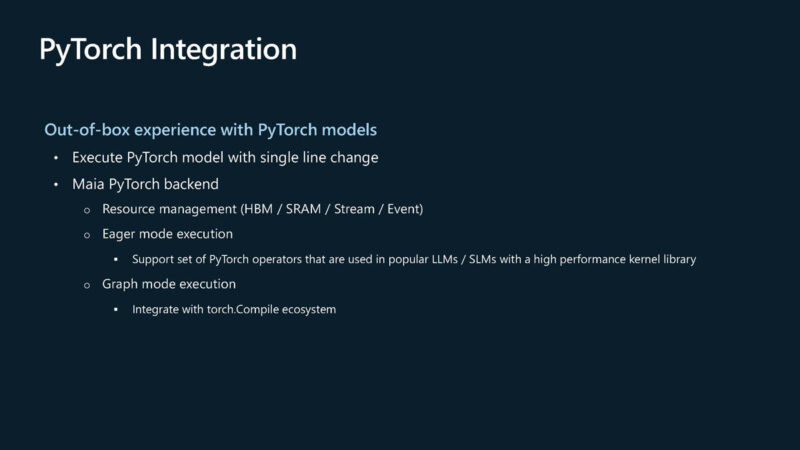

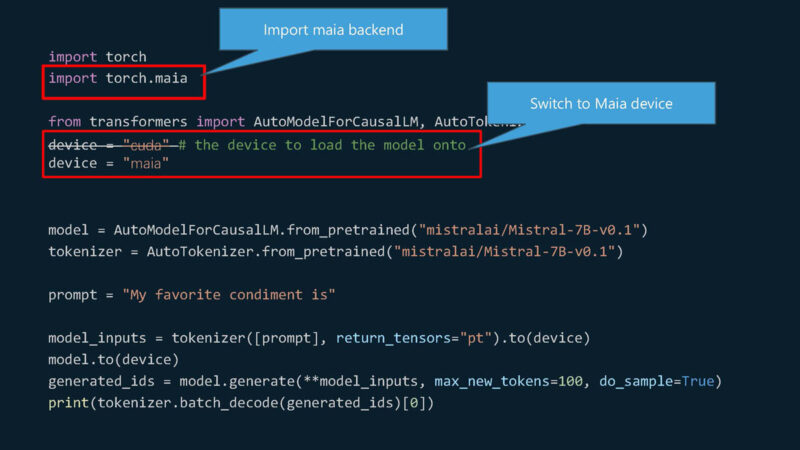

Maia 100 offers an out-of-box experience with PyTorch models.

The experience amounts to importing the Maia backend and then selecting the Maia device instead of cuda.
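The "swap the device string" pattern can be sketched without any accelerator installed. In the snippet below, the module name `maia_backend` and the device string `"maia"` are placeholders we made up for illustration. The real names in the Maia SDK may differ. The helper just checks whether a backend module is importable and falls back to the CPU otherwise.

```python
import importlib.util


def pick_device(preferred="maia", backend_module="maia_backend"):
    """Return `preferred` if `backend_module` can be imported, else "cpu".

    Both default names are hypothetical placeholders, not confirmed SDK names.
    `backend_module` should be a top-level module name.
    """
    if importlib.util.find_spec(backend_module) is not None:
        return preferred
    return "cpu"


device = pick_device()
print(f"Running on: {device}")
```

In a PyTorch script, the returned string would go wherever `"cuda"` normally appears, for example in `model.to(device)`.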

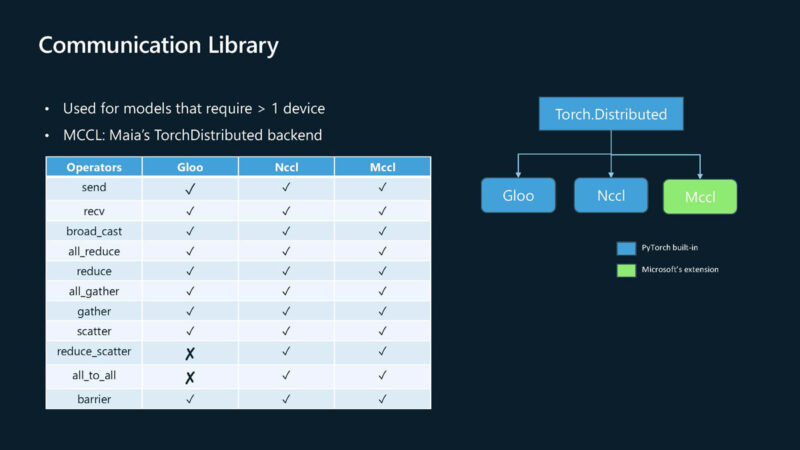

Here is the inter-Maia communication library.

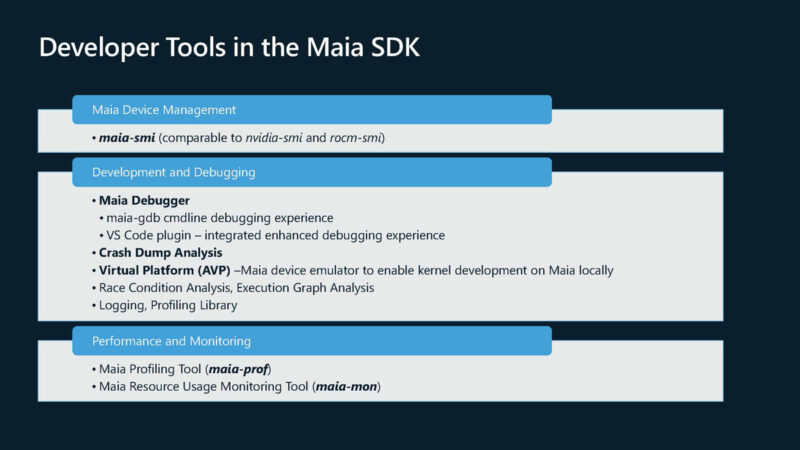

Here are the tools in the Maia-SDK. It is fun that there is maia-smi since we have been using nvidia-smi for years and now rocm-smi.

We came in expecting more details, and we got them.

Final Words

Overall, we got a lot more on the Maia 100 accelerator. It was really interesting that this is a 500W/700W device with 64GB of HBM2E. One would expect it to be less capable than an NVIDIA H100 since it has less HBM capacity. At the same time, it is using a good amount of power. In today’s power-constrained world, it feels like Microsoft must be able to make these a lot less expensive than NVIDIA GPUs.