Microsoft Azure just announced Lv2 storage instances powered by AMD EPYC. Today’s announcement is a big deal for two reasons. First, Microsoft Azure is a large and rapidly growing cloud platform, so any new instance type is big news. Second, AMD EPYC is now being used for a cloud offering. Until this point, the cloud world has been primarily Intel-based, using dual socket servers. AMD told us this is the first major public cloud provider announcement with EPYC.

Microsoft Azure L Series Instances

Here are the key specs of the instances. You can sign up for them now, but they are not yet in the GA instance pools, so we are going to withhold pricing information. If you want to try EPYC in the cloud, this is your chance. The instances are storage-focused, so we see a heavy concentration of RAM and storage in each of them.

| Name | vCPUs | Memory (GiB) | SSD Storage |
|---|---|---|---|
| L8s | 8 | 64 | 1 x 1.9TB |
| L16s | 16 | 128 | 2 x 1.9TB |
| L32s | 32 | 256 | 4 x 1.9TB |
| L64s | 64 | 512 | 8 x 1.9TB |
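To make the scaling pattern in the table explicit, here is a small Python sketch that derives per-instance ratios from the listed specs. The `INSTANCES` dictionary and the `ratios` helper are names made up for this illustration; the numbers are copied from the table, and nothing here queries Azure.

```python
# Specs copied from the table: (vCPUs, memory in GiB, number of SSDs).
# Each SSD is listed as 1.9TB. Names below are illustrative only.
SSD_TB = 1.9

INSTANCES = {
    "L8s": (8, 64, 1),
    "L16s": (16, 128, 2),
    "L32s": (32, 256, 4),
    "L64s": (64, 512, 8),
}


def ratios(vcpus, memory_gib, ssd_count):
    """Return (GiB of RAM per vCPU, total SSD capacity in TB, vCPUs per SSD)."""
    gib_per_vcpu = memory_gib / vcpus
    total_ssd_tb = round(ssd_count * SSD_TB, 1)
    vcpus_per_ssd = vcpus / ssd_count
    return gib_per_vcpu, total_ssd_tb, vcpus_per_ssd


if __name__ == "__main__":
    for name, spec in INSTANCES.items():
        gib_per_vcpu, total_ssd_tb, vcpus_per_ssd = ratios(*spec)
        print(
            f"{name}: {gib_per_vcpu:.0f} GiB RAM per vCPU, "
            f"{total_ssd_tb} TB SSD total, {vcpus_per_ssd:.0f} vCPUs per SSD"
        )
```

Every size works out to the same ratios: 8 GiB of RAM per vCPU and one 1.9TB SSD per 8 vCPUs. The larger instances are simply multiples of the L8s.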

Backing the Microsoft Azure Lv2 instances are dual AMD EPYC 7551 CPUs. Each CPU has 32 cores and 64 threads, for a total of 64 cores and 128 threads, running between 2.2GHz and 3.0GHz. That is more than Intel can currently muster in a dual socket design. Whereas we saw new AWS EC2 M5 instances with custom Intel Xeon Platinum 8175M CPUs, Microsoft can use standard parts. If you were to purchase a publicly available Platinum 8176M, for example, you would spend an additional $3000 per CPU just to support 1.5TB per socket. With AMD EPYC, Microsoft can provision up to 2TB per socket without that $3000 tax (list price, before heavy discounts).
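The per-host arithmetic in that paragraph can be sketched in a few lines of Python. The figures are the ones quoted above (32 cores and 64 threads per EPYC 7551, 2TB per EPYC socket, 1.5TB per socket for the Xeon "M" part at a $3000 list-price premium). The `dual_socket_totals` function and its parameter names are hypothetical, and the premium is a list price, not what a cloud provider actually pays.

```python
SOCKETS = 2  # both platforms discussed here are dual socket


def dual_socket_totals(per_socket_value, sockets=SOCKETS):
    """Scale a per-socket figure (cores, threads, TB, dollars) to the whole host."""
    return per_socket_value * sockets


# AMD EPYC 7551 figures quoted in the article.
epyc_cores = dual_socket_totals(32)
epyc_threads = dual_socket_totals(64)
epyc_max_memory_tb = dual_socket_totals(2.0)
epyc_memory_premium_usd = dual_socket_totals(0)

# Intel Xeon Platinum 8176M figures quoted in the article (list price premium).
xeon_m_max_memory_tb = dual_socket_totals(1.5)
xeon_m_memory_premium_usd = dual_socket_totals(3000)

if __name__ == "__main__":
    print(f"EPYC host: {epyc_cores} cores, {epyc_threads} threads")
    print(f"EPYC host: up to {epyc_max_memory_tb} TB RAM, "
          f"${epyc_memory_premium_usd} premium")
    print(f"Xeon M host: up to {xeon_m_max_memory_tb} TB RAM, "
          f"${xeon_m_memory_premium_usd} list premium")
```

On those quoted numbers, a dual socket EPYC host tops out at 4TB with no memory premium, while a dual socket Xeon M host reaches 3TB and carries $6000 in list-price premium.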

Microsoft is offering these instances with large amounts of SSD storage. This is undoubtedly taking advantage of the 128 high-speed I/O lanes for PCIe that a dual socket AMD EPYC system has. You can learn more about this in our AMD EPYC 7000 series FAQ.

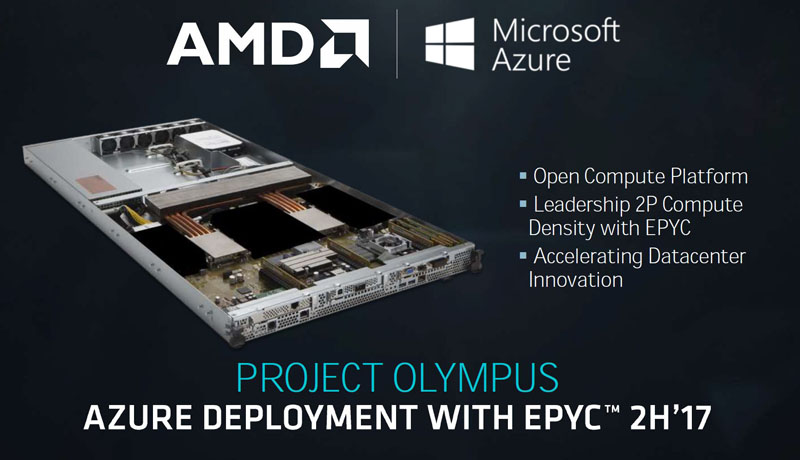

In this announcement, Microsoft highlighted the fact that it was using Project Olympus servers. We saw a potential AMD EPYC Project Olympus system at Open Compute Summit 2017, and the announcement was previewed many months ago by AMD and Microsoft Azure.

It is notable that Microsoft Azure is using a two-socket platform. As we noted when discussing the Qualcomm Centriq 2400, this is another data point that the major public cloud service providers prefer dual-socket platforms.

Final Words

In the ABI (anything but Intel) space, AMD occupies a uniquely strong position. The Cavium ThunderX2 offerings are strong and come in dual socket platforms. Qualcomm Centriq 2400 is a first generation platform that Microsoft is heavily invested in promoting. Neither has a publicly facing instance type at AWS, Azure, GCP or others. Customers can directly run their applications using native x86 on the new Lv2 instances. Beyond the ABI space, this is a head-to-head win for AMD at Microsoft over Intel. We expect other cloud providers to follow suit, taking advantage of the large EPYC platform RAM and I/O along with the x86 instruction set. These are the type of gateway wins that will push Intel to be more competitive in future products and with pricing.

This is a huge deal. I just wish they were publicly available today. These destroy the Amazon H1 instances you guys just covered.

With the skyrocketing prices of DRAM nowadays, price advantage for Epyc over Xeon is gone. That is because Epyc requires anywhere from 2 to 4 extra sticks of DRAM to populate all memory channels. Also there were no GPGPUs used, while the Xeon ones have Tesla GPGPUs.

The cloud guys are using over 1TB of RAM per CPU.

Still not available, sadly, and neither is pricing (and ACU) info.