Get ready for a massive change in the coming years. Many know Single Root I/O Virtualization, or SR-IOV. The new standard emerging to replace it is Scalable I/O Virtualization, or Scalable IOV. It originated as an Intel specification and was donated to OCP, where it now lives. The main goal of Scalable IOV is to provide high-performance paths to hardware while that hardware is shared across an increasingly virtualized, containerized, and composable infrastructure.

Scalable IO Virtualization is Replacing SR-IOV

These days, SR-IOV is well supported, but it is not perfect. SR-IOV was originally designed for 20 or so VMs, and the vast majority of cloud instances have 8 vCPUs or fewer. As we move to 60-128 cores/120-256 threads per socket in 2023, and then to several hundred cores per socket after that, each CPU in a dual-socket server will easily eclipse that 20 VM mark. Beyond that, new devices and topologies, including CXL, mean that the industry needs a different solution. That solution looks like it will be Scalable IO Virtualization, or Scalable IOV.
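To make the density argument concrete, here is a back-of-the-envelope sketch. It assumes one vCPU per hardware thread with no overcommit, which is a simplification; real hosts often overcommit, which only raises the VM count. The 20 VM figure comes from the paragraph above; the function name is illustrative.

```python
# Rough VM-density math for a single socket (illustrative assumptions only).
SRIOV_DESIGN_POINT_VMS = 20  # "20 or so VMs", per the article


def vms_per_socket(threads_per_socket: int, vcpus_per_vm: int) -> int:
    """VMs that fit on one socket if each hardware thread backs exactly one vCPU."""
    if vcpus_per_vm <= 0:
        raise ValueError("vcpus_per_vm must be positive")
    return threads_per_socket // vcpus_per_vm


if __name__ == "__main__":
    for threads in (120, 256):        # the 2023 range cited above
        for vcpus in (8, 4, 2):       # instance sizes at or below 8 vCPUs
            vms = vms_per_socket(threads, vcpus)
            flag = "exceeds" if vms > SRIOV_DESIGN_POINT_VMS else "within"
            print(f"{threads} threads, {vcpus} vCPU VMs: {vms} VMs ({flag} the 20 VM mark)")
```

Under these assumptions, a 120-thread socket running only 8-vCPU instances lands at 15 VMs, but smaller instances or a 256-thread socket push well past 20 per socket.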

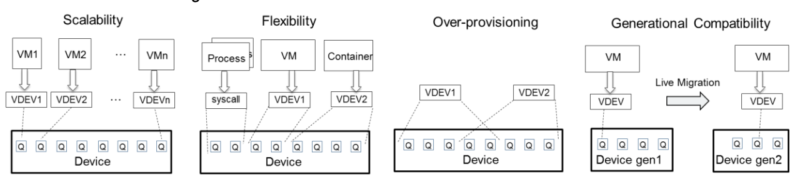

The main benefits that OCP members like Microsoft are touting are scalability, flexibility, over-provisioning, and compatibility. For those wondering, a Scalable IOV system can remain backward compatible with SR-IOV.

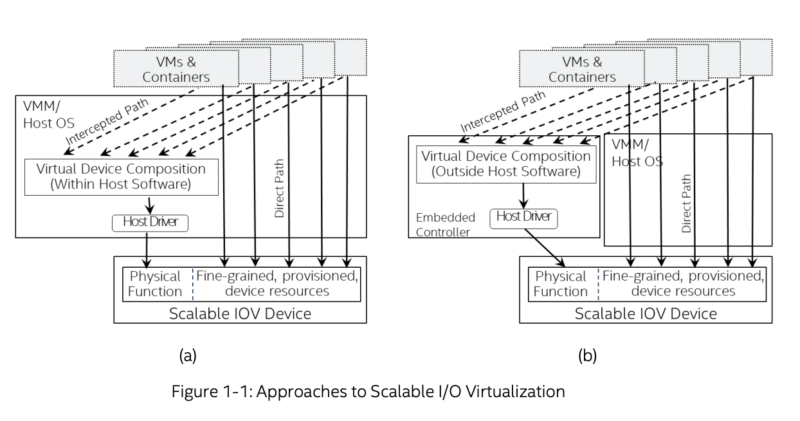

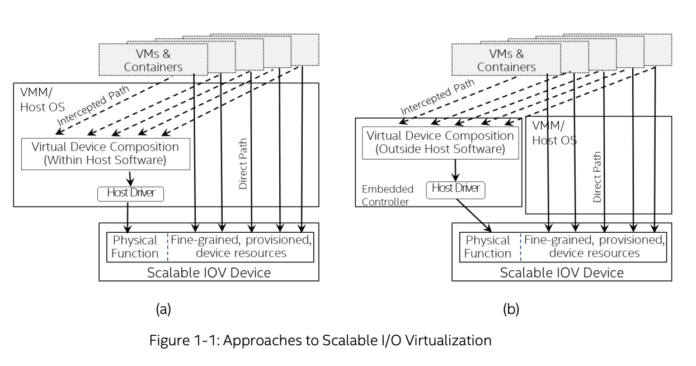

Scalable IOV uses efficient, hardware-assisted routing and has different modes for direct path and intercepted path functions. The intercepted path can be handled in software or on an embedded controller. The result is more fine-grained, higher-performance access to shared hardware.
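The direct/intercepted split can be pictured as a simple routing decision. The sketch below assumes the common pattern where frequent data-path operations (such as doorbell writes) go straight to the device, while less frequent control operations (setup and configuration) are trapped and emulated by host software or an embedded controller. The register names and the classification set are hypothetical, not taken from the spec.

```python
from enum import Enum, auto


class Path(Enum):
    DIRECT = auto()       # access goes straight to device hardware
    INTERCEPTED = auto()  # access is trapped and emulated by software or an embedded controller


# Hypothetical split: frequent data-path registers take the direct path,
# everything else (setup, configuration) is intercepted.
DIRECT_PATH_REGISTERS = frozenset({"tx_doorbell", "rx_doorbell", "cq_head"})


def route(register: str) -> Path:
    """Pick the path for a guest access to a register of its virtual device."""
    return Path.DIRECT if register in DIRECT_PATH_REGISTERS else Path.INTERCEPTED


def handle(register: str) -> str:
    """Describe who services the access (a stand-in for real emulation logic)."""
    if route(register) is Path.DIRECT:
        return f"device hardware services {register}"
    return f"host software emulates {register}"


if __name__ == "__main__":
    for reg in ("tx_doorbell", "queue_config"):
        print(reg, "->", route(reg).name)
```

Keeping the hot path out of software is what preserves performance, while intercepting the rarer control operations is what lets the host compose and share devices flexibly.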

Final Words

If you want some holiday reading material, the OCP Scalable I/O Virtualization spec is a great way to learn more. At STH, we will cover Scalable IOV as it starts to appear in future generations of servers.

Since it is Christmas Eve and I am on my second-to-last flight of 2022, I just wanted to share some of my holiday reading material that I have had bookmarked since it was presented at the OCP Summit 2022 Keynote a few months ago. Tomorrow we will have our Q4 Letter from the Editor, but I wanted to get a quick piece up and say Merry Christmas to the STH community.

“SR-IOV was designed originally for 20 or so VMs.”

What are the properties of SR-IOV that inhibit higher VM counts/density?

Still waiting for _usable_ SR-IOV on GPUs…

“What are the properties of SR-IOV that inhibit higher VM counts/density?”

The silicon support for Virtual Functions (VFs) is the primary limit, typically 32 or 64 VFs per controller. That probably seemed like a lot back in the early 2010s, when consolidation ratios tended to be lower with 10GbE host networking. Now, with 25/100GbE and immense core counts on virtualization hosts, it is quite reasonable to shovel hundreds of VMs onto a host. However, the inability to migrate VMs that use SR-IOV may be the bigger limit.

Thank you, that helps. Google suggests that the SR-IOV spec allows up to 64,000 VFs per device, so the limitation would appear to be with actual device implementation, rather than SR-IOV itself. Presumably that could be solved with newer device implementations (which would be required to support a new standard anyway) without actually requiring a new standard. Or is there something else silicon-level that limits scaling VFs?

I’m not at all opposed to new standards and the progression of technology; I guess I’m more curious than anything.

Hi Greg,

You said “Google suggests that the SR-IOV spec allows up to 64,000 VFs per device”. Can you provide a reference link or spec section? I do not understand why the total number of VFs would top out at 64,000.
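One likely source of that figure, assuming it refers to register width: TotalVFs in the SR-IOV Extended Capability is a 16-bit field, so the largest count it can encode is 65,535 (roughly 64K). The sketch below decodes that field from a raw capability dump. The offset matches Linux's `PCI_SRIOV_TOTAL_VF` definition; the helper name and the sample dump are illustrative and synthetic, not from a real device.

```python
import struct

# Byte offset of TotalVFs inside the SR-IOV Extended Capability
# (the same offset Linux names PCI_SRIOV_TOTAL_VF in pci_regs.h).
TOTAL_VFS_OFFSET = 0x0E

# TotalVFs is a 16-bit field, so this is the largest count it can encode.
MAX_ENCODABLE_VFS = 0xFFFF  # 65,535


def total_vfs(sriov_cap: bytes) -> int:
    """Decode TotalVFs from a raw SR-IOV capability dump (PCI config space is little-endian)."""
    if len(sriov_cap) < TOTAL_VFS_OFFSET + 2:
        raise ValueError("capability dump too short to contain TotalVFs")
    (value,) = struct.unpack_from("<H", sriov_cap, TOTAL_VFS_OFFSET)
    return value


if __name__ == "__main__":
    # Synthetic capability dump advertising 64 VFs (not read from real hardware).
    cap = bytearray(0x40)
    struct.pack_into("<H", cap, TOTAL_VFS_OFFSET, 64)
    print(total_vfs(bytes(cap)), "of a possible", MAX_ENCODABLE_VFS)
```

On Linux, the same value is exposed per device in the `sriov_totalvfs` sysfs attribute. Actual devices advertise far fewer VFs than the field could hold, which fits the point above that the practical limit is in silicon implementations.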

Hi,

Do you have any performance comparisons between this and SR-IOV? Thanks.

Maggie, that is on the list of things to do this year. It is still fairly new.