Micron put out a press release with a few facts about its HBM3E progress. HBM3E is the fastest high-bandwidth memory shipping today, and it is used primarily in high-end AI accelerators and GPUs. More specifically, and perhaps more pertinent to the times, Micron’s HBM3E memory is set for use in the NVIDIA H200 in the second quarter of 2024.

Micron HBM3E in Production Set for NVIDIA H200 Use in Q2 2024

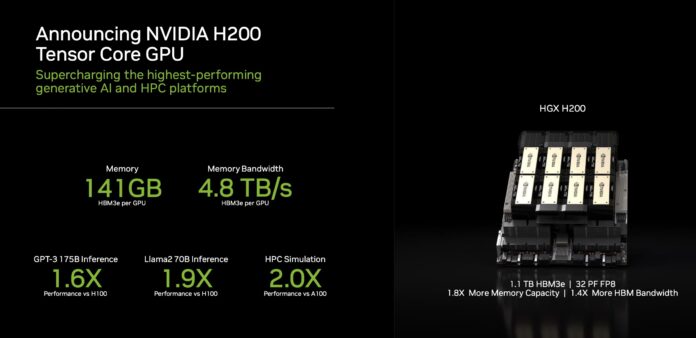

Micron’s newest HBM3E has capacities of up to 24GB. The previous generation HBM3 memory was more commonly found in 16GB sizes. If you have seen the six packages next to the main NVIDIA H100 die, there are usually five 16GB packages and one structural piece. With HBM3E and 24GB modules, NVIDIA and others can increase the capacity of their accelerators and provide more memory bandwidth. NVIDIA will use six 24GB packages, which puts 144GB physically on the card, of which 141GB is usable. There is a limitation that keeps NVIDIA from exposing the full 144GB.
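As a quick sanity check on those capacity figures, here is a minimal Python sketch. The package counts and sizes come from the paragraph above; the function and variable names are our own and purely illustrative.

```python
def physical_capacity_gb(packages: int, gb_per_package: int) -> int:
    """Total HBM physically on the card, in GB."""
    return packages * gb_per_package


# H100: five active 16GB HBM3 packages (the sixth site is a structural piece).
h100_gb = physical_capacity_gb(5, 16)

# H200: six 24GB HBM3E packages.
h200_physical_gb = physical_capacity_gb(6, 24)

# Usable capacity quoted for the H200 in the text above.
h200_usable_gb = 141

# Capacity that is on the card but not exposed.
h200_unexposed_gb = h200_physical_gb - h200_usable_gb

print(f"H100: {h100_gb}GB")
print(f"H200: {h200_physical_gb}GB physical, {h200_usable_gb}GB usable, "
      f"{h200_unexposed_gb}GB not exposed")
```

In other words, only about 2% of the memory on the H200 goes unexposed, while usable capacity rises by roughly 76% over an 80GB H100.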

According to Micron, some of its HBM3E features are:

- 9.2 gigabits per second (Gb/s) per pin

- More than 1.2 terabytes per second (TB/s) of memory bandwidth

- ~30% lower power consumption (Source: Micron)

That 30% lower power consumption figure is noted versus “competitive offerings,” but it leaves a lot to the imagination as to what exactly is being compared. Is the comparison against HBM3 or against other HBM3E? Is it GB for GB? Is it on a performance basis? It would be nice if Micron included more detail on those competitive numbers.
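The pin speed and bandwidth figures in Micron's list can be related with some simple arithmetic. The sketch below assumes a 1024-bit interface per HBM3E package, which is our assumption and not something stated in the press release. The function names are also our own.

```python
# ASSUMPTION: 1024-bit data interface per HBM3E package (not from the press release).
BUS_WIDTH_BITS = 1024


def package_bandwidth_gb_s(pin_rate_gbit_s: float,
                           bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak bandwidth of one package in GB/s: per-pin rate x pins / 8 bits per byte."""
    return pin_rate_gbit_s * bus_width_bits / 8


def pin_rate_for_bandwidth(target_gb_s: float,
                           bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Per-pin rate in Gb/s needed to reach a target package bandwidth in GB/s."""
    return target_gb_s * 8 / bus_width_bits


at_9_2 = package_bandwidth_gb_s(9.2)
needed = pin_rate_for_bandwidth(1200.0)  # 1.2 TB/s = 1200 GB/s (decimal units)

print(f"9.2 Gb/s per pin -> {at_9_2:.1f} GB/s per package")
print(f"1.2 TB/s per package needs {needed:.3f} Gb/s per pin")
```

Under that assumption, exactly 9.2 Gb/s per pin works out to just under 1.2 TB/s per package, so the "more than 1.2 TB/s" figure implies pins running somewhat faster than 9.2 Gb/s.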

Final Words

For many, the fact that the Micron HBM3E 24GB modules are in production might be the less exciting news. Instead, it might be that the memory will be in the H200 for Q2 2024. That means if you have an H100 order for June 2024 or later, you are likely buying a slightly older generation than the best available on the market. We expect the H200 to ship and sell in volume before the NVIDIA B100 starts shipping. Historically, there has been a gap between NVIDIA announcing a new data center generation and shipping products based on it.