Micron said it is now shipping “production capable” HBM3E in 12-High stacks. That means we get 36GB per package, up from 24GB, and higher performance. For the AI industry, this is a big deal because it usually translates into higher-performance and higher-capacity accelerators.

Micron HBM3E 12-High 36GB Higher Capacity AI Accelerators

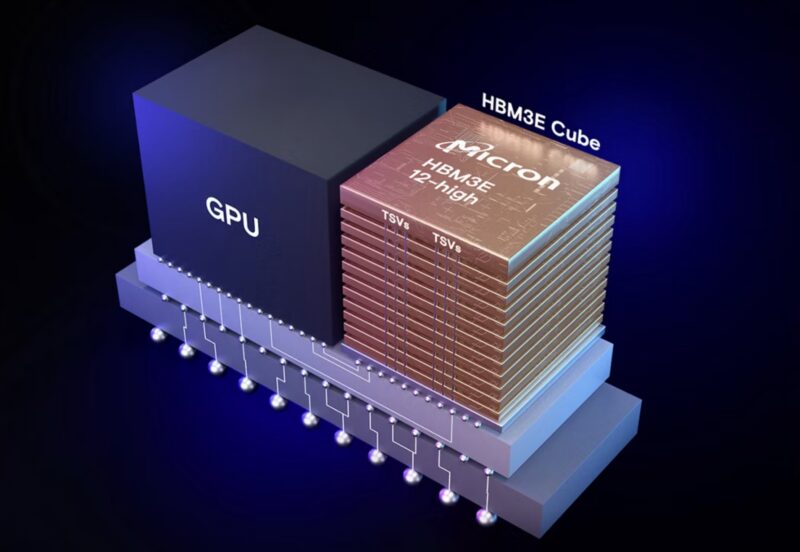

The new 12-High stack is what it sounds like: 12 chips stacked with Through-Silicon Vias (TSVs) into an HBM3E cube. This cube is usually packaged next to a GPU with a high-performance interconnect through a substrate.

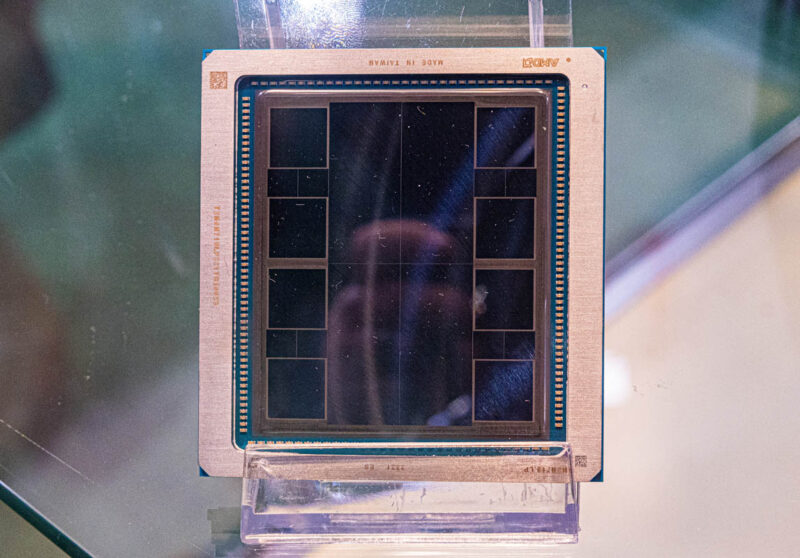

An advantage of having a 12-high stack versus an 8-high stack is that we can increase the memory capacity by 50% from 24GB to 36GB. While that may sound like a no-brainer for AI inference and training tasks that are memory capacity and bandwidth-bound, it is not as simple as just stacking more chips. Not only do the data and power connections need to be made, but the actual chip itself has Z-axis constraints. For example, an accelerator like the AMD MI300X is made up of many pieces of silicon, with eight HBM stacks around the outside.

If AMD wanted to use Micron’s HBM3E in something like an MI325X, then it would have to ensure the HBM stacks stayed at the same height as the current ones. If they did not, then the package would need to be adjusted, or there would be a real risk of cracking GPUs like we saw happen reasonably often in the NVIDIA P100/V100 era. Note AMD has not confirmed Micron as a supplier, so we are just using this as an illustration. Still, the benefits are palpable since we have 36GB HBM3E packages x 8 sites = 288GB of memory.
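To make the capacity math above concrete, here is a quick sketch. The 3GB-per-die figure is our inference from 24GB / 8 dies and 36GB / 12 dies rather than something stated outright, and the eight-site count is just the MI300X-style illustration from above, not a confirmed product configuration.

```python
# Back-of-the-envelope HBM3E capacity math.
# ASSUMPTION: 3GB per DRAM die, inferred from 24GB / 8 dies (and 36GB / 12 dies).
GB_PER_DIE = 3

# ASSUMPTION: eight HBM sites per accelerator, as on the MI300X illustration.
HBM_SITES = 8


def stack_capacity_gb(dies_per_stack: int, gb_per_die: int = GB_PER_DIE) -> int:
    """Capacity of one HBM stack in GB."""
    return dies_per_stack * gb_per_die


eight_high_gb = stack_capacity_gb(8)    # 24
twelve_high_gb = stack_capacity_gb(12)  # 36

# Relative capacity gain from going 8-high to 12-high.
increase_pct = (twelve_high_gb - eight_high_gb) / eight_high_gb * 100  # 50.0

# Total memory on a hypothetical eight-site accelerator.
total_8_high_gb = eight_high_gb * HBM_SITES    # 192
total_12_high_gb = twelve_high_gb * HBM_SITES  # 288

print(f"8-high stack:  {eight_high_gb}GB, {total_8_high_gb}GB across {HBM_SITES} sites")
print(f"12-high stack: {twelve_high_gb}GB, {total_12_high_gb}GB across {HBM_SITES} sites")
print(f"Capacity increase: {increase_pct:.0f}%")
```

The same eight sites go from 192GB to 288GB without changing the package footprint, which is why the Z-height constraint matters so much.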

In addition to this, HBM3E also offers performance benefits over previous-generation parts, with a 9.2Gbps pin speed and what Micron claims is 1.2TB/s of memory bandwidth per stack.
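As a rough sanity check on how pin speed relates to the bandwidth figure, here is a small sketch. It assumes a 1024-bit data interface per stack, which is the standard HBM width but is not stated in Micron's announcement as quoted here, and it uses decimal units (1TB/s = 1000GB/s).

```python
# Rough per-stack bandwidth estimate from pin speed.
# ASSUMPTION: 1024 data pins per stack (standard HBM interface width).
INTERFACE_WIDTH_BITS = 1024


def stack_bandwidth_gb_s(pin_speed_gbps: float,
                         width_bits: int = INTERFACE_WIDTH_BITS) -> float:
    """Peak bandwidth in GB/s: per-pin rate (Gb/s) * pin count / 8 bits per byte."""
    return pin_speed_gbps * width_bits / 8


def pin_speed_for_bandwidth_gbps(bandwidth_gb_s: float,
                                 width_bits: int = INTERFACE_WIDTH_BITS) -> float:
    """Inverse: per-pin rate (Gb/s) needed to reach a target bandwidth."""
    return bandwidth_gb_s * 8 / width_bits


bw_at_9_2 = stack_bandwidth_gb_s(9.2)
pin_for_1_2_tb = pin_speed_for_bandwidth_gbps(1200.0)

print(f"9.2Gbps per pin -> {bw_at_9_2:.1f}GB/s per stack")
print(f"1.2TB/s per stack -> {pin_for_1_2_tb:.3f}Gbps per pin")
```

Under these assumptions, 9.2Gbps works out to just under 1.2TB/s per stack, so the 1.2TB/s claim reads as either a rounded figure or one that reflects pin speeds slightly above 9.2Gbps.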

Final Words

36GB HBM3E is going to be an important product, but one we only expect in higher-end chips. Usually, the big AI vendors like NVIDIA and, to some extent, AMD will get access to the new chips and best bins of the chips because they can drive volume and pay the most for them. That is also why we see chips like the Microsoft MAIA 100 AI Accelerator for Azure use HBM2E because that tends to be lower cost. Hopefully, we start to see AI accelerators with this soon. Tomorrow’s review will not use these, even though it will be of a cool AI system.