As if this week did not have enough announcements, we get a new memory from Micron. In March, JEDEC published the GDDR7 memory standard. Two months later, Micron is announcing its GDDR7 memory. As with all things today, the memory is pitched not only at GPUs and gaming but also at AI.

Micron GDDR7 for AI and Gaming

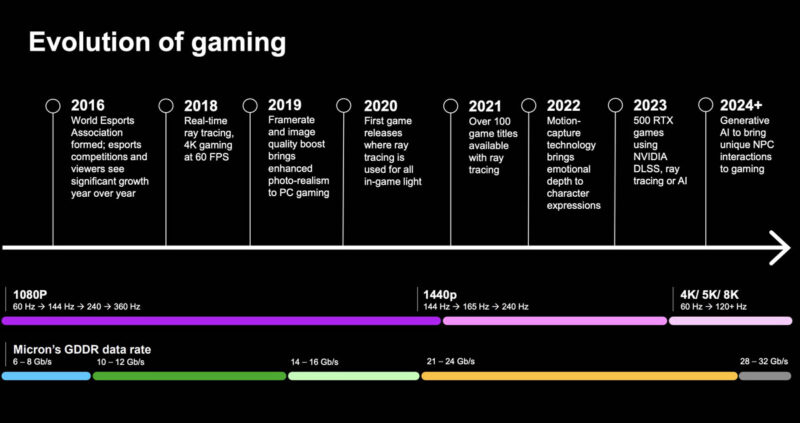

The new Micron GDDR7 uses Micron’s 1β (1-beta) DRAM technology. From a performance perspective, it is designed to deliver 32 Gb/s per pin of power-optimized memory for over 1.5 TB/s of system bandwidth. The new memory will also use PAM3 signaling. Micron sent us a quick “Evolution of gaming” slide that started in 2016 or so.

At first, it felt like 2016 was a bit late to start the “Evolution of gaming” timeline, but that coincided with GDDR5 and GDDR5X. Now, for 2024-2025, Micron has GDDR7.
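For a sense of how the 32 Gb/s per-pin rate relates to the "over 1.5 TB/s" system figure, here is a quick back-of-the-envelope sketch. The bus widths are our assumptions for illustration, not something Micron specified for a particular product; a 384-bit bus is simply a common width on high-end GPUs, and it happens to land just above 1.5 TB/s.

```python
def system_bandwidth_gbs(pin_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    pin_rate_gbps: per-pin data rate in Gb/s (32 for the GDDR7 discussed here).
    bus_width_bits: total memory bus width in bits (an assumed value, set by the GPU design).
    """
    # Each data pin carries pin_rate_gbps gigabits per second; divide by 8 to get bytes.
    return pin_rate_gbps * bus_width_bits / 8


# Hypothetical bus widths, chosen only to illustrate the arithmetic.
for bus_width in (128, 192, 256, 384):
    gbs = system_bandwidth_gbs(32.0, bus_width)
    print(f"{bus_width}-bit bus: {gbs:.0f} GB/s ({gbs / 1000:.3f} TB/s)")
```

These are theoretical peak numbers. Real GPUs may ship with different bus widths or run the memory below its rated speed.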

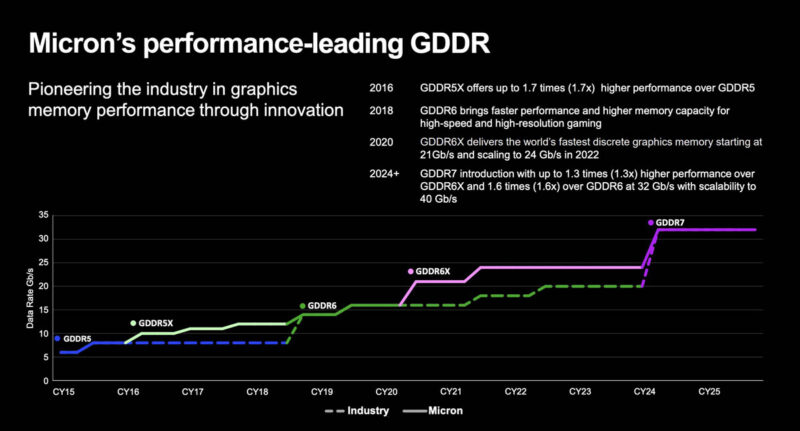

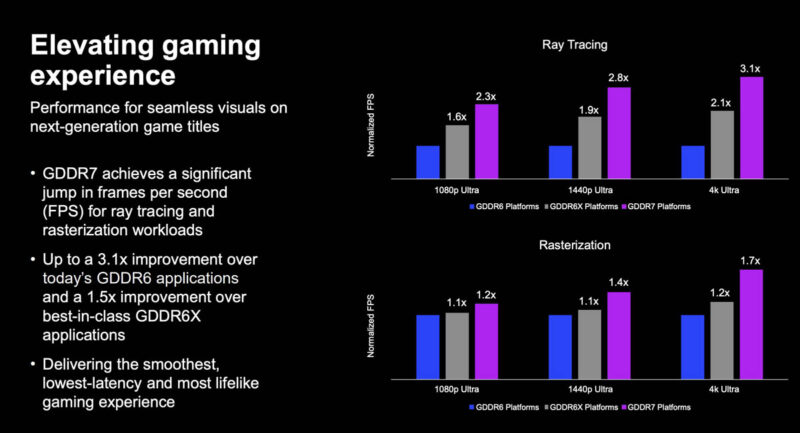

As one might expect, GDDR7 is set to be faster than GDDR6 and GDDR6X.

Micron says that GDDR7 can be up to 3.1x faster than GDDR6 and 1.5x faster than GDDR6X.

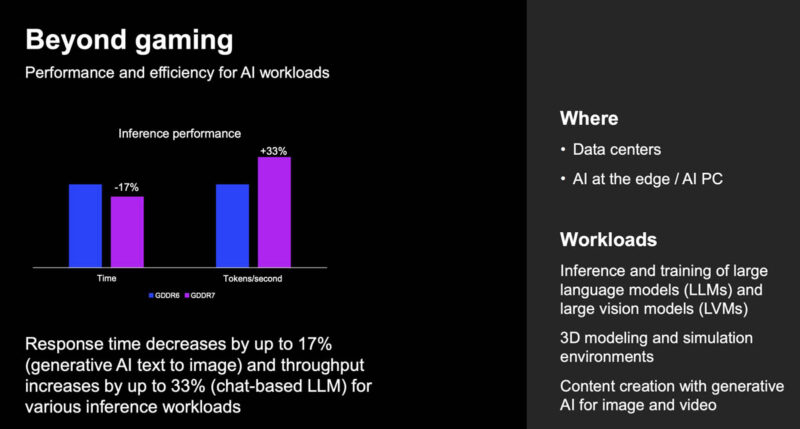

Since it is 2024, all things must be AI, including GDDR7. On the other hand, aside from the largest GPUs, there are many high-dollar GPUs for workstations and data centers that use GDDR memory, such as the NVIDIA L40S, NVIDIA RTX 6000 Ada, and so forth. Also, we are in an era where gaming GPUs are regularly running local AI inference. Even though it feels a bit overplayed these days, the shift is happening.

Final Words

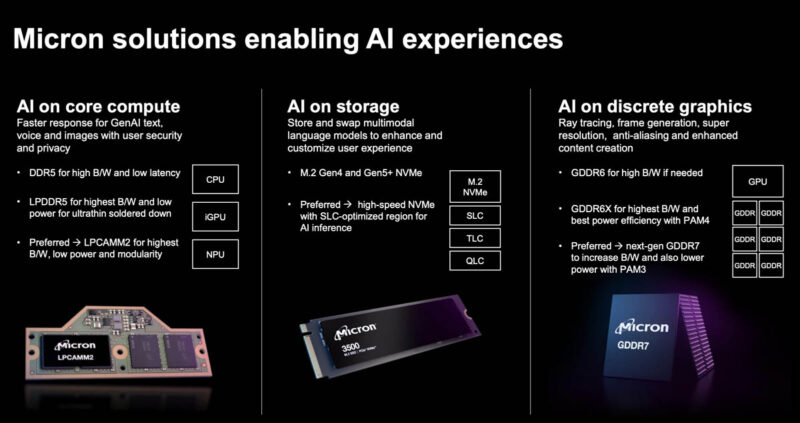

Overall, we expect the next round of high-end desktop and non-HBM data center GPUs to start utilizing GDDR7 memory. Beyond performance, capacity is also becoming a big consideration not just for gaming, but also for AI inference. It is somewhat strange, but GDDR7 has an issue that does not have to do with the memory technology. Instead, GPU makers are prioritizing data center GPUs and AI accelerators over gaming parts. As such, NVIDIA and AMD are not racing to put out the newest gaming GPUs. AI GPUs are high-margin and high-growth products.

Something we are seeing is that some smaller AI accelerators are using fast non-HBM memory types to lower cost and make manufacturing easier.