The Micron 6500 ION has been a very popular drive. Micron told us it has been the most popular drive in its class. Building on that success, the company is launching a new Micron 6550 ION SSD with double the capacity at 61.44TB and new PCIe Gen5 performance.

Micron 6550 ION 61.44TB PCIe Gen5 NVMe SSD Launched

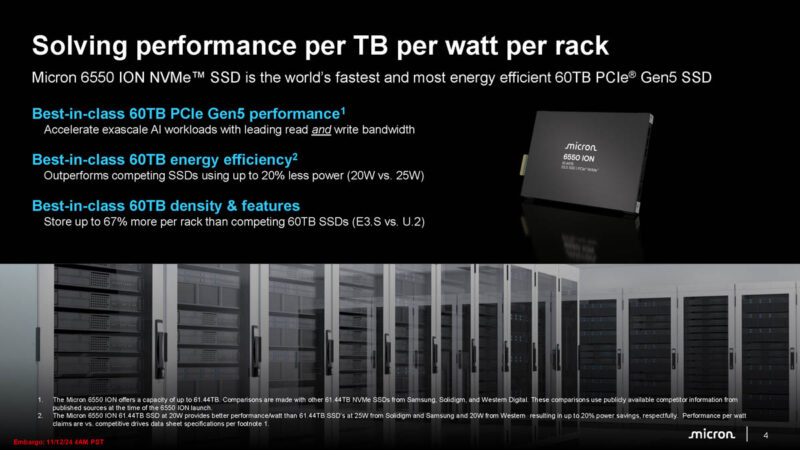

The mandate of the Micron 6500/6550 ION line is to provide the best performance and density at the lowest power. That is what made the 30.72TB Micron 6500 ION drive successful. In the meantime, the Solidigm D5-P5336 61.44TB SSD arrived on the market. That Solidigm drive does not offer superior performance, but it is a lower-cost QLC SSD with a huge capacity, and that combination has meant it has been sold out for months.

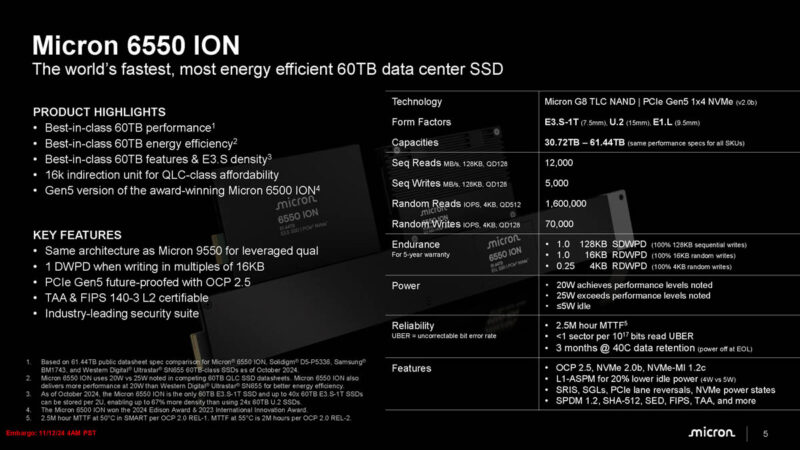

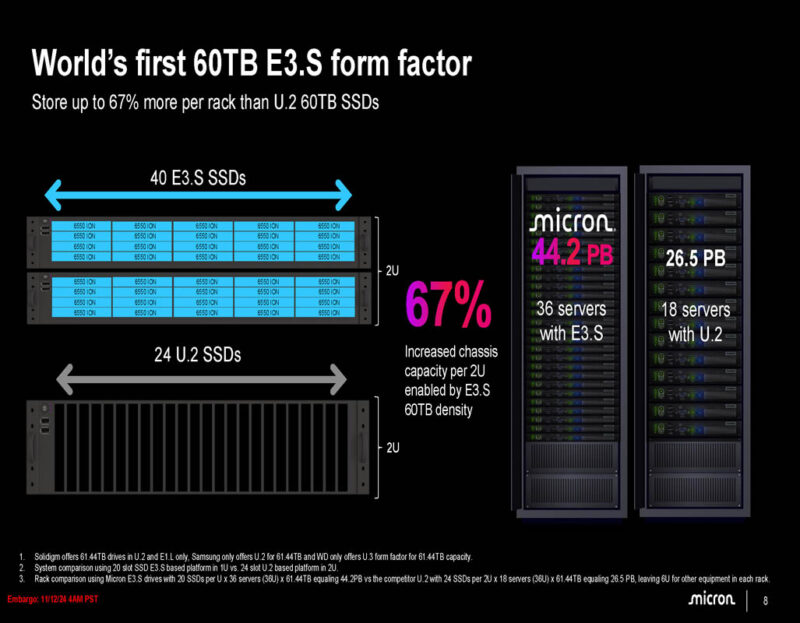

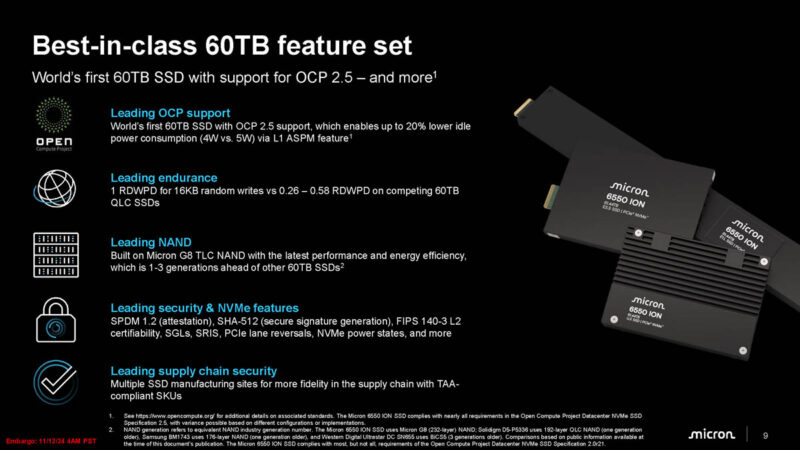

Micron’s doubling of capacity to 61.44TB is a big deal. Instead of going the slower QLC PCIe Gen4 route, the company has a PCIe Gen5 NVMe SSD that delivers more performance. It also comes in E3.S, U.2, and E1.L form factors. The E3.S option is meaningful since it is a denser form factor than U.2.

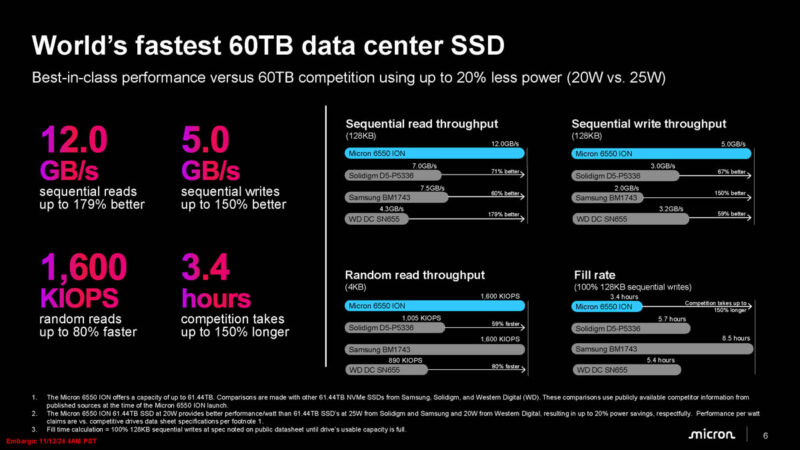

In terms of speed, the sequential read speed is up to 12GB/s due to the NAND and the controller’s PCIe Gen5 interface. Another nugget is that the ratings are at 20W for efficiency, but the drive can achieve higher performance if you select the 25W operating mode.

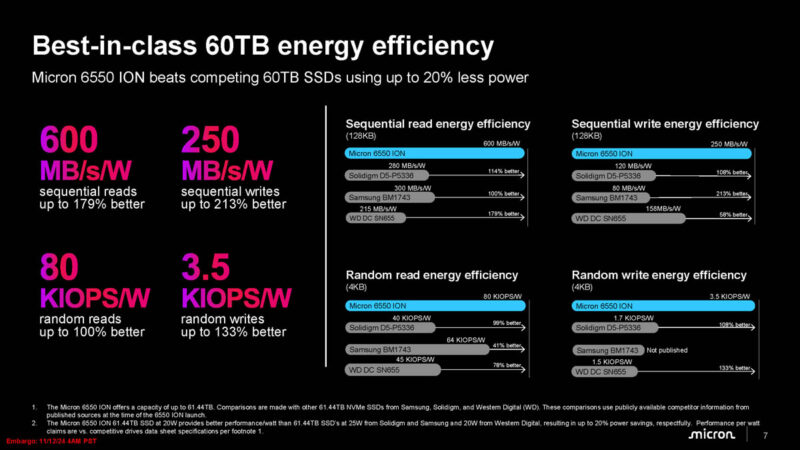

In terms of energy efficiency, Micron said its drive does well.

The E3.S form factor means that one can pack these densely into systems. We are seeing the 122.88TB drive class emerge as the high-capacity SSD category these days in the U.2 form factor, but because E3.S allows more drives in the same space, the 61.44TB Micron 6550 ION in E3.S can get close on capacity per system.

Another option is to use only a PCIe Gen5 x2 interface per drive, which offers roughly the bandwidth of a PCIe Gen4 x4 link, to get most of the performance while fitting more drives per node.
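To make the lane trade-off concrete, here is a minimal sketch of the raw link bandwidth math. It assumes the standard per-lane signaling rates (8, 16, and 32 GT/s for Gen3, Gen4, and Gen5) and 128b/130b encoding, and it ignores packet and protocol overhead, so real drive throughput will be lower. The function name `link_gb_per_s` is ours for illustration and does not come from Micron.

```python
# Per-lane signaling rate in GT/s for each PCIe generation.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}

# Gen3 through Gen5 use 128b/130b line encoding.
ENCODING = 128 / 130


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Raw one-direction link bandwidth in GB/s, before protocol overhead."""
    return GT_PER_S[gen] * ENCODING / 8 * lanes


if __name__ == "__main__":
    # Compare a Gen4 x4 link with Gen5 x2 and Gen5 x4 links.
    for gen, lanes in [(4, 4), (5, 2), (5, 4)]:
        print(f"Gen{gen} x{lanes}: {link_gb_per_s(gen, lanes):.2f} GB/s")
```

Under these assumptions, a Gen5 x2 link matches a Gen4 x4 link at just under 8 GB/s of raw bandwidth, which is half of what Gen5 x4 offers. Halving the lanes per drive lets the same number of CPU lanes serve twice as many drives.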

Micron also has a new generation of features.

Final Words

The Micron 6500 ION has been popular, even if it is not the densest drive around. The 122.88TB generation of drives from other vendors will sell very well just due to their high capacity and density. Micron is aiming for capacity at higher levels of performance, which is an interesting value proposition. Our sense is that these will sell well. Hopefully we get to show you these soon.

While we don’t need this kind of performance…. This kind of capacity in the home sector would be amazing.

Imagining 2-4 of these capacity drives in a ZFS pool on a 10Gb network. Could easily stick with PCIe3 speeds/performance or even lower, just want ~120TB of storage without a ton of spinning disks.

Though we may disagree on the filesystem, 100% agree with the rest James.

What James and CS said.

Yep, what the gents above said. If the price is right 16-20TB of 4x4pcie would also do it for me.

Yeah, I would like to see this level of capacity made available for laptop and mobile/small form factor workstations. External drives would benefit from this, too. It’s just too bad this is only available for servers and NASes. Otherwise I’d buy one yesterday.

QLC LOL

The most surprising thing/lead for me is that this is TLC flash. Micron’s specs image mentions this, but it is kind of significant when the same-capacity Solidigm is only pQLC/PLC.

I’d rather have lower prices (5 cents per TB) in a reasonable size. I’d put 4-12 drives in a RAID 10 or RAID 6 and call it a day.

What exactly runs RAID10 or RAID6 on PCIe drives?

Seems like the RAID controller would be a massive bottleneck. That’s the only reason I mentioned ZFS above. To bypass any hardware bottlenecks.

Many NASes run Linux and software RAID, so I imagine any similar device with NVMe drives will do the same.

I recently bought three of Micron’s 15 TB versions of this drive second hand on eBay, as they were much cheaper per TB than new Samsung QVO 8TB SATA drives (seemingly the largest in the consumer space at the moment).

My server is only PCIe 3.0 with the Dell R720 NVMe cage having a PCIe 2.0 switch in it, so I can only get around 1.5 GB/sec read/write from each drive. Weirdly running ZFS across all three doesn’t give me much of a speed boost, only going a tiny bit faster than a single drive. Using hdparm on three drives at the same time lets me go over 4 GB/sec so there’s some other bottleneck in ZFS that is even slower than the PCIe 2.0 switch. It’s not encryption or compression as it’s the same speed whether they are on or off (but significantly higher CPU usage when they are on). I do see one CPU core pegged at 100% during ZFS transfers though so there appears to be something in there that can’t run in parallel across multiple cores.

So at least in my case with my old server, I can just barely saturate a 10 Gb network link with ZFS and NVMe drives, but if I went up to a 25 Gb NIC in the same machine, I’d have to switch to a different filesystem with Linux software raid in order to saturate the 25 Gb link.
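The arithmetic behind that last comment can be sketched roughly as follows. This is only an illustration: it takes the commenter's approximate 1.5 GB/s per-drive figure at face value, converts nominal Ethernet line rates to GB/s without any protocol overhead, and assumes throughput scales linearly across drives, which the commenter reports ZFS did not do on that machine. The helper names are hypothetical.

```python
import math


def ethernet_gb_per_s(gigabits: float) -> float:
    """Convert a nominal line rate in Gb/s to GB/s, ignoring protocol overhead."""
    return gigabits / 8


def drives_needed(nic_gigabits: float, per_drive_gb_s: float) -> int:
    """Drives required to fill the link, assuming perfect linear scaling."""
    return math.ceil(ethernet_gb_per_s(nic_gigabits) / per_drive_gb_s)


if __name__ == "__main__":
    per_drive = 1.5  # GB/s, approximate figure quoted in the comment
    for nic in (10, 25):
        print(
            f"{nic} Gb link = {ethernet_gb_per_s(nic):.3f} GB/s, "
            f"needs {drives_needed(nic, per_drive)} drive(s) at {per_drive} GB/s"
        )
```

On these assumptions, one drive at 1.5 GB/s is enough for a 10 Gb link at 1.25 GB/s, while a 25 Gb link at 3.125 GB/s needs all three drives to scale together.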